Neural Architecture Search (NAS) has emerged as a powerful tool for automating the design of neural network architectures, offering a clear advantage over manual design. It significantly reduces the time and expert effort that architecture development requires. However, traditional NAS is computationally demanding: it relies on extensive resources, particularly GPUs, to explore large search spaces and identify optimal architectures. The process involves finding the combination of layers, operations, and hyperparameters that maximizes model performance on a specific task. Such resource-intensive methods are impractical for resource-constrained devices that need rapid deployment, which limits their widespread adoption.

Existing approaches discussed in the paper include hardware-aware NAS (HW NAS), which targets resource-constrained devices by integrating hardware metrics into the search process. However, these methods still use GPUs for model optimization, which limits their accessibility. In the TinyML domain, frameworks such as MCUNet and MicroNets have become popular for optimizing neural architectures for microcontrollers (MCUs), but they too require significant GPU resources. Recent research has introduced CPU-based HW NAS methods for tiny CNNs, but these have limitations, such as relying on standard CNN layers instead of more efficient alternatives.
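
To make "hardware metrics" concrete, the sketch below estimates MAC operations, FLASH, and RAM for a hypothetical stack of 1D convolution layers, the kind of analytic cost a hardware-aware search can compute without running the model. It is an illustration under stated assumptions, not the cost model used by TinyTNAS, MCUNet, or MicroNets: the `Conv1D` and `estimate_cost` names are hypothetical, layers are assumed to use stride 1 with "same" padding, FLASH is approximated by the parameter count, and RAM by the largest input-plus-output activation pair that must be live at once, with 1 byte per value (int8) by default.

```python
from dataclasses import dataclass


@dataclass
class Conv1D:
    """Hypothetical 1D convolution layer (stride 1, 'same' padding assumed)."""
    in_channels: int
    out_channels: int
    kernel_size: int


def estimate_cost(layers, seq_len, bytes_per_value=1):
    """Rough MAC, FLASH and RAM estimates for a stack of Conv1D layers.

    bytes_per_value=1 assumes int8 weights and activations; use 4 for float32.
    """
    macs = 0
    params = 0
    peak_activations = 0
    for layer in layers:
        # Every output position of every output channel needs
        # in_channels * kernel_size multiply-accumulates.
        macs += seq_len * layer.out_channels * layer.in_channels * layer.kernel_size
        # Weights plus one bias per output channel are stored in FLASH.
        params += layer.out_channels * (layer.in_channels * layer.kernel_size + 1)
        # The input and output buffers of a layer coexist in RAM while it runs.
        live = seq_len * (layer.in_channels + layer.out_channels)
        peak_activations = max(peak_activations, live)
    return {
        "macs": macs,
        "flash_bytes": params * bytes_per_value,
        "ram_bytes": peak_activations * bytes_per_value,
    }


# Example: a 3-axis accelerometer window of 128 samples.
model = [Conv1D(3, 16, 5), Conv1D(16, 32, 3)]
print(estimate_cost(model, seq_len=128))
```

For this two-layer model the estimate is 227,328 MACs, 1,824 bytes of FLASH, and 6,144 bytes of RAM. Real deployments also account for runtime overhead, operator fusion, and memory planning, so figures like these are only a first-order filter.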

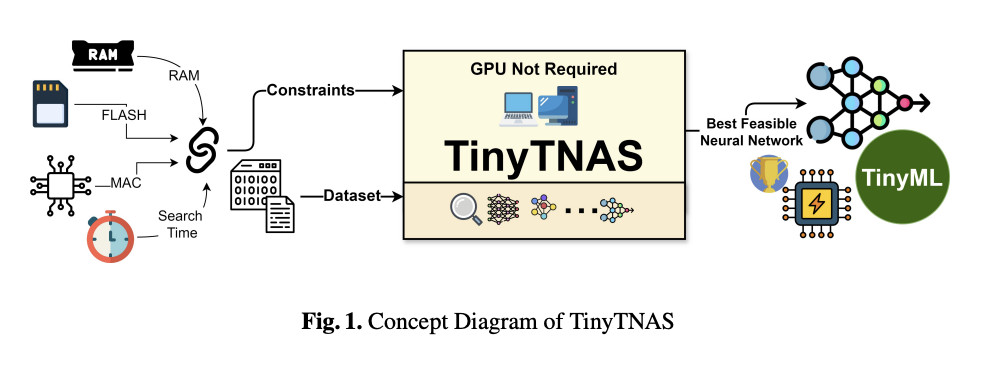

A team of researchers from the Indian Institute of Technology Kharagpur, India, has proposed TinyTNAS, a hardware-aware, multi-objective Neural Architecture Search tool designed specifically for TinyML time series classification. TinyTNAS runs efficiently on CPUs, making it more accessible and practical for a wider range of applications. Users can define constraints on RAM, FLASH, and MAC operations, and TinyTNAS searches for optimal neural network architectures within those limits. A distinctive feature is its support for time-bound searches, which return the best model found within a user-specified duration.
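
The article does not describe TinyTNAS's actual search strategy, so the following is only a minimal sketch of the general idea of a time-bound, constraint-aware search, using plain random search as a stand-in. The function `time_bound_search` and the callables `sample`, `cost`, and `score` are hypothetical: `cost` returns estimated resource usage, `limits` holds the user's RAM, FLASH, and MAC budgets, and `score` stands in for training and validating a candidate, which is the expensive step in practice.

```python
import random
import time


def time_bound_search(sample, cost, score, limits, budget_s, seed=0):
    """Random search that keeps the best-scoring candidate within limits.

    sample(rng) -> candidate, cost(candidate) -> dict of resource usage,
    score(candidate) -> float (higher is better). All are hypothetical
    placeholders supplied by the caller.
    """
    rng = random.Random(seed)
    deadline = time.monotonic() + budget_s
    best, best_score, evaluated = None, float("-inf"), 0
    while time.monotonic() < deadline:
        candidate = sample(rng)
        usage = cost(candidate)
        # Reject candidates that exceed any user-defined limit before
        # paying for the (expensive) score evaluation.
        if any(usage[k] > limit for k, limit in limits.items()):
            continue
        s = score(candidate)
        evaluated += 1
        if s > best_score:
            best, best_score = candidate, s
    # When the time budget runs out, return whatever is best so far.
    return best, best_score, evaluated


# Toy search space: (channels, kernel_size) of a single Conv1D layer.
def sample(rng):
    return (rng.choice([8, 16, 32, 64]), rng.choice([3, 5, 7]))


def cost(c):
    channels, kernel = c
    # Toy cost model: one Conv1D layer over a 128-step, 3-channel input.
    return {
        "macs": 128 * channels * 3 * kernel,
        "flash_bytes": channels * (3 * kernel + 1),
        "ram_bytes": 128 * (3 + channels),
    }


def score(c):
    # Placeholder for validation accuracy: pretend larger models score higher.
    channels, kernel = c
    return channels * kernel


limits = {"macs": 50_000, "flash_bytes": 2_000, "ram_bytes": 4_096}
best, best_score, n = time_bound_search(sample, cost, score, limits, budget_s=0.2)
print(best, best_score, n)
```

In this toy setup the RAM limit rules out 32 and 64 channels, so the search settles on `(16, 7)`. Checking cheap analytic constraints before scoring is the key design choice: on a CPU, evaluating a candidate dominates the runtime, so infeasible architectures should be discarded before any training happens.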

TinyTNAS is designed to work across a variety of time-series datasets, and its evaluation spans the lifestyle, healthcare, and human-computer interaction domains. The study uses five datasets: UCIHAR, PAMAP2, and WISDM for human activity recognition, and MIT-BIH and the PTB Diagnostic ECG Database for healthcare applications. UCIHAR provides triaxial linear acceleration and angular velocity data; PAMAP2 captures 18 physical activities recorded with IMU sensors and a heart rate monitor; and WISDM contains accelerometer and gyroscope data. MIT-BIH includes annotated ECG recordings covering various arrhythmias, while the PTB Diagnostic ECG Database comprises ECG records from subjects with different cardiac conditions.

The results show strong performance for TinyTNAS across all five datasets. On UCIHAR, it achieves substantial reductions in RAM, MAC operations, and FLASH memory while maintaining superior accuracy and reducing latency 149-fold. On PAMAP2 and WISDM, it reduces RAM usage sixfold and significantly lowers other resource usage without losing accuracy. The search itself is also efficient, completing within 10 minutes in a CPU environment. Together, these results demonstrate TinyTNAS's effectiveness in optimizing neural network architectures for resource-constrained TinyML applications.

In this paper, the researchers introduced TinyTNAS, which represents a significant advance in bridging Neural Architecture Search (NAS) and TinyML for time series classification on resource-constrained devices. It runs efficiently on CPUs without GPUs and lets users define constraints on RAM, FLASH, and MAC operations to find optimal neural network architectures. Results on multiple datasets demonstrate significant performance improvements over existing methods. This work raises the bar for optimizing neural network designs for AIoT and for low-cost, low-power embedded AI applications, and it is one of the first NAS tools designed specifically for TinyML time series classification.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post TinyTNAS: A Groundbreaking Hardware-Aware NAS Tool for TinyML Time Series Classification appeared first on MarkTechPost.