Information retrieval (IR) is a crucial area of research focusing on identifying and ranking relevant documents from extensive datasets to meet user queries effectively. As datasets grow, the need for precise and fast retrieval methods becomes even more critical. Traditional retrieval systems often rely on a two-step process: a computationally efficient method first retrieves a set of candidate documents, which are then re-ranked using more sophisticated models. Neural models, which have become increasingly popular in recent years, are highly effective for re-ranking but often come with significant computational costs. Their ability to consider the query and the document jointly during ranking makes them powerful but difficult to scale to large datasets. The challenge lies in developing methods that maintain efficiency without compromising the accuracy and quality of search results.

A central problem in modern retrieval systems is balancing computational cost and accuracy. While traditional models like BM25 offer efficiency, they often lack the depth needed to rank complex queries accurately. On the other hand, advanced neural models like BERT significantly enhance performance by improving the quality of re-ranked documents. However, their high computational requirements make them impractical for large-scale use, particularly in real-time environments where latency is a major concern. The challenge for researchers has been to create methods that are both computationally feasible and capable of delivering high-quality results. Addressing this issue is crucial for improving IR systems and making them more adaptable to large-scale applications, such as web search engines or specialized database queries.
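The two-stage pattern described above can be sketched in a few lines. This is a minimal illustration, not code from any library: the BM25 function uses one common variant of the formula, and `overlap_reranker` is a hypothetical stand-in for the expensive neural model that would normally run in the second stage.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer; real systems use something richer."""
    return text.lower().split()


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score every document against the query with a common BM25 variant."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    # Document frequency: in how many documents each term appears.
    df = Counter(term for toks in tokenized for term in set(toks))
    scores = []
    for toks in tokenized:
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue
            idf = math.log(1 + (n_docs - df[term] + 0.5) / (df[term] + 0.5))
            norm = tf[term] + k1 * (1 - b + b * len(toks) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores


def overlap_reranker(query, doc):
    """Hypothetical placeholder for a neural re-ranker: fraction of query terms in doc."""
    q_terms = set(tokenize(query))
    return len(q_terms & set(tokenize(doc))) / len(q_terms)


def retrieve_then_rerank(query, docs, rerank_fn, first_stage_k=3, final_k=2):
    """Stage 1: cheap BM25 over all docs. Stage 2: costlier scorer on the survivors."""
    first = bm25_scores(query, docs)
    candidates = sorted(range(len(docs)), key=lambda i: first[i], reverse=True)
    candidates = candidates[:first_stage_k]
    rescored = [(i, rerank_fn(query, docs[i])) for i in candidates]
    rescored.sort(key=lambda pair: pair[1], reverse=True)
    return rescored[:final_k]
```

The point of the split is that `rerank_fn` is only called `first_stage_k` times per query, regardless of how large the collection is, which is what keeps an expensive second-stage model affordable.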

Several methods currently exist for re-ranking documents within retrieval pipelines. Among the most popular are cross-encoder models, such as BERT, which process queries and documents simultaneously for higher accuracy. These models, although effective, are computationally intensive and require a significant amount of resources. MonoT5, another method, employs sequence-to-sequence models for re-ranking but shares similar computational demands. ColBERT-based methods use late interaction techniques to improve retrieval but require specific hardware optimizations to be effective. Some recent approaches, such as Cohere-Rerank, offer competitive re-ranking capabilities through online APIs, but access to these models remains limited and dependent on external platforms. These existing solutions, while effective, create a fragmented ecosystem where switching between different re-ranking methods often requires substantial code modification.
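To make the "late interaction" idea concrete, here is a rough sketch of the MaxSim scoring used by ColBERT-style models: each query token embedding is matched to its most similar document token embedding, and the maxima are summed. The two-dimensional vectors below are made-up toy values, not real model outputs, and the function names are illustrative only.

```python
def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def maxsim_score(query_vecs, doc_vecs):
    """Late interaction: for each query token, take its best-matching
    document token, then sum those maxima over all query tokens."""
    return sum(max(dot(q, d) for d in doc_vecs) for q in query_vecs)


# Toy per-token embeddings (hypothetical values for illustration).
query_vecs = [[1.0, 0.0], [0.0, 1.0]]
doc_a = [[1.0, 0.0], [0.0, 1.0]]  # covers both query tokens
doc_b = [[1.0, 0.0], [1.0, 0.0]]  # covers only the first query token

score_a = maxsim_score(query_vecs, doc_a)
score_b = maxsim_score(query_vecs, doc_b)
```

Because the document vectors do not depend on the query, they can be computed ahead of time, whereas a cross-encoder has to run the full model on every query-document pair at search time.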

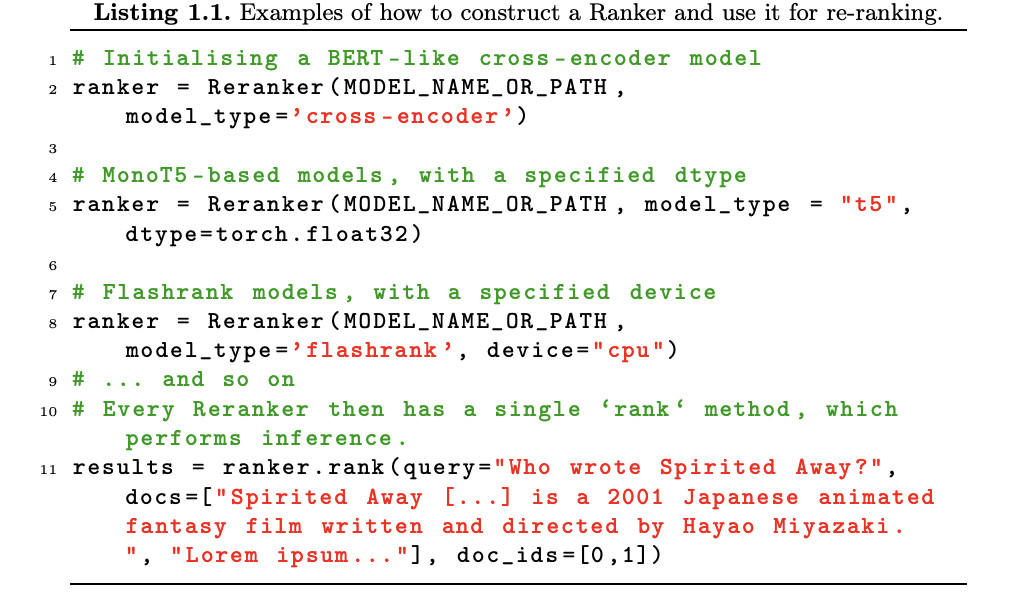

Researchers from Answer.AI introduced rerankers, a lightweight Python library designed to unify various re-ranking methods under a single interface. rerankers provides a simple yet powerful tool that allows researchers to experiment with different re-ranking techniques by changing just a single line of code. The library supports many re-ranking models, including MonoT5, FlashRank, and cross-encoders like BERT. Its primary aim is to reduce the difficulty of integrating new re-ranking methods into existing retrieval pipelines without sacrificing performance. The library’s key principles include minimal code changes, ease of use, and performance parity with original implementations, making it a valuable tool for researchers and practitioners in information retrieval.

The rerankers library revolves around the Reranker class, the primary interface for loading models and handling re-ranking tasks. Users can switch between different re-ranking methods with minimal effort, as rerankers is compatible with modern Python versions and the HuggingFace Transformers library. For example, initializing a BERT-like cross-encoder model can be done by specifying the model type as ‘cross-encoder,’ while switching to a FlashRank model requires only adding a device type like ‘cpu’ to optimize performance. This design allows users to experiment with different models and optimize retrieval systems without extensive coding. The library also provides utility functions for retrieving top-k candidates or outputting scores for knowledge distillation.
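The design idea, one entry point whose backend is picked by a single argument, can be illustrated with a self-contained toy. Everything in this sketch is hypothetical: `ToyReranker`, `RankedList`, the backend names, and the scoring functions are invented for illustration and do not reproduce the rerankers API or its models.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class ScoredDoc:
    doc_id: int
    text: str
    score: float


class RankedList:
    """Holds re-ranked results, best first, with small convenience helpers."""

    def __init__(self, results: List[ScoredDoc]):
        self.results = sorted(results, key=lambda r: r.score, reverse=True)

    def top_k(self, k: int) -> List[ScoredDoc]:
        return self.results[:k]

    def scores_by_id(self) -> Dict[int, float]:
        # Raw scores, e.g. usable as targets when distilling into another model.
        return {r.doc_id: r.score for r in self.results}


# Hypothetical scoring backends standing in for real models.
def term_overlap(query: str, doc: str) -> float:
    q = set(query.lower().split())
    return len(q & set(doc.lower().split())) / len(q)


def length_penalised_overlap(query: str, doc: str) -> float:
    return term_overlap(query, doc) / (1 + len(doc.split()))


BACKENDS: Dict[str, Callable[[str, str], float]] = {
    "overlap": term_overlap,
    "short-docs": length_penalised_overlap,
}


class ToyReranker:
    """One entry point; the backend is chosen by a single string argument."""

    def __init__(self, model_type: str):
        if model_type not in BACKENDS:
            raise ValueError(f"unknown model_type: {model_type!r}")
        self._score = BACKENDS[model_type]

    def rank(self, query: str, docs: List[str]) -> RankedList:
        return RankedList(
            [ScoredDoc(i, d, self._score(query, d)) for i, d in enumerate(docs)]
        )


docs = [
    "a unified library for reranking documents in retrieval pipelines",
    "reranking library",
]
ranker = ToyReranker("overlap")  # change only this string to swap backends
best = ranker.rank("unified reranking library", docs).top_k(1)[0]
```

Since every backend returns the same result type, the code that consumes the ranking does not need to change when the model does, which is the property the article attributes to the library.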

Regarding performance, the rerankers library has shown impressive results across various datasets. Evaluations were conducted on three datasets commonly used in the information retrieval community: MS MARCO, SciFact, and TREC-COVID, all subsets of the BEIR benchmark. In these tests, rerankers maintained performance parity with existing re-ranking implementations, achieving consistent top-1000 reranking results over five different runs. For instance, in one notable experiment with MonoT5, rerankers produced scores nearly identical to the original implementation, with a performance difference of less than 0.05%. Although the library struggled to reproduce results for certain models, such as RankGPT, these deviations were minimal. Moreover, rerankers played a pivotal role in knowledge distillation tasks, enabling first-stage retrieval models to emulate the scores generated by re-ranking models, thereby enhancing the accuracy of initial retrieval stages.
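The article does not say which training objective is used for distillation. As one simple possibility, a first-stage "student" model can be trained to minimise the mean squared error between its own scores and the re-ranker's "teacher" scores for the same query-document pairs. The function below is a sketch under that assumption, with a hypothetical name.

```python
def mse_distillation_loss(student_scores, teacher_scores):
    """Mean squared error between student and teacher scores for the same
    query-document pairs; lower means the student mimics the teacher better."""
    if len(student_scores) != len(teacher_scores):
        raise ValueError("score lists must have the same length")
    if not student_scores:
        raise ValueError("score lists must not be empty")
    squared_errors = [(s - t) ** 2 for s, t in zip(student_scores, teacher_scores)]
    return sum(squared_errors) / len(squared_errors)
```

In practice the loss would be computed on tensors inside a training loop so that gradients flow back into the student model; the plain-Python version only shows the quantity being minimised.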

In conclusion, the rerankers library addresses the inefficiencies and complexities of current retrieval pipelines by unifying different approaches into a single, easy-to-use interface. It allows for flexible experimentation with different models, lowering the barrier to entry for researchers and practitioners alike. The rerankers library ensures that switching between re-ranking methods does not compromise performance, offering a modular, extensible, and high-performing solution for document retrieval. This innovation not only enhances the accuracy and efficiency of retrieval systems but also contributes to future advancements in the field of information retrieval.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

The post Answer.AI Releases ‘rerankers’: A Unified Python Library Streamlining Re-ranking Methods for Efficient and High-Performance Information Retrieval Systems appeared first on MarkTechPost.
