This is a guest post from Luke Walker Goetzke, PhD, and Director of Data Science at Phreesia.

Phreesia is the trusted leader in patient activation, providing tools to healthcare providers, advocacy groups, life sciences companies, and other organizations to help make care easier every day and activate patients in their care. Health and wellness organizations can use Phreesia’s network solutions offerings to serve personalized health messages directly to clinically relevant patients who opt in to receive such content on its platform.

Phreesia delivers tens of millions of pieces of personalized content each year across the United States via its platform, including educational materials, public health campaigns, clinical trial opportunities, market research studies, financial support resources, diagnosis and screening prompts for at-risk and symptomatic individuals, and information on treatment options. Because messages are delivered at critical decision-making moments, such as just before doctor-patient discussions, content leads to behavioral change and measurable improvements in the health outcomes of recipients. In one recent example, a lung cancer screening reminder was delivered to individuals 50-80 years old with a history of smoking, since catching lung cancer early provides much better treatment options for those affected. Individuals who saw the content were 234% more likely to get screened for lung cancer and 105% more likely to be diagnosed with lung cancer than an identical audience who did not see the message.

Phreesia had an on-premises SQL Server 2019 database supporting both production transactional and analytical workloads. This 30 TB database had experienced significant growth, expanding from 24 TB to 30 TB during the course of 2023. As data volumes continued to increase rapidly to meet business demands, the current database infrastructure was struggling to support the projected load and was at risk for disruption. Any downtime could potentially lead to loss of revenue and lead to poorer health outcomes for patients. With ongoing analytical queries also using this database, response times for production workloads had slowed down significantly, negatively impacting time-sensitive business operations. With the given projections for data growth, the current system couldn’t sustain performance at the levels needed to support future business expansion.

To resolve these system limitations and make sure analytical workloads don’t continue to impact production system performance, Phreesia decided to replicate analytical applications and underlying data to a new Amazon Simple Storage Service (Amazon S3) data lake environment.

In this post, we discuss how Phreesia used AWS Database Migration Service (AWS DMS) to replicate their on-premises database to AWS in an effective and cost-optimized manner. Because of the database’s large size and complex data structure, properly tuning the AWS DMS configuration was critical to minimize the migration duration and cost. We outline the fine-tuning techniques that were applied to optimize the AWS DMS task settings, instance size, IOPS provisioning, and table mappings. Applying these performance optimizations allowed Phreesia to develop a migration strategy to move this 30 TB database to Amazon S3 in just 2 days without disruption to production workloads.

Solution overview

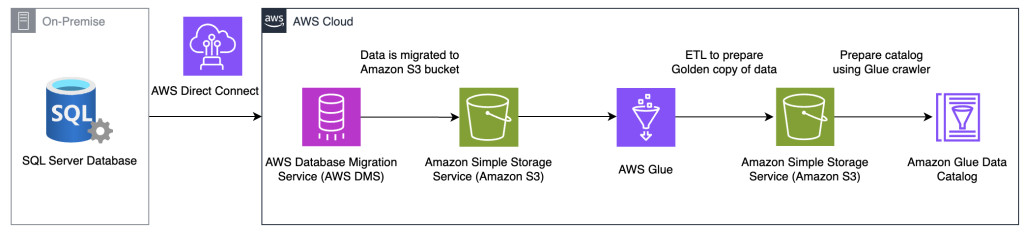

The following diagram illustrates the solution architecture.

The on-premises SQL Server is connected to AWS DMS through AWS Direct Connect. The data from the on-premises SQL Server is transferred to an S3 bucket using AWS DMS.

After the data is stored in the S3 bucket, AWS Glue performs an extract, transform, and load (ETL) process to prepare a golden copy of the data. This golden copy is stored in another S3 bucket.

The golden copy of the data is then used by an AWS Glue crawler to prepare a data catalog, which is stored in the AWS Glue Data Catalog.

In the following sections, we discuss the key components and considerations in the migration approach.

Impact on the source

Understanding server specifications is vital for assessing the hardware capabilities required for efficient SQL Server migration. Proper server specifications make sure the migration process can run smoothly without encountering performance bottlenecks or resource constraints. Adequate server resources contribute to the overall success and performance of the migration, facilitating seamless data transfer and replication.

Phreesia had the following server specifications:

Version: SQL Server 2019 Enterprise edition

Processors: 112

Memory: 2.5 TB

Transaction logs storage space: 2 TB

Transaction logs retention period: 7 days

We observed load on the SQL Server instance increase by at least 10% due to enabling bulk-logged mode and change data capture (CDC) on multiple large tables with large binary object (LOB) columns.

Source database recovery strategy

Before we delve into the intricacies of AWS DMS, it’s crucial to understand the different recovery models in SQL Server:

Simple – This is the most basic form of recovery model in SQL Server. It enables you to take full or differential backups since the last full backup, making it efficient for regular daily backups. However, this model doesn’t provide point-in-time recovery.

Full – This model keeps all transaction data in the transaction log until either a transaction log backup occurs or the transaction log is truncated. It offers comprehensive data protection, enabling complete point-in-time recovery in case of data loss or corruption. However, this model requires provisioning adequate storage space for significant transaction log growth.

Bulk-logged – This model is almost the same as full, except certain bulk operations aren’t fully logged in the transaction log and therefore don’t take as much storage space as the full recovery model for the transaction log.

In this implementation, we needed to convert from a simple model to a bulk-logged recovery model. A bulk-logged recovery model provides the foundation for data consistency and point-in-time recovery, minimizes downtime during migration, and reduces the overhead on the SQL Server instance for maintaining transaction logs.

Enabling the source for CDC

Continuous data capture (CDC) is a functionality offered by AWS DMS that facilitates ongoing replication of changes from the source database to the target database, even after the initial full-load migration is complete. When a CDC migration is configured, AWS DMS captures all the inserts, updates, and deletes occurring in the source database and applies those changes to the target database in near real time.

Refer to Capturing data changes for self-managed SQL Server on-premises or on Amazon EC2 to decide which replication mode is best for your use case: MS-Replication or MS-CDC. For Phreesia’s case in this post, there were tables without unique keys. Therefore, we decided to use MS-CDC instead of MS-Replication.

Also, a column store index was present on a few tables. We removed the column store index and added a primary key and a secondary index on the table.

To set up your database for MS-CDC, refer to Setting up ongoing replication on a self-managed SQL Server.

Set the retention period

The polling interval represents the time period, measured in seconds, during which changes made in the source database instance are available for AWS DMS to capture and consume. This setting prevents the truncation of the transaction log for the specified duration, which could potentially lead to increased storage utilization as the transaction log grows in size.

For large datasets, like the one from Phreesia, and to mitigate the risk of truncating changes, we set the polling interval to 72 hours. This extended duration makes sure AWS DMS has sufficient time to read and process the changes effectively. However, depending on the rate of change occurring in the source database, you can also consider testing with a lower polling interval to optimize resource utilization.

Update the retention period on the source database for the changes to be available on the source transactions. For instructions on setting the polling interval, refer to Setting up ongoing replication on a cloud SQL Server DB instance.

Output file format

The appropriate output file format is an important factor because it can significantly impact the efficiency, performance, and scalability of the migration process, as well as the subsequent data processing and analysis tasks within a data lake architecture. Analyzing the data format of your source database is crucial in determining the most suitable output file format. Consider factors such as the structure of the data, the presence of large or complex data types, the frequency of updates and deletes, and the intended use cases for the replicated data within the data lake architecture.

In the case of Phreesia’s dataset, we encountered a unique challenge. The dataset included LOB columns with semi-structured formats and no common unique field delimiter, such as a comma or pipe. In such scenarios, traditional file formats such as CSV may not be the most efficient choice, because any combination of characters could potentially be part of the field values. To address this issue, we opted for the Parquet file format, which is designed to handle complex and semi-structured data efficiently within a data lake architecture.

Parquet file format offers several advantages, including efficient storage and retrieval of data, support for complex data types and nested data structures, and superior performance for analytical workloads within a data lake environment.

Moreover, because the dataset contains tables with frequent updates and deletes, we used the Iceberg table structure in conjunction with the Parquet file format. The Iceberg table structure is designed to handle data mutations efficiently, making it an ideal choice for workloads that involve frequent data modifications within a data lake architecture. By combining the Parquet file format with the Iceberg table structure, we provided optimal performance, throughput, and scalability during the migration process and subsequent data processing tasks within the data lake environment.

Replicate large tables

When migrating large tables from your on-premises SQL Server instance to AWS using AWS DMS, it’s essential to optimize the migration process to achieve efficient performance and minimize the impact on your source database. During a full load, by default, AWS DMS uses a single thread to read data from each table being replicated, which can cause performance issues for larger tables.

During the full-load data migration phase, AWS DMS initiates a SELECT statement on the source table, performing a full table scan. However, a full table scan on large tables is not recommended, because it causes all records to be scanned sequentially, resulting in a significant amount of unnecessary I/O. This can degrade the overall performance of the source database and slow down migration. To address this challenge, AWS DMS provides built-in options to load tables in parallel using multiple different partition load settings. For more information, refer to Table and collection settings rules and operations.

Phreesia had some extremely large tables with billions of rows of data, and these tables weren’t partitioned. We used the range partitions feature in AWS DMS for partition load range. This approach involves dividing the table into multiple ranges and loading each range in parallel, taking advantage of the parallelism capabilities of AWS DMS.

One of the critical factors in choosing range segmentation is identifying the right number of boundaries and making sure the data is distributed evenly across these boundaries. We identified the unique key on the tables, set up boundaries based on the range, and chose a value for the MaxFullLoadSubTasks setting to have more threads during the full-load phase. You should also take into consideration the amount of vCPU the replication instance has.

Replication instance type considerations

Selecting the appropriate replication instance type is a key factor in optimizing performance and resource utilization during the migration process from an on-premises SQL Server to AWS using AWS DMS. AWS DMS offers a variety of replication instance types with varying levels of CPU, memory, and network bandwidth capabilities, allowing you to choose the best fit based on your specific workload requirements.

One of the key considerations when choosing the replication instance type is monitoring the CPU utilization during the migration process. High CPU usage may indicate resource contention and potential performance issues, which can lead to delays or interruptions in the migration. Amazon CloudWatch provides comprehensive monitoring metrics for the replication instance, enabling you to gain insights into CPU utilization and make informed decisions about the instance type selection.

For this migration, we chose a C-type instance due to the need for CPU-intensive operations during the process. The primary reasons for this choice were:

Parquet file format conversion – As mentioned earlier, we opted for the Parquet file format as the output, which required compression and conversion on the replication instance during migration. This added CPU load on the instance.

Parallel loading for large tables – We enabled the parallel load feature for large tables, using multiple threads to accelerate the loading process. This parallel processing also increased the CPU demand on the replication instance.

C-type instances offer better CPU performance compared to memory-optimized R-type instances, which is advantageous for workloads involving complex data transformations or computations like the conversion to Parquet format.

Replication instance storage size considerations

Estimating the appropriate storage capacity is essential for accommodating the replicated data and providing sufficient capacity for future growth. Additionally, your storage size contributes towards read and write IOPs in Amazon Elastic Block Store (Amazon EBS). Refer to gp2 volume performance for more information about the IOPs performance baseline.

We choose AWS DMS replication instance storage of 1 TB to allocate sufficient storage and elevate baseline IOPs performance.

Monitoring AWS DMS tasks

Monitoring AWS DMS tasks provides visibility into the progress, status, statistics, and performance of data replication from SQL Server to the target environment in AWS.

During the migration process, various factors can influence the efficiency and success of the data replication. By observing AWS DMS tasks, administrators can identify any issues or bottlenecks that may arise, such as data synchronization errors, network latency, or performance degradation. Early detection of these problems is essential for timely intervention and troubleshooting, so migration objectives are met within the expected timeframe and with minimal disruptions to operations. For more information, refer to AWS DMS key troubleshooting metrics and performance enhancers.

The following screenshots show examples of the AWS DMS task metrics you can monitor.

Replicate LOB columns

Migrating LOB columns is challenging for any database migration because of the large amount of data they hold. AWS DMS provides three options to replicate LOB columns: limited, full, and inline mode.

For identifying the max LOB size in each column for SQL Server, you can use the following query:

When migrating database tables with LOB columns using AWS DMS, it’s important to consider how to handle those large data types efficiently. In this migration, we used limited LOB mode rather than full LOB mode for tables containing LOB columns. Limited LOB mode only replicates the first n KB of LOB data in order to reduce migration time and cost because AWS DMS pre-allocates memory and loads the LOB data in bulk. Based on an analysis of the source database, we selected the maximum LOB size value of 1 MB from the tables and set this as the max LOB size (K) option in AWS DMS. This helped replicate the full content of all LOB columns without risk of truncation.

Benefits and results

By replicating SQL Server data directly to Amazon S3 using AWS DMS, Phreesia was able to realize the following technical and business benefits:

Replicated a 30 TB SQL Server database to Amazon S3 in 2 days.

Reduced the overall cost by removing the need for ongoing physical hardware maintenance and providing flexibility to optimize search and analytical processes for particular business cases. Phreesia can now search, on average, 300 times more records in a comparable amount of time for less than $1.

Integrated sophisticated natural language processing (NLP) directly into the search process.

Provided a scalable, repeatable, and reliable mechanism to collect valuable health insights from on-premises SQL Server.

Removed existing constraints around concurrency and sample size in the search process to identify and forecast customer audience segments.

Decreased sampling uncertainty, resulting in better detection and characterization of rare audience segments and more robust and accurate forecasts.

Maintained data integrity throughout the migration and effectively eliminated any gaps in the data record. AWS DMS also provided a method to incrementally migrate production data as it streamed in from different applications with no disruption to the ongoing data ingestion.

Unlocked increased flexibility, making it straightforward to restructure and repartition data at scale to tailor to specific use cases, as well as increased portability, allowing for seamless ingestion or exportation of data to other platforms as needed.

Conclusion

In this post, we saw how Phreesia embarked on the journey to replicate and modernize its forecasting workload to support its growth. The key factors that drove the migration decisions were the ability to scale the platform to support data growth, improve query speed and quantity of queries, and facilitate future innovation. The modernized forecasting workload on AWS also decreases the ongoing operational support burden for engineers, and the scalable, reliable, and repeatable nature of the workflow opens significant opportunity for growth.

By migrating their forecasting data to Amazon S3, the Phreesia team enabled the seamless integration of powerful parallel computing techniques, NLP, machine learning (ML), and artificial intelligence (AI) to significantly increase the speed, concurrency, scale, and sophistication of their searches and downstream analytical and predictive processes. The net result is better identification and characterization of audience segments, more robust and accurate forecasts, and ultimately more optimal engagement with patients using the Phreesia platform who consent to receiving personalized content.

To learn more about migrating SQL Server databases to AWS using AWS DMS, visit the AWS DMS product page.

About the Authors

Luke Walker Goetzke, PhD, is a Director of Data Science at Phreesia. He is a results-driven data science leader with a unique blend of academic and industry experience. He has a proven track record of applying analytical expertise to complex problems, from searching for dark matter to improving healthcare outcomes.

Vaibhav Sabharwal is a Senior Solutions Architect with Amazon Web Services based out of New York. He is passionate about learning new cloud technologies and assisting customers in building cloud adoption strategies, designing innovative solutions, and driving operational excellence. As a member of the Financial Services Technical Field Community at AWS, he actively contributes to the collaborative efforts within the industry.

Tim Ramos is a Senior Account Manager at AWS. He has 12 years of sales experience and 10 years of experience in cloud services, IT infrastructure, and SaaS. Tim is dedicated to helping customers develop and implement digital innovation strategies. His focus areas include business transformation, financial and operational optimization, and security. Tim holds a BA from Gonzaga University and is based in New York City.

Robert Daly is a Senior Database Specialist Solutions Architect at AWS, focusing on Amazon RDS, Amazon Aurora, and AWS DMS. He has helped multiple enterprise customers move their databases to AWS, providing assistance on performance and best practices. Robert enjoys helping customers build technical solutions to solve business problems.

Source: Read More