In natural language processing (NLP), handling long text sequences effectively is a critical challenge. Traditional transformer models, widely used in large language models (LLMs), excel at many tasks but struggle when processing lengthy inputs. These limitations stem primarily from the attention mechanism, whose computational cost grows quadratically and whose memory cost grows linearly with sequence length. As inputs get longer, these demands become prohibitive, making it difficult to maintain both accuracy and efficiency. This has driven the development of alternative architectures that aim to handle long sequences more effectively while preserving computational efficiency.
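To make the scaling argument concrete, here is a rough back-of-the-envelope sketch. It counts only pairwise attention scores against per-token recurrent steps; the function names are illustrative, and real costs also depend on model width, layer count, and hardware.

```python
def attention_score_count(seq_len: int) -> int:
    """Pairwise token-to-token scores computed by full self-attention."""
    return seq_len * seq_len


def recurrent_step_count(seq_len: int) -> int:
    """State updates performed by a recurrent model: one per token."""
    return seq_len


# Doubling the input length quadruples the attention work
# but only doubles the recurrent work.
for n in (1_000, 2_000, 4_000):
    print(n, attention_score_count(n), recurrent_step_count(n))
```

Under this simplified count, the gap between the two approaches widens as inputs grow, which is the motivation for the recurrent-style alternatives discussed next.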

One of the key issues with long-sequence modeling in NLP is the degradation of information as text lengthens. Recurrent neural network (RNN) architectures, which often serve as the basis for these alternative models, are particularly prone to this problem. As input sequences grow longer, these models struggle to retain essential information from earlier parts of the text, and performance declines. This degradation is a significant barrier to developing more advanced LLMs that can handle extended text inputs without losing context or accuracy.
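A toy illustration of this effect, assuming a deliberately simplified scalar recurrence `h_t = decay * h_{t-1} + x_t` with a fixed `decay` below 1. This is not Mamba's actual state update, which is input-dependent; it only shows how the contribution of early tokens can shrink in a fixed-size state.

```python
def run_recurrence(inputs, decay):
    """Apply h_t = decay * h_{t-1} + x_t over the inputs, starting from h = 0."""
    state = 0.0
    for x in inputs:
        state = decay * state + x
    return state


def first_token_weight(decay, seq_len):
    """Weight of the first input inside the final state after seq_len steps."""
    return decay ** (seq_len - 1)


# The first token's share of the final state shrinks geometrically with length.
for n in (10, 100, 1000):
    print(n, first_token_weight(0.9, n))
```

In this toy setting the first token is effectively forgotten long before the sequence reaches a thousand tokens, which mirrors the degradation described above.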

Many methods have been introduced to tackle these challenges, including hybrid architectures that combine RNNs with transformers’ attention mechanisms. These hybrids aim to leverage the strengths of both approaches, with RNNs providing efficient sequence processing and attention helping to retain critical information across long sequences. However, these solutions often come with increased computational and memory costs, which reduces efficiency. Other methods extend the usable input length by improving a model’s length extrapolation without additional training. Yet these approaches typically yield only modest performance gains and only partially solve the underlying problem of information degradation.

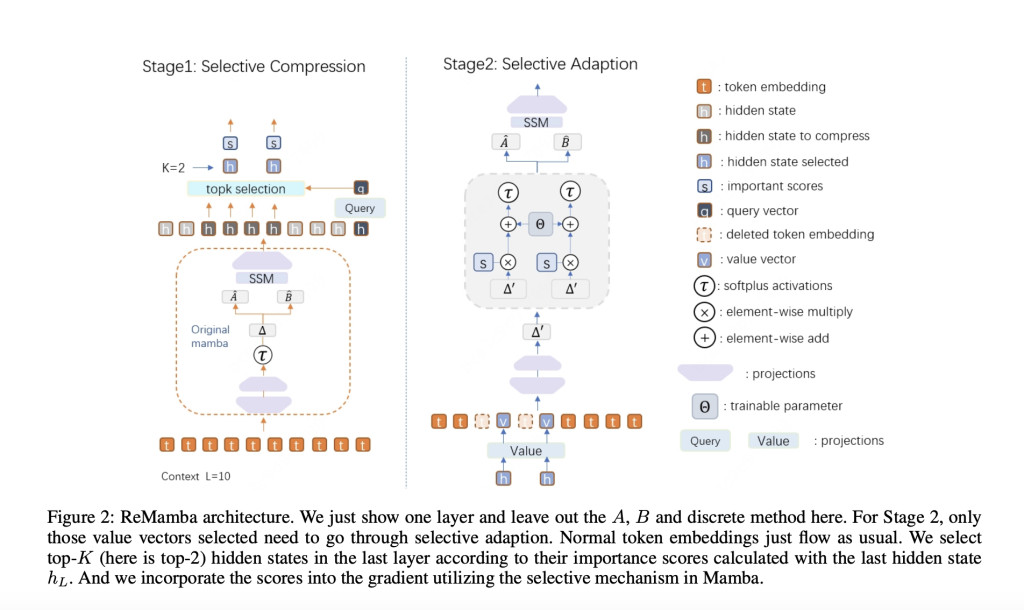

Researchers from Peking University, National Key Laboratory of General Artificial Intelligence, BIGAI, and Meituan introduced a new architecture called ReMamba, designed to enhance the long-context processing capabilities of the existing Mamba architecture. While efficient for short-context tasks, Mamba shows a significant performance drop when dealing with longer sequences. The researchers aimed to overcome this limitation by implementing a selective compression technique within a two-stage re-forward process. This approach allows ReMamba to retain critical information from long sequences without significantly increasing computational overhead, thereby improving the model’s overall performance.

ReMamba operates through a carefully designed two-stage process. In the first stage, the model employs three feed-forward networks to assess the significance of hidden states from the final layer of the Mamba model. These hidden states are then selectively compressed based on their importance scores, which are calculated using a cosine similarity measure. The compression reduces the required state updates, effectively condensing the information while minimizing degradation. In the second stage, ReMamba integrates these compressed hidden states into the input context, using a selective adaptation mechanism that allows the model to maintain a more coherent understanding of the entire text sequence. This method incurs only a minimal additional computational cost, making it a practical solution for enhancing long-context performance.
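The selection step in the first stage can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: it assumes the last hidden state acts as the query, skips the learned feed-forward projections entirely, and uses plain Python lists in place of tensors. The names `select_top_k`, `query`, and `k` are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def select_top_k(hidden_states, query, k):
    """Score each hidden state against the query and keep the k best, in original order."""
    scores = [cosine_similarity(h, query) for h in hidden_states]
    top = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:k]
    kept = sorted(top)  # restore sequence order for the second forward pass
    return kept, [hidden_states[i] for i in kept], scores


# Toy final-layer hidden states for a five-token input.
hidden_states = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1], [-1.0, 0.0], [1.0, 0.2]]
query = hidden_states[-1]  # assumption: the last hidden state serves as the query
kept, compressed, scores = select_top_k(hidden_states, query, k=3)
print(kept)
```

In a full model, the retained states would then be fed back into the input context for the second forward pass, so the sequence the model re-reads is shorter than the original.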

The effectiveness of ReMamba was demonstrated through extensive experiments on established benchmarks. On the LongBench benchmark, ReMamba outperformed the baseline Mamba model by 3.2 points; on the L-Eval benchmark, it achieved a 1.6-point improvement. These results highlight the model’s ability to approach the performance levels of transformer-based models, which are typically more powerful in handling long contexts. The researchers also tested the transferability of their approach by applying the same method to the Mamba2 model, resulting in a 1.6-point improvement on the LongBench benchmark, further validating the robustness of their solution.

ReMamba’s performance was particularly notable in its ability to handle varying input lengths. The model consistently outperformed the baseline Mamba model across different context lengths, extending the effective context length to 6,000 tokens, compared with 4,000 tokens for the fine-tuned Mamba baseline. This demonstrates ReMamba’s enhanced capacity to manage longer sequences without sacrificing accuracy or efficiency. Additionally, the model maintained a significant speed advantage over traditional transformer models, operating at speeds comparable to the original Mamba while processing longer inputs.

In conclusion, the ReMamba model addresses the critical challenge of long-sequence modeling with an innovative compression and selective adaptation approach. By retaining and processing crucial information more effectively, ReMamba closes the performance gap between Mamba and transformer-based models while maintaining computational efficiency. This research not only offers a practical solution to the limitations of existing models but also sets the stage for future developments in long-context natural language processing. The results from the LongBench and L-Eval benchmarks underscore the potential of ReMamba to enhance the capabilities of LLMs.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post ReMamba: Enhancing Long-Sequence Modeling with a 3.2-Point Boost on LongBench and 1.6-Point Improvement on L-Eval Benchmarks appeared first on MarkTechPost.
