Because model parameter counts have grown exponentially, training a model now requires more memory and compute than a single accelerator can provide. Training at scale therefore depends on effectively combining the processing power and memory of many GPUs. Acquiring a cluster of many identical high-end GPUs usually takes considerable time, whereas a sufficient number of heterogeneous GPUs is typically easy to obtain. Some academics have access to only a limited number of consumer-grade GPUs, which makes it impossible for them to train massive models on their own. Buying new equipment is also expensive because new GPU products are released so frequently. Making proper use of heterogeneous GPU resources can address these issues and speed up model exploration and experimentation. However, most current distributed training methods assume that all workers are identical. When these methods are applied directly to heterogeneous clusters, the faster GPUs sit idle for long periods at every synchronization point while they wait for the slower ones.
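
To make the synchronization cost concrete, here is a minimal Python sketch assuming synchronous data-parallel training, where every step lasts as long as the slowest worker. The throughput numbers (samples per second) and the helper names are hypothetical; they only illustrate why an equal batch split wastes GPU time on a mixed cluster.

```python
import math


def step_times(throughputs, batch_per_gpu):
    """Seconds each worker needs to process its share of one synchronous step."""
    return [b / t for t, b in zip(throughputs, batch_per_gpu)]


def idle_fraction(throughputs, batch_per_gpu):
    """Fraction of total GPU-time spent waiting at the synchronization barrier."""
    times = step_times(throughputs, batch_per_gpu)
    step = max(times)  # every worker waits for the slowest one before syncing
    return 1 - math.fsum(times) / (step * len(times))


# Hypothetical cluster: two fast GPUs and two GPUs running at half their speed.
throughputs = [100.0, 100.0, 50.0, 50.0]

# Equal split of a 256-sample batch: the fast GPUs finish early and wait.
print(idle_fraction(throughputs, [64, 64, 64, 64]))  # ≈ 0.25

# Throughput-proportional split of the same 256 samples: no waiting.
print(idle_fraction(throughputs, [80, 80, 40, 40]))  # ≈ 0.0
```

The proportional split only works if each GPU also has enough memory for its larger share, which is why memory capacity has to enter the balancing decision as well.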

Numerous studies have tried to incorporate heterogeneity into the search space of auto-parallel algorithms, but each addresses only some aspects of it. These approaches run smoothly only when the GPUs differ in both architecture and memory capacity (for example, a V100 and an A100), which limits how efficiently real heterogeneous GPU clusters can be exploited. Given the characteristics of 3D parallelism, current approaches fail in two cases: (1) when the GPUs share an architecture and differ only in memory capacity and compute capability, as with the A100-80GB and A100-40GB, and (2) when the cluster contains unequal numbers of each GPU type.

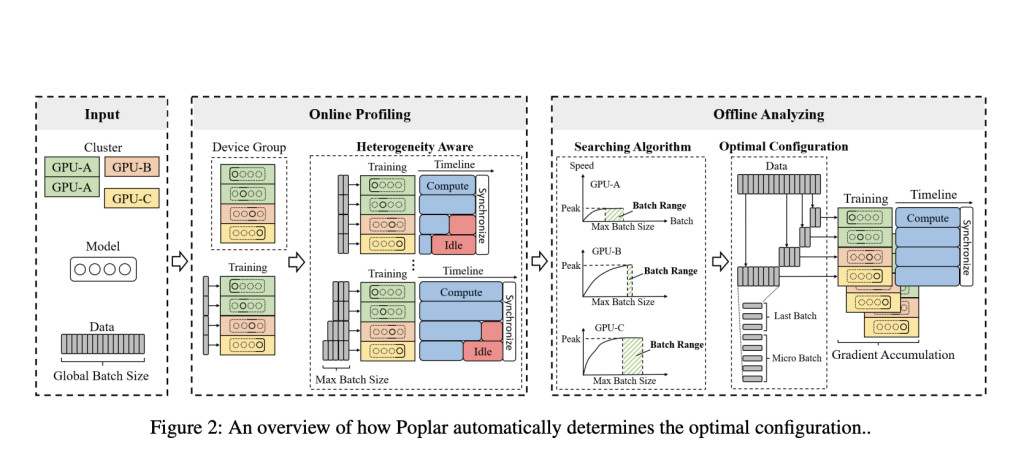

Researchers from Peking University, the PLA Academy of Military Science, and the Advanced Institute of Big Data have developed Poplar, a distributed training system that takes a comprehensive view of GPU heterogeneity, covering computing capability, memory capacity, GPU quantity, and their combinations. Poplar extends ZeRO to heterogeneous GPUs and assigns work to each GPU independently in order to maximize global throughput. The team also introduces a new method for evaluating GPU heterogeneity, performing fine-grained analyses of each ZeRO stage to close the gap between the cost model's predictions and real-world performance.
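
A per-stage analysis matters because each ZeRO stage shards a different part of the model state. The sketch below uses the mixed-precision Adam accounting from the original ZeRO paper (2 bytes per parameter each for fp16 weights and gradients, plus 12 bytes per parameter of optimizer state) and assumes standard, even sharding across GPUs. It is a simplified illustration, not Poplar's cost model: the function names and the 7B-parameter, 8-GPU example are hypothetical, and activations and communication buffers are ignored.

```python
def zero_model_state_bytes(num_params, num_gpus, stage, k=12):
    """Per-GPU model-state memory in bytes for ZeRO stages 0-3.

    fp16 weights and gradients take 2 bytes/param each; Adam optimizer state
    (fp32 master weights, momentum, variance) takes k = 12 bytes/param.
    Stage 1 shards optimizer state, stage 2 also shards gradients, and
    stage 3 also shards the weights. Activations are not counted.
    """
    weights = 2 * num_params
    grads = 2 * num_params
    optim = k * num_params
    n = num_gpus
    if stage == 0:
        return weights + grads + optim
    if stage == 1:
        return weights + grads + optim / n
    if stage == 2:
        return weights + (grads + optim) / n
    if stage == 3:
        return (weights + grads + optim) / n
    raise ValueError("stage must be 0, 1, 2, or 3")


def activation_headroom_gb(capacity_gb, num_params, num_gpus, stage):
    """Memory (GB) left for activations after the model states are placed."""
    return capacity_gb - zero_model_state_bytes(num_params, num_gpus, stage) / 1e9


params = 7_000_000_000  # hypothetical 7B-parameter model sharded over 8 GPUs
for capacity in (40, 80):  # e.g. A100-40GB vs. A100-80GB
    for stage in (2, 3):
        headroom = activation_headroom_gb(capacity, params, 8, stage)
        print(f"{capacity} GB GPU, ZeRO-{stage}: {headroom:.2f} GB for activations")
```

Because standard ZeRO places the same shard size on every GPU, the 80 GB card in this example keeps 66 GB for activations under ZeRO-3 versus 26 GB on the 40 GB card, so it can take a larger share of the batch.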

To guarantee load balance, the team designed a search algorithm that is independent of the batch allocation strategy. Because Poplar determines the optimal configuration across heterogeneous GPUs automatically, it removes the need for manual tuning and expert knowledge.
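
The article does not spell out Poplar's search or allocation procedure, so the following is only a hypothetical Python sketch of one batch allocation policy such a search could use. It assumes each GPU spends 1/throughput seconds per sample and that a memory model caps each GPU's batch; `allocate_batch` and its inputs are illustrative names, not part of Poplar.

```python
import heapq


def allocate_batch(global_batch, throughputs, max_batch):
    """Split a global batch across GPUs so the slowest GPU finishes earliest.

    Greedy rule: give each sample to the GPU whose finish time after taking it
    would be smallest, skipping GPUs that have reached their memory cap. For a
    linear cost of 1/throughput seconds per sample (throughputs must be
    positive), this minimizes the maximum per-GPU compute time.
    """
    if global_batch > sum(max_batch):
        raise ValueError("global batch does not fit in aggregate GPU memory")
    alloc = [0] * len(throughputs)
    # Heap entries: (finish time if this GPU takes one more sample, GPU index).
    heap = [(1 / t, i) for i, t in enumerate(throughputs) if max_batch[i] > 0]
    heapq.heapify(heap)
    for _ in range(global_batch):
        _, i = heapq.heappop(heap)
        alloc[i] += 1
        if alloc[i] < max_batch[i]:
            heapq.heappush(heap, ((alloc[i] + 1) / throughputs[i], i))
    return alloc


# Hypothetical GPU pair: the first is twice as fast as the second.
print(allocate_batch(12, [2.0, 1.0], [100, 100]))  # [8, 4]
# If the fast GPU's memory caps it at 6 samples, the rest spill over.
print(allocate_batch(12, [2.0, 1.0], [6, 100]))  # [6, 6]
```

Because the rule only needs throughput and memory-cap estimates as inputs, it could be replaced by a different allocation policy without changing how candidate configurations are enumerated.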

The researchers ran their tests on three different GPU clusters, each containing two kinds of GPUs. To measure end-to-end cluster utilization, they use TFLOPs (FLOPs/1e12), and each experiment's result is the average of 50 repetitions. They validated performance in the key experiments with Llama, then assessed generalizability with Llama and BERT models of different sizes. Across all trials, the global batch size is fixed at 2 million tokens.
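
As a rough illustration of the metric, the sketch below converts a measured step time into cluster-level TFLOPs and averages over repeated runs. It assumes the common 6 × parameters × tokens estimate of dense-transformer training FLOPs, which may differ from the paper's exact FLOP accounting; the 7B model size and the step times are hypothetical, and only the 2 million-token global batch comes from the experiments described above.

```python
from statistics import mean

TOKENS_PER_STEP = 2_000_000  # global batch size in tokens, as in the experiments


def cluster_tflops(num_params, tokens_per_step, step_seconds):
    """Achieved throughput in TFLOPs (FLOPs per second divided by 1e12).

    Uses the 6 * N * D approximation for one forward and backward pass of a
    dense transformer; attention and recomputation FLOPs are ignored.
    """
    flops = 6 * num_params * tokens_per_step
    return flops / step_seconds / 1e12


def averaged_tflops(num_params, tokens_per_step, step_seconds_per_run):
    """Mean TFLOPs over repeated measurements (the experiments use 50 runs)."""
    return mean(cluster_tflops(num_params, tokens_per_step, s)
                for s in step_seconds_per_run)


# Hypothetical: a 7B-parameter model whose step takes 60 s on the whole cluster.
print(cluster_tflops(7_000_000_000, TOKENS_PER_STEP, 60.0))  # ≈ 1400
```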

To demonstrate Poplar's speedup, the team compares it against four baselines. Baseline 1 uses only the less powerful homogeneous GPUs, while baseline 2 uses only the more powerful ones. Baseline 3 uses DeepSpeed, an advanced distributed training framework, with manually assigned maximum batch sizes that satisfy the requirements. Baseline 4 is Whale, a state-of-the-art heterogeneous training system that provides heterogeneity-aware load balancing; its batch sizes are tuned to the maximum allowed by its strategy. Results on three real-world heterogeneous GPU clusters show that Poplar trained faster than all of these approaches.

The team intends to investigate using ZeRO in heterogeneous clusters with network constraints. They also plan to explore the possibility of an uneven distribution of model parameters among diverse devices, taking into account their memory sizes.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Poplar: A Distributed Training System that Extends Zero Redundancy Optimizer (ZeRO) with Heterogeneous-Aware Capabilities appeared first on MarkTechPost.
