Cross-lingual code clone detection has become an important and difficult task as modern software development grows more complex and a single project routinely mixes several programming languages. The term ‘cross-lingual code clone detection’ describes the process of finding functionally identical or nearly identical code segments written in different programming languages.

Recent advances in Artificial Intelligence and Machine Learning, especially the introduction of Large Language Models (LLMs), have enabled tremendous progress on many computing tasks. Because of their exceptional Natural Language Processing abilities, LLMs have attracted attention for code-related tasks such as code clone detection. Building on these advances, a team of researchers from the University of Luxembourg has re-examined the problem of cross-lingual code clone detection and studied the effectiveness of both LLMs and pre-trained embedding models in this field.

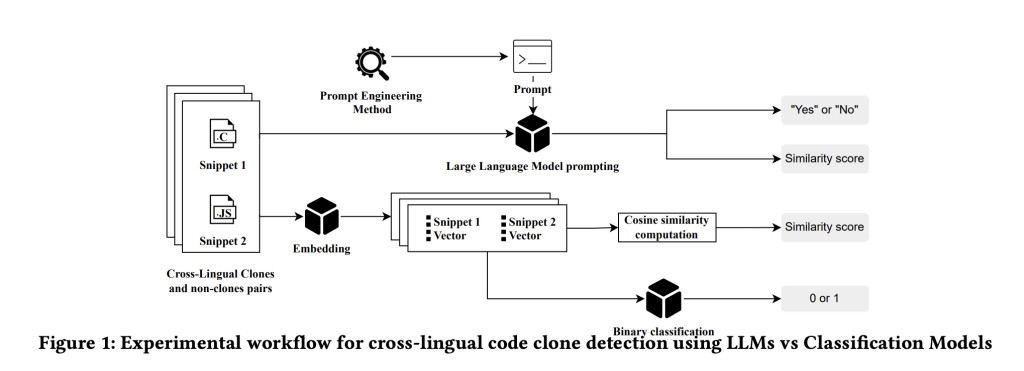

The research assesses the performance of four different LLMs in combination with eight distinct prompts designed to support the detection of cross-lingual code clones. It also evaluates a pre-trained embedding model that produces vector representations of code fragments; pairs of code fragments are then classified as clones or non-clones based on these representations. The evaluations use two popular cross-lingual datasets, CodeNet and XLCoST.
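To make the LLM side of this setup more concrete, here is a minimal sketch of how a clone-detection prompt might be assembled and how a free-text reply might be mapped to a clone or non-clone label. The two templates and the helper names (`SIMPLE_PROMPT`, `REASONING_PROMPT`, `build_prompt`, `parse_verdict`) are hypothetical illustrations; they are not the eight prompts used in the study, and no model is actually called.

```python
# Hypothetical prompt templates for illustration only; the study's own
# eight prompts are not reproduced here.
SIMPLE_PROMPT = (
    "Are the following two code snippets clones of each other? "
    "Answer yes or no.\n\n"
    "Snippet 1 ({lang_a}):\n{code_a}\n\n"
    "Snippet 2 ({lang_b}):\n{code_b}\n"
)

REASONING_PROMPT = (
    "Explain step by step what each snippet computes, then decide whether "
    "they solve the same problem. Finish with a final line containing only "
    "yes or no.\n\n"
    "Snippet 1 ({lang_a}):\n{code_a}\n\n"
    "Snippet 2 ({lang_b}):\n{code_b}\n"
)


def build_prompt(template, lang_a, code_a, lang_b, code_b):
    """Fill a template with two code fragments and their language names."""
    return template.format(
        lang_a=lang_a, code_a=code_a, lang_b=lang_b, code_b=code_b
    )


def parse_verdict(response):
    """Read the last non-empty line of a reply.

    Returns True (clone), False (non-clone), or None if the reply
    does not end in a clear yes or no.
    """
    lines = [line for line in response.splitlines() if line.strip()]
    if not lines:
        return None
    word = lines[-1].strip().lower().strip(".!, ")
    if word == "yes":
        return True
    if word == "no":
        return False
    return None


java_code = "int add(int a, int b) { return a + b; }"
python_code = "def add(a, b):\n    return a + b"
prompt = build_prompt(SIMPLE_PROMPT, "Java", java_code, "Python", python_code)
print(prompt)
print(parse_verdict("Both snippets add two numbers.\nYes."))
```

Returning `None` for ambiguous replies keeps unparseable answers separate from genuine non-clone verdicts, which matters when scoring a model over a whole dataset.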

The study’s findings show both the strengths and the limitations of LLMs in this setting. On simple programming examples such as those in the XLCoST dataset, the LLMs attained high F1 scores, up to 0.98. On more difficult programming tasks, however, their performance dropped. This decline suggests that LLMs may struggle to fully grasp the subtle meaning of code clones, especially in a cross-lingual context where understanding the functional equivalence of code across languages is crucial.
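For readers less familiar with the metric, the F1 score is the harmonic mean of precision and recall over the clone class. The sketch below computes it from scratch on a small made-up set of labels; the function name `f1_score` and the toy data are illustrative assumptions and have nothing to do with the study's actual predictions.

```python
def f1_score(y_true, y_pred):
    """F1 for the positive (clone) class: harmonic mean of precision and recall."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t and p)       # clones found
    fp = sum(1 for t, p in zip(y_true, y_pred) if not t and p)   # false alarms
    fn = sum(1 for t, p in zip(y_true, y_pred) if t and not p)   # clones missed
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Toy labels: 1 = clone pair, 0 = non-clone pair (made up for illustration).
y_true = [1, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0]
score = f1_score(y_true, y_pred)
print(round(score, 3))
```

In this toy case two of three clones are found and one non-clone is wrongly flagged, so precision and recall are both 2/3 and so is F1.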

In contrast, the research shows that embedding models, which represent code fragments from many programming languages within a single vector space, offer a stronger basis for identifying cross-lingual code clones. By training a basic classifier on these embeddings, the researchers attained results that surpassed all evaluated LLMs, with an improvement of about two percentage points on the XLCoST dataset and about 24 percentage points on the more complex CodeNet dataset.
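The general idea of classifying pairs from embeddings in a shared vector space can be sketched in a few lines. The example below is a deliberately simplified stand-in, not the classifier trained in the study: it assumes each code fragment has already been turned into a vector by some embedding model, uses hand-made three-dimensional toy vectors instead of real embeddings, and learns only a single cosine-similarity cut-off. The names `cosine`, `fit_threshold`, and `predict` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def fit_threshold(pairs, labels):
    """Pick the similarity cut-off that maximizes accuracy on the training pairs."""
    sims = [cosine(u, v) for u, v in pairs]
    ordered = sorted(set(sims))
    # Candidate cut-offs: midpoints between neighbouring similarity values.
    candidates = [(a + b) / 2 for a, b in zip(ordered, ordered[1:])] or ordered
    best_t, best_acc = 0.0, -1.0
    for t in candidates:
        acc = sum((s >= t) == bool(y) for s, y in zip(sims, labels)) / len(labels)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t


def predict(u, v, threshold):
    """Label a pair as a clone when its embeddings are similar enough."""
    return cosine(u, v) >= threshold


# Toy "embeddings" (made up); in practice these would come from a
# pre-trained code embedding model.
train_pairs = [
    ([1.0, 0.0, 0.2], [0.9, 0.1, 0.3]),  # clone
    ([0.1, 1.0, 0.0], [0.2, 0.9, 0.1]),  # clone
    ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]),  # non-clone
    ([0.5, 0.5, 0.0], [0.0, 0.1, 1.0]),  # non-clone
]
train_labels = [1, 1, 0, 0]

threshold = fit_threshold(train_pairs, train_labels)
print(predict([0.8, 0.1, 0.2], [1.0, 0.0, 0.1], threshold))
```

Because the decision depends only on vector geometry, the same classifier applies to any language pair the embedding model covers, which is the language-neutral property the paragraph above describes.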

The team has summarized their primary contributions as follows.

The work broadly analyzes the ability of LLMs to identify cross-lingual code clones, with a particular emphasis on Java combined with ten distinct programming languages. It applies several LLMs to cross-lingual datasets and assesses the effects of several prompt engineering methods, providing a distinct viewpoint compared with previous research.

The study offers insight into how well LLMs perform in code clone detection. It highlights how strongly the similarity between two programming languages influences LLMs’ ability to identify clones, particularly when they are given simple prompts. The effect of programming language differences diminishes when prompts focus on reasoning and logic. The study also discusses the generalisability and universal effectiveness of LLMs in cross-lingual code clone detection tasks.

The study contrasts LLM performance with traditional ML techniques built on learned code representations. The experimental findings indicate that LLMs may not fully understand the meaning of clones in the context of code clone detection, suggesting that conventional techniques may still be superior in this regard.

In conclusion, the results imply that while LLMs are highly capable, especially on simple code examples, they might not be the most effective method for cross-lingual code clone detection, particularly in more complex cases. Embedding models, on the other hand, are better suited to attaining state-of-the-art performance in this domain because they provide consistent and language-neutral representations of code.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Embeddings or LLMs: What’s Best for Detecting Code Clones Across Languages? appeared first on MarkTechPost.
