Deploying large language models (LLMs) has become a significant challenge for developers and researchers. As LLMs grow in complexity and size, ensuring they run efficiently across different platforms, such as personal computers, mobile devices, and servers, is daunting. The problem intensifies when trying to maintain high performance while optimizing the models to fit within the limitations of various hardware, including GPUs and CPUs.

Traditionally, solutions have focused on using high-end servers or cloud-based platforms to handle the computational demands of LLMs. While effective, these methods often come with significant costs and resource requirements. Additionally, deploying models to edge devices, like mobile phones or tablets, remains a complex process, requiring expertise in machine learning and hardware-specific optimization techniques.

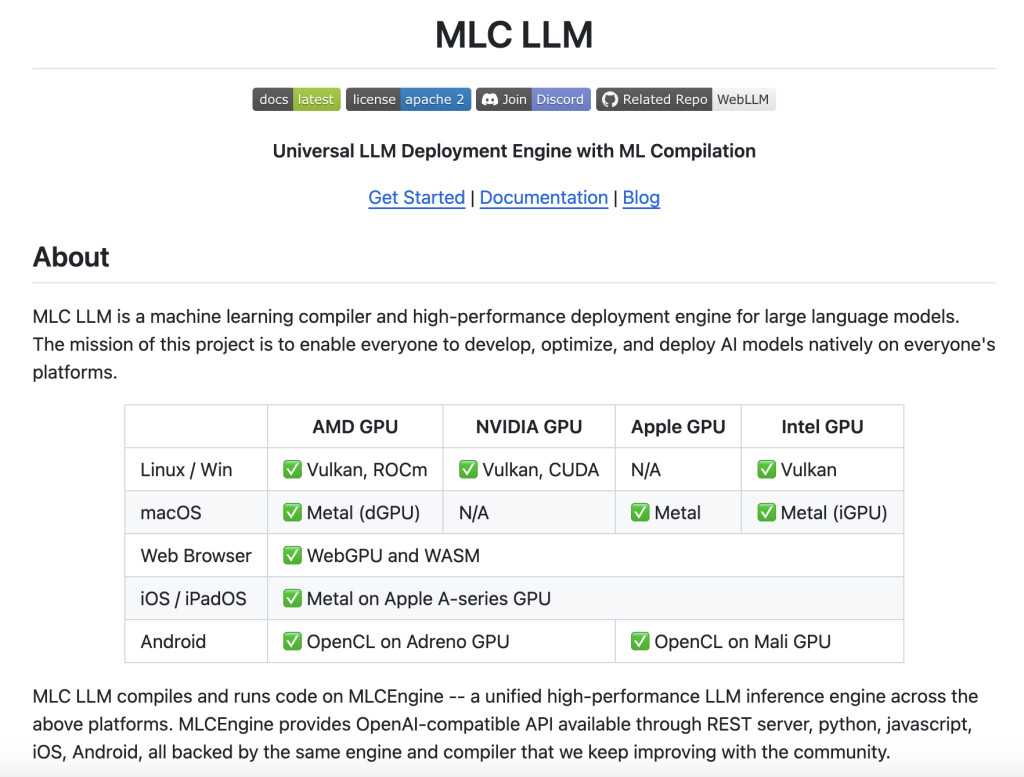

MLC LLM is a machine learning compiler and deployment engine that takes a different approach to these challenges. It is designed to optimize and deploy LLMs natively across multiple platforms, which simplifies running complex models on diverse hardware setups. As a result, users can deploy LLMs without extensive machine learning or hardware optimization expertise.

MLC LLM provides several key features. It supports quantized models, which store weights at lower numeric precision to reduce model size without significantly degrading output quality. This is crucial for deploying LLMs on devices with limited memory and computational resources. MLC LLM also includes tools for automatic model optimization, applying techniques from machine learning compilers so that models run efficiently on various GPUs, CPUs, and even mobile devices. Finally, the platform offers a command-line interface, a Python API, and a REST server, making it flexible and easy to integrate into different workflows.
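To make the size reduction from quantization concrete, here is a minimal sketch of symmetric group-wise quantization in plain Python. It is an illustration of the general technique only, not MLC LLM's actual implementation: the function names, the group size, the 4-bit width, and the 16-bit per-group scale are all assumptions chosen for the example.

```python
from typing import List, Tuple

Group = Tuple[List[int], float]  # (integer codes, scale) for one group


def quantize_group(weights: List[float], bits: int = 4) -> Group:
    """Symmetrically quantize one group of weights to signed integer codes."""
    qmax = 2 ** (bits - 1) - 1  # largest code, e.g. 7 for 4 bits
    max_abs = max(abs(w) for w in weights)
    # One scale per group; fall back to 1.0 when the group is all zeros.
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return codes, scale


def quantize(weights: List[float], group_size: int = 4, bits: int = 4) -> List[Group]:
    """Split the weights into fixed-size groups and quantize each one."""
    return [
        quantize_group(weights[start:start + group_size], bits)
        for start in range(0, len(weights), group_size)
    ]


def dequantize(groups: List[Group]) -> List[float]:
    """Recover approximate weights by multiplying each code by its group scale."""
    restored: List[float] = []
    for codes, scale in groups:
        restored.extend(code * scale for code in codes)
    return restored


def storage_bits(num_weights: int, group_size: int, bits: int, scale_bits: int = 16) -> int:
    """Bits needed for the codes plus one scale per group (hypothetical layout)."""
    num_groups = -(-num_weights // group_size)  # ceiling division
    return num_weights * bits + num_groups * scale_bits


if __name__ == "__main__":
    weights = [0.7, -0.35, 0.1, 0.0, 1.4, -1.4, 0.2, 0.05]
    groups = quantize(weights, group_size=4, bits=4)
    print("codes and scales:", groups)
    print("restored weights:", dequantize(groups))
    print("bits at 16-bit precision:", len(weights) * 16)
    print("bits after quantization:", storage_bits(len(weights), 4, 4))
```

Each restored weight differs from the original by at most half of its group's scale, which is why keeping groups small limits the accuracy loss. Under these assumptions, 4,096 weights in groups of 32 need 18,432 bits instead of 65,536 at 16-bit precision, roughly a 3.5x reduction.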

In conclusion, MLC LLM provides a robust framework for deploying large language models across different platforms. By simplifying optimization and deployment, it opens up a broader range of applications, from high-performance computing environments to edge devices. As LLMs evolve, tools like MLC LLM will be essential in making advanced AI accessible to more users and use cases.

The post MLC LLM: Universal LLM Deployment Engine with Machine Learning ML Compilation appeared first on MarkTechPost.
