In today’s rapidly advancing world of generative artificial intelligence (AI), businesses across diverse industries are transforming customer experiences through the power of real-time search. By harnessing the untapped potential of unstructured data ranging from text to images and videos, organizations are able to redefine the standards of engagement and personalization.

A key component of this transformation is vector search, which helps unlock the semantic meaning of data. Real-time vector search returns contextually relevant results instantly, enhancing user experience and operational effectiveness. This technology allows companies to provide hyper-personalized recommendations, detect fraud with high confidence, and deliver timely, context-aware content that resonates with users. For instance, e-commerce platforms today may rebuild their personalized recommendation widgets only once a day, which means they can miss the chance to show relevant recommendations after a new event occurs, such as a customer purchase. With vector search for MemoryDB, customers can power these recommendation engines with real-time vector search, where both updates to the vector database and searches against it complete within single-digit milliseconds. This ensures the recommendation engines have the most recent data, such as a customer’s recent purchases, thereby improving the quality of the user experience through more relevant content delivered within milliseconds.

Amazon MemoryDB delivers the fastest vector search performance at the highest recall rates among popular vector databases on AWS. As such, MemoryDB excels in these scenarios due to its high-performance in-memory computing capabilities and Multi-AZ durability, which allows it to store millions of vectors while maintaining minimal latencies at high levels of recall. With its in-memory performance, MemoryDB powers real-time semantic search for Retrieval Augmented Generation (RAG), fraud detection or personalized recommendations, and durable semantic caching to optimize generative AI applications. This capability not only solves the problem of handling data in real time, but also makes sure the data remains highly available and durable across multiple Availability Zones.

In this post, we explore how to use MemoryDB for performing a vector search in an e-commerce use case to create a real-time semantic search engine.

Vector search for MemoryDB

Vectors are numerical representations of data and its meaning. Created using machine learning (ML), they capture complex relationships for applications such as information retrieval and image detection.

Vector search finds similar data points using distance metrics, but nearest neighbor queries can be computationally intensive. Approximate nearest neighbor (ANN) algorithms balance accuracy and speed by searching subsets of vectors.

An in-memory database with vector search capabilities like MemoryDB allows you to store vectors in memory along with the index. This significantly reduces the latency associated with index creation and updates. As new data is added or existing data is updated, MemoryDB updates the search index in single-digit milliseconds, so subsequent queries have access to the latest results in near real time. These near real-time index updates minimize downtime, ensuring search results are both current and relevant despite the computational intensity of the overall indexing process. By handling tens of thousands of vector queries per second, MemoryDB provides the necessary performance to support high-throughput applications without compromising on search relevancy or speed.

In MemoryDB, you have a choice of two indexing algorithms:

Flat: A brute force algorithm that processes each vector linearly, yielding exact results but with high run times for large indexes.

HNSW (Hierarchical Navigable Small World): An approximation algorithm with lower execution times, balancing memory, CPU usage, and recall quality.
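To make the Flat option concrete, the following is a minimal pure-Python sketch of exact nearest neighbor search with cosine distance. It is an illustration of the idea only, not MemoryDB's implementation; the function names and the toy two-dimensional vectors are assumptions made for this example, and the vectors are assumed to be non-zero.

```python
import math

def cosine_distance(a, b):
    """Return 1 - cosine similarity for two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def flat_knn(query, vectors, k):
    """Exact k-nearest-neighbor search: score every stored vector, keep the k closest."""
    scored = [(cosine_distance(query, vec), key) for key, vec in vectors.items()]
    scored.sort()
    return [key for _, key in scored[:k]]

# Toy 2-dimensional "embeddings"; real embeddings have hundreds of dimensions.
vectors = {
    "car": [1.0, 0.1],
    "vehicle": [0.9, 0.2],
    "banana": [0.0, 1.0],
}
nearest = flat_knn([1.0, 0.0], vectors, k=2)
print(nearest)
```

Because every stored vector is scored, the cost grows linearly with the size of the index. HNSW avoids that full scan by walking a layered graph, at the price of approximate results.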

When executing a search in MemoryDB, you’ll be leveraging the FT.SEARCH and FT.AGGREGATE commands. These commands rely on a query expression, which is a single string parameter composed of one or more operators. Each operator targets a specific field in the index to filter a subset of keys. To refine your search results, you can combine multiple operators using boolean combiners (such as AND, OR, and NOT) and parentheses to group conditions. This flexibility allows you to enhance or restrict your result set, ensuring you extract precisely the data you need efficiently and effectively.

Hybrid filtering with MemoryDB

MemoryDB supports hybrid filtering, which filters vectors either before or after the vector search is conducted. Hybrid filtering offers the following benefits:

Narrowing down the search space – You can filter the vector space with numeric- or tag-based filtering before applying the more computationally intensive semantic search to eliminate irrelevant documents or data points. This reduces the volume of data that needs to be processed, improving efficiency. This process is known as pre-filtering.

Improving relevance – By applying filters based on metadata, user preferences, or other criteria, pre-filtering makes sure that only the most relevant documents are considered in the semantic search. This leads to more accurate and contextually appropriate results.

Combining structured and unstructured data – Hybrid filtering can merge structured data filters (like tags, categories, or user attributes), stored as metadata associated with the vectors, with the unstructured data processing capabilities of semantic search. This provides a more holistic approach to finding relevant information.

Hybrid queries combine vector search with filtering capabilities like NUMERIC and TAG filters, simplifying application code. In the following example, we find the nearest 10 vectors that also have a quantity field within 0–100:
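A numeric hybrid query of this kind can be sketched as follows. The index name idx, the vector field vec, and the parameter name BLOB are assumptions for illustration, and the query blob is a placeholder rather than a real embedding.

```python
def numeric_knn_query(field, low, high, k, vector_field, param):
    """Build a query expression that applies a NUMERIC range filter to a KNN search."""
    return f"@{field}:[{low} {high}]=>[KNN {k} @{vector_field} ${param}]"

query = numeric_knn_query("quantity", 0, 100, 10, "vec", "BLOB")

# Placeholder query vector: one little-endian float32 value (1.0).
# A real query passes the full packed embedding here.
query_blob = b"\x00\x00\x80\x3f"

# Argument list for FT.SEARCH; "idx" is a hypothetical index name.
search_args = ["FT.SEARCH", "idx", query, "PARAMS", 2, "BLOB", query_blob, "DIALECT", 2]
print(query)
```

With a connected redis-py client, the argument list could be sent with client.execute_command(*search_args).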

In this next example, we confine our search to only vectors that are tagged with a City of New York or Boston:
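A TAG-filtered query can be sketched in the same way. The field name city, the vector field vec, and the parameter name BLOB are assumptions for illustration; escaping rules for tag values that contain spaces or punctuation should be checked against the MemoryDB Developer Guide.

```python
def tag_knn_query(field, tags, k, vector_field, param):
    """Build a query expression that applies a TAG filter (any of the tags) to a KNN search."""
    values = " | ".join(tags)
    return f"@{field}:{{{values}}}=>[KNN {k} @{vector_field} ${param}]"

query = tag_knn_query("city", ["New York", "Boston"], 10, "vec", "BLOB")
print(query)
```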

Solution overview: Semantic search with MemoryDB

Vector search enables users to find text that is semantically or conceptually similar. For example, it can recognize the similarity between words like “car” and “vehicle,” which are conceptually related but linguistically different. This is possible because vector search indexes and executes queries using numeric representations of unstructured, semi-structured, and structured content. The same technique applies to visual search, where items with slight variations can be detected as similar, for example, a white dress with yellow dots and a white dress with yellow squares.

In the use case of a customer purchasing a headset from Amazon, for example, a traditional database query could provide general characteristics to the user, such as the product title, description, and other features. However, when a user has a question about the headset, you may want to use vector search to find past questions that were semantically similar and return the associated answers. Although natural language processing (NLP) and pattern matching have been common approaches in these types of searches, vector search offers a new and often more straightforward methodology.

In this post, we load the Amazon-PQA dataset (Amazon product questions and their answers, along with the public product information) for search. We can then run a search to identify whether a user’s question has been asked before and retrieve relevant product data.

To generate vector embeddings for this data, we can use an ML service such as Amazon SageMaker or Amazon Bedrock embedding models. SageMaker allows you to effortlessly train and deploy ML models, including models that generate vectors for text data. Amazon Bedrock provides an effortless way to build and scale generative AI applications with foundation models (FMs).

The following sections provide a step-by-step demonstration to perform a vector search with a sample dataset. The workflow steps are as follows:

Create a MemoryDB cluster.

Load the sample product dataset.

Generate vectors using sentence transformers and use MemoryDB to store the raw text (question and answer) and vector representations of the text.

Use the transformers to encode the query text into your vectors.

Use MemoryDB to perform vector search.

Prerequisites

For this walkthrough, you should have an AWS account with the appropriate AWS Identity and Access Management (IAM) permissions to launch the solution.

Create a MemoryDB cluster

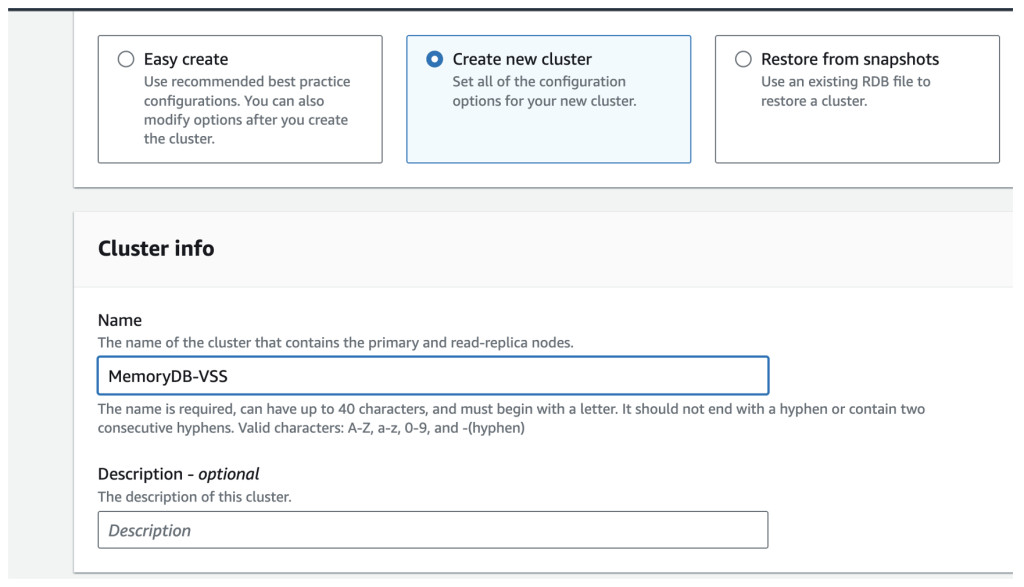

Complete the following steps to create a MemoryDB cluster with vector search capabilities:

On the MemoryDB console, choose Clusters in the navigation pane.

Choose Create cluster.

For Name, enter a name for your cluster.

Select Enable vector search.

To create a MemoryDB cluster through the AWS Command Line Interface (AWS CLI), use the following code:
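As a Python alternative to the AWS CLI, the same request can be sketched with the AWS SDK for Python (boto3). This is a sketch under assumptions, not the post's original command: the cluster name, node type, and ACL name are hypothetical values, and the parameter group name shown is an assumption about the default vector search parameter group that you should verify in the MemoryDB Developer Guide.

```python
def create_cluster_request(cluster_name, node_type, acl_name):
    """Build the keyword arguments for the MemoryDB create_cluster API call."""
    return {
        "ClusterName": cluster_name,
        "NodeType": node_type,
        "ACLName": acl_name,
        "EngineVersion": "7.1",  # version named in this post for vector search
        # Assumption: name of the default parameter group with vector search enabled.
        "ParameterGroupName": "default.memorydb-redis7.search",
    }

def create_vector_cluster(cluster_name, node_type, acl_name):
    """Send the request. Requires boto3 and AWS credentials with MemoryDB permissions."""
    import boto3  # imported lazily so the request builder works without the AWS SDK
    client = boto3.client("memorydb")
    return client.create_cluster(**create_cluster_request(cluster_name, node_type, acl_name))

# Hypothetical values for illustration only.
request = create_cluster_request("my-vector-cluster", "db.r7g.large", "open-access")
print(request)
```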

After the cluster is successfully provisioned, you can initiate the storage of vectors in the database and conduct searches as needed. For more details, refer to the Amazon MemoryDB Developer Guide. We test connectivity to the MemoryDB cluster provisioned using the redis-py client library in the following example:
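A connectivity check with redis-py might look like the following sketch. It assumes TLS is enabled on the cluster and that the endpoint is reachable from where the code runs; the helper names are hypothetical. For a cluster with more than one shard, redis-py's RedisCluster client may be the better fit.

```python
def connection_options(endpoint, port=6379):
    """Connection settings for a TLS-enabled cluster endpoint."""
    return {
        "host": endpoint,
        "port": port,
        "ssl": True,
        # Keep responses as bytes because stored vectors are binary blobs.
        "decode_responses": False,
    }

def connect(endpoint):
    """Open a connection and verify it with PING. Requires the redis package."""
    import redis  # imported lazily so the options helper works without redis-py
    client = redis.Redis(**connection_options(endpoint))
    client.ping()  # raises an exception if the cluster is unreachable
    return client

options = connection_options("your-cluster-endpoint")
print(options)
```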

Load the sample dataset

The following code demonstrates how to retrieve JSON data from the registry and load it into a data frame:
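A minimal loader could be sketched as follows, assuming the dataset is stored as one JSON object per line and that each record carries question_text and an answers list with answer_text entries. These field names are assumptions about the dataset layout and should be checked against the actual file.

```python
import io
import json

def load_pqa(lines, number_rows=1000):
    """Read up to number_rows question/answer pairs from JSON-lines input."""
    rows = []
    for line in lines:
        if len(rows) >= number_rows:
            break
        record = json.loads(line)
        answers = record.get("answers") or []
        if not answers:
            continue  # skip questions that have no answer
        rows.append({
            "question": record["question_text"],
            "answer": answers[0]["answer_text"],
        })
    return rows

# Small in-memory sample standing in for the real dataset file.
sample = io.StringIO(
    '{"question_text": "Does it have a mic?", "answers": [{"answer_text": "Yes."}]}\n'
    '{"question_text": "Is it wireless?", "answers": []}\n'
)
rows = load_pqa(sample)
print(rows)
```

Passing an open file handle instead of the sample works the same way, and pandas.DataFrame(rows) turns the result into a data frame.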

For this post, we load the first 1,000 rows of the dataset.

Generate the vectors and use MemoryDB as a vector store

We load the question, answer, and vectors from this dataset into MemoryDB as hashes. MemoryDB also accommodates JSON data structures.

We use Hugging Face embedding models (all-distilroberta-v1) to generate vectors for product QA data. The following code is an example of how to implement it in our application:
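The embedding step can be sketched as below, assuming the sentence-transformers package and the all-distilroberta-v1 model, which produces 768-dimensional vectors. The helper names are hypothetical, and embed_texts accepts any object with an encode method so it can be exercised without downloading the model.

```python
def load_model():
    """Load the embedding model. Requires the sentence-transformers package."""
    from sentence_transformers import SentenceTransformer  # lazy import
    return SentenceTransformer("sentence-transformers/all-distilroberta-v1")

def embed_texts(model, texts):
    """Encode a list of strings and return plain Python lists of floats."""
    return [[float(value) for value in vector] for vector in model.encode(texts)]

class StubModel:
    """Stand-in encoder used only to demonstrate the call shape."""
    def encode(self, texts):
        return [[0.5] * 768 for _ in texts]

vectors = embed_texts(StubModel(), ["Does it have a mic?", "Is it wireless?"])
print(len(vectors), len(vectors[0]))
```

In the application, embed_texts(load_model(), questions) would replace the stub.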

When storing per-vector metadata in MemoryDB, the hash data structure is efficient for simple metadata needs. However, if your metadata is complex or varies significantly, JSON might be a better choice. Below is an example code block that demonstrates how to create vector embeddings and store them as hashes in MemoryDB:
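Storing the rows as hashes might look like the following sketch. Vectors are packed as little-endian FLOAT32 bytes to match the index definition used in this post; the pqa: key prefix and the helper names are assumptions for illustration.

```python
import struct

def to_float32_bytes(vector):
    """Pack a sequence of floats into little-endian FLOAT32 bytes."""
    return struct.pack(f"<{len(vector)}f", *vector)

def store_rows(client, rows, vectors, prefix="pqa:"):
    """Write each question, answer, and vector as one hash, batched in a pipeline."""
    pipe = client.pipeline(transaction=False)
    for i, (row, vector) in enumerate(zip(rows, vectors)):
        pipe.hset(f"{prefix}{i}", mapping={
            "question": row["question"],
            "answer": row["answer"],
            "question_vector": to_float32_bytes(vector),
        })
    pipe.execute()

blob = to_float32_bytes([1.0, 0.0, -1.0])
print(len(blob))
```

Each FLOAT32 value takes 4 bytes, so a 768-dimensional vector is stored as a 3,072-byte field.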

The next step is to create an index for the dataset so that you can perform vector search. Vector search is based on the creation, maintenance, and use of indexes. Each vector search operation specifies a single index and its operation is confined to that index (operations on one index are unaffected by operations on any other index). Except for the operations to create and destroy indexes, any number of operations may be issued against any index at any time, meaning that at the cluster level, multiple operations against multiple indexes may be in progress simultaneously.

Individual indexes are named objects that exist in a unique namespace, which is separate from the other Redis namespaces such as keys and functions.

In this example, we create the index and load the data as hashes into MemoryDB:
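The index creation can be sketched as an FT.CREATE argument list that follows the fields described in this post. The ON HASH and PREFIX clauses, the pqa: prefix, and the M value of 16 (the documented default) are assumptions added for this example rather than values confirmed by the post.

```python
def build_create_index_args(index_name="idx:pqa", prefix="pqa:", dim=768, m=16):
    """Build the FT.CREATE arguments for a hash index with one HNSW vector field."""
    hnsw_attributes = [
        "TYPE", "FLOAT32",
        "DIM", dim,
        "DISTANCE_METRIC", "COSINE",
        "INITIAL_CAP", 1000,
        "M", m,
    ]
    return [
        "FT.CREATE", index_name,
        "ON", "HASH", "PREFIX", 1, prefix,  # assumption: index only keys starting with the prefix
        "SCHEMA",
        "question", "TEXT",
        "answer", "TEXT",
        # The number after HNSW is the count of attribute tokens that follow.
        "question_vector", "VECTOR", "HNSW", len(hnsw_attributes), *hnsw_attributes,
    ]

args = build_create_index_args()
print(" ".join(str(a) for a in args))
```

With a connected redis-py client, the command could be issued with client.execute_command(*args).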

Let’s break down what this command does:

FT.CREATE idx:pqa – FT.CREATE is the command used in MemoryDB to create a new search index. Here it creates a new index named idx:pqa.

SCHEMA – The schema defines a list of field definitions in the index. Each definition is essentially a key-value pair where the key is the name of the field followed by a permitted value for that field. Some field types have additional optional specifiers. We use the following parameters:

question TEXT answer TEXT defines two additional text fields in your index named question and answer.

question_vector is the field name for storing the vector data.

VECTOR specifies that the index will store vector data.

HNSW (Hierarchical Navigable Small World) is an algorithm used for efficient vector search.

TYPE FLOAT32 indicates the data type of each dimension in the vector.

DIM 768 sets the number of dimensions of the vector to 768. This should match the dimensionality of the vectors generated by your embedding model.

DISTANCE_METRIC COSINE sets the distance metric for comparing vectors. In MemoryDB, you also have the option to use Euclidean or Inner Product (Dot Product) metrics.

INITIAL_CAP 1000 pre-allocates memory for the index, resulting in reduced memory management overhead and increased vector ingestion rates. When not specified, this defaults to 1024.

M defines the maximum number of outgoing edges for each node in the graph at each layer. A higher M value can improve recall but may decrease overall query performance. On layer zero, the maximum number of outgoing edges will be 2M. The default value is 16, and it can go up to a maximum of 512.

Now that you have an index and the data in MemoryDB, you can run a search. A query is a two-step process. First, you convert your query into a vector, and then you perform a similarity search to find the most relevant answers.

Streamlit is an open source Python framework used to build interactive web applications. For this use case, we build our UI in Streamlit to allow users to ask questions on the headset data and see the relevant product ID and question answers:
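A minimal Streamlit front end could be sketched as follows. The search_fn argument is a hypothetical callable that takes the user's question and returns a list of dictionaries with question and answer keys; the layout is an assumption and not the post's original UI.

```python
def format_results(results):
    """Render search results as a numbered list of question and answer pairs."""
    lines = []
    for rank, item in enumerate(results, start=1):
        lines.append(f"{rank}. Q: {item['question']}\n   A: {item['answer']}")
    return "\n".join(lines)

def render_app(search_fn):
    """Draw the page. Requires the streamlit package; run with `streamlit run`."""
    import streamlit as st  # lazy import so format_results works without Streamlit
    st.title("Product Q&A semantic search")
    user_question = st.text_input("Ask a question about the headset")
    if user_question:
        st.text(format_results(search_fn(user_question)))

preview = format_results([{"question": "Does it have a mic?", "answer": "Yes."}])
print(preview)
```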

Run a vector search in MemoryDB

Now you can find questions and answers that are the most similar to the user’s question by comparing their vector representations. Because we are using HNSW, our search results will be approximations and may not be the same results as an exact nearest neighbor search. In this example, we choose k=5, finding the five most similar questions:
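The KNN query with k=5 can be sketched as an FT.SEARCH argument list. The parameter name vec_param is an assumption; the index and field names follow those used earlier in this post, and the all-zero query vector is a placeholder for a real embedding of the user's question.

```python
import struct

def build_knn_search_args(query_vector, k=5, index_name="idx:pqa"):
    """Build FT.SEARCH arguments that return the k nearest questions and answers."""
    blob = struct.pack(f"<{len(query_vector)}f", *query_vector)  # FLOAT32 bytes
    query = f"*=>[KNN {k} @question_vector $vec_param AS vector_score]"
    return [
        "FT.SEARCH", index_name, query,
        "RETURN", 3, "question", "answer", "vector_score",
        "PARAMS", 2, "vec_param", blob,
        "LIMIT", 0, k,
        "DIALECT", 2,
    ]

# Placeholder query vector with the same dimensionality as the index (768).
args = build_knn_search_args([0.0] * 768)
print(args[2])
```

With a connected redis-py client, client.execute_command(*args) would send the query. The leading * means no pre-filter is applied before the KNN step.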

The results returned are the top k documents that have the highest similarity (as indicated by vector_score) to the query vector, along with their respective question and answer fields. The following screenshot shows an example.

Clean up

After you have completed the walkthrough, remove the AWS resources that you created in your account to stop incurring costs. This section walks you through the steps to delete these resources.

Delete MemoryDB clusters provisioned for the test.

Remove all applications deployed for testing.

Conclusion

MemoryDB delivers the fastest vector search performance at the highest levels of recall among popular vector databases on AWS, making it a strong choice for generative AI applications on AWS. MemoryDB empowers businesses to transform their operations with high-speed, high-relevancy vector searches, supporting real-time search applications and robust recommendation engines. This capability opens up new possibilities for innovation, helping organizations enhance user experiences, enabling relevant responses essential for applications like chatbots, real-time recommendation engines, and anomaly detection.

To learn more about vector search for MemoryDB, refer to vector search. To get started, visit our GitHub repository to find the code in this post as well as additional examples with LangChain.

Vector search for MemoryDB is available in all AWS Regions where MemoryDB is available at no additional cost. To get started, create a new MemoryDB cluster using MemoryDB version 7.1 and enable vector search through the MemoryDB console or the AWS CLI.

About the authors

Sanjit Misra is a Senior Technical Product Manager on the Amazon ElastiCache and Amazon MemoryDB team based in Seattle, WA. For the last 15 years, he has worked in product and engineering roles related to data, analytics, and AI/ML. He has an MBA from Duke University and a bachelor’s degree from the University of North Carolina, Chapel Hill.

Lakshmi Peri is a Sr. Solutions Architect on Amazon ElastiCache and Amazon MemoryDB. She has more than a decade of experience working with various NoSQL databases and architecting highly scalable applications with distributed technologies. Lakshmi has a particular focus on vector databases, and AI recommendation systems.

CJ Chittajallu is a Technical Program Manager for Amazon ElastiCache and Amazon MemoryDB. He has an extensive background in cloud engineering, management consulting, and digital transformation strategy. He is passionate about cloud and AI/ML.
