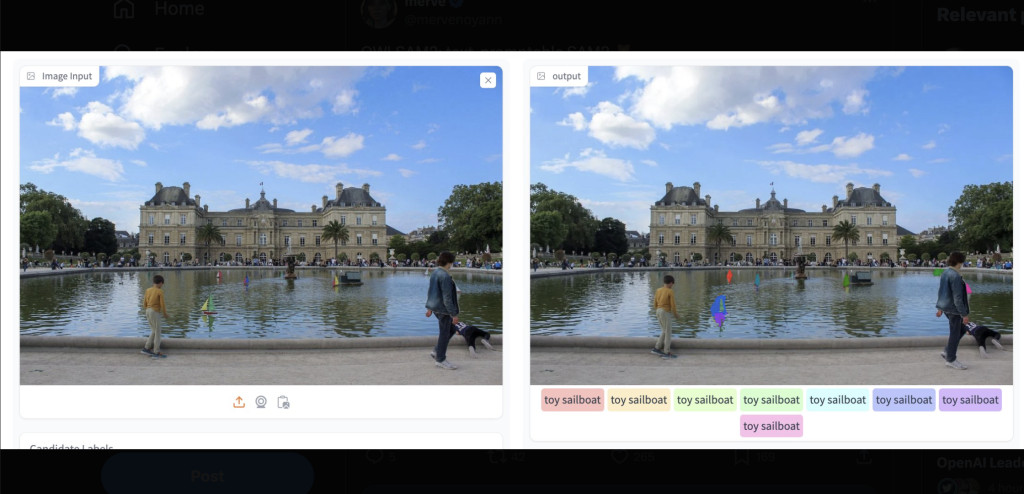

Meet OWLSAM2: a project that combines the zero-shot object detection capabilities of OWLv2 with the mask generation capabilities of SAM2 (Segment Anything Model 2). The result is a text-promptable segmentation model: describe an object in words, and OWLSAM2 segments it.

At the heart of OWLSAM2 is the integration of OWLv2 and SAM2, two models that are strong in their respective domains. OWLv2 is an open-vocabulary object detector: it can locate objects in an image from free-form text queries, without being trained on labeled examples of those specific categories. Large-scale language-image pre-training lets it match image regions to textual descriptions alone, which makes it applicable to a wide range of scenarios.
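OWL-style detectors compare every candidate box with every text query. One common way to turn those comparisons into detections, similar in spirit to the OWLv2 post-processing in Hugging Face transformers, is to keep each box's best-matching query, pass that logit through a sigmoid, and apply a score threshold. The minimal sketch below assumes the logits are already computed; the function name `score_open_vocab_detections` and the example numbers are hypothetical, not part of OWLv2 or OWLSAM2.

```python
import math


def score_open_vocab_detections(logits, boxes, queries, threshold=0.1):
    """Hypothetical helper: turn per-box, per-query logits into detections.

    logits:  one row per candidate box, one column per text query; each entry
             is a similarity logit between that box and that query.
    boxes:   one (x0, y0, x1, y1) box per row of `logits`.
    queries: the text prompts, e.g. ["red car", "dog"].
    """
    detections = []
    for box, row in zip(boxes, logits):
        # Assign each box to the text query it matches best.
        best = max(range(len(queries)), key=row.__getitem__)
        # A sigmoid maps that logit to an independent 0-1 confidence.
        score = 1.0 / (1.0 + math.exp(-row[best]))
        if score >= threshold:
            detections.append(
                {"label": queries[best], "score": score, "box": list(box)}
            )
    return detections


# Made-up logits for three candidate boxes and two text queries.
logits = [[2.0, -3.0], [-4.0, -5.0], [-1.0, 0.5]]
boxes = [(10, 10, 50, 60), (0, 0, 5, 5), (30, 40, 90, 120)]
dets = score_open_vocab_detections(logits, boxes, ["red car", "dog"], threshold=0.3)
for d in dets:
    print(d["label"], round(d["score"], 3), d["box"])
```

Because each score is an independent sigmoid rather than a softmax over queries, adding a new text prompt does not dilute the confidence of the others.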

SAM2, in turn, excels at mask generation, the core task in image segmentation. Despite its compact size, SAM2's small checkpoint produces precise masks that closely follow object boundaries. By combining the two models, OWLSAM2 delivers accurate and efficient zero-shot segmentation in a single text-promptable pipeline.
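Claims about mask precision are usually checked with intersection-over-union (IoU) between a predicted mask and a reference mask. The dependency-free sketch below computes IoU on binary masks stored as lists of rows and recovers a mask's tight bounding box, which is handy for comparing a mask with the box that prompted it. The helper names `mask_iou` and `mask_to_box` are hypothetical and not part of SAM2's API.

```python
def mask_iou(pred, target):
    """Hypothetical helper: IoU of two same-sized binary masks (lists of 0/1 rows)."""
    inter = union = 0
    for prow, trow in zip(pred, target):
        for p, t in zip(prow, trow):
            p, t = bool(p), bool(t)
            inter += p and t  # pixel is in both masks
            union += p or t   # pixel is in at least one mask
    # Two empty masks agree perfectly.
    return inter / union if union else 1.0


def mask_to_box(mask):
    """Hypothetical helper: tight (x0, y0, x1, y1) box, end-exclusive, or None if empty."""
    ys = [y for y, row in enumerate(mask) if any(row)]
    xs = [x for row in mask for x, v in enumerate(row) if v]
    if not ys:
        return None
    return (min(xs), min(ys), max(xs) + 1, max(ys) + 1)


pred = [[0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
target = [[0, 1, 1, 1], [0, 1, 1, 1], [0, 0, 0, 0]]
print(mask_iou(pred, target), mask_to_box(pred))
```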

One of OWLSAM2's most notable features is precise zero-shot segmentation. Zero-shot learning refers to a model's ability to handle new concepts without explicit training on those specific items. OWLv2's joint understanding of language and images, paired with SAM2's precise mask generation, allows OWLSAM2 to identify and segment objects from simple text prompts.
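The article does not spell out the wiring, but text-promptable pipelines of this kind typically work in two stages: the detector turns the text prompt into boxes, and each box is then passed to SAM2 as a box prompt. The sketch below shows that composition under this assumption. `text_prompted_segmentation`, `detect` and `segment_box` are hypothetical stand-ins, and the toy stubs replace OWLv2 and SAM2 so the example runs without model weights.

```python
from typing import Callable, Dict, List, Sequence

Box = Sequence[float]
Mask = List[List[int]]


def text_prompted_segmentation(
    image,
    prompts: List[str],
    detect: Callable[[object, List[str]], List[Dict]],
    segment_box: Callable[[object, Box], Mask],
    min_score: float = 0.3,
) -> List[Dict]:
    """Hypothetical two-stage pipeline: text -> boxes -> masks.

    `detect` stands in for an open-vocabulary detector such as OWLv2 and must
    return dicts with "label", "score" and "box" keys.
    `segment_box` stands in for a box-promptable segmenter such as SAM2.
    """
    results = []
    for det in detect(image, prompts):
        if det["score"] < min_score:
            continue  # skip weak detections before calling the segmenter
        mask = segment_box(image, det["box"])
        results.append({**det, "mask": mask})
    return results


# Toy stubs (assumptions, not real models) so the pipeline can run end to end.
def fake_detect(image, prompts):
    return [
        {"label": prompts[0], "score": 0.9, "box": (1, 0, 3, 2)},
        {"label": prompts[0], "score": 0.1, "box": (0, 0, 1, 1)},
    ]


def fake_segment(image, box):
    # Fills the box itself; a real segmenter would trace the object's outline.
    x0, y0, x1, y1 = box
    h, w = len(image), len(image[0])
    return [[int(x0 <= x < x1 and y0 <= y < y1) for x in range(w)] for y in range(h)]


image = [[0] * 4 for _ in range(3)]
out = text_prompted_segmentation(image, ["red car"], fake_detect, fake_segment)
for r in out:
    print(r["label"], r["score"], r["mask"])
```

Passing the detector and segmenter as callables keeps either stage swappable, for example a larger SAM2 checkpoint in place of the small one.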

This opens up applications in fields such as medical imaging, autonomous driving, and everyday image editing. Imagine prompting the model to find and segment "red cars" in a street scene or "tumors" in medical scans without first assembling a large pre-labeled dataset. That could substantially reduce the annotation effort these applications normally require.

Merve Noyan's goal with OWLSAM2 is to push the boundaries of what is possible in computer vision and machine learning. By combining the strengths of OWLv2 and SAM2, OWLSAM2 extends zero-shot object detection to precise, pixel-level masks. This integration makes it easier for researchers and practitioners to develop and deploy sophisticated image analysis solutions.

OWLSAM2 is designed with accessibility in mind. Because the model is promptable, users need little technical expertise to use it: a plain text description is enough to trigger segmentation, putting powerful image analysis tools within wider reach.

In conclusion, OWLSAM2 is a notable step forward for zero-shot object detection and mask generation. By harnessing the strengths of OWLv2 and SAM2, Merve Noyan has created a model that pairs precise masks with ease of use, offering a versatile and accessible tool for image analysis across many industries.

Check out the Demo here.


The post OWLSAM2: A Revolutionary Advancement in Zero-Shot Object Detection and Mask Generation by Combining OWLv2 with SAM2 appeared first on MarkTechPost.
