Enterprises across the globe are looking to organize, harness, and analyze data from multiple data sources to create insights to achieve timely, impactful, and actionable business decisions. Change Data Capture (CDC) is one of the most common design patterns to capture the changes made in a source database and relay them to a cloud data warehouse such as Amazon Redshift for analytics.

Amazon Aurora zero-ETL integration with Amazon Redshift is a fully managed solution that makes transactional data available in Amazon Redshift in near real time after being written to an Amazon Aurora MySQL-Compatible Edition database. With zero-ETL integration, you can run near real-time analytics and machine learning (ML) on petabytes of transactional data within Amazon Redshift.

We at Infosys, Infosys Center of Emerging Technology and Solutions (iCETS) and Data and Analytics (DNA), explored this new feature, including its setup, data preparation, use case identification and proof of concept build, comparison of the new feature with existing ETL (extract, transform, and load) jobs, metrics of zero-ETL, and feature improvements.

In this post, we talk about how Infosys redefined the ETL landscape for their product sales and freight management application using Aurora zero-ETL to Amazon Redshift. We also explain our experience with the old process and how the new zero-ETL integration helped us effortlessly move data into a Redshift cluster for analytics along with metrics to monitor the health of the integration.

Infosys: What we do?

The Infosys Data and Analytics team helps customers develop, migrate, and modernize their data platforms, and has delivered millions of ETL mappings and transformations over the last 15 years for more than 500 clients. The team’s main objective is to provide optimal solutions using the best tool combinations for each client, with the goal of minimizing the time spent on updating and maintaining the mappings, allowing clients to focus on extracting value from their data.

iCETS is the Emerging Technologies incubation partner for Infosys clients and service lines that provides NextGen service and offerings by accelerating innovation to futureproof enterprise businesses. We do so by building upon incubating NEXT (New Emerging eXploratory Technology) offerings, providing incubation as a service, being at the forefront with thought leadership, offering immersive client proofs of concept, and collaborating with leading universities and startups on the latest trends and technology.

Solution overview

In the world of data warehousing, ETL is a crucial process that enables organizations to collect and analyze data from various sources. However, ETL can be time-consuming and complex, often requiring significant resources and expertise. Aurora zero-ETL to Amazon Redshift is a game-changing solution that simplifies the ETL process and allows organizations to move data seamlessly from Aurora to Amazon Redshift without any ETL.

The zero-ETL integration of Aurora with Amazon Redshift now makes real-time insights on operational data possible with latency in seconds. You can integrate multiple Aurora databases and tables with Amazon Redshift without having to build complex pipelines to achieve the same outcome. This enables near real-time analytics and ML on petabytes of transactional data. Many ETL workloads can be simplified with this zero-ETL feature.

Zero-ETL uses Aurora enhanced binlog, which is a standalone feature of Amazon Aurora MySQL 3.x major version that provides improved throughput due to reduced compute contention and improved database recovery times. By writing binary logs and transactions to storage in parallel, enhanced binlog reduces the performance impact on the database source caused by enabling binlog. Refer to Amazon Aurora MySQL enhanced binary log for more details.

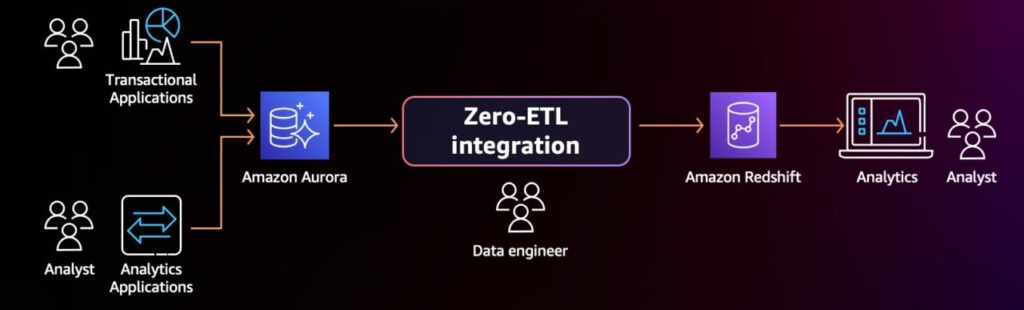

The following diagram shows a high-level architecture for Aurora zero-ETL integration with Amazon Redshift.

Figure 1 – Aurora zero-ETL integration with Amazon Redshift

Current ETL process

Enterprises often face the challenge of having transactional data scattered across various databases, making it difficult to support different use cases that require data consolidation and reporting. To address this challenge, they often resort to developing distinct procedures for extracting and consolidating data into a reporting database.

Traditionally, customers have been getting data from online transactional processing (OLTP) databases to analytical data warehouses using ETL pipelines. Any ETL pipeline between two databases also has to include processes for incremental updates and real-time processing, data type mappings, data transformations, error handling, and monitoring.

For large customers, these pipelines can amount to hundreds of ETL jobs running on a schedule or events with complex interdependencies.

There are many options to build an ETL pipeline for data replication between Amazon Relational Database Service (Amazon RDS) and Amazon Redshift. This pipeline is shown in following diagram in a simplified manner.

Figure 2 – A traditional ETL process

At a high-level, this setup involves the following steps:

Establish a connection between ETL system and Amazon Redshift and Amazon RDS.

Write code for ETL jobs to extract, transform, and load data. Common programming languages for these codes are python, pyspark, and Java. Most of the development efforts are spent in this build cycle.

When scheduled ETL job runs, it extracts the required dataset from source system, RDS.

The ETL job may hold data in some temporary storage location and work on it to make it as expected by the target system, Redshift.

Finally, the data is loaded in the Redshift as target.

For near real-time replication, you need to build a complex pipeline having CDC logic built in.

Use case 1: Product sale management

In this use case, a store management system uses details of products, stores, inventory, sales, and restocking inventory stored on an Aurora cluster. These tables represent the system analysis to identify highest valued products, top 10 sold products, stores that generate highest revenues based on time and products, inventory management in stores, and so on. We loaded these six tables with around 10,000 rows containing synthetic data generated using an Infosys application. We captured the metrics around this initial data replication into Amazon Redshift and measured latency for this bulk insert in the tables.

We observed that when data changes occurred in Aurora tables, the changes were immediately reflected in the Redshift cluster—inserts, updates, and deletes were replicated within milliseconds. We used the zero-ETL integration to demonstrate the real-time analysis that was possible: the highest priced products in a store, total revenue by quarter for each store, total sales by store and product, cumulated number of products returned per store by month, and so on.

With zero-ETL integration, data was replicated in near real time to a Redshift cluster, from which reports can be created internally by running queries. We also connected the Redshift cluster as a data source to Amazon QuickSight and created dashboards. Such dashboards can enable near real-time forecasting of changes in data, provide faster responses to any anomalies detected, track logistics and shipments, and so on.

We created the following dashboard on Amazon Redshift with the data that was replicated from Aurora. The product and store table were joined to view month-on-month revenue for the products in a store.

Figure 3 – Redshift dashboard reflecting changes in Aurora in near real time

We used Amazon Redshift as a data source in QuickSight to arrive at the following visualization dashboards.

Figure 4 – Quicksight dashboard showing the replicated data in Redshift

To demonstrate near real-time analytics, we introduced a new product (one7) in a store using an update to an Aurora table, which reflected immediately in Amazon Redshift and the QuickSight dashboard.

The following screenshot shows the data before the update in Aurora.

Figure 5 – Before the new product was added to Aurora in Quicksight

The following screenshot reflects the update with the new product listed.

Figure 6 – After the new product got added in Aurora, in near real time reflected in Quicksight dashboard

Use case 2: Freight management

Freight verification plays a crucial role in maintaining supply chain integrity. We used the shipment tracking module and carrier performance tracking reporting to test the zero-ETL functionality.

As part of this use case, we ingested the synthetic data related to shipment tracking and carrier performance to the source Aurora MySQL database and checked the real-time reporting performance of freight verification reports from Amazon Redshift.

The following figure shows how we tested the zero-ETL integration for the freight verification module to check the real-time tracking of orders. Few tables were initially loaded with 10,000 records.

Figure 7 – Freight management architecture

We completed the following steps:

Create the freight application database in Amazon Aurora MySQL.

Load the initial data.

Enable the zero-ETL integration to Amazon Redshift.

Create the target views for accessing the aggregated data and freight tracking details.

Continuously insert or update order details.

View the target reports.

Zero-ETL integration is a good choice for this use case because it simplified the real-time shipment tracking and enabled the access of real-time data along with other primary tables. It also helped create aggregate reports by using a materialized views approach.

Zero-ETL integration helped with the real-time ingestion of freight tracking data into the Redshift database to enable reporting related to the following:

Shipment tracking – The module allows real-time tracking of freight shipments, providing visibility into the location, status, and estimated time of arrival (ETA) of each shipment.

Freight analytics and reporting – The module provides comprehensive analytics and reporting capabilities, offering insights into freight-related metrics, trends, and performance. This information supports data-driven decision-making, optimization of freight operations, and cost reduction strategies.

The following figure shows a report providing real-time visibility if there are any gaps between planned and actual shipment deliveries.

Figure 8 – Planned and actual quantity by order date

The following screenshot shows an example of current day shipment tracking, which helps users with real-time order tracking using API calls and queries.

Figure 9 – Shipment tracking in Redshift as and when changes occur in Aurora

It also enables us to purge old transactional data, helping us control Amazon Redshift cost.

Monitoring the integration

The zero-ETL integration can be monitored directly from the target Redshift database. The details of replication are captured in system tables and views released as part of this integration, and it’s possible to configure alerts in case of any latency or failure issues.

We used two system integration views in Amazon Redshift to capture the metrics: SVV_INTEGRATION and SYS_INTEGRATION_ACTIVITY. A join on these two views can help derive the integration status:

SELECT SI. integration_id,

SI. target_database,

SI. source,

SI. state,

SI. current_lag,

SI. last_replicated_checkpoint,

SA. checkpoint_bytes,

SA modified_tables,

SA integration_end_time from SV_INTEGRATION SI

join SYS_INTEGRATION ACTIVITY SA on SI. integration_id=SA. integration_id;

The following screenshot shows the output of the previous query, and it primarily focuses on the replication details.

Figure 10 – SQL query output in Redshift

Currently, the lag and status details are available in system DB tables, the Amazon Redshift console, and Amazon CloudWatch. However, by using Lambda and Amazon Simple Notification Service (Amazon SNS), you can configure alerts for any failures or increase in the lag time to review the status of database.

Zero-ETL: Core test scenarios and metrics

The following core test scenarios and metrics form the foundation for validating the robustness and efficacy of zero-ETL in modern data architectures:

Bulk inserts – Use the initial load of Aurora to measure the time taken to load the data into Amazon Redshift. For our use case, 10,000 rows were loaded to Aurora. This initial bulk load was replicated in Amazon Redshift with 2,516–2,528 milliseconds of latency. Within this time frame, the entire 10,000 rows were replicated to Amazon Redshift.

Deletion of rows in Aurora – Delete operations are reflected in the Redshift tables.

Table updates and deletes in Aurora – DML operations are replicated in Amazon Redshift in near real time.

New tables are added to Aurora – All tables are instantly reflected in Amazon Redshift.

Run a Python script that inserts rows to an Aurora table every minute – This simulates a real-life scenario where in an application can constantly insert, update, and delete rows to an Aurora table during peak hours. We tested if the Aurora changes are reflected instantly in Amazon Redshift throughout. Data is replicated to Amazon Redshift with 1,560–1,570 milliseconds of latency.

Latency test – 2,568 milliseconds was the longest time taken so far to replicate data from source to target.

Duration for the zero-ETL integration to remain active – After you create the integration, it remains active until manually deleted. Most of the DDL changes are supported as a CDC or resync operation without breaking the integration.

Data replication – When the integration is active between Aurora and Amazon Redshift, data replication happens in near real time with millisecond latency.

Benefits of zero-ETL integration

The following are the advantages for the zero-ETL:

Ease of integration because there are no ETL jobs to develop and manage

Data is moved with minimal latency

Tables are created automatically on the Amazon Redshift side

Minimal impact on the performance of the transactional database

Ability to report across domains in near real time in the Redshift database

No incremental loads or other replications need to be enabled from the Aurora MySQL database

Historical or stale data can be copied to Amazon Simple Storage Service (Amazon S3) from Amazon Redshift to save costs

Comparing zero-ETL with a traditional Infosys ETL job

The following table compares a zero-ETL job with a traditional Infosys TEL job.

Features

Traditional ETL JOB

Zero-ETL

Using ETL jobs for integration

Replication is not real time

Near real-time replication with less than 2 seconds of latency

Latency difference between ETL and zero-ETL

An ETL job may take up to 2 minutes initially and then a few more seconds for replications

Based on the data in this scenario, Zero-ETL takes 2–3 seconds for data replication after setup

Data transformation before replication

Possible in regular ETL jobs

You can transform first with other AWS services such as AWS Glue and move using Zero ETL integration

Replication between multiple databases and tables

Have to set up as many ETL jobs as the number of tables or use custom frameworks for reusability

Multiple tables can be replicated as part of one

integration. Additionally, Data filtering allows you to choose the databases and tables to be replicated from Amazon Aurora MySQL to Amazon Redshift. You can apply multiple filters to the zero-ETL integration, allowing you to tailor the replication to your specific needs.

Use case considerations for Zero-ETL Integration

Zero-ETL integration aims to simplify the process of analyzing data stored in one system by seamlessly integrating it with another system, all without the need for intricate pipeline management. The zero-ETL concept underscores AWS’s commitment to streamline data analytics workflows and reduce the complexities associated with data integration. Notably, alongside our efforts with Aurora, AWS introduced several other zero-ETL integrations for Amazon Redshift, emphasizing our dedication to providing versatile solutions for data analysis and management.

Conclusion

In this post, we showed you how Infosys used Aurora zero-ETL in simplifying data integration and analysis. Embracing this feature allows organizations to unlock the full potential of their data, enabling you to stay ahead in today’s data-driven business landscape.

During AWS re: Invent 2023, AWS announced more zero-ETL integrations:

Announcing Amazon Aurora PostgreSQL zero-ETL integration with Amazon Redshift (Preview)

Announcing Amazon RDS for MySQL zero-ETL integration with Amazon Redshift (Preview)

Announcing Amazon DynamoDB zero-ETL integration with Amazon Redshift (Preview)

Announcing Amazon OpenSearch Service zero-ETL integration with Amazon S3 (preview)

About the Authors

Arnab Saha is a Senior Database Specialist Solutions Architect at AWS, based in Seattle, USA. Arnab specializes in Amazon RDS, Amazon Aurora, AWS Database Migration Service (DMS), and Amazon Elastic Block Store (EBS). He provides expert guidance and technical assistance to customers, helping them build scalable, highly available, and secure solutions in the AWS Cloud. Arnab also supports AWS partners and customers in their data modernization and cloud migration journeys.

Ashutosh Dubey is a Global Technical Leader and Generative AI specialist Solutions Architect at Amazon Web Services, based in New Jersey, USA. As a respected voice in the tech community, he frequently shares insights on Data and Generative AI trends, best practices, and ethical considerations at industry events.

Meenakshi Venkatesan is a Principal Consultant at Infosys and a part of the AWSCOE at iCETS. She helps in designing, developing, and deploying in AWS environments and has interests in exploring the new offerings and services.

Karthikeyan Senthilkumar is a Senior Systems Engineer at Infosys and a part of the AWSCOE at iCETS. He specializes in AWS infra and database services.

Abhishek Choudhary is a Technology Lead and Cloud Professional with Infosys and part of the DNA TCG team. He works primarily to deliver analytical solutions.

Source: Read More