Programming knowledge and task assistants based on Large Language Models (LLMs) so that they faithfully follow developer-provided policies is challenging. To satisfy users' requests, these agents must reliably retrieve and provide accurate, relevant information. A common problem, however, is that they tend to give ungrounded responses, a phenomenon called hallucination.

Hallucination refers to a model producing information that is not grounded in real data or in the knowledge it was trained on. Such answers can be entirely fabricated, false, or misleading, yet the model often presents them confidently and makes them sound plausible.

Traditional dialogue trees offer one way to manage conversations, but they can only accommodate a restricted set of predefined conversation flows. This makes them intrinsically rigid and unable to adapt to the vast range of possible user interactions.
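To make this rigidity concrete, here is a minimal sketch of a hand-written dialogue tree for a hypothetical restaurant-booking flow. The node names, questions, and the `step` helper are illustrative assumptions, not taken from the paper. Every node accepts only a fixed set of answers, so a user who asks a side question or supplies several details in one turn falls off the tree.

```python
from typing import Dict, Optional

# Hypothetical dialogue tree: each node asks one question and lists
# the only answers it understands, mapped to the next node.
TREE: Dict[str, Dict] = {
    "start": {"ask": "Would you like to book a table?",
              "next": {"yes": "party_size", "no": "end"}},
    "party_size": {"ask": "For how many people?",
                   "next": {"2": "time", "4": "time"}},
    "time": {"ask": "What time?",
             "next": {"7pm": "end", "8pm": "end"}},
    "end": {"ask": "Goodbye!", "next": {}},
}


def step(node: str, user_input: str) -> Optional[str]:
    """Return the next node, or None if the input is off-script."""
    return TREE[node]["next"].get(user_input.strip().lower())


print(step("start", "yes"))                        # scripted answer: moves on
print(step("start", "Yes, for 4 people at 7pm"))   # all details at once: None
print(step("party_size", "3"))                     # unanticipated value: None
```

Every new phrasing or value has to be added to the tree by hand, which is exactly the restriction described above.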

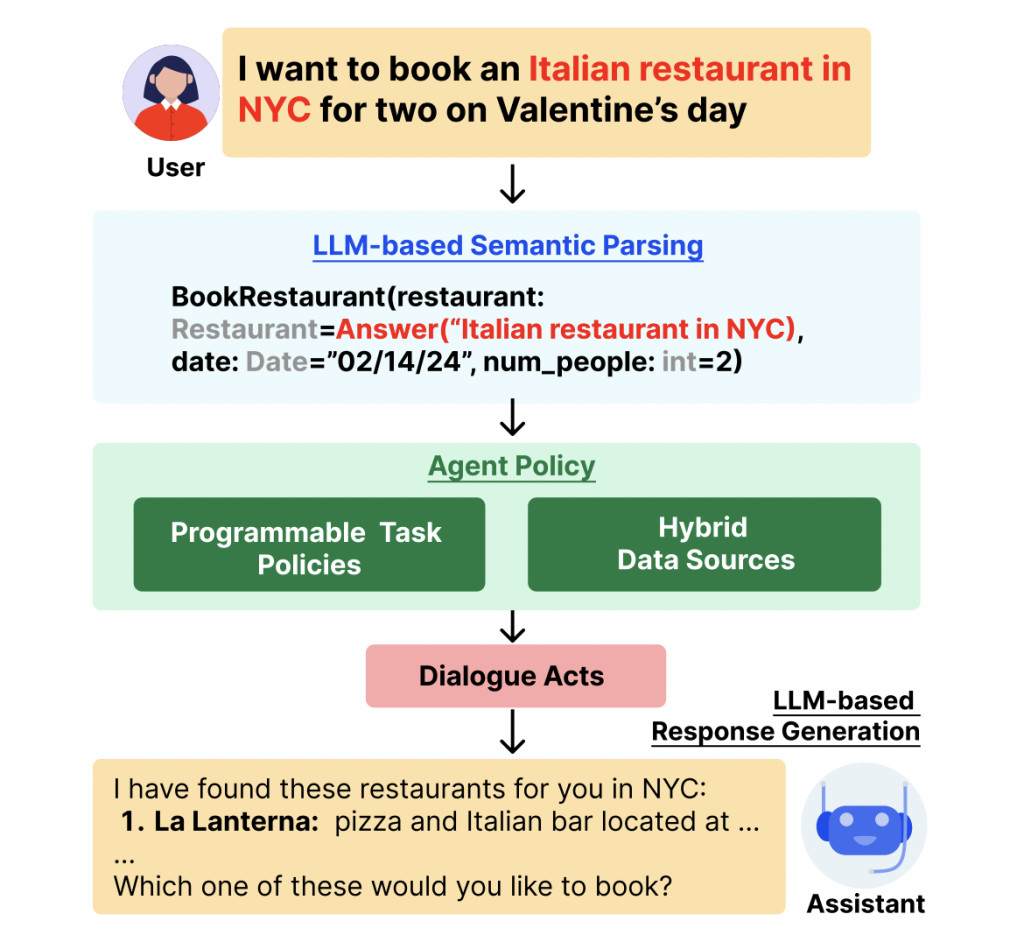

To address these issues, Stanford researchers have introduced KITA, a programmable framework for building task-oriented conversational agents that can manage intricate user interactions. Unlike agents built directly on LLMs, KITA gives developers control over agent behavior through an expressive specification, the KITA Worksheet, while still producing dependable, grounded responses. Compared to conventional dialogue trees, the worksheet offers a more flexible and reliable approach because it enables declarative policy programming.
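As a rough illustration of what declarative policy programming means, the sketch below declares a task as data: which fields to collect and what to do once they are filled, without scripting the order of turns. The class names (`FieldSpec`, `Worksheet`), the `fill` and `next_instruction` methods, and the booking example are hypothetical and do not reproduce the actual KITA Worksheet format. They only show the general idea of tracking state and deriving the agent's next step-by-step instruction from it.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class FieldSpec:
    name: str
    question: str          # what the agent should ask to obtain this value
    required: bool = True


@dataclass
class Worksheet:
    name: str
    fields: List[FieldSpec]
    # Hypothetical action to run once every required field is filled.
    action: Callable[[Dict[str, str]], str]
    values: Dict[str, str] = field(default_factory=dict)

    def fill(self, **updates: str) -> None:
        """Record values in any order, e.g. several from a single user turn."""
        known = {f.name for f in self.fields}
        for key, value in updates.items():
            if key not in known:
                raise KeyError(f"unknown field: {key}")
            self.values[key] = value

    def next_instruction(self) -> str:
        """Turn the tracked state into one concrete instruction for the LLM."""
        for f in self.fields:
            if f.required and f.name not in self.values:
                return f"Ask the user: {f.question}"
        return f"Run action: {self.action(self.values)}"


booking = Worksheet(
    name="BookRestaurant",
    fields=[
        FieldSpec("restaurant", "Which restaurant would you like?"),
        FieldSpec("party_size", "For how many people?"),
        FieldSpec("time", "What time?"),
        FieldSpec("notes", "Any special requests?", required=False),
    ],
    action=lambda v: f"book {v['restaurant']} for {v['party_size']} at {v['time']}",
)

booking.fill(party_size="4", time="7pm")  # user gave two details in one turn
print(booking.next_instruction())         # Ask the user: Which restaurant would you like?
booking.fill(restaurant="Luigi's")
print(booking.next_instruction())         # Run action: book Luigi's for 4 at 7pm
```

Because the developer states *what* must be true before the action runs rather than *when* each question is asked, the same declaration handles answers arriving in any order, which a dialogue tree would need separate branches for.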

Some of the most significant features of KITA are as follows.

Resilience to Diverse Queries: KITA is more flexible and resilient in real-world situations because, in contrast to dialogue trees, it can handle a broad range of user queries.

Integration with Knowledge Sources: KITA successfully combines a range of knowledge sources to deliver precise and well-informed answers.

Declarative Policy Programming: The declarative paradigm of the KITA Worksheet makes programming policies easier, enabling developers to construct and manage complex relationships with less effort.
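The knowledge-source integration listed above can be pictured with a small sketch that answers from a structured table first, falls back to free-text passages, and otherwise admits it does not know. The table, passages, and the `lookup`, `search`, and `answer` helpers are hypothetical, and the exact-match and keyword matching are simplifying assumptions standing in for whatever retrieval KITA actually uses. The point is only that every reply is traced to a source, and a missing fact yields an explicit "not found" instead of a hallucinated answer.

```python
from typing import Dict, List, Optional

# Hypothetical structured source: rows of a restaurant table.
TABLE: List[Dict[str, str]] = [
    {"name": "Luigi's", "cuisine": "italian", "price": "$$"},
    {"name": "Sakura", "cuisine": "japanese", "price": "$$$"},
]

# Hypothetical unstructured source: free-text notes or reviews.
PASSAGES: List[str] = [
    "Luigi's offers a gluten-free pasta menu on weekdays.",
    "Sakura closes early on Sundays.",
]


def lookup(entity: str, attribute: str) -> Optional[str]:
    """Structured lookup: exact match on a row name and column."""
    for row in TABLE:
        if row["name"].lower() == entity.lower() and attribute in row:
            return row[attribute]
    return None


def search(entity: str, keyword: str) -> Optional[str]:
    """Unstructured lookup: first passage mentioning both terms."""
    for passage in PASSAGES:
        text = passage.lower()
        if entity.lower() in text and keyword.lower() in text:
            return passage
    return None


def answer(entity: str, attribute: str) -> str:
    """Answer only from a source; never guess."""
    value = lookup(entity, attribute)
    if value is not None:
        return f"{entity} {attribute}: {value} (from table)"
    passage = search(entity, attribute)
    if passage is not None:
        return f"{passage} (from text)"
    return "I could not find that in the available sources."


print(answer("Sakura", "price"))
print(answer("Luigi's", "gluten-free"))
print(answer("Sakura", "parking"))
```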

The team reports that KITA was evaluated in a real-user study with 62 participants, where it performed significantly better than a GPT-4 function-calling baseline.

Execution Accuracy: KITA improved by 26.1 percentage points.

Dialogue Act Accuracy: KITA improved by 22.5 percentage points.

Goal Completion Rate: KITA improved by 52.4 percentage points.

The team has summarized their primary contributions as follows.

KITA, an open-domain integrated task and knowledge assistant that complies with high-level policies supplied by developers, has been presented. It provides full compositionality of tasks and knowledge queries and grounds its replies in hybrid knowledge sources.

The KITA Worksheet has also been proposed as a novel specification for task-oriented dialogue (TOD) agents. With this specification, KITA can track the state of a conversation and give the LLM step-by-step instructions, ensuring precise and contextually appropriate interactions.

Real-user experiments with 62 participants showed that KITA is effective, achieving 91.5% execution accuracy, 91.6% dialogue act accuracy, and a 74.2% goal completion rate. These results show that KITA significantly outperforms the GPT-4 function-calling baseline. The team has also released a dataset of 180 dialogue turns from 22 real user conversations, manually corrected for accuracy.
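The article gives KITA's absolute scores and its improvements over the baseline, but not the baseline's own scores. Assuming each improvement is a plain difference in percentage points on the same metric, the implied baseline scores can be recovered by subtraction. These derived numbers are an inference from the figures above, not values quoted from the paper.

```python
# KITA's absolute scores (%) and its improvements (percentage points)
# over the GPT-4 function-calling baseline, as stated in this article.
kita = {
    "execution accuracy": 91.5,
    "dialogue act accuracy": 91.6,
    "goal completion rate": 74.2,
}
gain = {
    "execution accuracy": 26.1,
    "dialogue act accuracy": 22.5,
    "goal completion rate": 52.4,
}

# Assumption: baseline = KITA score - improvement (simple difference).
baseline = {metric: round(kita[metric] - gain[metric], 1) for metric in kita}

for metric, value in baseline.items():
    print(f"{metric}: KITA {kita[metric]}% vs. implied baseline {value}%")
```

Under that assumption the baseline would sit at roughly 65.4%, 69.1%, and 21.8%, which shows that the largest gap is in goal completion: the baseline would finish barely one task in five.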

In conclusion, KITA provides a stable, adaptable, and developer-friendly framework for building task-oriented dialogue agents. It overcomes the limitations of conventional dialogue trees and of plain LLM agents by producing correct, grounded responses and by making policy programming simple and efficient through the KITA Worksheet.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Researchers at Stanford Introduce KITA: A Programmable AI Framework for Building Task-Oriented Conversational Agents that can Manage Intricate User Interactions appeared first on MarkTechPost.
