Some of our customers who use SAP ASE (formerly known as Sybase) as their primary database engine to run workloads, generally want to continue using SAP ASE with a Bring Your Own License (BYOL) model in the AWS Cloud. This approach affords them greater flexibility, high availability, and robust archival solutions, while concurrently preserving the prospect of modernization within their future strategic roadmap.

In this post, we provide different options for data migration from an SAP ASE on-premises database to SAP ASE on Amazon Elastic Compute Cloud (Amazon EC2) based on the size of data, application downtime, and data compliance. The migration methods include using AWS Database Migration Service (AWS DMS) and SAP ASE native features.

Solution overview

When you start your migration journey to the AWS Cloud, you may have different challenges related to database size, business criticality, workload demands, data compliance, and migration of data. AWS DMS is a preferable approach to move database workload quickly, securely, and with minimal downtime and zero data loss.

We explore three different data migrations options for migrating your SAP ASE database, which include AWS DMS and SAP ASE native features like dump and load, and Bulk Copy Program (bcp). These options offer flexibility and add an extra boost of confidence because you may already be familiar with the SAP ASE native features used on your on-premises database.

The following table shows summary of approaches for different use cases:

Migration strategy

Use case

Database size

AWS DMS

Business critical application, minimum downtime, Low latency, CDC changes

Hundreds of GB to TB

Dump and load

Non critical application, relaxed RTO/RPO requirement

In GBs (100 – 300 GB)

BCP

High latency, less application dependency

In GBs (< 100 GB)

Prerequisites

You should have the following prerequisites:

An active AWS account

An EC2 instance with SAP ASE installed

AWS DMS for replication instance

An Amazon Simple Storage Service (Amazon S3) bucket to store backup and bcp files

A user with Sybase sa role permissions for DMS and dbo role for bcp, dump, and load migration approach

Any tool installed to connect to the Sybase ASE server

A source database, replication instance, and target database created in the same VPC

AWS DMS for SAP ASE as source and target

AWS DMS supports both homogeneous and heterogeneous data migration. AWS DMS supports full load and change data capture (CDC) methods (for a single task when you have SAP ASE as a source) to migrate historic data and ongoing changes with near zero latency from on premises to the AWS Cloud.

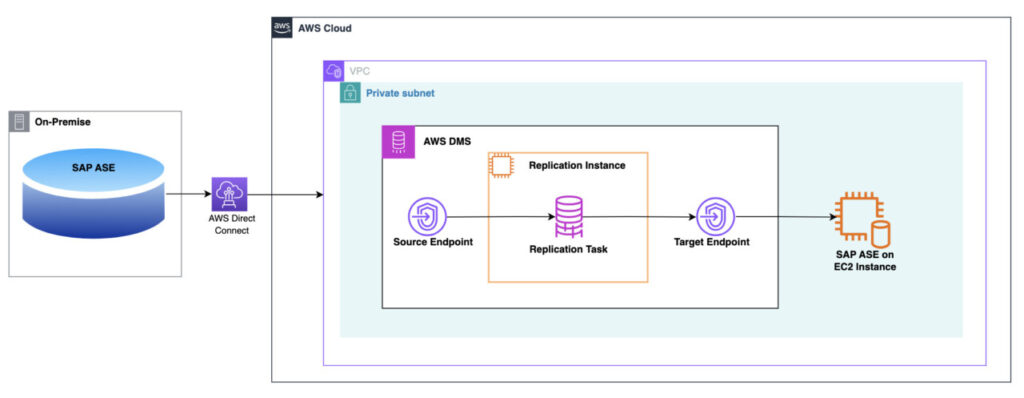

The following architecture illustrates SAP ASE data migration to the AWS Cloud using AWS DMS.

To explain the steps involved in our use case, we have our source SAP ASE database running on Amazon EC2, as shown in the following diagram.

You create a source and target connection for your respective databases to tell AWS DMS where to extract data (source database) and where to load it (target database). Then you create a task that runs on this server to move your data from source to target.

This solution has the following key components:

Source database – SAP ASE is installed and configured to run on an EC2 instance

Replication instance – We use an AWS DMS replication instance

Target database – Another SAP ASE database is running on Amazon EC2

To perform the full load and set up continuous replication from SAP ASE, you should complete the following steps.

Check the replication agent configuration status on the source database:

To determine whether your database is set up for Replication Agent functionality, execute the following command:

If database isn’t configured to use Replication Agent then you can proceed to establish a Log Transfer Manager (LTM) truncation point.

If Replication Agent is configured then disable thread using the following commands.

Establish a Log Transfer Manager (LTM) truncation point on the source database:

To create a checkpoint for LTM truncation, establish a connection to the Sybase ASE server, open a new query window, and execute the following command.

Enable replication at the source database:

To enable replication, complete the following steps:

To check on the current replication state for all the tables contained within the source database, run the following command:

To enable replication for a table, present in the source database, run the following command.

You can use previous command to enable replication for all tables needs to be replicated to target database.

The following screenshot shows that the replication for table authors in pubs2 database is enabled.

validate if replication is enabled for tables using following command:

Create an AWS DMS replication instance:

To set up continuous replication from SAP ASE source database to target database, create an AWS DMS replication instance following the steps in documentation

Create a source endpoint:

Now you need to create the source endpoint and test the connection using the replication instance you created in the previous step:

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

For Endpoint type, choose Source endpoint.

For Endpoint identifier, enter a name for your endpoint.

For Source engine, choose SAP Sybase ASE.

The following screenshot shows the configuration details.

For Access to endpoint database options, select your preferred method of authentication to SAP ASE source database: Secrets Manager or manual authentication. For this post, we use manual authentication. select Provide access information manually.

For Server name, enter the source database server name (address) on the web or an IP address.

Enter the port, user name, password, and database name for the source database server.

Secure Socket Layer (SSL) mode enable to use SSL to establish secure connection from DMS to source database server. for this post we choose none. ÂÂ

Choose Create endpoint.

The following screenshot shows configuration details.

Create a target endpoint:

Next, you create the target endpoint and test the connection using the replication instance you created in the previous step.

On the AWS DMS console, choose Endpoints in the navigation pane.

Choose Create endpoint.

For Endpoint type, select Target endpoint.

For Endpoint identifier, enter a name for your endpoint.

For Target engine, choose SAP Sybase ASE.

For Access to endpoint database options, choose Provide access information manually.

Enter the server name, port, user name, password, and database name for the source database server.

For Secure Socket Layer (SSL) mode choose none .

Choose Create endpoint.

The following screenshot shows configuration details.

Create an AWS DMS database migration task:

To create the database migration task using the replication instance and source and target endpoints, complete the steps mentioned in documentation

On the AWS DMS console, choose Database migration tasks in the navigation pane.

Choose Create task.

For Task identifier, enter a unique name.

Choose your replication instance, source database endpoint, and target database endpoint.

For Migration type, choose Migrate existing data and replicate ongoing changes.

For Target table preparation mode, select Do nothing.

For Stop task after full load completes, select Don’t stop.

For LOB column setting, select Limited LOB mode. In limited LOB mode, you set a maximum LOB size for DMS to accept.

For Data validation choose Turn off , because it’s not supported (as of this writing) for the SAP ASE endpoint.

Select Turn on CloudWatch logs to gain insights during the migration process from Amazon CloudWatch.

Review the Advanced task settings options and enter values as needed.

In the Table mappings section, under Selection rule, choose Add new selection rule.

Enter % for Schema name and Table name to include all the schemas and tables from the source database. For this post we set replication of table authors

Premigration assessment is checked by default to check if there are any potential issues in replication before starting the task. For this post , uncheck this option.

For Migration task startup configuration, select Manually later.

Choose Create task.

Start the migration task:

Before you start the migration task, make sure you disable or drop constraints and triggers if any on target database.

To Start migration task, choose Database migration task in navigation pane of DMS console, select the task created in previous steps. From Actions menu choose Restart/Resume.

The following screenshot shows the selected task.

You can refer documentation to get more details on monitoring AWS DMS tasks

Summary

If you have business-critical applications with a large database that needs continuous data replication with low latency and near-real time replication with a high Recovery Time Objective (RTO), we recommend using AWS DMS.

Note that there are limitations while using SAP ASE as source and target for AWS DMS. For more information, refer to the following:

Limitations on using SAP ASE as a source for AWS DMS

Limitations when using a SAP ASE database as a target for AWS DMS

SAP ASE native features

SAP ASE provide native features like dump and load or bcp, which you can use for homogeneous data migration to save cost and time. Migrating an on-premises SAP ASE database to SAP ASE hosted on Amazon EC2 is a homogeneous migration. Therefore, we can use SAP ASE native features for data migration, which is a cost-effective solution.

The following migration architecture has an S3 bucket that stores the dump files taken from the on-premises database; we use dump files from S3 bucket to restore new database.

SAP ASE native feature: Dump and load

Dump and load are the SAP ASE backup and restore commands, respectively. The dump command makes an entire copy of the data and transaction log as a dump file. This dump file is read by the load command to restore the database.

Dump database from source (full database backup)

For a full source database backup, complete the following steps:

Log in to the source SAP ASE database server using isql or any supported SQL editor tool. The following command uses isql:

Run the following SQL command to take a full dump of the user database to a specified local path and file name:

In the following code, we take a backup of the database pubs2:

We need to copy the backup file we created to an S3 bucket. In a real-life situation, these files are created on premises. You need to get that file to the AWS Cloud by connecting the on-premises data center to AWS, using either AWS Direct Connect, the public internet, or AWS Site-to-Site VPN. For more information, refer to Integrate an SAP ASE database to Amazon S3 using AWS Storage Gateway.

Use the following AWS Command Line Interface (AWS CLI) command to copy the backup file from the source database EC2 instance to Amazon S3 provided that the instance role is configured correctly.

Load database on target (full database restore)

To restore the database on the target, complete the following steps:

Log in to the target SAP ASE host instance and copy the dump file from the S3 bucket to the target EC2 instance provided that the instance role is configured correctly.

The following screenshot shows that the backup file is downloaded to the target instance from the S3 bucket.

Log in to the target SAP ASE database and create an empty database called pubs2_tgt to load the dump file you downloaded:

The following screenshot shows that the target user database (pubs2_tgt) is created.

When you create the target database, make sure that the database size is the same or larger than that of the source database to load the dump file from the source.

Run the following command to load the dump file to the target database:

The following screenshot shows that the backup file is successfully loaded to the database pubs2_tgt and this database is online.

Incremental database dump

An incremental (or cumulative) backup captures changes after the point where you have taken a full backup. The full backup is the base for subsequent incremental backups. A full database dump copies the entire data present at the time when the backup is taken. Based on the size of the database, a full database dump may take time to migrate critical applications. To mitigate this challenge, take a full backup one time and then schedule incremental backups to capture continuous (ongoing) changes in the database.

To perform a cumulative backup, make sure that the source backup server is running. Additionally, confirm that incremental dumps are enabled for the source database.

Complete the following steps to perform a full load and incremental backup:

Run the following SQL command on the source database to enable incremental backups:

The following screenshot shows that incremental backups are enabled for the source database pubs2.

Run a full backup, which is marked as the base for cumulative backups.

The following screenshot shows that the full backup of the pubs2 database is created, called pubs2_full_bk.dmp (after enabling incremental backup).

Make some changes on the source database to be captured in the next incremental backup.

The following screenshot shows that we created a new table on the source database and inserted a row.

Take an incremental backup of the source database:

The following screenshot shows that a cumulative backup is created for database pubs2 to the backup file /opt/sap/db_backup/pubs2_incr_bk.dmp. This backup file contains the changes on the database between the last full backup and the latest incremental backup.

Follow the same steps as mentioned previously to copy these dump files to the S3 bucket.

Incremental database restore

Complete the following steps to complete an incremental database restore:

Log in to the target instance and copy the dump files from Amazon S3.

Log in to the target SAP ASE database and complete a load full using the dump files.

Do not bring the database online yet, because you still need to load the incremental backup file.

Load the cumulative backup file to the target database:

The following screenshot shows that incremental backup is loaded to the target database pubs2_tgt from the file /opt/sap/db_backup/pubs2_incr_bk.dmp and command executed to mark database online. The changes have been restored on the target SAP ASE database.

Considerations

Note the following when using the dump and load strategy for data migration:

The target database size should be either the same or greater than that of the source database.

Try to set the database on single-user mode to prevent users from making changes to the database.

If your database size is big, you can use stripe dump and load instead of standard dump and load. With this method, you can create multiple small dump files and load them in the target database.

You can compress the dump files and save space and improve performance.

Summary

You can use the dump and load method for data migration from your source to target database for databases of all sizes to migrate data in less time. You can use a full dump and load in combination with AWS DMS to migrate data for large size databases and complete the migration with near-zero downtime.

You can decide on your database strategy as follows:

Take a full dump of the database.

Take successive cumulative backups for ongoing changes until cutover.

Load the first full backup and the last cumulative backup. The last cumulative backup will capture all changes in the database from the first full backup.

SAP ASE native feature: bcp utility

The bcp utility is a native program of SAP ASE to copy data in bulk. It works at the operating system level and accesses data pages to fetch the data and load it into the database table.

This method has the following requirements:

By default, bcp comes with the SAP ASE installer, but if you’re running it from another host, you must install the Sybase client tools package on the host

You must create the target database and tables before using the bcp utility

To copy the data file into a table (bcp in), you need insert permissions on the table

To copy the table data to the operating system file (bcp out), you need select permissions on the table

The data migration approach using bcp includes the following high-level steps:

Run bcp out on the source database instance to export table-level data to delimited files or native format files.

Copy these data files from the on-premises data center to an S3 bucket.

Download the data files on the target EC2 instance using the AWS CLI.

Run bcp in on the target database instance to load files into the database tables.

To demonstrate this approach, we copy data from the table salesdetail to a flat file. Then we copy that flat file back to another target database table.

You can follow similar steps mentioned in the previous approach to copy flat files from an on-premises data center to Amazon S3 and back to the target database EC2 instance from Amazon S3.

Table export (bcp out)

Complete the following steps to export your table:

Use the following command to run bcp out on the source database:

The following screenshot shows that the bcp out extract file salesdetail.dat is generated for the table salesdetail from the pubs2 database.

Copy this file to Amazon S3 and from Amazon S3 to the target EC2 instance.

Table import (bcp in)

The data file copied from the source is now available on the EC2 target instance. Use the following command to run bcp in on the source database to import the data file to the target database:

In the following screenshot, the data file is imported to the target table salesdetail_tgt.

When exporting and importing data using bcp, it’s very important to note the option and the format of the data. The -c option is used to export data in character format, which is human readable and can be used for cross-platform or cross-database data migration. The -n option is called a native format. Native formats usually create a more compact operating system file, and bcp operations are faster using this option. The bcp utility supports copying data in native format from the same operating systems. It’s not recommended to use the row terminator (-t) or field terminator (-r) parameters with bcp in native format, because data may get corrupted.

Summary

You can use the SAP ASE native bcp utility to migrate data from on premises to the AWS Cloud when you have smaller databases ranging from 1–100 GiB. Because this method is a table-level import and export, you need to validate the migrated data for each table.

Conclusion

In this post, we discussed possible options (AWS DMS, SAP ASE native dump and load, and the bcp utility) to migrate data from on-premises SAP ASE to SAP ASE hosted on Amazon EC2. This can help you plan your data migration strategy for your SAP ASE applications based on data volume, downtime, and application criticality. We also discussed which method or combination of methods is best suitable for specific scenarios.

If you have any comments or feedback, leave them in the comments section.

About the authors

Nishad Mankar is a Lead Database Consultant with AWS Professional Services in India. He plays pivotal role in helping customers to migrate and modernize their databases on AWS cloud platform. With expertise in database technologies such as SQL Server, SAP ASE, and PostgreSQL, he builds cutting-edge solutions that helps customer success.

Amit Kumar is a Database Consultant with AWS Professional Services based out of Hyderabad, India. With good knowledge on relational databases adding hands-on in homogenous and heterogenous database migrations and optimization in AWS cloud.

Uttiya Gupta is a technology professional with over 18 years of experience. His experiences span across the BFSI, HCLS, and Telco domains. He is highly skilled in helping enterprise customers in their cloud adoption journey through managing end-to-end complex cloud migration engagements. With deep understandings of AWS Solution Architectures and Well Architected Framework, his niche technical skills also include database migration and modernization, SAP ASE (Sybase) database programming & performance tuning, data architecture, strategy, ingestion and engineering. He has strong affinity towards ideation & building migration accelerators to facilitate customers’ cloud transformations.

Source: Read More