As a highly effective optimization setting born in machine learning (ML), boosting requires one to efficiently learn arbitrarily good models using a weak learner oracle, which provides classifiers that perform only marginally better than random guessing. A key difference from gradient-based optimization is that the original boosting model did not require first-order information about the loss. Yet the decades-long history of boosting has rapidly turned it into a first-order optimization setting, with some even incorrectly defining it as such.
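To make the "weak learner oracle" concrete, here is a minimal sketch of a classic choice: a one-dimensional decision stump fit to weighted examples. The function names `stump_weak_learner` and `edge` are hypothetical, labels are assumed to be in {-1, +1}, and the article does not say which weak learner the researchers use. A positive edge means the stump beats random guessing (weighted error 1/2) on the current weighting, which is all the oracle has to deliver.

```python
from typing import List, Tuple

# Hypothetical weak learner oracle: a 1-D decision stump on weighted examples.
# Labels are assumed to be in {-1, +1}; weights are non-negative.
def stump_weak_learner(
    xs: List[float], ys: List[int], ws: List[float]
) -> Tuple[float, int, float]:
    """Return (threshold, polarity, weighted_error) of the best stump.

    The stump predicts `polarity` when x > threshold and `-polarity` otherwise.
    """
    total = sum(ws)
    best: Tuple[float, int, float] = (0.0, 1, float("inf"))
    # Candidate thresholds: one below every point, plus every observed value.
    candidates = [min(xs) - 1.0] + sorted(set(xs))
    for t in candidates:
        for polarity in (1, -1):
            # Total weight of misclassified examples, normalized to [0, 1].
            err = sum(
                w for x, y, w in zip(xs, ys, ws)
                if (polarity if x > t else -polarity) != y
            ) / total
            if err < best[2]:
                best = (t, polarity, err)
    return best


def edge(weighted_error: float) -> float:
    """Advantage over random guessing; positive means better than error 1/2."""
    return 0.5 - weighted_error


# Usage: with equal weights, a threshold of 2 separates the labels perfectly.
threshold, polarity, err = stump_weak_learner([1, 2, 3, 4], [-1, -1, 1, 1], [1, 1, 1, 1])
print(threshold, polarity, err, edge(err))  # 2 1 0.0 0.5
```

A boosting algorithm would call such an oracle repeatedly, each time with a new weighting of the examples.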

The term “zeroth-order optimization” describes a family of optimization methods that do not use gradient information to find a function's minima or maxima. These techniques shine when the function is noisy or non-differentiable, or when computing the gradient would be prohibitively expensive or impractical. Instead, the search for the best solution is guided entirely by function evaluations.
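A minimal sketch of the idea, assuming a smooth test function: the gradient is never computed analytically but estimated from function values alone with central finite differences, one of the simplest zeroth-order schemes. The names `fd_gradient` and `zeroth_order_descent`, the step size, and the iteration count are illustrative choices, not taken from the paper.

```python
from typing import Callable, List

Vector = List[float]

def fd_gradient(f: Callable[[Vector], float], x: Vector, h: float = 1e-4) -> Vector:
    """Estimate the gradient of f at x using only 2 * len(x) function evaluations."""
    grad = []
    for i in range(len(x)):
        x_plus, x_minus = list(x), list(x)
        x_plus[i] += h
        x_minus[i] -= h
        # Central difference along coordinate i; no derivative of f is ever called.
        grad.append((f(x_plus) - f(x_minus)) / (2.0 * h))
    return grad


def zeroth_order_descent(
    f: Callable[[Vector], float], x0: Vector, step: float = 0.1, iters: int = 200
) -> Vector:
    """Plain descent driven by the finite-difference gradient estimate."""
    x = list(x0)
    for _ in range(iters):
        g = fd_gradient(f, x)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x


# Usage: minimize a shifted quadratic whose minimizer is (3, -1).
quadratic = lambda v: (v[0] - 3.0) ** 2 + (v[1] + 1.0) ** 2
x_star = zeroth_order_descent(quadratic, [0.0, 0.0])
print([round(c, 6) for c in x_star])  # [3.0, -1.0]
```

Practical zeroth-order methods often replace the coordinate loop with random-direction estimates to save evaluations in high dimensions; the coordinate version is used here only because it is easy to check by hand.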

Even though ML has witnessed a significant uptick in zeroth-order optimization across numerous settings and algorithms in recent years, there have been few investigations of boosting in this setting. The question is highly pertinent because boosting has rapidly developed into a method that requires first-order information about the loss, and presenting boosting as a first-order setting has become rather typical. Originally, however, the boosting model required only a weak learner that provides classifiers distinct from random guessing, not first-order loss information. With zeroth-order optimization becoming more popular in ML, it is important to know whether differentiability is necessary for boosting, which loss functions can be boosted with a weak learner, and how boosting compares with recent formal progress on bringing gradient descent to zeroth-order optimization.

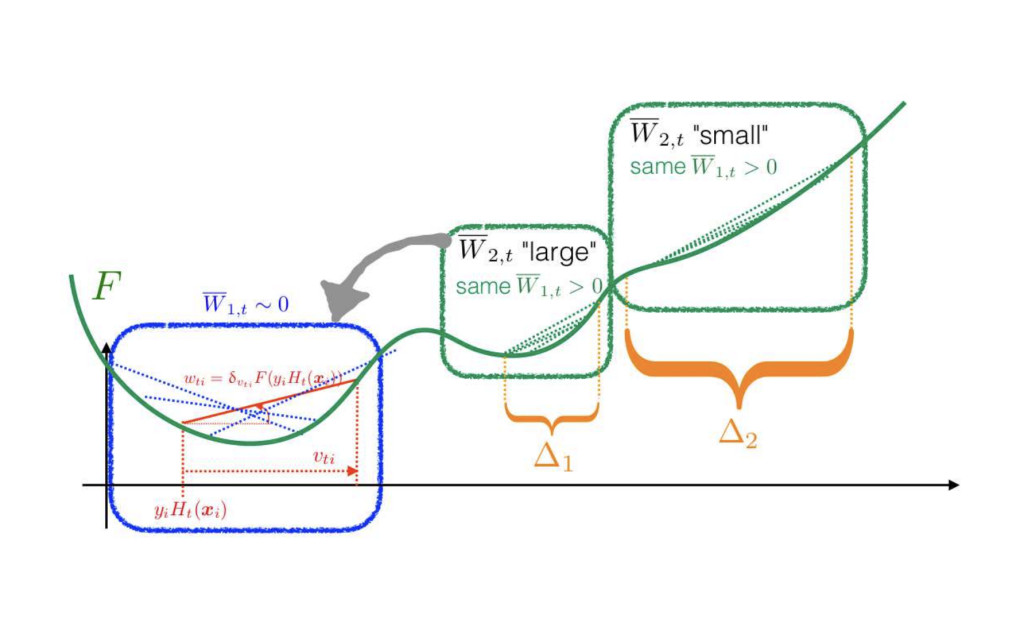

Google's research team proposes a formal boosting technique that handles any loss function whose set of discontinuities has zero Lebesgue measure. In practice, any loss function stored with conventional floating-point encoding satisfies this criterion. On the theory side, the losses covered need not be convex, differentiable, Lipschitz, or even continuous. This differs significantly from classical zeroth-order optimization: although those algorithms are zeroth-order, the assumptions their convergence proofs make about the loss (convexity, differentiability once or twice, Lipschitzness, and so on) are far more extensive. To avoid using derivatives in boosting, the researchers employ or extend strategies from quantum calculus, some of which appear to be commonplace in zeroth-order optimization research.
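Quantum calculus replaces the limit in the ordinary derivative with a finite step. A minimal sketch of its two standard operators follows: the h-derivative, which is simply a secant slope, and the q-derivative (Jackson derivative). Both need only loss evaluations and stay finite where the loss jumps. The helper names are hypothetical, and the exact operators and offsets used in the paper may differ from this illustration.

```python
from typing import Callable

Loss = Callable[[float], float]

def h_derivative(f: Loss, x: float, h: float) -> float:
    """h-derivative: (f(x + h) - f(x)) / h, i.e. the slope of a secant."""
    if h == 0.0:
        raise ValueError("h must be non-zero")
    return (f(x + h) - f(x)) / h


def q_derivative(f: Loss, x: float, q: float) -> float:
    """q-derivative (Jackson): (f(q * x) - f(x)) / ((q - 1) * x), for x != 0, q != 1."""
    if x == 0.0 or q == 1.0:
        raise ValueError("requires x != 0 and q != 1")
    return (f(q * x) - f(x)) / ((q - 1.0) * x)


def zero_one_loss(margin: float) -> float:
    """Discontinuous at 0, so its ordinary derivative does not exist there."""
    return 1.0 if margin <= 0.0 else 0.0


# Usage: the ordinary derivative of the 0/1 loss is undefined at 0 and zero
# elsewhere, but the secant across the jump carries usable information.
print(h_derivative(zero_one_loss, 0.0, 0.5))    # -2.0
print(q_derivative(lambda x: x * x, 3.0, 2.0))  # 9.0, i.e. (q + 1) * x
```

As h tends to 0 (or q tends to 1), both operators recover the ordinary derivative wherever it exists.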

Applied in a broader context, the proposed SecBoost technique reveals two additional areas where deliberate design choices can keep the assumptions satisfied over a larger number of boosting rounds. This addresses the issue of local minima and also handles losses that are constant over parts of their domain. SecBoost therefore looks promising for both boosting research and its applications.
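To see why flat regions matter, consider the ramp loss, which is constant for margins at or below 0. There its derivative is zero, so a gradient-based method has nothing to follow, while a secant taken with a large enough offset still points downhill. The doubling rule below is a hypothetical stand-in chosen for simplicity; it is not the offset oracle described in the paper.

```python
from typing import Callable, Optional, Tuple

Loss = Callable[[float], float]

def ramp_loss(z: float) -> float:
    """Non-convex loss: 1 for z <= 0, linear on [0, 1], 0 for z >= 1."""
    return min(1.0, max(0.0, 1.0 - z))


def secant_slope(f: Loss, z: float, v: float) -> float:
    """Slope of the secant between z and z + v."""
    return (f(z + v) - f(z)) / v


def find_offset(
    f: Loss, z: float, v0: float = 0.5, max_doublings: int = 20
) -> Optional[Tuple[float, float]]:
    """Hypothetical offset search: double v until the secant slope is non-zero.

    Returns (offset, slope), or None if every tried offset sees a flat loss.
    """
    v = v0
    for _ in range(max_doublings):
        slope = secant_slope(f, z, v)
        if slope != 0.0:
            return v, slope
        v *= 2.0
    return None


# Usage: at z = -2 the ramp loss is flat, so its derivative is 0; offsets 0.5,
# 1 and 2 still land on the plateau, but offset 4 reaches the zero-loss region.
print(find_offset(ramp_loss, -2.0))  # (4.0, -0.25)
```

Larger offsets see further across plateaus but give a coarser picture of the loss, so any real offset rule has to trade the two off.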

According to the findings, boosting compares favorably with recent advances in zeroth-order optimization, because boosting-compliant convergence is achieved while assuming the loss satisfies only some of the assumptions typically used in such analyses. Implementation questions remain open, however. For example, optimizing the offset oracle efficiently still requires work, whereas recent zeroth-order optimization research has already developed significant design tricks for implementing such algorithms. The team has not resolved this issue yet. Still, the paper's appendix contains toy experiments run with a simple implementation, suggesting that SecBoost can optimize “exotic” types of losses.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Google Researchers Propose a Formal Boosting Machine Learning Algorithm for Any Loss Function Whose Set of Discontinuities has Zero Lebesgue Measure appeared first on MarkTechPost.
