AI agents have developed rapidly in recent years. However, a single goal, accuracy, has dominated how they are evaluated, and evaluation is vital to agent development. According to a recent study from Princeton University, focusing only on accuracy produces agents that are unnecessarily complicated and costly to run. The team proposes a shift to an evaluation paradigm that takes cost into account, in which accuracy and cost are optimized jointly.

Agent evaluation has long relied on standard benchmarks that measure how well an agent performs a given task. These benchmarks encourage a push toward ever-higher accuracy through ever more complicated systems. Even when such systems perform well on the benchmark, their compute requirements may keep them from being useful in the real world.

In their study, the team points out where the existing evaluation approach needs to improve:

First, agents developed with an overemphasis on accuracy may not carry over to real-world situations. Deploying highly accurate agents in resource-constrained settings is often not viable because of their high computational cost.

Second, the present method opens a gap between model developers and downstream developers. Model developers focus on how accurate the model is on the benchmark, while downstream developers care more about how much the agent will cost to run in production. This mismatch can produce agents with high benchmark accuracy that are impractically expensive to deploy in real-world scenarios.

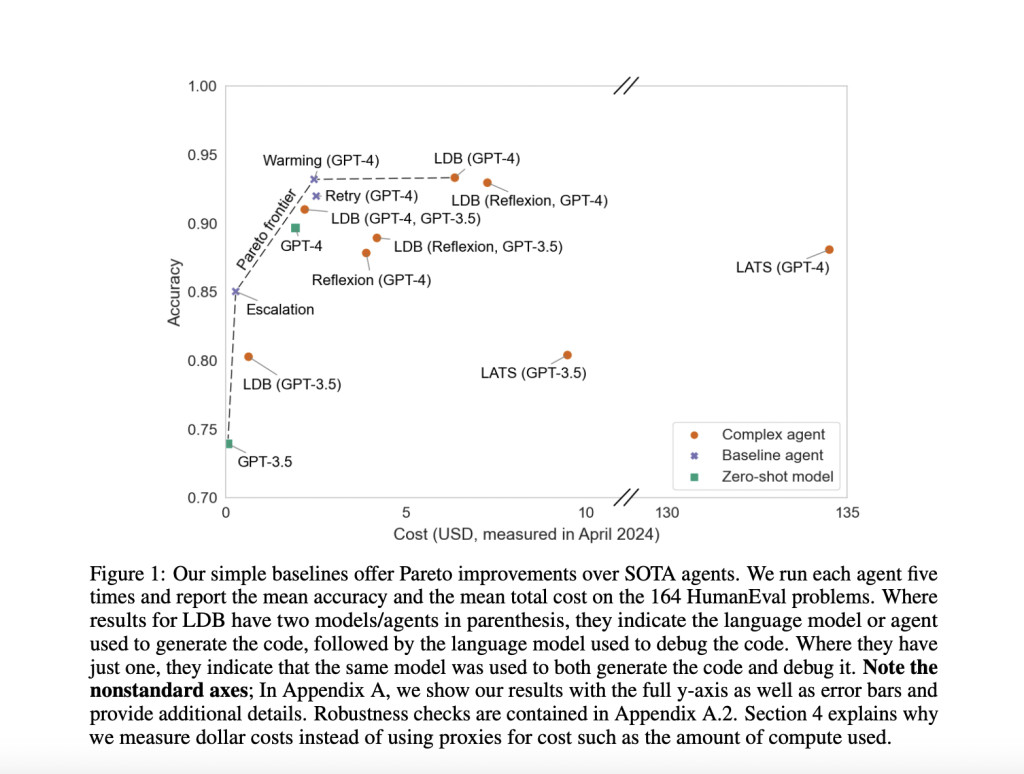

To address these problems, the researchers propose an evaluation paradigm that accounts for cost. Plotting the cost and accuracy of agents as a Pareto frontier opens a new avenue for agent design: jointly optimizing cost and accuracy, which can yield agents that cost less without sacrificing accuracy. The same approach extends to other agent design criteria, such as latency.
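To make the Pareto frontier idea concrete, here is a minimal sketch that filters a set of agents down to those not dominated on cost and accuracy. The agent names and numbers are invented for illustration and do not come from the study.

```python
from typing import NamedTuple


class AgentResult(NamedTuple):
    name: str
    cost: float      # e.g. dollars per evaluation run (illustrative unit)
    accuracy: float  # fraction of benchmark tasks solved


def pareto_frontier(results: list[AgentResult]) -> list[AgentResult]:
    """Return the agents that no other agent dominates.

    Agent A dominates agent B when A is no worse on both cost and accuracy
    and strictly better on at least one of them.
    """
    frontier = []
    for cand in results:
        dominated = any(
            other.cost <= cand.cost
            and other.accuracy >= cand.accuracy
            and (other.cost < cand.cost or other.accuracy > cand.accuracy)
            for other in results
        )
        if not dominated:
            frontier.append(cand)
    # Sort by cost so the frontier reads left to right on a cost/accuracy plot.
    return sorted(frontier, key=lambda r: r.cost)


# Hypothetical agents; the values are made up purely for illustration.
agents = [
    AgentResult("simple_baseline", cost=0.10, accuracy=0.60),
    AgentResult("retry_baseline", cost=0.40, accuracy=0.75),
    AgentResult("complex_agent", cost=2.00, accuracy=0.75),
    AgentResult("large_ensemble", cost=5.00, accuracy=0.80),
]
frontier_names = [r.name for r in pareto_frontier(agents)]
print(frontier_names)
```

In this toy data, `complex_agent` drops off the frontier because `retry_baseline` reaches the same accuracy at lower cost, which is the kind of comparison an accuracy-only leaderboard would hide.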

The total cost of running an agent includes both fixed and variable costs. Fixed costs are incurred once, when you optimize the agent's hyperparameters (temperature, prompt, etc.) for a given task. Variable costs are incurred every time the agent runs and are proportional to the input and output token counts. Variable costs become increasingly important as the agent's usage grows. Joint optimization lets developers trade off the two: by investing more upfront in the one-time optimization of the agent design, they can lower the variable cost of running it (for example, by finding shorter prompts and few-shot examples that preserve accuracy). The team also mentions that users who want to run agents for less money without compromising accuracy can turn to model trimming and hardware acceleration.
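The fixed versus variable trade-off can be illustrated with a short break-even calculation. This is a sketch under assumed numbers: the per-token prices, token counts, and one-time optimization cost below are hypothetical, and the function names are introduced only for this example.

```python
def variable_cost_per_call(
    input_tokens: int,
    output_tokens: int,
    price_in_per_1k: float,
    price_out_per_1k: float,
) -> float:
    """Cost of one agent call, proportional to input and output token counts."""
    return (
        input_tokens / 1000 * price_in_per_1k
        + output_tokens / 1000 * price_out_per_1k
    )


def total_cost(fixed_cost: float, cost_per_call: float, num_calls: int) -> float:
    """One-time optimization cost plus the per-call cost over all calls."""
    return fixed_cost + cost_per_call * num_calls


def break_even_calls(
    fixed_a: float, per_call_a: float, fixed_b: float, per_call_b: float
) -> float:
    """Number of calls at which design B's total cost equals design A's.

    Assumes B costs more upfront but less per call than A.
    """
    if per_call_a <= per_call_b:
        raise ValueError("design B must have the lower per-call cost")
    return (fixed_b - fixed_a) / (per_call_a - per_call_b)


# Hypothetical prices and token counts, chosen only to show the shape of the trade-off.
long_prompt = variable_cost_per_call(4000, 200, price_in_per_1k=0.001, price_out_per_1k=0.002)
short_prompt = variable_cost_per_call(1800, 200, price_in_per_1k=0.001, price_out_per_1k=0.002)

# Design A: no upfront optimization. Design B: assumed $20 of one-time prompt search.
calls_needed = break_even_calls(0.0, long_prompt, 20.0, short_prompt)
print(f"B becomes cheaper after about {calls_needed:.0f} calls")
```

Past the break-even point, every additional call widens the gap in favor of the design with the shorter prompt, which is why variable cost dominates at high usage.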

To show how effective joint optimization can be, the team tests a modified version of the DSPy framework on the HotPotQA benchmark. They chose HotPotQA because it was used to demonstrate DSPy's effectiveness in the original paper and is featured in several official tutorials by the DSPy developers. They used the Optuna hyperparameter optimization framework to search for few-shot examples that lower the agent's cost while preserving accuracy. The team notes that they expect more sophisticated joint optimization methods to perform significantly better. Joint optimization opens up a large, unexplored design space for agents, and these findings are only a first step.

The team evaluates DSPy-based multi-hop question answering with several agent designs. For retrieval, they use ColBERTv2 to query Wikipedia for each HotPotQA question. To measure performance, they check whether the agent successfully retrieves all ground-truth documents for each HotPotQA task. One hundred samples from the HotPotQA training set are used to optimize the DSPy pipelines, and 200 samples from the evaluation set are used to assess the results. Five agent designs are evaluated:
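The success criterion described above (an example counts only if every ground-truth document is retrieved) can be sketched as follows. Identifying documents by title is an assumption made for this example, and the sample data is invented.

```python
def retrieved_all_gold(retrieved_titles: list[str], gold_titles: list[str]) -> bool:
    """True only if every ground-truth document appears among the retrieved ones."""
    return set(gold_titles) <= set(retrieved_titles)


def retrieval_success_rate(examples: list[tuple[list[str], list[str]]]) -> float:
    """Fraction of examples for which all ground-truth documents were retrieved.

    Each example is a (retrieved_titles, gold_titles) pair.
    """
    if not examples:
        return 0.0
    hits = sum(retrieved_all_gold(ret, gold) for ret, gold in examples)
    return hits / len(examples)


# Invented examples: multi-hop questions typically need two supporting documents.
examples = [
    (["Doc A", "Doc B", "Doc C"], ["Doc A", "Doc B"]),  # both gold docs found
    (["Doc A", "Doc D"], ["Doc A", "Doc B"]),           # one gold doc missing
    (["Doc E", "Doc F"], ["Doc E", "Doc F"]),           # both gold docs found
    (["Doc G"], ["Doc H", "Doc I"]),                    # neither found
]
rate = retrieval_success_rate(examples)
print(f"retrieval success rate: {rate:.2f}")
```

Note that partial retrieval earns no credit under this criterion, which is stricter than per-document recall.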

Uncompiled: This baseline applies no prompt optimization and gives the agent no formatting instructions for HotPotQA queries. Each prompt contains only the task instructions and the core content (i.e., question, context, rationale), with no few-shot examples.

Formatting instructions only: The same as the uncompiled baseline, with formatting instructions added for the retrieval query outputs.

Few Shot: DSPy is used to find effective few-shot examples from all 100 samples in the training set. Few-shot examples are a small number of worked demonstrations placed in the prompt to guide the model on new, unseen inputs; the model is not retrained on them. Formatting instructions are included. Few-shot examples are selected according to the number of successful predictions on the training set.

Random Search: DSPy's random search optimizer is applied to half of the 100 training samples to propose candidate few-shot examples. The optimizer then uses performance on the other half of the samples to choose the best ones. Formatting instructions are included.

Joint optimization: Half of the training set is used to collect candidate few-shot examples that improve the model's accuracy, and the remaining fifty samples are used for validation. Using parameter search, the team sought to maximize accuracy while minimizing the number of tokens in the few-shot examples included in the prompt.

Although DSPy provides significant accuracy gains over the uncompiled baseline, it does so at a cost. Joint optimization can reduce that cost. Compared to the default DSPy implementations, it lowers variable cost by 53% while maintaining the same level of accuracy for GPT-3.5. The same holds for Llama-3-70B, where it reduces cost by 41% without sacrificing accuracy.
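The idea of maximizing accuracy while minimizing few-shot tokens can be illustrated with a deliberately simplified sketch. It is not the team's method: it swaps Optuna for plain random search, folds the two goals into a single score with a hypothetical `token_penalty`, and replaces real validation runs with a toy accuracy function. Every name below is introduced only for this example.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Demo:
    text: str
    tokens: int   # token count of this few-shot example
    gain: float   # toy stand-in for how much the example helps accuracy


def toy_accuracy(demos: tuple[Demo, ...]) -> float:
    """Hypothetical validation accuracy; a real setup would run the agent."""
    return min(1.0, 0.40 + sum(d.gain for d in demos))


def objective(demos: tuple[Demo, ...], token_penalty: float) -> float:
    """Scalarized joint objective: accuracy minus a penalty per prompt token."""
    return toy_accuracy(demos) - token_penalty * sum(d.tokens for d in demos)


def joint_random_search(
    candidates: list[Demo],
    max_demos: int,
    token_penalty: float,
    n_trials: int,
    seed: int = 0,
) -> tuple[tuple[Demo, ...], float]:
    """Sample subsets of few-shot examples and keep the best-scoring one."""
    rng = random.Random(seed)
    limit = min(max_demos, len(candidates))
    best: tuple[Demo, ...] = ()
    best_score = objective(best, token_penalty)  # start from the zero-shot prompt
    for _ in range(n_trials):
        k = rng.randint(0, limit)
        subset = tuple(rng.sample(candidates, k))
        score = objective(subset, token_penalty)
        if score > best_score:
            best, best_score = subset, score
    return best, best_score


# Invented candidates: one long, helpful demo and several shorter ones.
candidates = [
    Demo("long worked example", tokens=400, gain=0.12),
    Demo("short example 1", tokens=80, gain=0.08),
    Demo("short example 2", tokens=90, gain=0.07),
    Demo("noisy example", tokens=150, gain=0.01),
]
best, best_score = joint_random_search(
    candidates, max_demos=3, token_penalty=0.0002, n_trials=200
)
print([d.text for d in best], round(best_score, 4))
```

A multi-objective search that returns the full cost and accuracy frontier, rather than one scalarized optimum, would be closer in spirit to the Pareto framing the researchers advocate; the single score here just keeps the example short.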

It’s crucial that we rethink our approach to agent benchmarks. Current benchmarks often lead to agents that perform well on the benchmark but struggle in real-world scenarios. By accounting for factors such as distribution shifts and the requirements of downstream developers, we can design more practical and effective benchmarks.

As AI agents become more sophisticated, the importance of safety evaluations cannot be overstated. While this study doesn’t specifically address security concerns, it underscores the vital role of existing frameworks in regulating agentic AI. It’s crucial that developers prioritize and deploy these frameworks to ensure responsible development and deployment of AI agents.

The team states that their research enables people to evaluate the cost-effectiveness of capabilities that could pose risks. This way, the community can spot and prevent possible safety issues before they escalate. For this reason, makers of AI safety benchmarks should incorporate cost assessments. Ultimately, this work suggests a change in how agents are evaluated. To create agents that are useful and feasible to deploy in the real world, the team argues that researchers need to shift their focus from accuracy alone to cost as well.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers at Princeton University Reveal Hidden Costs of State-of-the-Art AI Agents appeared first on MarkTechPost.