AWS announced AWS Database Migration Service (AWS DMS) homogeneous migration for several AWS Regions. With AWS DMS homogenous migration, you can migrate data from your source database to an equivalent engine on AWS using native database tools.

Homogeneous data migrations are serverless, which means that AWS DMS automatically provision the resources that are required for your migration. With homogeneous data migrations, you can migrate tables, table partitions, data types, and secondary objects such as functions, stored procedures, triggers, indexes, and other database objects. When you create a migration project with compatible source and target data providers, AWS DMS connects to the source data provider, reads the source data, dumps the files on the disk, and restores the data using native database tools.

In this post, we show you an example of a complete homogeneous migration process and provide troubleshooting steps for migrating from PostgreSQL to Amazon Aurora PostgreSQL-Compatible Edition and Amazon Relational Database Service (Amazon RDS) for PostgreSQL.

Solution overview

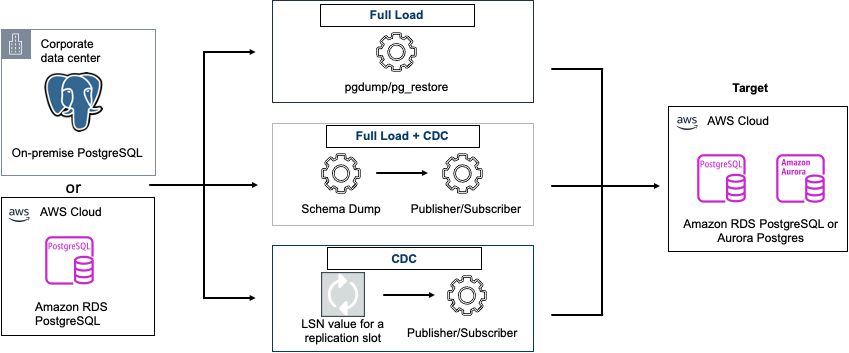

The following diagram shows the process of using homogeneous data migrations in AWS DMS to migrate a PostgreSQL database to Amazon RDS for PostgreSQL or Amazon Aurora PostgreSQL. AWS DMS creates a serverless environment for your data migration.

For different types of data migrations, AWS DMS uses different native PostgreSQL database tools.

For homogeneous data migrations with the full load migration type, AWS DMS uses pg_dump to read data from your source database and store it on the disk attached to the serverless environment. After AWS DMS reads all your source data, it uses pg_restore in the target database to restore your data.

For homogeneous data migrations of the full load and change data capture (CDC) type, AWS DMS uses pg_dump and pg_restore to read the metadata from the source and load the metadata to the target, and it uses disk attached to the serverless environment as storage for dump files. After AWS DMS loads the metadata data to the target, it uses a publisher and subscriber model for the initial sync and then performs the replication. In this model, one or more subscribers subscribe to one or more publications on a publisher node.

For homogeneous data migrations with CDC, AWS DMS requires the native start point to start the replication. If you provide the native start point, AWS DMS captures changes from that point. Alternatively, choose Immediately in the data migration settings to automatically capture the start point for the replication when the actual data migration starts.

Prerequisites

The following diagram illustrates how homogeneous data migrations work and the required components.

AWS DMS homogeneous migration connects to the source database and target database using data providers and network and security details captured from the instance profile. In the following sections, we show you how to create these components.

Create an RDS for PostgreSQL instance

For this use case, we use PostgreSQL version 14. For instructions to create a database, see Create and Connect to a PostgreSQL Database with Amazon RDS.

For this post, we use a DVD rental sample database, which contains the following objects:

15 tables

1 trigger

7 views

8 functions

1 domain

13 sequences

To set up the source database, log in to PostgreSQL from the command prompt and use the following sample syntax to connect:

psql “host=postgres.xxxx11hxxxxx.us-east-1.rds.amazonaws.com port=5432 dbname= dvdrental user= USERNAME password= PASSWORD”

The following code shows examples of our database details:

Configure source database permissions

Configuration changes are required for the source and target databases to process the CDC.

On the source database, grant superuser permissions for the database user that you specified in AWS DMS for your PostgreSQL source database. The database user needs superuser permissions to access replication-specific functions in the source. For a full load data migration, your database user needs SELECT permissions on tables to migrate them. See the following syntax:

For Amazon RDS for PostgreSQL as source

Use the AWS primary user account for the PostgreSQL DB instance as the user account for the PostgreSQL source data provider for homogeneous data migrations in AWS DMS. The primary user account has the required roles that allow it to set up CDC. If you use an account other than the primary user account, the account must have the rds_superuser role and the rds_replication role. The rds_replication role grants permissions to manage logical slots and stream data using logical slots. See the following syntax:

Self-managed PostgreSQL database as source

If you’re using a self-managed PostgreSQL database as the source, modify the following settings at the parameter group level, which is mapped to the database:

wal_level – Set to logical.

max_replication_slots – Set to a value greater than 1.

max_replication_slots – Set to a value greater than 1.

max_wal_senders – Set to a value greater than 1.

max_wal_senders – Set to a value greater than 1.

wal_sender_timeout – Set the value to 0. This parameter ends replication connections that are inactive longer than the specified number of milliseconds. The default is 60,000 milliseconds (60 seconds). Setting the value to 0 disables the timeout mechanism.

After you update the preceding parameters, restart your PostgreSQL source database.

For this use case, we use Amazon Aurora PostgreSQL version 15.

Create an IAM policy and role

You also need an AWS Identity and Access Management (IAM) policy and for role.

Configure target database permissions

AWS DMS requires certain permissions to migrate data to your target RDS for PostgreSQL or Aurora PostgreSQL database.

The following script creates a database user with the required permissions in your PostgreSQL target database:

Because AWS DMS uses a subscriber and publisher method for this homogeneous migration, you need to set the rds.logical_replication parameter in your DB parameter group to 1, and reboot the database. The reboot is needed to make sure the updated parameter group changes are reflected.

You can store your source and target database credentials in AWS Secrets Manager. For more details, refer to Move hardcoded database credentials to AWS Secrets Manager.

Next, you create the data providers, subnet group, and instance profile.

Create the data providers

The procedure to create the data providers is similar to the source database endpoint in AWS DMS.

Create the source provider

You can use a PostgreSQL database as a source for homogeneous data migrations in AWS DMS. In this case, your source data provider can be on premises, hosted on Amazon Elastic Compute Cloud (Amazon EC2), or an RDS for PostgreSQL database. As of this writing, Amazon Aurora PostgreSQL is not supported as source provider.

Provide a source provider name that is uniquely identifiable for your migration project, and specify the engine type, server name, port, database name, and SSL mode.

Create the target provider

Add a target provider name that is uniquely identifiable for your migration project, and specify the engine type, server name, port, database name, and SSL mode.

Create an instance profile

AWS DMS creates a serverless environment for homogeneous data migrations. When you create your instance profile, you specify the VPC to use. You can use your default VPC for your account and Region, or you can create a new VPC. For each data migration, AWS DMS establishes a VPC peering connection with the VPC that you used for your instance profile. Next, AWS DMS adds the CIDR block in the security group that is associated with your instance profile. Because AWS DMS attaches a public IP address to your instance profile, all your data migrations that use the same instance profile have the same public IP address. When your data migration stops or fails, AWS DMS deletes the VPC peering connection.

Create a migration project

Now you can create a migration project using the resources that you created from the earlier steps.

On the AWS DMS console, create a new data migration task.

The following screenshot shows our created migration project.

Create and run a data migration task

On the AWS DMS console, create a new data migration task.

You have two options for the stop mode:

Don’t stop CDC – When the full load is complete, CDC will start immediately and the task will be in a running state. This option is for continuous data replication.

Using a server time point – When the full load is complete, you can mention the CDC stop point in the source server in UTC. This allows you to stop the migration task at the specified time.

When the migration task is complete, start the task.

Validate the migration

After the data has been migrated, you can see the objects on the target side. Log in to your target PostgreSQL database and run the dt command to list the relations and di to list the indexes:

psql “host=postgres.xxxx11hxxxxx.us-east-1.rds.amazonaws.com port=5432 dbname= dvdrental user= USERNAME password= PASSWORD”

Test a CDC scenario

When the migration has started, the publication slot is created at the source database.

You can view the tables that are under the publication slot by running the following queries:

Note : pubname should be the publication id

To test a CDC scenario on the source, we insert and update data in a sample table from the dvdrental database.

On target database end, the subscriber started with ID 21838, as shown in the following screenshot.

You can view the following updates on the target.

In case of any issues or verifications during the migration, refer to the source and target database logs.

The following screenshot shows an example of the source database log.

The following target database log shows a warning related to max_logical_replication_workers. Increasing the max_logical_replication_workers parameter reduces the overall migration time.

Limitations

This solution has the following limitations:

The user name to connect to the source has the following requirements:

Must be 2–64 characters in length.

Can’t have spaces.

Can include the following characters: a–z, A–Z, 0–9, underscore (_).

Must start with a–z or A–Z.

The password to connect to the source has the following requirements:

Must be 1–128 characters in length.

Can’t contain any of the following: single quote (‘), double quote (“), semicolon (;) or space.

Homogeneous data migrations don’t support selection rules. Also, you can’t use transformation rules to change the data type of columns, move objects from one schema to another, or change the names of objects.

Homogeneous data migrations don’t provide a built-in tool for data validation.

When using homogeneous data migrations with PostgreSQL, AWS DMS migrates views as tables to your target database.

Homogeneous data migrations don’t capture schema-level changes during an ongoing data replication. If you create a new table in your source database, then AWS DMS can’t migrate this table. To migrate this new table, restart your data migration.

You can’t use homogeneous data migrations in AWS DMS to migrate data from a higher database version to a lower database version.

You can’t use homogeneous data migrations in the AWS Command Line Interface (AWS CLI) or API.

Homogeneous data migrations don’t support establishing a connection with database instances in VPC secondary CIDR ranges.

You can’t use the 8081 port for homogeneous migrations from your data providers.

Troubleshooting homogeneous database migrations

If you encounter a connectivity issue that led to data migration task failure, check the following:

Make sure that the source and target instance security groups contain rules for any traffic inbound or outbound for the data IP/port. Also, verify the NACL and route table rules.

On-premises and Amazon EC2 databases should be accessible through the VPC created. If not, add the following in your security groups to allow lists of input connections in your firewall:

Add the public IP of the on-premises instance.

Add the public IP of the AWS-managed database.

Add the data migration task elastic IP address (after the task is created, an elastic IP is generated).

For CDC connectivity, both the source and target databases should be able to communicate with each other.

Make sure that the AWS DMS homogeneous instance elastic IP addresses are added in the source and target database security groups (database ports).

You may also encounter a task failure due to missing prerequisites. The error message can be similar to the following.

In this case, logical replication should be enabled on both the source and target database.

Log file

You can view the database migration progress and logs on the details page of the migration project, as shown in the following screenshot.

The following is a sample Amazon CloudWatch log from our migration project:

Clean up

AWS resources created by the AWS DMS homogeneous migration incur costs as long as they are in use. When you no longer need the resources, clean them up by deleting the associated data migration under the migration project, along with the migration project.

Conclusion

This post discussed the end-to-end process to configure and migrate data using AWS DMS homogeneous migration and the PostgreSQL DB engine. The latest AWS DMS homogeneous migration feature can migrate from PostgreSQL to Amazon Aurora PostgreSQL and Amazon RDS for PostgreSQL with ease and minimal effort.

Leave your thoughts, questions, and comments in the comments section.

About the Authors

Nagarjuna Paladugula is a Senior Cloud Support Engineer at AWS Professional Services, specialized in Oracle, Amazon RDS for Oracle, and AWS DMS. He has over 19 years’ experience on different database technologies, and uses his experience to offer guidance and technical support to customers to migrate their databases to the AWS Cloud. Outside of work, Nagarjuna likes traveling, watching movies and web series, and running.

Bhavani Akundi is a Senior Lead Database consultant at AWS Professional Services. She helps AWS customers migrate and modernize their databases and applications to AWS.

InduTeja Aligeti is a Senior Lead Database Consultant at AWS. She has over 18 years of experience working with Microsoft technologies with a specialization in SQL Server. She focuses on helping customers build highly available, cost-effective database solutions and migrate their large-scale SQL Server databases to AWS.

Source: Read More