The process outlined in this post was co-developed and tested with Ionut Bruma, a Solutions Architect in the Capital Markets division at London Stock Exchange Group (LSEG).

In this post we share how the London Stock Exchange Group (LSEG) Capital Markets Business unit improved their Blue/Green software deployment methodology, by using continuous logical database replication.

Previously, deployments included the need to manually backup and restore the production database to create the Green environment. This step was time consuming and involved shutting down the production database in order to keep both Blue and Green environments consistent during deployment.

Using logical replication, the backup and restore is replaced by continuous replication. This considerably minimises the deployment time by reducing the downtime of the Blue environment.

Blue/green deployment is a strategy to implement changes to a website, application, or database by interchanging production and staging environments. The Blue environment represents the primary or active backend, and the Green environment is a replica that is staged and synchronized with the live environment. The process involves making changes or upgrades to the Green environment and then switching over, thereby minimizing downtime and enabling you to roll back to the Blue environment if problems arise.

Without a Blue/green methodology, deployments involve production downtime, where all activities are performed during non-business hours, incurring additional operational costs and reduction in availability. As part of software deployment, you can use Blue/green deployment to achieve more agility in the deployment process by minimizing the downtime of the production system. This allows the internal product teams to shorten the time to market for new features as well as reduce deployment efforts.

In addition to supporting database version upgrades and select database schema changes, this type of deployment also helps during internal testing of application changes by mirroring production configurations. Eliminating the need to back up and restore the production database for each iteration of a test cycle provides a significant advantage.

Amazon Aurora PostgreSQL-Compatible Edition and Amazon Relational Database Service (Amazon RDS) for PostgreSQL both have the Amazon RDS Blue/Green Deployments for Aurora. Managed Blue/green deployment vastly simplifies upgrades and schema changes, but is not supported in conjunction with some Amazon Aurora features such as Amazon Aurora Global Database.

In this post, we show you the process of implementing a Blue/green deployment architecture using Aurora PostgreSQL Global Database. Specifically, we explore best practices and considerations when configuring the architecture. Blue/green deployment serves as a robust and efficient approach to make sure applications stay resilient and synchronized throughout the process.

Solution overview

Our application serves internal users through Amazon Route 53, resolving to the internal Application Load Balancer that distributes traffic to a Kubernetes cluster deployed across three Availability Zones. The application layer performs CRUD operations against an Aurora global database.

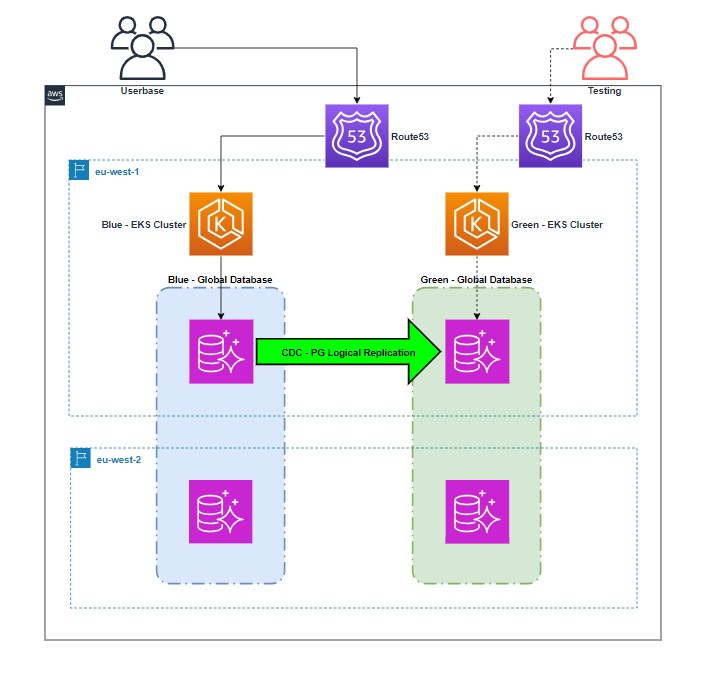

The following diagram illustrates the architecture of the testing phase.

The following diagram illustrates the step of cutting traffic to the Blue cluster.

The following diagram illustrates the step of stopping replication from Blue to Green.

This is done after you have verified that there is no user activity, application, or batch job actively accessing the database.

The following diagram illustrates the step of pointing traffic to the Green cluster.

From this point forward, the Green environment serves as the primary production environment until the next iteration.

In the following sections, we detail the steps to implement a Blue/green deployment:

Configure the cluster parameter group associated with the source (Blue) Aurora global database cluster, to enable logical replication.

Create a publication of the database hosted on the Blue cluster.

Create a clone of the Blue cluster using the Aurora cloning feature, which creates the Green (target) cluster.

Configure the subscription from the Green cluster to the Blue publication.

Verify replication lag.

Convert the Green cluster into an Aurora global database.

Perform pre-cutover validation.

Alter sequences and drop the subscription.

Cut over to the Green cluster.

We use native PostgreSQL logical replication to synchronize the Green environment to provide the ongoing CDC capability. The logical replication process is asynchronous. For more information, refer to Using logical replication to perform a major version upgrade for Aurora PostgreSQL.

The target database is in a writable state, open to both Data Definition Language (DDL) and Data Manipulation Language (DML) operations outside of replication. It is strongly recommended that you take appropriate measures to ensure that DML and DDL changes are applied to the intended database.

Prerequisites

Complete the following prerequisites:

Create an Aurora PostgreSQL cluster configured with a global database.

Note that Aurora Global Database doesn’t support managing users via AWS Secrets Manager. We discuss alternative security methods later in this post.

Review the limitations of logical replication with Amazon Aurora PostgreSQL.

Confirm that you meet the relevant requirements, for example a user with the rds_superuser role granted to it

Establish connectivity to the DB cluster via a PostgreSQL client (for example, psql or pgAdmin).

Confirm access to the AWS control plane via the AWS Management Console or AWS Command Line Interface (AWS CLI).

Note that as of this writing, Amazon RDS Proxy doesn’t support streaming replication mode. If you’re using RDS Proxy, you should use the regular cluster endpoint and ignore RDS Proxy for the replication process.

Required permissions

There are two levels of permissions required for the process:

Control plane (AWS resource layer) – These permissions grant the ability to modify the Aurora clusters involved, as well as to create and modify a new cluster via the console or API

Data plane (Aurora data layer) – These permissions grant the ability to create the replication configuration using a PostgreSQL client

The following table summarizes the relevant solution steps and whether they are performed via the control plane or data plane.

Step Number

Step Description

Control Plane

Data Plane

1

Configure the Blue cluster parameter group for logical replication

X

–

2

Create a publication of the database hosted on the Blue cluster

–

X

3

Create a clone of the Blue cluster

X

–

4

Configure the subscription from the Green cluster to the Blue publication

–

X

5

Verify replication lag

–

X

6

Convert the Green cluster into an Aurora global database

X

–

7

Perform pre-cutover validation

–

X

8

Alter sequences and drop the subscription

–

X

Configure the Blue cluster parameter group

Aurora clusters and Aurora instance parameter groups are Regional resources. Make sure that these resources are configured in the target Region with the desired settings. Typically, the parameter settings in the target Region will mirror your source RDS cluster and RDS instance parameter groups.

The instance should use a custom DB cluster parameter group with the following settings:

rds.logical_replication – Configure this parameter to 1. The rds.logical_replication parameter serves the same purpose as a standalone PostgreSQL server’s wal_level parameter and other parameters that control the write-ahead log file management. (This change will require the instances to be rebooted, if the parameter group is created subsequently.)

max_replication_slots – Configure this parameter to the total number of subscriptions that will be created.

max_wal_senders – Configure this parameter to the number of concurrent connections, with additional overhead for management tasks and new sessions. If you’re using AWS DMS, the number of max_wal_senders should be the sum of the number of concurrent sessions and the number of AWS DMS tasks that may be operational at any given time.

max_logical_replication_workers – Configure this parameter to the anticipated number of logical replication workers and table synchronization workers. It is typically advised to set the number of replication workers to the same value used for max_wal_senders. The workers are drawn from the pool of background processes (max_worker_processes) allocated for the server.

max_worker_processes – Configure this parameter to the number of background processes for the server. The allocation should be sufficient for replication, auto-vacuum processes, and other maintenance processes that might occur concurrently.

Create a publication of the database on the Blue cluster

Complete the following steps to create a publication of the database on the Blue cluster:

Connect to the source database on the Blue cluster

Run the following query to confirm the settings:

Create a publication:

Create a replication slot:

Create a non-admin account to serve replication only, and grant the following permissions in the source database:

Create a clone of the Blue cluster

Create the Green cluster by creating a clone of the Blue cluster. For instructions on creating a clone of the Blue cluster (to serve as the Green cluster), refer to Cloning a volume for an Amazon Aurora DB cluster.

Configure the subscription in the Green database

After the clone is provisioned and in an available state, complete the following steps:

Connect to the target database on the Green cluster

Retrieve the log position from the cluster or writer endpoint and store it in a secure location. This will be used to start the subscription at the point the clone was created, so no data is lost, and no duplicate changes are processed. To do this run the following query:

Drop the replication slot on the Green instance only:

Drop the publication from the Green instance only:

Create a subscription to the Blue instance:

Retrieve the roname value, which is the identifier of the replication origin:

Use the roname output from the previous step to configure the start position in the publication. The log_sequence_number is the output of aurora_volume_logical_start_lsn(); from Step 2.

Enable the subscription:

Verify replication lag

Confirm that the replication is configured and replicating data:

Connect to the source database on the Blue cluster

Run the following query to confirm:

Optionally, you can log the preceding query in a table at a specified frequency using the pg_cron extension to track replication lag over time.

Note that although Amazon CloudWatch publishes various metrics related to replication and replication lag, but note these apply to managed read replicas and global database.

Convert the Green cluster into an Aurora global database

It is at your discretion whether you perform this step now or post-deployment.

Make sure that the configuration of the cluster parameter and instance groups is in the Region intended to be added to the global database. For more information, refer to Adding an AWS Region to an Amazon Aurora global database.

At this stage, the replication is configured and the system is prepared for the deployment of changes to the Green environment.

Once completed, you can perform deployment validation and testing on the Green environment, which might involve completing the rest of the steps in this guide to simulate the end-to-end cutover process.

Once testing is complete, the Green environment can simply be dropped and re-instantiated in preparation for the live deployment.

Perform pre-cutover validation

You’re now able to cut over from the Blue environment to the Green environment. The following validation steps might involve multiple teams to complete:

Stop writes to the Blue environment (using your preferred method).

Connect to the source database on the Blue cluster

Run the following query to ensure that replication has caught up. When the diff_size and diff_bytes values reach 0, the replica has caught up to the source cluster:

Run the following query against the pg_stat_activity system view and confirm that all non-system connections have been removed from the Blue cluster:

Cancel any active connections still open:

Use your preferred method to validate between the Blue and Green environments’ datasets—for example, object and row counts, binary checksums, or third-party tooling.

If you’re using sequences, retrieve and store the current value of the sequences, because they need to be resynchronized to the Green environment later. Connect to the source database on the Blue cluster, and run the following code, which will output the required commands to be run:

Alter sequences and drop the subscription

If you’re using sequences, reset the sequence values to match the Blue environment from the replication validation step. You need to perform this step for all sequences that were replicated:

Connect to the target database on the Green cluster

Run the output from step 7 in the previous section to recede all sequences

Now you can drop the subscription from the Green environment:

Cut over to the Green cluster

This step marks the transition from the current Blue environment to the new Green environment. This involves all parties involved in supporting the deployment. Redirecting your workload to the Green environment could involve updating connection strings, Aurora endpoints, or CNAME records, among others. (Note that there is no global Aurora endpoint for the global database.) The specifics of how you manage this cutover depends on your preferred method.

Post-cutover steps

When the cutover is complete, stop the Blue cluster with a final backup. You can remove the Blue cluster at a later date in line with your organizational policies.

Clean up

If you have created one or more clusters for testing purposes only and need to remove them, complete the following steps to clean up your resources:

Remove all secondary DB clusters from the Aurora global database. Each cluster becomes a standalone Aurora DB cluster. For more details, see Removing a cluster from an Amazon Aurora global database.

From each standalone Aurora DB cluster, delete all Aurora replicas.

Remove the primary DB cluster from the Aurora global database. This becomes a standalone Aurora DB cluster.

From the Aurora primary DB cluster, first delete all Aurora replicas, then delete the writer DB instance.

For more information, refer to Deleting an Amazon Aurora global database.

Considerations

In this post, we have simplified the deployment process and assumed a standard set of configurations. Because this is a nuanced process, there might be multiple custom configurations that need to be validated against the process. In this section, we discuss some key things to consider.

Stopping write traffic to the Blue environment

PostgreSQL doesn’t have the concept of setting a database to read only. ALTER SYSTEM SET default_transaction_read_only TO on; is not safe as it does not affect existing connections and can be overridden at the transaction level.

Note that stopping instances temporarily is not supported in Aurora Global Database.

Consider the following options to prevent writes to the Blue environment during cutover:

Bring down or disable applications and services

Bring down or disable the application connection pool or write application connection pool

Change the port in the cluster parameter group. This will apply the change immediately, and will restart all instances in the cluster.

The following options require configuration changes to the Blue (live production) environment and must be carefully considered. This includes reversing them in the event that you encounter issues during the cutover to the Green environment.

Redirect all traffic to the Aurora read-only endpoint

Revoke login permissions from user accounts and cancel any existing connections after that:

Replication

Logical replication only replicates DML and TRUNCATE operations for data tables and indexes. For more information about further limitations, refer to Logical Replication in the PostgreSQL documentation.

Logical replication does not replicate any DDL or schema changes, with the exception of TRUNCATE. These must be done manually, and the subscription refreshed.

Consider if monitoring and alerting for the replication architecture is required. This could be as simple as a query scheduled via the pg_cron extension to check the replication lag.

Primary Keys

All source tables in a PostgreSQL logical replication publication must have a replica identity configured to replicate inserts and updates, which is usually a Primary Key. If a replica identity does not exist, inserts will be replicated but updates and deletes will throw the following error:

If there is no suitable key, you can use a unique index as the replica identity or use REPLICA IDENTITY FULL which means the entire row becomes the key.

If using REPLICA IDENTITY FULL this causes all column values to be written to the WAL which is inefficient and resource heavy, hence this should only be considered where no other solution exists.

Replication user credentials and security

As we mentioned earlier, Secrets Manager doesn’t support managing secrets in Aurora Global Database. To enhance security, consider using a non-admin account for running the replication process, and subsequently disabling or removing it after a successful cutover. Whichever account you use to initiate the subscription should either be removed or have the password changed immediately after cutover.

Another item to consider is setting log statements to none to ensure no password values are populated to CloudWatch:

Schema changes

It is possible to make schema changes to the Blue cluster, but these are not replicated to Green. Schema changes to the Green database tables do not necessarily break logical replication, but these must be considered very carefully in the scope they are being made. Adding indexes, stored procedures, functions, and (in certain cases) columns to tables doesn’t impact replication. Renaming tables, dropping columns, or changing data-types to incompatible types for replicated tables will cause replication to break.

Column lists came out in PostgreSQL 15 logical replication, which enable you to select the columns to replicate to subscribers on a table level.

Triggers

PostgreSQL replication is able to handle existing database triggers on the target database that haven’t been altered from their original state since being cloned or created at the subscriber.

As mentioned in the PostgreSQL documentation, the apply process on the subscriber database always runs with session_replication_role set to replica. This means that, by default, triggers and rules will not fire on a subscriber.

Users can optionally choose to enable triggers and rules on a table using the ALTER TABLE command and the ENABLE TRIGGER and ENABLE RULE clauses.

The session_replication_role is detailed in the PostgreSQL documentation

The intended use of this setting is that logical replication systems set it to replica when they are applying replicated changes. The effect of that will be that triggers and rules (that have not been altered from their default configuration) will not fire on the replica, with the exception of triggers configured as ENABLE ALWAYS at the table level. These will consistently be triggered irrespective of the session_replication_role setting.

Initial hydration of the Green environment

This is the phase where the initial dataset is populated, sometimes known as the full load. For the purposes of this process, we use Aurora cloning for simplicity and speed because this clones the entire cluster volume.

Validation and cutover

Prior to cutover, it is crucial to confirm that replication lag is zero and both datasets are identical. This guards against possible data loss in the cutover process. You should confirm that all applications and connections have been stopped and there are none still running by querying the pg_stat_activity or other catalog view. This should expose any connections that are still open unintentionally.

Consider removing any unexpected active connections, if any are still open by app services. This should be carefully considered to ensure there are no unexpected consequences.

See the following code:

As we mentioned earlier, validation is strongly recommended to ensure you have identical datasets prior to cutover. Consider the following methods:

Object and row counts

Binary checksums

Third-party tooling

Performing the procedure across accounts

Assuming connectivity is possible between the clusters on the database port, this process can work across AWS accounts.

Conclusion

In this post, we outlined the process for performing Blue/green deployments for Aurora PostgreSQL Global Database instances. With this technique, you can achieve more agility during the deployment process by reducing the deployment time and the total downtime of the production system. This solution offers a fast way to snapshot a production environment and perform tests on the new version of the application prior to production deployment. There are also cost improvements associated with this process, because you can move operational activities outside the maintenance window, which most often is scheduled during non-business hours.

We welcome your feedback; try out the steps detailed in this post and let us know your thoughts in the comments.

About the Authors

Ionut Bruma is a Solutions Architect at LSEG where he builds and manages various solutions in Capital Markets division. He has extensive experience in crafting robust data management solutions tailored to support diverse business applications. Over the course of his 15 years career, Ionut has seamlessly transitioned through a spectrum of roles, spanning from providing technical support to assuming responsibilities in database administration and customer engineering. His professional journey has traversed multiple industries, including Information Technology, retail, and financial services, where he has honed his expertise.

Jonathan Kerr is a Sr. Solutions Architect for Databases at AWS based in London UK. He specialises in technical solutioning and providing strategic guidance to large enterprises in the Financial Services Industry, at all stages of the cloud database journey. In his role he helps customers migrate, modernise, and build high performance database solutions, leveraging the comprehensive suite of AWS database services.

Inthu Anandakumar is a Technical Account Manager at AWS, based in London. Inthu currently works with strategic financial services organisations, helping them architect, build, and optimise cloud applications to drive business value. With a background in software development and solution architecture, he thrives on crafting innovative solutions and enjoys sharing his discoveries with customers across a wide array of sectors.

Richard Chester is a Principal Solutions Architect at AWS, advising large Financial Services organisations. He has 25+ years’ experience across the Financial Services Industry where he has held leadership roles in transformation programs, DevOps engineering, and Development Tooling. Since moving across to AWS from being a customer, Richard is now focused on driving the execution of strategic initiatives, mitigating risks and tackling complex technical challenges for AWS customers.

Source: Read More