Large Language Models (LLMs) have made significant advances in Information Extraction (IE), the Natural Language Processing (NLP) task of identifying and extracting specific pieces of information from text. LLMs have shown strong results in IE, especially when combined with instruction tuning. Through instruction tuning, these models are trained to annotate text according to predetermined guidelines, which improves their ability to generalize to new datasets. As a result, the models can perform IE tasks successfully on unseen data simply by following instructions.

However, even with these improvements, LLMs still face many difficulties when working with low-resource languages. These languages lack both the unlabeled text required for pre-training and the labeled data required for fine-tuning models. Due to this lack of data, it is challenging for LLMs to attain good performance in these languages.

To overcome this, a team of researchers from the Georgia Institute of Technology has introduced the TransFusion framework. In TransFusion, models are fine-tuned to work with English translations of low-resource language data. With this method, the model can draw on both the original low-resource language text and its English translation to make more accurate predictions.

This framework aims to effectively enhance IE in low-resource languages by utilizing external Machine Translation (MT) systems. There are three primary steps involved, which are as follows:

Translation during Inference: Converting low-resource language data into English so that a high-resource model can annotate it.

Fusion of Annotated Data: Fusing the original low-resource language text with the annotated English translation, using a model trained to work with both types of data.

TransFusion Reasoning Chain: Integrating both annotation and fusion into a single autoregressive decoding pass.
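The three steps above can be sketched roughly as follows. This is only a minimal illustration, not the authors' implementation: the names `TransFusionExample`, `build_fusion_input`, and `transfusion_pipeline`, the prompt layout, and the stub functions are all assumptions made for this example. The sketch also keeps annotation and fusion as separate calls for readability, whereas the reasoning chain described above performs both in one decoding pass.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

Entity = Tuple[str, str]  # (surface text, label)


@dataclass
class TransFusionExample:
    """Hypothetical container for one fused input."""
    source_text: str                 # original low-resource language text
    english_text: str                # machine translation of source_text
    english_entities: List[Entity]   # annotations made on the translation


def build_fusion_input(example: TransFusionExample) -> str:
    """Serialize the original text, translation, and English annotations.

    The layout is an assumed format, chosen only for illustration.
    """
    annotations = "; ".join(
        f"{label}: {text}" for text, label in example.english_entities
    )
    return (
        f"Text: {example.source_text}\n"
        f"English translation: {example.english_text}\n"
        f"English annotations: {annotations}\n"
        f"Annotations for the original text:"
    )


def transfusion_pipeline(
    source_text: str,
    translate: Callable[[str], str],
    annotate_english: Callable[[str], List[Entity]],
    fuse: Callable[[str], Any],
) -> Any:
    """Run translate -> annotate -> fuse with caller-supplied components."""
    english = translate(source_text)               # step 1: external MT system
    english_entities = annotate_english(english)   # step 1: high-resource annotation
    example = TransFusionExample(source_text, english, english_entities)
    return fuse(build_fusion_input(example))       # step 2: fusion model


if __name__ == "__main__":
    # Stub components standing in for a real MT system and real models.
    prediction = transfusion_pipeline(
        "Barack Obama alitembelea Nairobi.",
        translate=lambda text: "Barack Obama visited Nairobi.",
        annotate_english=lambda text: [("Barack Obama", "PERSON"), ("Nairobi", "LOCATION")],
        fuse=lambda prompt: prompt,  # echo the fused prompt instead of calling a model
    )
    print(prediction)
```

Passing the components in as callables keeps the sketch independent of any particular translation service or model API.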

Building on this framework, the team has also introduced GoLLIE-TF, a cross-lingual, instruction-tuned LLM tailored specifically for IE tasks. GoLLIE-TF aims to reduce the performance disparity between high- and low-resource languages. The combined goal of the TransFusion framework and GoLLIE-TF is to increase LLMs’ effectiveness when handling low-resource languages.

Experiments on twelve multilingual IE datasets, covering a total of fifty languages, have shown that GoLLIE-TF works well. Compared with the base model, GoLLIE-TF achieves better zero-shot cross-lingual transfer. This means that, without further training data, it can more effectively apply its acquired skills to new languages.

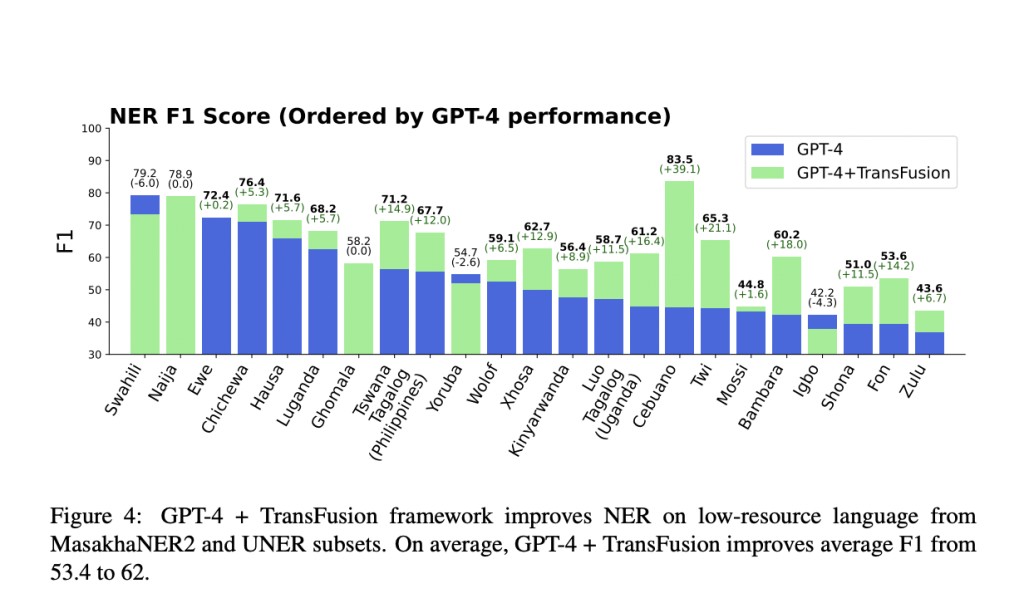

Applying TransFusion to proprietary models such as GPT-4 also considerably improves named entity recognition (NER) in low-resource languages. With a prompting approach, GPT-4’s performance increased by 5 F1 points. Further improvements were obtained by fine-tuning various types of language models using the TransFusion framework: decoder-only architectures improved by 14 F1 points, while encoder-only architectures improved by 13 F1 points.
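For readers unfamiliar with the metric, NER results are commonly reported as entity-level F1, the harmonic mean of precision and recall over predicted entity spans, and one "F1 point" is one hundredth on that 0 to 1 scale. The sketch below shows one common exact-match formulation. The function name `entity_f1` and the span representation are assumptions for illustration, and this article does not describe the exact evaluation protocol used in the paper.

```python
from typing import Set, Tuple

Span = Tuple[int, int, str]  # (start offset, end offset, label)


def entity_f1(gold: Set[Span], predicted: Set[Span]) -> float:
    """Exact-match entity-level F1 on a 0 to 1 scale."""
    true_positives = len(gold & predicted)
    if true_positives == 0:
        # Also covers the cases where either set is empty.
        return 0.0
    precision = true_positives / len(predicted)
    recall = true_positives / len(gold)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    gold = {(0, 12, "PERSON"), (25, 32, "LOCATION"), (40, 46, "ORG")}
    predicted = {(0, 12, "PERSON"), (25, 32, "LOCATION")}
    # Precision is 2/2 and recall is 2/3 for these toy spans.
    print(f"F1 = {100 * entity_f1(gold, predicted):.1f} points")
```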

In conclusion, TransFusion and GoLLIE-TF together provide a potent solution for enhancing IE tasks in low-resource languages. By utilizing English translations and fine-tuning models to fuse annotations, the approach shows notable improvements across many models and datasets and helps to reduce the performance gap between high-resource and low-resource languages.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post TransFusion: An Artificial Intelligence AI Framework To Boost a Large Language Model’s Multilingual Instruction-Following Information Extraction Capability appeared first on MarkTechPost.
