Recent language models such as GPT-3 and its successors have shown remarkable performance improvements simply by predicting the next word in a sequence, given larger training datasets and increased model capacity. A key feature of these transformer-based models is in-context learning, which allows a model to learn a task by conditioning on a series of examples, without explicit training. However, the working mechanism of in-context learning is still only partially understood. Researchers have explored the factors that affect it and found that accurate examples are not always necessary for it to be effective, whereas the structure of the prompt, the model’s size, and the order of the examples significantly affect the results.

This paper explores three existing methods of in-context learning in transformers and large language models (LLMs) by conducting a series of binary classification tasks (BCTs) under varying conditions. The first method focuses on the theoretical understanding of in-context learning, aiming to link it with gradient descent (GD). The second is the practical understanding, which looks at how in-context learning works in LLMs, considering factors such as the label space, the input text distribution, and the overall sequence format. The third is learning to learn in context, for which MetaICL is used: a meta-training framework that fine-tunes pre-trained LLMs on a large and diverse collection of tasks.

Researchers from the Department of Computer Science at the University of California, Los Angeles (UCLA) have introduced a new perspective by viewing in-context learning in LLMs as a unique machine learning algorithm. This conceptual framework makes it possible to use traditional machine learning tools to analyze decision boundaries in binary classification tasks. Visualizing these decision boundaries in linear and non-linear settings yields insights into the performance and behavior of in-context learning. The approach also probes the generalization capabilities of LLMs, providing a distinct perspective on the strength of their in-context learning performance.
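
To make the idea of a decision-boundary visualization concrete, here is a minimal sketch. It treats any classifier as a black-box function from a 2-D point to a label, queries it on a regular grid, and reports how often neighboring grid cells disagree. The 1-nearest-neighbor classifier built by `make_stand_in_classifier` is a hypothetical placeholder for an LLM prompted with in-context examples, and `roughness` is an illustrative metric assumed here, not one taken from the paper.

```python
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float]
Classifier = Callable[[Point], int]


def label_grid(classify: Classifier, lo: float, hi: float, steps: int) -> List[List[int]]:
    """Query the classifier on a steps x steps grid covering [lo, hi]^2 (steps >= 2)."""
    step = (hi - lo) / (steps - 1)
    return [
        [classify((lo + col * step, lo + row * step)) for col in range(steps)]
        for row in range(steps)
    ]


def roughness(grid: Sequence[Sequence[int]]) -> float:
    """Fraction of horizontally or vertically adjacent cell pairs with different labels.

    Illustrative metric (an assumption of this sketch): a single clean boundary
    gives a small value, a fragmented boundary gives a larger one.
    """
    differing = total = 0
    for r, row in enumerate(grid):
        for c, label in enumerate(row):
            if c + 1 < len(row):  # neighbor to the right
                total += 1
                differing += label != row[c + 1]
            if r + 1 < len(grid):  # neighbor below
                total += 1
                differing += label != grid[r + 1][c]
    return differing / total


def make_stand_in_classifier(examples: Sequence[Tuple[Point, int]]) -> Classifier:
    """Hypothetical stand-in for an LLM: 1-nearest-neighbor over the in-context examples."""
    def classify(p: Point) -> int:
        nearest = min(
            examples,
            key=lambda ex: (ex[0][0] - p[0]) ** 2 + (ex[0][1] - p[1]) ** 2,
        )
        return nearest[1]
    return classify


if __name__ == "__main__":
    examples = [((-1.0, 0.0), 0), ((1.0, 0.0), 1)]
    grid = label_grid(make_stand_in_classifier(examples), -2.0, 2.0, 21)
    for row in grid:
        print("".join(".#"[label] for label in row))  # crude text rendering of the boundary
    print("roughness:", round(roughness(grid), 3))
```

In a real experiment, `classify` would wrap a call that prompts the model with the in-context examples plus the query point and parses the predicted label; the grid and metric code would stay the same.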

The researchers’ experiments focused mainly on answering these questions:

How do existing pre-trained LLMs perform on BCTs?

How do different factors influence the decision boundaries of these models?

How can we improve the smoothness of decision boundaries?

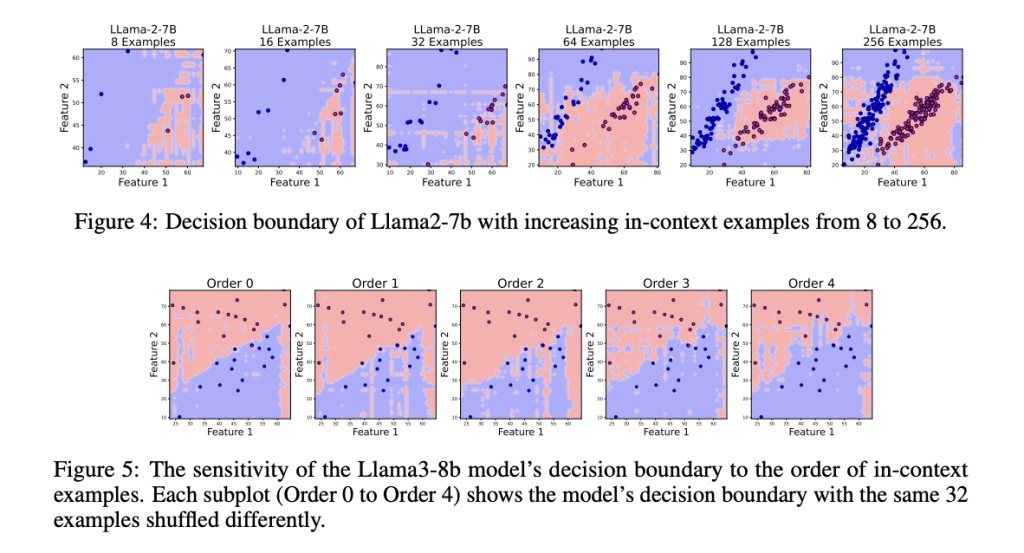

The decision boundaries of LLMs were explored on classification tasks by prompting the models with n in-context examples of a BCT, with an equal number of examples for each class. Using scikit-learn, three types of datasets were created to represent different decision-boundary shapes: linear, circular, and moon-shaped. Various LLMs ranging from 1.3B to 13B parameters were examined, including open-source models such as Llama2-7B, Llama3-8B, Llama2-13B, Mistral-7B-v0.1, and sheared-Llama-1.3B.
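
The setup described above can be sketched roughly as follows. The article does not say which scikit-learn generators or which prompt template were used, so this sketch makes two assumptions: it hand-rolls balanced linear, circular, and moon-shaped point sets with the Python standard library only (in place of scikit-learn), and it serializes them with a hypothetical `Input: x y` / `Label: k` template.

```python
import math
import random
from typing import List, Tuple

Example = Tuple[Tuple[float, float], int]


def make_task(shape: str, n: int, rng: random.Random, noise: float = 0.1) -> List[Example]:
    """Return n labeled 2-D points, n // 2 per class, for the given boundary shape.

    `noise` is the Gaussian jitter applied to the circle and moon shapes.
    """
    if n % 2:
        raise ValueError("n must be even so that both classes are equally represented")
    examples: List[Example] = []
    for i in range(n):
        label = i % 2  # alternate labels to keep the classes balanced
        if shape == "linear":
            # Two Gaussian blobs separated along the x-axis.
            x, y = rng.gauss(-1.0 if label == 0 else 1.0, 0.5), rng.gauss(0.0, 0.5)
        elif shape == "circle":
            # Class 0 on an outer ring, class 1 on an inner ring.
            radius = 1.0 if label == 0 else 0.5
            angle = rng.uniform(0.0, 2.0 * math.pi)
            x = radius * math.cos(angle) + rng.gauss(0.0, noise)
            y = radius * math.sin(angle) + rng.gauss(0.0, noise)
        elif shape == "moon":
            # Two interleaving half circles.
            angle = rng.uniform(0.0, math.pi)
            if label == 0:
                x, y = math.cos(angle), math.sin(angle)
            else:
                x, y = 1.0 - math.cos(angle), 0.5 - math.sin(angle)
            x, y = x + rng.gauss(0.0, noise), y + rng.gauss(0.0, noise)
        else:
            raise ValueError(f"unknown shape: {shape}")
        examples.append(((x, y), label))
    rng.shuffle(examples)  # avoid a fixed 0, 1, 0, 1 ordering in the prompt
    return examples


def build_prompt(examples: List[Example], query: Tuple[float, float]) -> str:
    """Serialize in-context examples plus one unlabeled query (hypothetical template)."""
    lines = [f"Input: {x:.2f} {y:.2f}\nLabel: {label}" for (x, y), label in examples]
    lines.append(f"Input: {query[0]:.2f} {query[1]:.2f}\nLabel:")
    return "\n".join(lines)


if __name__ == "__main__":
    rng = random.Random(0)
    task = make_task("moon", 8, rng)
    print(build_prompt(task, (0.5, 0.25)))
```

The prompt ends with an open `Label:` so that the model's next-token prediction can be read as its classification of the query point.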

The experiments showed that fine-tuning LLMs on in-context examples does not result in smoother decision boundaries. For instance, when Llama3-8B was fine-tuned on 128 in-context learning examples, the resulting decision boundaries remained non-smooth. To improve decision-boundary smoothness, a pre-trained Llama model was therefore fine-tuned on a dataset of 1000 binary classification tasks generated with scikit-learn, whose decision boundaries were linear, circular, or moon-shaped with equal probability.

In conclusion, the research team has proposed a novel method for understanding in-context learning in LLMs by examining their decision boundaries on BCTs. Despite high test accuracy, the decision boundaries of LLMs were often found to be non-smooth. The factors that affect these boundaries were identified through experiments. Fine-tuning and adaptive sampling methods were also explored and proved effective in improving boundary smoothness. These findings provide new insights into the mechanics of in-context learning and suggest pathways for future research and optimization.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post A New Machine Learning Research from UCLA Uncovers Unexpected Irregularities and Non-Smoothness in LLMs’ In-Context Decision Boundaries appeared first on MarkTechPost.
