A significant challenge in applying Large Language Models (LLMs) to Information Retrieval (IR) is the heavy reliance on human-crafted prompts for zero-shot relevance ranking. Writing these prompts takes considerable effort and expertise, and the results are subjective. Relevance ranking also has complexities of its own, such as combining a query with long passages in a single input and producing a comprehensive relevance assessment, which existing methods address inadequately. Together, these issues limit how efficiently and scalably LLMs can be applied to IR tasks in real-world scenarios.

Current approaches rely mainly on manual prompt engineering, which can be effective but does not scale and varies with the expertise of the person writing the prompt. Existing automatic prompt engineering techniques, meanwhile, focus on simpler tasks such as language modeling and classification. Because their optimization processes are simpler, they handle the specific demands of relevance ranking, namely integrating query and passage pairs and ranking them comprehensively, suboptimally.

A team of researchers from Rutgers University and the University of Connecticut proposes APEER (Automatic Prompt Engineering Enhances LLM Reranking), which automates prompt engineering through iterative feedback and preference optimization. The approach minimizes human involvement by generating refined prompts from performance feedback and aligning them with preferred prompt examples. By refining prompts systematically, APEER aims to overcome the limitations of manual prompt engineering and to offer a scalable way to optimize LLM prompts for complex relevance ranking scenarios.

APEER first generates an initial prompt and then refines it through two optimization steps. In feedback optimization, it obtains performance feedback on the current prompt and generates a refined version. In preference optimization, it further improves that prompt by learning from sets of positive and negative prompt examples. APEER is trained and validated on multiple datasets, including MS MARCO, TREC-DL, and BEIR, to test the method’s robustness and effectiveness across various IR tasks and LLM architectures.
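To make the two steps concrete, here is a minimal Python sketch of a refinement loop in this spirit. It is not the authors' implementation: the function names (`optimize_prompt`, `toy_score`, `toy_feedback_refine`, `toy_preference_refine`) and the keyword-based scoring are hypothetical stand-ins. In a real system the scorer would measure ranking quality on a validation set and the two refiners would call an LLM.

```python
from typing import Callable, List, Tuple

def optimize_prompt(
    seed_prompt: str,
    score: Callable[[str], float],
    refine_with_feedback: Callable[[str, float], str],
    refine_with_preferences: Callable[[str, List[str], List[str]], str],
    rounds: int = 3,
) -> Tuple[str, float]:
    """Iteratively refine a prompt. All callables are hypothetical stand-ins."""
    best_prompt, best_score = seed_prompt, score(seed_prompt)
    positives: List[str] = []  # prompts that won a comparison
    negatives: List[str] = []  # prompts that lost a comparison
    for _ in range(rounds):
        # Feedback step: refine the current best prompt using its measured score.
        candidate = refine_with_feedback(best_prompt, best_score)
        cand_score = score(candidate)
        # Record the winner and loser as positive and negative examples.
        if cand_score > best_score:
            positives.append(candidate)
            negatives.append(best_prompt)
        else:
            positives.append(best_prompt)
            negatives.append(candidate)
        # Preference step: refine again, guided by the example sets.
        preferred = refine_with_preferences(candidate, positives, negatives)
        pref_score = score(preferred)
        # Keep whichever prompt scores highest so far.
        for prompt, value in ((candidate, cand_score), (preferred, pref_score)):
            if value > best_score:
                best_prompt, best_score = prompt, value
    return best_prompt, best_score

# Toy stand-ins so the sketch runs without an LLM (assumptions, not APEER's logic).
KEYWORDS = ["query", "passage", "rank", "relevance"]

def toy_score(prompt: str) -> float:
    # Fraction of target keywords mentioned; a placeholder for a ranking metric.
    return sum(k in prompt.lower() for k in KEYWORDS) / len(KEYWORDS)

def toy_feedback_refine(prompt: str, current_score: float) -> str:
    # Append the first missing keyword; a real refiner would prompt an LLM.
    for k in KEYWORDS:
        if k not in prompt.lower():
            return prompt + f" Consider the {k}."
    return prompt

def toy_preference_refine(prompt: str, pos: List[str], neg: List[str]) -> str:
    # Ignores the example sets here; a real refiner would condition on them.
    return toy_feedback_refine(prompt, 0.0)

if __name__ == "__main__":
    best, value = optimize_prompt(
        "Sort the documents.", toy_score, toy_feedback_refine, toy_preference_refine
    )
    print(best, value)
```

The loop only ever replaces the best prompt when a candidate scores strictly higher, so the returned score can never fall below that of the seed prompt.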

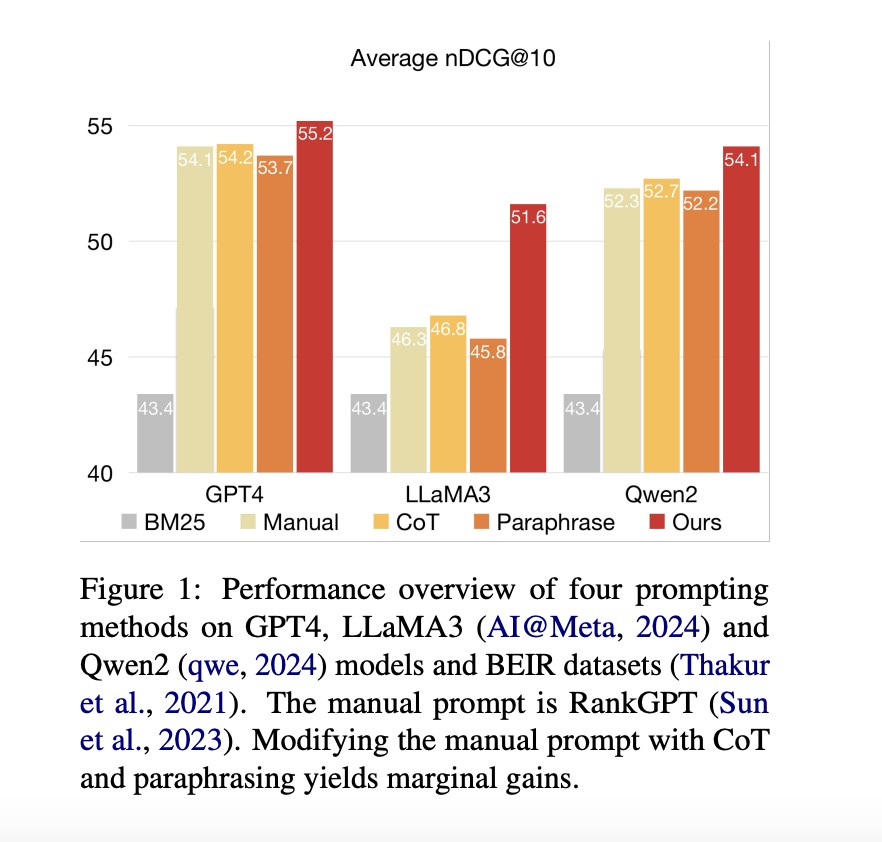

APEER demonstrates significant improvements in LLM performance on relevance ranking tasks. Key metrics such as nDCG@1, nDCG@5, and nDCG@10 show substantial gains over state-of-the-art manual prompts. For instance, on the LLaMA3 model, APEER achieved an average improvement of 5.29 in nDCG@10 over manual prompts across eight BEIR datasets. APEER’s prompts also transfer better across diverse tasks and LLM architectures, consistently outperforming baseline methods across various datasets and models, including GPT-4, LLaMA3, and Qwen2.
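For readers unfamiliar with the metric, nDCG@k rewards rankings that place highly relevant passages near the top of the first k results. The sketch below shows one common formulation, using linear gain and a log2 rank discount. Whether the paper's evaluation uses this exact variant is an assumption here (an exponential-gain variant is also widely used), and the helper names are illustrative. It also assumes the input list holds every judged passage for the query, so that the ideal ordering can be computed from it.

```python
import math
from typing import Sequence

def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    # Discounted cumulative gain: each label is divided by log2(position + 1),
    # where position is 1-based (enumerate is 0-based, hence rank + 2).
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances: Sequence[float], k: int) -> float:
    # Normalize by the DCG of the ideal (descending) ordering of the same labels.
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

if __name__ == "__main__":
    # Relevance labels of passages in the order a reranker returned them.
    print(ndcg_at_k([3, 2, 1, 0], k=10))  # already ideally ordered
    print(ndcg_at_k([0, 1], k=2))         # relevant passage ranked second
```

A perfectly ordered list scores 1.0, and pushing a relevant passage down the ranking lowers the score.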

In conclusion, APEER automates prompt engineering for LLMs in IR, addressing the reliance on human-crafted prompts. By combining iterative feedback with preference optimization, it reduces human effort and significantly improves LLM performance on relevance ranking across various datasets and models.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post APEER: A Novel Automatic Prompt Engineering Algorithm for Passage Relevance Ranking appeared first on MarkTechPost.
