Fireworks AI has released Firefunction-v2, an open-weights function-calling model designed for real-world applications. It supports multi-turn conversations, instruction following, and parallel function calling. According to Fireworks AI, its function-calling performance rivals high-end models such as GPT-4o at a fraction of the cost and with faster generation.

Introduction to Firefunction-v2

LLMs’ capabilities have improved substantially in recent years, particularly with releases like Llama 3. These advancements have underscored the importance of function calling, allowing models to interact with external APIs and enhancing their utility beyond static data handling. Firefunction-v2 builds on these advancements, offering a model for real-world scenarios involving multi-turn conversations, instruction following, and parallel function calling.

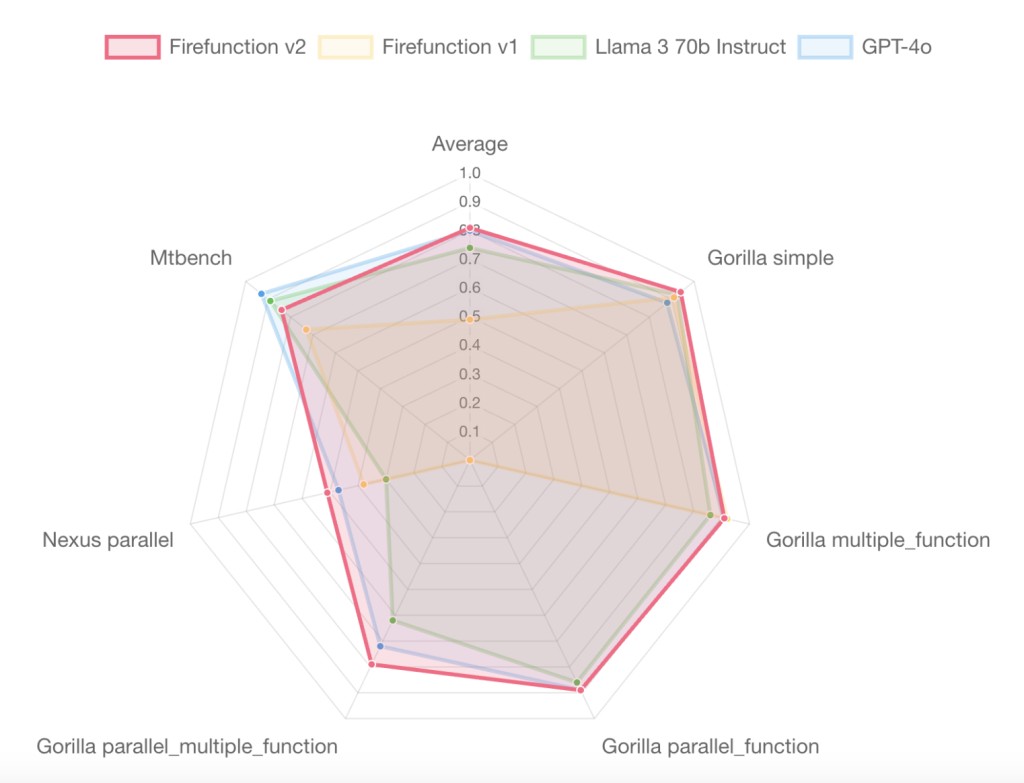

Firefunction-v2 retains Llama 3’s multi-turn instruction-following capability while significantly outperforming it on function-calling tasks. It scores 0.81 on a medley of public benchmarks, compared with GPT-4o’s 0.80, while being considerably cheaper and faster: Firefunction-v2 costs $0.90 per million output tokens versus $15 for GPT-4o, and it generates about 180 tokens per second versus GPT-4o’s 69.
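To make the price and speed gap concrete, the sketch below plugs the quoted figures into a simple per-workload calculation. The arithmetic is straightforward: 0.90 / 15 = 0.06, so output tokens cost about 6% as much, and 180 / 69 ≈ 2.6, so generation is roughly 2.6 times faster. The 10-million-token workload is a hypothetical example, and the timing ignores latency to first token and concurrent requests.

```python
# Figures quoted in this article: US dollars per million output tokens,
# and generation throughput in output tokens per second.
PRICE_PER_M = {"firefunction-v2": 0.90, "gpt-4o": 15.00}
TOKENS_PER_SEC = {"firefunction-v2": 180, "gpt-4o": 69}


def output_cost_usd(model: str, output_tokens: int) -> float:
    """Cost of generating `output_tokens` tokens at the quoted per-million price."""
    return output_tokens / 1_000_000 * PRICE_PER_M[model]


def generation_seconds(model: str, output_tokens: int) -> float:
    """Time to stream `output_tokens` tokens at the quoted throughput.

    Assumes a single sequential stream and ignores time to first token.
    """
    return output_tokens / TOKENS_PER_SEC[model]


if __name__ == "__main__":
    tokens = 10_000_000  # hypothetical workload
    for model in PRICE_PER_M:
        print(f"{model}: ${output_cost_usd(model, tokens):.2f}, "
              f"{generation_seconds(model, tokens) / 3600:.1f} h of generation")
    ratio = PRICE_PER_M["firefunction-v2"] / PRICE_PER_M["gpt-4o"]
    speedup = TOKENS_PER_SEC["firefunction-v2"] / TOKENS_PER_SEC["gpt-4o"]
    print(f"cost ratio: {ratio:.0%}, speedup: {speedup:.1f}x")
```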

The Creation Process

The development of Firefunction-v2 was driven by user feedback and the need for a model that excels at both function calling and general tasks. Many other open-source function-calling models sacrifice general reasoning ability for specialized performance; Firefunction-v2 aims to keep both. It was fine-tuned from the Llama3-70b-instruct model on a curated dataset that mixes function-calling and general conversation data, an approach intended to preserve the model’s broad capabilities while improving its function-calling performance.

Evaluation and Performance

Firefunction-v2 was evaluated on a mix of publicly available datasets and benchmarks, including Gorilla and Nexus. It outperformed its predecessor, Firefunction-v1, as well as Llama3-70b-instruct and GPT-4o, on a range of function-calling tasks. For example, it achieved higher scores in parallel function calling and multi-turn instruction following, indicating that it handles complex, multi-step requests well.

Highlighted Capabilities

Firefunction-v2’s capabilities are best illustrated through practical applications. The model reliably supports up to 30 function specifications, a significant improvement over Firefunction-v1, which struggled with more than five. This matters for real-world applications, where a single assistant may need access to many APIs. Firefunction-v2 also follows instructions well: it decides when a function call is actually needed and generates accurate calls, including several in parallel.
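In parallel function calling, the model returns several tool calls in one assistant turn, and the application, not the model, executes them and sends the results back. The minimal sketch below assumes the OpenAI-style tool-call message format (each call has an `id` and a `function` with a `name` and JSON-encoded `arguments`); the functions `get_weather` and `get_local_time`, the `REGISTRY` table, the `dispatch` helper, and the example calls are all hypothetical and not taken from Fireworks AI.

```python
import json
from typing import Any, Callable


# Hypothetical stand-ins for real APIs; a production app would call external services here.
def get_weather(city: str) -> dict:
    return {"city": city, "forecast": "sunny"}  # stub value, not real data


def get_local_time(city: str) -> dict:
    return {"city": city, "time": "12:00"}  # stub value, not real data


# Map tool names (as declared in the tool specs sent to the model) to local functions.
REGISTRY: dict[str, Callable[..., Any]] = {
    "get_weather": get_weather,
    "get_local_time": get_local_time,
}


def dispatch(tool_calls: list[dict]) -> list[dict]:
    """Execute every tool call from one assistant turn and build 'tool' messages.

    Each call is assumed to follow the OpenAI chat format:
    {"id": ..., "type": "function", "function": {"name": ..., "arguments": "<json>"}}
    """
    results = []
    for call in tool_calls:
        name = call["function"]["name"]
        fn = REGISTRY.get(name)
        if fn is None:
            # Report unknown functions back to the model instead of crashing.
            payload = {"error": f"unknown function: {name}"}
        else:
            payload = fn(**json.loads(call["function"]["arguments"]))
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],  # links each result to the call that produced it
            "content": json.dumps(payload),
        })
    return results


# A hand-written parallel-call turn for illustration (not real model output).
example_calls = [
    {"id": "call_1", "type": "function",
     "function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}},
    {"id": "call_2", "type": "function",
     "function": {"name": "get_local_time", "arguments": '{"city": "Tokyo"}'}},
]

for message in dispatch(example_calls):
    print(message)
```

Keying results by `tool_call_id` lets the model match each result to its request even when several calls are answered in the same turn.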

Getting Started with Firefunction-v2

Firefunction-v2 is accessible through Fireworks AI’s platform, which offers a speed-optimized setup with an OpenAI-compatible API. This compatibility allows users to integrate Firefunction-v2 into their existing systems with minimal changes. The model can also be explored through a demo app and UI playground, where users can experiment with various functions and configurations.
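Because the API is OpenAI-compatible, the official `openai` Python SDK (v1 or later) can in principle be pointed at Fireworks by changing only the base URL and model name. The sketch below assumes the endpoint `https://api.fireworks.ai/inference/v1`, the model id `accounts/fireworks/models/firefunction-v2`, and an API key stored in a `FIREWORKS_API_KEY` environment variable; verify all three against the Fireworks docs. The `get_weather` tool and the helper names are hypothetical.

```python
import json
import os

# Assumed values: Fireworks' OpenAI-compatible endpoint and the model id.
# Confirm both against the official Fireworks documentation before use.
FIREWORKS_BASE_URL = "https://api.fireworks.ai/inference/v1"
MODEL_ID = "accounts/fireworks/models/firefunction-v2"

# A hypothetical tool described in the OpenAI "tools" JSON Schema format.
WEATHER_TOOL = {
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}


def build_request(user_message: str) -> dict:
    """Return keyword arguments for client.chat.completions.create()."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [WEATHER_TOOL],
        "temperature": 0.0,
    }


def call_fireworks(user_message: str):
    # Imported lazily so the request payload can be built without the SDK installed.
    from openai import OpenAI

    client = OpenAI(base_url=FIREWORKS_BASE_URL,
                    api_key=os.environ["FIREWORKS_API_KEY"])  # assumed env var name
    response = client.chat.completions.create(**build_request(user_message))
    return response.choices[0].message


# Only hit the network when a key is configured.
if __name__ == "__main__" and "FIREWORKS_API_KEY" in os.environ:
    message = call_fireworks("What's the weather in Paris and in Tokyo?")
    for call in message.tool_calls or []:
        print(call.function.name, json.loads(call.function.arguments))
```

Keeping the payload in a separate `build_request` function makes it easy to inspect or unit-test the tool definitions without making a paid API call.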

Conclusion

Firefunction-v2 reflects Fireworks AI’s focus on advancing the function-calling capabilities of large language models. By balancing speed, cost, and performance, it offers a practical option for real-world AI applications. Positive feedback from the developer community and strong benchmark results suggest it could significantly change how function calls are integrated into AI systems. Fireworks AI continues to iterate on its models, guided by user feedback and a focus on practical solutions for developers.

Check out the Docs, model playground, demo UI app, and Hugging Face model page. All credit for this research goes to the researchers of this project.

The post Fireworks AI Releases Firefunction-v2: An Open Weights Function Calling Model with Function Calling Capability on Par with GPT4o at 2.5x the Speed and 10% of the Cost appeared first on MarkTechPost.
