Google AI researchers introduced Human I/O to address situationally induced impairments and disabilities (SIIDs). SIIDs are temporary challenges that hinder our ability to interact with technology because of environmental factors such as noise, lighting, and social norms. These impairments can significantly affect our ability to use our hands, vision, hearing, or speech in various situations, leading to a less efficient and more frustrating user experience. Because they are frequent and varied, it is difficult to devise one-size-fits-all solutions that adapt in real time to users’ needs.

Traditional methods for addressing SIIDs involve creating solutions tailored to particular situations, such as hands-free devices or visual notifications for hearing impairments. However, these approaches often fail to generalize across scenarios and do not adapt dynamically to the constantly changing conditions of real-life environments. In contrast, Google AI’s Human I/O is a unified framework that uses egocentric vision, multimodal sensing, and large language model (LLM) reasoning to detect and assess SIIDs. Human I/O provides a generalizable and extensible system that evaluates the availability of a user’s input/output channels (vision, hearing, vocal, and hand) in real time across various situations.

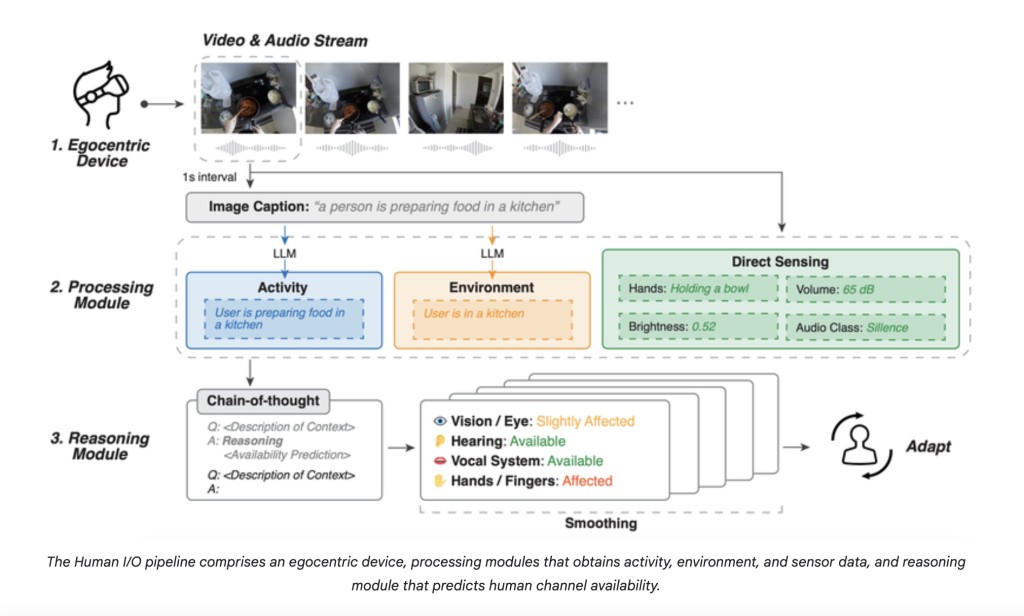

Human I/O operates through a comprehensive pipeline that includes data streaming, processing, and reasoning modules. The system begins by streaming real-time video and audio data from an egocentric device equipped with a camera and microphone. This first-person perspective captures the necessary environmental details. The processing module then analyzes this raw data to extract critical information. It employs computer vision for activity recognition, identifies environmental conditions (e.g., noise levels, lighting), and directly senses user-specific details such as hand occupancy. This detailed analysis provides a structured understanding of the user’s current context.
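To make the idea of a "structured understanding" concrete, here is a minimal Python sketch of what the processing module's output might look like before it is handed to the reasoning step. The `ContextSnapshot` class, its field names, and the `describe` helper are hypothetical and chosen for illustration; the article does not specify the actual data format Human I/O uses.

```python
import json
from dataclasses import dataclass, asdict


@dataclass
class ContextSnapshot:
    """Hypothetical summary of one moment of egocentric video and audio."""
    activity: str         # e.g. the label from an activity-recognition model
    environment: str      # e.g. "kitchen" or "street"
    noise_db: float       # ambient sound level estimated from the microphone
    lighting: str         # e.g. "dim", "normal", "bright"
    hands_occupied: bool  # directly sensed hand occupancy


def describe(snapshot: ContextSnapshot) -> str:
    """Serialize the snapshot as JSON so a later reasoning step can read it."""
    return json.dumps(asdict(snapshot), sort_keys=True)


# Illustrative values only; these are not taken from the Human I/O evaluation.
snapshot = ContextSnapshot(
    activity="washing dishes",
    environment="kitchen",
    noise_db=72.5,
    lighting="normal",
    hands_occupied=True,
)
context_json = describe(snapshot)
print(context_json)
```

Keeping the context as a small, explicit record like this is one plausible way to separate perception (what the sensors report) from reasoning (what that implies for each channel).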

The reasoning module uses LLMs with chain-of-thought reasoning to interpret the processed data and predict the availability of each input and output channel. By assessing the degree to which a channel is impaired, Human I/O can adapt device interactions accordingly. The system distinguishes between four levels of channel availability: available, slightly affected, affected, and unavailable, which allows for nuanced and context-aware adaptations. With 82% accuracy in predicting channel availability and a low mean absolute error in evaluations, Human I/O demonstrates robust performance.
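The four availability levels and the two reported metrics can be illustrated with a short Python sketch. It assumes the levels are coded as ordinal integers from 0 to 3, so that mean absolute error measures how many levels a prediction is off by; the article does not state the exact coding used in the evaluation. The `choose_output` rule is a hypothetical example of an adaptation policy, not the system's own logic.

```python
from enum import IntEnum


class Availability(IntEnum):
    """The four levels named in the article, with an assumed 0-3 ordinal coding."""
    AVAILABLE = 0
    SLIGHTLY_AFFECTED = 1
    AFFECTED = 2
    UNAVAILABLE = 3


def accuracy(predicted, actual):
    """Fraction of predictions that match the labeled level exactly."""
    return sum(p == a for p, a in zip(predicted, actual)) / len(actual)


def mean_absolute_error(predicted, actual):
    """Average distance, in levels, between predicted and labeled availability."""
    return sum(abs(int(p) - int(a)) for p, a in zip(predicted, actual)) / len(actual)


def choose_output(levels):
    """Hypothetical rule: pick the first output modality that is usable enough."""
    if levels["hearing"] <= Availability.SLIGHTLY_AFFECTED:
        return "audio"
    if levels["vision"] <= Availability.SLIGHTLY_AFFECTED:
        return "visual"
    return "haptic"


# Toy labels for illustration; these are not results from the paper.
predicted = [Availability.AVAILABLE, Availability.AFFECTED,
             Availability.UNAVAILABLE, Availability.SLIGHTLY_AFFECTED]
actual = [Availability.AVAILABLE, Availability.SLIGHTLY_AFFECTED,
          Availability.UNAVAILABLE, Availability.SLIGHTLY_AFFECTED]

toy_accuracy = accuracy(predicted, actual)        # 3 of 4 exact matches
toy_mae = mean_absolute_error(predicted, actual)  # one prediction off by one level

# Someone washing dishes in a noisy kitchen: hands busy, hearing impaired.
dishwashing = {
    "vision": Availability.SLIGHTLY_AFFECTED,
    "hearing": Availability.AFFECTED,
    "vocal": Availability.AVAILABLE,
    "hand": Availability.UNAVAILABLE,
}
modality = choose_output(dishwashing)
```

Reporting both metrics is useful with graded labels: accuracy counts only exact matches, while mean absolute error also credits a prediction that lands one level away from the label.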

In conclusion, Human I/O is a significant step toward more adaptive and context-aware technology interactions. By integrating egocentric vision, multimodal sensing, and LLM reasoning, the system predicts and responds to situational impairments, with the aim of improving user experience and productivity. It serves as a foundation for future developments in ubiquitous computing while keeping privacy and ethical considerations in view.

Check out the Paper and Blog. All credit for this research goes to the researchers of this project.

The post Revolutionizing Accessibility: Google AI’s Human I/O Unifies Egocentric Vision, Multimodal Sensing, and LLM Reasoning to Detect and Assess User Impairments appeared first on MarkTechPost.
