Vision-and-language (VL) representation learning is an evolving field focused on integrating visual and textual information to improve machine learning models’ performance across a variety of tasks. This integration lets models understand and process images and text jointly, improving results on tasks such as image captioning, visual question answering (VQA), and image-text retrieval.

A significant challenge in VL representation learning is effectively aligning and fusing information from visual and textual modalities. Traditional methods often process visual and textual data separately before combining them, which can result in incomplete or suboptimal interactions between the modalities. This limitation hinders the ability of models to fully utilize the rich semantic information present in both visual and textual data, thereby affecting their performance and adaptability to different tasks.

Existing approaches typically rely on uni-modal encoders that process each modality independently, with only their final-layer outputs passed on for fusion, which often leads to incomplete cross-modal interactions. Models such as METER and ALBEF follow this approach but struggle to fully exploit the semantic richness of both modalities. ALIGN and similar dual-encoder frameworks align visual and textual data only at the level of their final embeddings, which can hinder comprehensive alignment and fusion of information. While effective to a degree, these methods struggle to reach optimal performance because they handle visual and textual representations separately.

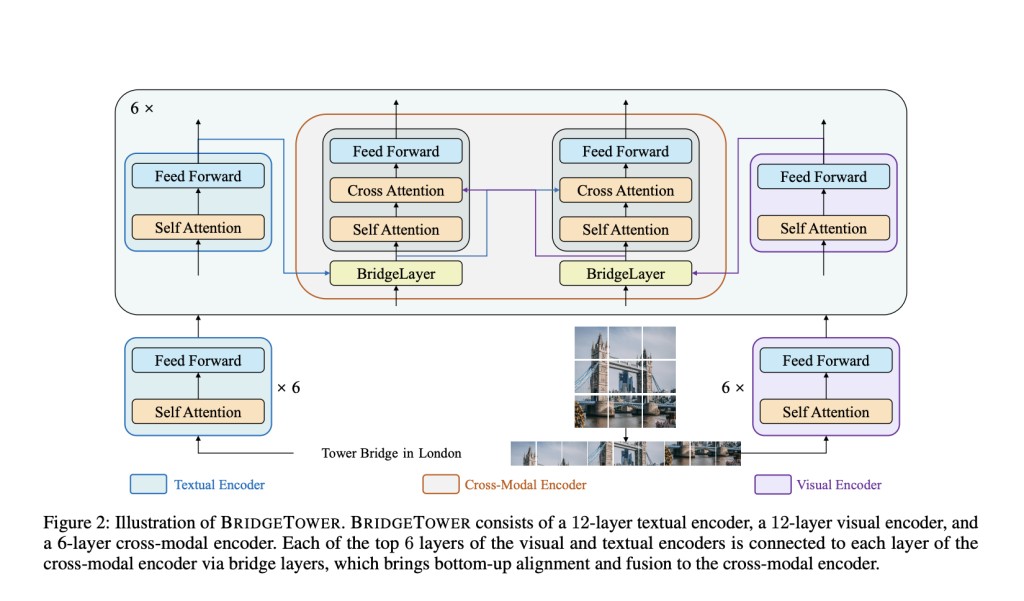

Researchers from Harbin Institute of Technology, Microsoft Research Asia, and Intel Labs have introduced BRIDGETOWER, a transformer-based model designed to improve cross-modal alignment and fusion. BRIDGETOWER adds multiple bridge layers that connect the top layers of the uni-modal encoders with each layer of the cross-modal encoder. This design enables more effective bottom-up alignment of visual and textual representations and improves the model’s ability to combine the two modalities.
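To make the wiring concrete, here is a minimal Python sketch contrasting which uni-modal layers a conventional two-tower model (such as METER) feeds into its cross-modal encoder with the layer-to-layer bridges described above. The function names are hypothetical, the layer counts in the example are illustrative, and we assume that cross-modal layer ℓ is bridged to uni-modal layer N - L_C + ℓ (N uni-modal layers, L_C cross-modal layers); the paper's exact indexing may differ.

```python
def two_tower_layers(num_uni_layers: int) -> list[int]:
    """Two-tower baseline: only the final uni-modal layer reaches the cross-modal encoder."""
    return [num_uni_layers]


def bridge_mapping(num_uni_layers: int, num_cross_layers: int) -> dict[int, int]:
    """Hypothetical BridgeTower-style wiring, using 1-indexed layers.

    Assumes cross-modal layer l is bridged to uni-modal layer
    num_uni_layers - num_cross_layers + l, i.e. the top num_cross_layers
    uni-modal layers are connected one-to-one, bottom to top.
    """
    if not 0 < num_cross_layers <= num_uni_layers:
        raise ValueError("need 0 < num_cross_layers <= num_uni_layers")
    offset = num_uni_layers - num_cross_layers
    return {l: offset + l for l in range(1, num_cross_layers + 1)}


if __name__ == "__main__":
    # Illustrative sizes: 12-layer uni-modal encoders, 6-layer cross-modal encoder.
    print(two_tower_layers(12))   # [12]
    print(bridge_mapping(12, 6))  # {1: 7, 2: 8, 3: 9, 4: 10, 5: 11, 6: 12}
```

In this sketch the same mapping would be applied once for the visual encoder and once for the textual encoder, so each cross-modal layer receives information from a different semantic level of both modalities instead of only the top one.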

BRIDGETOWER uses these bridge layers to feed visual and textual information from different semantic levels into the cross-modal encoder. Each bridge layer merges the output of the previous cross-modal layer with the representation from a corresponding uni-modal layer by adding the two and applying LayerNorm. Because the method builds on pre-trained uni-modal encoders and only inserts lightweight bridge layers between them and the cross-modal encoder, it enables bottom-up cross-modal alignment and fusion at every cross-modal layer, rather than relying solely on the final outputs of the uni-modal encoders.
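The merge step can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: it assumes the bridge adds a linearly projected uni-modal representation to the previous cross-modal layer's output and then applies LayerNorm over the hidden dimension. The names `bridge_layer` and `w_proj` and the tensor sizes are hypothetical, and the real model may include further terms such as modality-type embeddings.

```python
import numpy as np


def layer_norm(x: np.ndarray, gamma: np.ndarray, beta: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """LayerNorm over the last (hidden) dimension, followed by a learned scale and shift."""
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return gamma * (x - mean) / np.sqrt(var + eps) + beta


def bridge_layer(cross_prev: np.ndarray, uni_rep: np.ndarray, w_proj: np.ndarray,
                 gamma: np.ndarray, beta: np.ndarray) -> np.ndarray:
    """Hypothetical bridge: LayerNorm(cross_prev + uni_rep @ w_proj).

    cross_prev: (seq_len, d_cross) output of the previous cross-modal layer
    uni_rep:    (seq_len, d_uni)   output of the bridged uni-modal layer
    w_proj:     (d_uni, d_cross)   projection into the cross-modal hidden size (assumed)
    """
    return layer_norm(cross_prev + uni_rep @ w_proj, gamma, beta)


rng = np.random.default_rng(0)
seq_len, d_uni, d_cross = 4, 6, 8  # toy sizes, chosen only for illustration
cross_prev = rng.standard_normal((seq_len, d_cross))
uni_rep = rng.standard_normal((seq_len, d_uni))
w_proj = rng.standard_normal((d_uni, d_cross))
gamma, beta = np.ones(d_cross), np.zeros(d_cross)  # identity scale and shift

fused = bridge_layer(cross_prev, uni_rep, w_proj, gamma, beta)
print(fused.shape)  # (4, 8)
```

In this sketch the only new weights per bridge are a projection plus a LayerNorm scale and shift, which illustrates why such layers can add very little to the parameter count and compute.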

BRIDGETOWER has been evaluated extensively across vision-language tasks, with strong results. On the MSCOCO image-text retrieval benchmark, it achieved an RSUM (the sum of Recall@1, @5, and @10 for both text and image retrieval) of 498.9, 2.8 points higher than the previous state-of-the-art model, METER. For image retrieval, it scored 62.4% IR@1, 5.3 points above METER, and it also outperformed ALIGN and ALBEF, which were pre-trained on much larger datasets. For text retrieval, it achieved 75.0% TR@1, 1.2 points below METER.

On the VQAv2 test-std set, BRIDGETOWER attained an accuracy of 78.73%, 1.09 points above METER with the same pre-training data and almost negligible additional parameters and computational cost. When the model was scaled up further, it reached 81.15% on VQAv2 test-std, surpassing models pre-trained on significantly larger datasets.
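Because RSUM adds six recall percentages, it is a score out of 600 rather than a percentage. The sketch below shows how Recall@K and RSUM are computed. The function names and the toy rankings and recall values are hypothetical and are not BridgeTower's results.

```python
def recall_at_k(rankings: list[list[int]], gold: list[set[int]], k: int) -> float:
    """Percentage of queries with at least one correct item among the top-k results."""
    hits = sum(1 for ranked, correct in zip(rankings, gold) if correct & set(ranked[:k]))
    return 100.0 * hits / len(rankings)


def rsum(tr: dict[int, float], ir: dict[int, float]) -> float:
    """Sum of TR@1/5/10 and IR@1/5/10 (each in percent); the maximum is 600."""
    return sum(tr[k] for k in (1, 5, 10)) + sum(ir[k] for k in (1, 5, 10))


# Toy example: 4 queries, each with a ranked list of candidate ids and its set of correct ids.
rankings = [[3, 1, 2], [0, 2, 1], [2, 0, 1], [1, 2, 0]]
gold = [{1}, {0}, {1}, {1}]
print(recall_at_k(rankings, gold, 1))  # 50.0
print(recall_at_k(rankings, gold, 2))  # 75.0

# Hypothetical recall values, only to show the arithmetic.
tr = {1: 70.0, 5: 90.0, 10: 95.0}
ir = {1: 50.0, 5: 80.0, 10: 88.0}
print(rsum(tr, ir))  # 473.0
```

Representing the correct answers as a set per query covers text retrieval on MSCOCO, where each image has five reference captions and any one of them counts as a hit.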

In conclusion, the research introduces BRIDGETOWER, a model designed to improve performance on vision-and-language tasks by adding multiple bridge layers that connect uni-modal and cross-modal encoders. By enabling effective alignment and fusion of visual and textual data, BRIDGETOWER outperforms existing models such as METER on tasks including image retrieval and visual question answering. Its state-of-the-art results at minimal additional computational cost demonstrate its potential for advancing the field, and the work underscores the importance of efficient cross-modal interactions for improving the accuracy and scalability of vision-and-language models.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.


The post BRIDGETOWER: A Novel Transformer-based Vision-Language VL Model that Takes Full Advantage of the Features of Different Layers in Pre-Trained Uni-Modal Encoders appeared first on MarkTechPost.