Transformers are essential in modern machine learning, powering large language models, image processors, and reinforcement learning agents. Universal Transformers (UTs) are a promising alternative because they share parameters across layers, reintroducing RNN-like recurrence. Thanks to better compositional generalization, UTs excel at compositional tasks, small-scale language modeling, and translation. However, UTs face an efficiency problem: parameter sharing shrinks the parameter count for a given amount of compute, and compensating by widening the layers demands excessive computational resources. UTs are therefore less favored for parameter-heavy tasks such as modern language modeling, and no prior mainstream work has produced compute-efficient UT models that match standard Transformers on such tasks.
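
To make the compute-parameter trade-off concrete, here is a back-of-the-envelope sketch. It assumes the common rough estimate of about 12·d² weights per Transformer block (4·d² for the attention projections plus 8·d² for a feedforward layer with hidden size 4·d), ignores embeddings, biases, and layernorms, and counts per-token compute as multiply-accumulates through the weight matrices (attention-score computation is ignored). The helper names are hypothetical.

```python
def block_params(d_model: int) -> int:
    """Rough weight count of one Transformer block (assumed estimate).

    Attention: Q, K, V, O projections -> 4 * d^2.
    Feedforward with hidden size 4 * d -> 2 * d * 4d = 8 * d^2.
    """
    return 12 * d_model * d_model


def dense_stats(d_model: int, n_layers: int) -> tuple[int, int]:
    """(parameters, per-token MACs) for a standard Transformer with unshared layers."""
    params = n_layers * block_params(d_model)
    # Each weight is used once per token per layer, so MACs equal the weights applied.
    macs = n_layers * block_params(d_model)
    return params, macs


def ut_stats(d_model: int, n_layers: int) -> tuple[int, int]:
    """(parameters, per-token MACs) for a UT that reuses one block n_layers times."""
    params = block_params(d_model)             # a single shared block
    macs = n_layers * block_params(d_model)    # but it is applied at every depth step
    return params, macs


if __name__ == "__main__":
    print("dense d=512, 16 layers:", dense_stats(512, 16))
    print("UT    d=512, 16 steps :", ut_stats(512, 16))
    print("UT    d=2048, 16 steps:", ut_stats(2048, 16))
```

With d_model = 512 and 16 layers, the dense model has about 50.3M block parameters, while the UT has only about 3.1M for the same compute. Widening the UT to d_model = 2048 restores the parameter count but multiplies per-token compute by 16, which is exactly the imbalance MoEUT targets.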

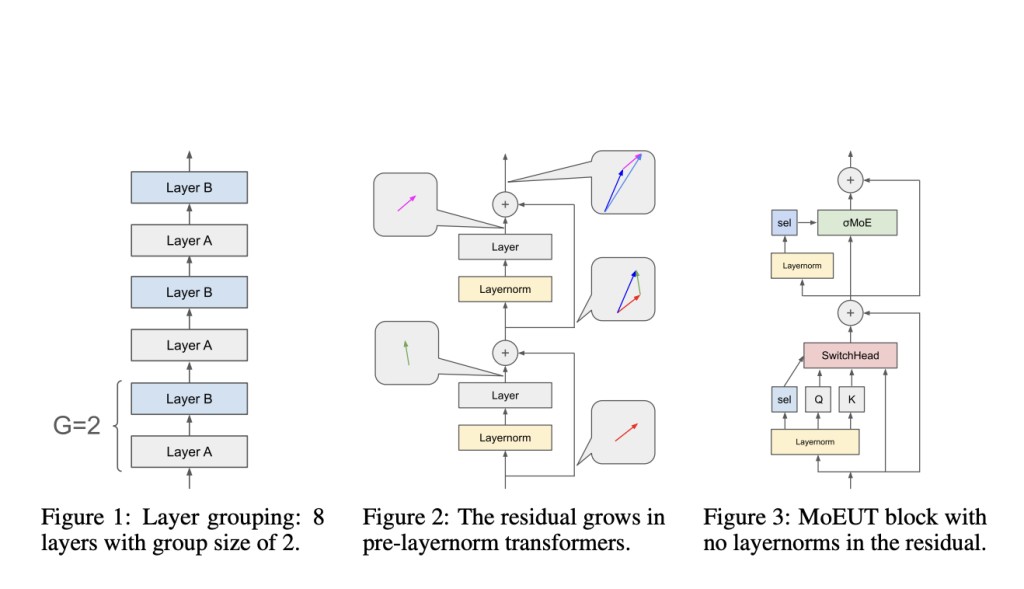

Researchers from Stanford University, The Swiss AI Lab IDSIA, Harvard University, and KAUST present Mixture-of-Experts Universal Transformers (MoEUTs), which address the compute-parameter ratio issue of UTs. MoEUTs use a mixture-of-experts architecture for computational and memory efficiency. Recent MoE advances are combined with two innovations: (1) layer grouping, which recurrently stacks groups of MoE-based layers, and (2) peri-layernorm, which applies layernorm only before linear layers whose outputs feed sigmoid or softmax activations. MoEUTs make efficient UT language models possible, outperforming standard Transformers with fewer resources, as demonstrated on datasets such as C4, SlimPajama, peS2o, and The Stack.
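
Layer grouping can be pictured as a schedule that maps each depth position to one of G parameter sets. The sketch below is a minimal illustration under stated assumptions: the toy `tanh` layers stand in for MoE-based attention and feedforward blocks, the residual update is simplified, and the function names are hypothetical rather than taken from the MoEUT code.

```python
from typing import Callable, List, Sequence

import numpy as np


def grouped_schedule(n_layers: int, group_size: int) -> List[int]:
    """Index of the parameter set used at each depth when a group of
    `group_size` distinct layers is stacked recurrently."""
    if group_size < 1 or n_layers % group_size != 0:
        raise ValueError("n_layers must be a positive multiple of group_size")
    return [i % group_size for i in range(n_layers)]


def make_layer(d: int, rng: np.random.Generator) -> Callable[[np.ndarray], np.ndarray]:
    """Hypothetical stand-in for one MoE-based layer: a random tanh projection."""
    w = rng.standard_normal((d, d)) / np.sqrt(d)
    return lambda x: np.tanh(x @ w)


def run_grouped(x: np.ndarray, layers: Sequence[Callable], n_layers: int) -> np.ndarray:
    """Apply the layer group repeatedly, with a residual connection at every step."""
    for idx in grouped_schedule(n_layers, len(layers)):
        x = x + layers[idx](x)  # same parameters reused every len(layers) steps
    return x


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    group = [make_layer(8, rng) for _ in range(2)]   # G = 2 distinct layers
    print(grouped_schedule(6, 2))
    print(run_grouped(rng.standard_normal((3, 8)), group, n_layers=6).shape)
```

With G = 1 the schedule reduces to a vanilla UT (one shared layer), and with G equal to the number of layers it becomes a standard Transformer with no sharing; layer grouping sits between the two extremes.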

The MoEUT architecture combines shared layer parameters with mixture-of-experts layers to solve the parameter-compute ratio problem. Building on recent advances in MoEs for feedforward and self-attention layers, MoEUT adds layer grouping and a robust peri-layernorm scheme. In the MoE feedforward blocks, experts are selected dynamically for each token based on its selection scores, and the expert-selection regularization is computed within each sequence. The MoE self-attention layers use SwitchHead, which selects experts dynamically for the value and output projections. Layer grouping reduces compute while increasing the total number of attention heads. The peri-layernorm scheme avoids the problems of the standard layernorm placements, improving gradient flow and signal propagation.
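
The feedforward routing and the peri-layernorm placement can be sketched together in NumPy. This is a minimal sketch, not the authors' implementation: it assumes sigmoid gating with top-k expert selection (consistent with the article's note that peri-layernorm targets linear layers feeding sigmoid or softmax), ReLU experts, and an entropy-based balancing term computed over the tokens of a single sequence. All function names, shapes, and the exact form of the regularizer are assumptions.

```python
import numpy as np


def layer_norm(x: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Parameter-free layernorm over the last axis."""
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def sigmoid(z: np.ndarray) -> np.ndarray:
    return 1.0 / (1.0 + np.exp(-z))


def moe_ffn(x, w_sel, w_in, w_out, k):
    """Sigmoid-gated top-k mixture-of-experts feedforward block (assumed form).

    x:     (T, d)            token representations of one sequence
    w_sel: (d, E)            expert-selection projection
    w_in:  (E, d, d_expert)  per-expert up-projection
    w_out: (E, d_expert, d)  per-expert down-projection
    k:     number of active experts per token
    """
    # Peri-layernorm (as described in the article): normalize only the input of the
    # linear layer whose output passes through a sigmoid; expert inputs stay raw.
    logits = layer_norm(x) @ w_sel                 # (T, E)
    scores = sigmoid(logits)                       # independent gate per expert
    top = np.argsort(-scores, axis=-1)[:, :k]      # (T, k) highest-scoring experts
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        for e in top[t]:
            hidden = np.maximum(x[t] @ w_in[e], 0.0)        # ReLU expert
            out[t] += scores[t, e] * (hidden @ w_out[e])    # gate-weighted output
    return out, logits, top


def sequence_balance_loss(logits: np.ndarray) -> float:
    """Negative entropy of the expert distribution averaged over one sequence.

    Minimizing it pushes the tokens of the sequence to spread over many experts.
    """
    z = logits - logits.max(axis=-1, keepdims=True)
    p = np.exp(z) / np.exp(z).sum(axis=-1, keepdims=True)   # softmax per token
    p_mean = p.mean(axis=0)                                 # average over the sequence
    return float(np.sum(p_mean * np.log(p_mean + 1e-12)))   # equals -entropy


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, d, E, d_exp, k = 5, 16, 8, 4, 2
    x = rng.standard_normal((T, d))
    out, logits, top = moe_ffn(
        x,
        rng.standard_normal((d, E)),
        rng.standard_normal((E, d, d_exp)),
        rng.standard_normal((E, d_exp, d)),
        k,
    )
    print(out.shape, top.shape, sequence_balance_loss(logits))
```

Because only k of the E experts run per token, compute grows with k while the parameter count grows with E, which is how an MoE layer can restore parameters to a shared-layer model without a matching increase in compute.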

Through extensive experiments, the researchers confirmed MoEUT's effectiveness on code generation using “The Stack” dataset and on various downstream tasks (LAMBADA, BLiMP, CBT, HellaSwag, PIQA, ARC-E), where it showed slight but consistent gains over the baselines. Compared to the Sparse Universal Transformer (SUT), MoEUT showed significant advantages. Evaluations of layer normalization schemes showed that the “peri-layernorm” scheme performed best, particularly for smaller models, suggesting potential for greater gains with extended training.

This study introduces MoEUT, an effective Mixture-of-Experts-based UT model that addresses the parameter-compute efficiency limitation of standard UTs. By combining advanced MoE techniques with a robust layer grouping method and layernorm scheme, MoEUT makes it possible to train competitive UTs on parameter-dominated tasks such as language modeling with significantly reduced compute requirements. Experimentally, MoEUT outperforms dense baselines on the C4, SlimPajama, peS2o, and The Stack datasets. Zero-shot experiments confirm its effectiveness on downstream tasks, suggesting that MoEUT could revive research interest in large-scale Universal Transformers.

The post MoEUT: A Robust Machine Learning Approach to Addressing Universal Transformers’ Efficiency Challenges appeared first on MarkTechPost.