Sequence modeling is a critical domain in machine learning, encompassing applications such as reinforcement learning, time series forecasting, and event prediction. These models are designed to handle data where the order of inputs is significant, making them essential for tasks like robotics, financial forecasting, and medical diagnoses. Traditionally, Recurrent Neural Networks (RNNs) have been used for their ability to process sequential data efficiently despite their limitations in parallel processing.

Rapid machine learning advancement has highlighted existing models’ limitations, particularly in resource-constrained environments. Transformers, known for their exceptional performance and ability to leverage GPU parallelism, are resource-intensive, making them unsuitable for low-resource settings such as mobile and embedded devices. The main challenge lies in their quadratic memory and computational requirements, which hinder their deployment in scenarios with limited computational resources.

Existing work includes several attention-based models and methods. Transformers, despite their strong performance, are resource-intensive. Approximations like RWKV, RetNet, and Linear Transformer offer linearizations of Attention for efficiency but have limitations in token bias. Attention can be computed recurrently, as shown by Rabe and Staats, and softmax-based Attention can be reformulated as an RNN. Efficient algorithms for computing prefix scans, such as those by Hillis and Steele, provide foundational techniques for enhancing attention mechanisms in sequence modeling. However, these techniques must fully address the inherent resource intensity, especially in applications involving long sequences, such as climate data analysis and economic forecasting. This has led to exploring alternative methods to maintain performance while being more resource-efficient.

Researchers from Mila and Borealis AI have introduced Attention as a Recurrent Neural Network (Aaren), a novel method that reinterprets the attention mechanism as a form of RNN. This innovative approach retains the parallel training advantages of Transformers while allowing for efficient updates with new tokens. Unlike traditional RNNs, which process data sequentially and struggle with scalability, Aaren leverages the parallel prefix scan algorithm to compute attention outputs more efficiently, handling sequential data with constant memory requirements. This makes Aaren particularly suitable for low-resource environments where computational efficiency is paramount.

In detail, Aaren functions by viewing the attention mechanism as a many-to-one RNN. Conventional attention methods compute their outputs parallelly, requiring linear memory about the number of tokens. However, Aaren introduces a new method for computing Attention as a many-to-many RNN, significantly reducing memory usage. This is achieved through a parallel prefix scan algorithm that allows Aaren to process multiple context tokens simultaneously while updating its state efficiently. The attention outputs are computed using a series of associative operations, ensuring that the memory and computational load remain constant, regardless of the sequence length.

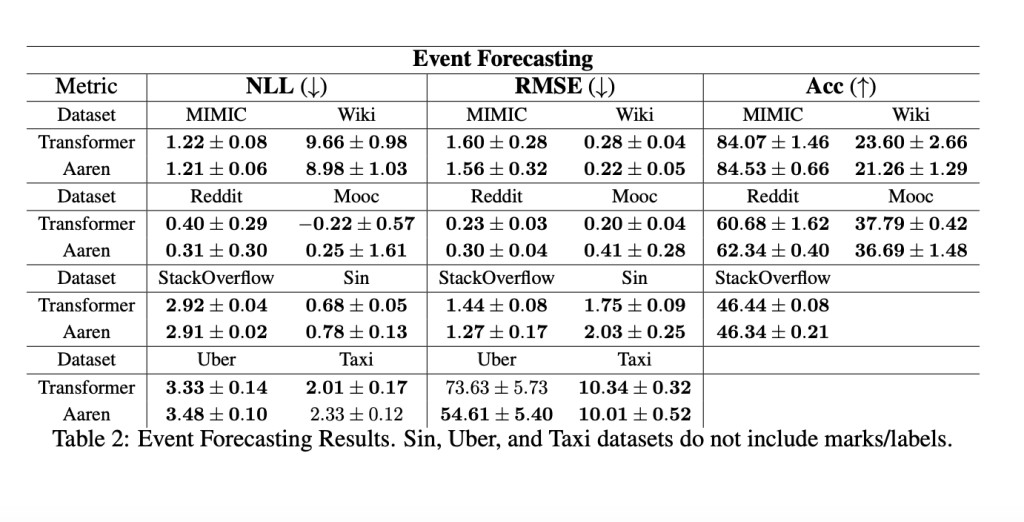

The performance of Aaren has been empirically validated across various tasks, demonstrating its efficiency and robustness. In reinforcement learning tasks, Aaren was tested on 12 datasets within the D4RL benchmark, including environments like HalfCheetah, Ant, Hopper, and Walker. The results showed that Aaren achieved competitive performance with Transformers, pronouncing scores such as 42.16 ± 1.89 for Medium datasets in the HalfCheetah environment. This efficiency extends to event forecasting, where Aaren was evaluated on eight popular datasets. For example, on the Reddit dataset, Aaren achieved a negative log-likelihood (NLL) of 0.31 ± 0.30, showing comparable performance to Transformers but with reduced computational overhead.

Aaren was tested on eight real-world datasets in time series forecasting, including Weather, Exchange, Traffic, and ECL. For the Weather dataset, Aaren achieved a mean squared error (MSE) of 0.24 ± 0.01 and a mean absolute error (MAE) of 0.25 ± 0.01 for a prediction length of 192, demonstrating its ability to handle time series data efficiently. Similarly, Aaren performed on par with Transformers across ten datasets from the UEA time series classification archive in time series classification, showing its versatility and effectiveness.

In conclusion, Aaren significantly advances sequence modeling for resource-constrained environments. By combining the parallel training capabilities of Transformers with the efficient update mechanism of RNNs, Aaren provides a balanced solution that maintains high performance while being computationally efficient. This makes it an ideal choice for applications in low-resource settings where traditional models fall short.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 43k+ ML SubReddit | Also, check out our AI Events Platform

The post Aaren: Rethinking Attention as Recurrent Neural Network RNN for Efficient Sequence Modeling on Low-Resource Devices appeared first on MarkTechPost.

Source: Read MoreÂ