Machine Translation (MT) is a significant field within Natural Language Processing (NLP) that focuses on automatically translating text from one language to another. Current approaches leverage large language models (LLMs) to understand and generate human language, facilitating communication across linguistic boundaries. MT aims to bridge global communication gaps by continuously improving translation accuracy, thereby supporting multilingual information exchange and accessibility.

A primary challenge when fine-tuning LLMs for machine translation lies in selecting high-quality and diverse training data for instruction fine-tuning. Quality and diversity in the data help language models generalize across different contexts and languages. Without them, models may produce translations that are inaccurate or fail to capture nuanced meanings, limiting their effectiveness in real-world applications.

Existing research includes methods like in-context translation exemplar selection, prompt optimization, and decoding strategies to enhance machine translation performance. Notable models and frameworks include GPT-4, Bayling-13B, BigTranslate-13B, TIM, and NLLB-54B, which focus on instruction tuning and translation performance. These approaches often rely on extensive datasets, and their translation quality is measured with evaluation metrics such as BLEU, BLEURT, and COMET.

Researchers from ByteDance Research have introduced a novel method named G-DIG, which uses gradient-based techniques to select high-quality and diverse instruction data for machine translation. The innovation leverages influence functions to analyze how individual training examples impact model performance. This method aims to improve data selection without relying on external models, thereby enhancing the quality and diversity of the training datasets.
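For readers unfamiliar with influence functions, the standard formulation from the general literature is shown below. This is background only: the article does not state the exact form or approximations G-DIG uses, so the symbols here ($z$ for a training example, $z'$ for a test example, $\hat\theta$ for the trained parameters, $L$ for the loss, $n$ for the number of training examples) are assumptions made for illustration.

$$
\mathcal{I}(z, z') = -\nabla_\theta L(z', \hat\theta)^\top \, H_{\hat\theta}^{-1} \, \nabla_\theta L(z, \hat\theta),
\qquad
H_{\hat\theta} = \frac{1}{n} \sum_{i=1}^{n} \nabla_\theta^2 L(z_i, \hat\theta)
$$

Under this sign convention, a negative value of $\mathcal{I}(z, z')$ means that up-weighting the training example $z$ is expected to lower the loss on $z'$, so $z$ counts as a helpful example for $z'$.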

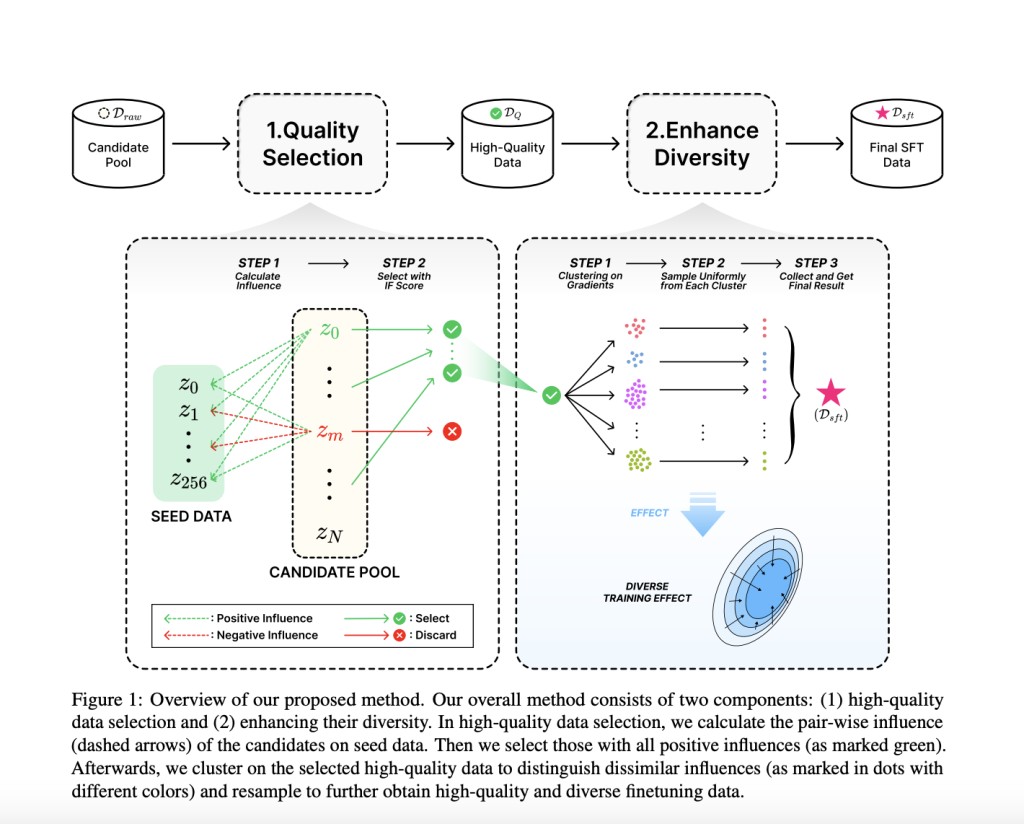

The G-DIG method involves two main components: high-quality data selection and diversity enhancement. For quality, the researchers manually create a small set of high-quality seed data and use influence functions to identify training examples that positively impact the model’s performance. Specifically, they measure the response quality of each training sample by its influence score on test instances (here, the seed examples). For diversity, they apply clustering to the gradients of the training examples, so that the selected examples influence the model in different ways. Gradient similarity is assessed using Euclidean distance, and the K-means clustering algorithm groups the training data into distinct patterns. This two-step process yields selected data that is both high-quality and diverse, improving the model’s overall translation capabilities.
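The two steps described above can be sketched on a toy problem. The following is a minimal illustration, not the authors' implementation: it assumes a small linear regression model in place of an LLM, an exact damped Hessian solve in place of whatever approximation the paper uses, and a "mean influence on the seed set is negative" rule as the quality filter. All function names, the damping value, and the cluster settings are hypothetical.

```python
import numpy as np

def per_example_grads(X, y, theta):
    """Gradient of 0.5 * (x @ theta - y)**2 w.r.t. theta, one row per example."""
    residual = X @ theta - y
    return residual[:, None] * X

def influence_scores(train_grads, seed_grads, hessian, damping=1e-3):
    """scores[i, j] = -seed_grads[j] @ H^{-1} @ train_grads[i] (damped H)."""
    h = hessian + damping * np.eye(hessian.shape[0])
    h_inv_train = np.linalg.solve(h, train_grads.T)  # shape (d, n_train)
    return -(seed_grads @ h_inv_train).T             # shape (n_train, n_seed)

def kmeans_labels(points, k, n_iter=50, seed=0):
    """Plain Lloyd's algorithm with Euclidean distance; returns cluster labels."""
    rng = np.random.default_rng(seed)
    centers = points[rng.choice(len(points), size=k, replace=False)]
    for _ in range(n_iter):
        dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for c in range(k):
            members = points[labels == c]
            if len(members) > 0:
                centers[c] = members.mean(axis=0)
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

def select_data(train_grads, seed_grads, hessian, k=4, per_cluster=5):
    """Quality filter by influence, then spread picks across gradient clusters."""
    scores = influence_scores(train_grads, seed_grads, hessian)
    # Assumed rule: keep examples whose mean influence on the seed set is
    # negative, i.e. up-weighting them is expected to lower the seed loss.
    helpful = np.flatnonzero(scores.mean(axis=1) < 0)
    if len(helpful) == 0:
        return []
    k = min(k, len(helpful))
    labels = kmeans_labels(train_grads[helpful], k)
    chosen = []
    for c in range(k):
        members = helpful[labels == c]
        # Within a cluster, take the most helpful (most negative) examples first.
        order = np.argsort(scores[members].mean(axis=1))
        chosen.extend(members[order[:per_cluster]].tolist())
    return sorted(chosen)

# Toy data: 200 training pairs, the first 40 with corrupted labels, 5 clean seeds.
rng = np.random.default_rng(0)
true_theta = np.array([1.0, -2.0, 0.5])
X_train = rng.normal(size=(200, 3))
y_train = X_train @ true_theta + 0.1 * rng.normal(size=200)
y_train[:40] += rng.normal(scale=5.0, size=40)
X_seed = rng.normal(size=(5, 3))
y_seed = X_seed @ true_theta

theta = np.linalg.lstsq(X_train, y_train, rcond=None)[0]  # "trained" model
train_grads = per_example_grads(X_train, y_train, theta)
seed_grads = per_example_grads(X_seed, y_seed, theta)
hessian = X_train.T @ X_train / len(X_train)  # exact Hessian of the mean loss

selected = select_data(train_grads, seed_grads, hessian, k=4, per_cluster=5)
print(len(selected), "examples selected:", selected)
```

For a real LLM, per-example gradients and the inverse Hessian are far too large to handle this way, so any practical version would need gradient projection and an approximate inverse-Hessian product. The sketch only shows how the quality filter and the gradient clustering fit together.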

Extensive experiments on various translation tasks, including WMT22 and FLORES, demonstrated that G-DIG significantly outperforms existing data selection methods and achieves competitive results against state-of-the-art models. G-DIG outperformed the baselines in both Zh → En and De → En translation tasks. For instance, in Zh → En translation, the G-DIG model consistently surpassed the model trained on randomly selected data across all metrics and dataset sizes: COMET improved by 1.7 with 1000 training examples, and BLEU improved by 2.11 on the FLORES dataset. In De → En translation, G-DIG improved BLEU scores by 2.11 on WMT and 1.24 on FLORES compared to models trained with randomly selected data. The researchers highlighted that models trained with G-DIG-selected data exhibited better translation quality and alignment with human expectations.

The research team successfully addressed the challenges of data quality and diversity in machine translation by introducing the G-DIG method. This approach leverages gradient-based data selection, enhancing the model’s performance without needing external quality assessment models. The study demonstrates the potential of G-DIG to improve translation accuracy and efficiency, paving the way for more advanced and reliable machine translation systems. Furthermore, G-DIG’s ability to select training data directly impacting model performance ensures that LLMs are better aligned with human instructions, making them more effective in real-world applications.

To summarize, ByteDance Research has introduced a groundbreaking method that addresses critical issues in machine translation, demonstrating significant improvements in translation quality through innovative data selection techniques. The G-DIG method represents a substantial advancement in the field, offering a new pathway for enhancing the capabilities of LLMs in various language translation tasks. This method’s success emphasizes the importance of high-quality and diverse data in training robust and accurate language models, ensuring they can meet global communication and information exchange demands.

The post This AI Paper by ByteDance Research Introduces G-DIG: A Gradient-Based Leap Forward in Machine Translation Data Selection appeared first on MarkTechPost.
