Large language models (LLMs) have recently become highly valuable tools for complex reasoning, language generation, and natural language understanding. Funding for research in this area has increased dramatically, and both the number of models and the amount of training data have grown substantially. This growth has also driven up training and inference costs.

Efficient inference is essential if these models are to be used broadly and flexibly. End-users of these systems care about different Pareto frontiers, that is, different trade-offs between LLM latency and performance. Several strategies, such as pruning and KV-cache optimization, have been used to make language model inference more efficient. Finding the best inference-time frontier of language models can therefore be framed as an optimization problem with multiple objectives or constraints.
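To make the idea of a Pareto frontier concrete, here is a minimal sketch, assuming two objectives that are both minimized (latency and perplexity) and made-up numbers for hypothetical configurations. It is not HW-GPT-Bench code; it only shows which candidates survive when no other candidate is at least as good on every objective and strictly better on one.

```python
from typing import List, Tuple

# Each candidate is (name, latency_ms, perplexity); lower is better for both.
# The configurations and numbers below are invented for illustration.
Candidate = Tuple[str, float, float]


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is no worse than b on both objectives and strictly better on at least one."""
    no_worse = a[1] <= b[1] and a[2] <= b[2]
    strictly_better = a[1] < b[1] or a[2] < b[2]
    return no_worse and strictly_better


def pareto_front(cands: List[Candidate]) -> List[Candidate]:
    """Return the non-dominated candidates (a simple O(n^2) scan, fine for small n)."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


configs = [
    ("small", 10.0, 30.0),
    ("medium", 20.0, 22.0),
    ("large", 45.0, 18.0),
    ("wide-shallow", 25.0, 25.0),  # slower and worse than "medium", so it is dominated
]
front = pareto_front(configs)
print([name for name, _, _ in front])  # ['small', 'medium', 'large']
```

Each configuration on the front is a legitimate choice for some end-user: a latency-bound deployment would pick "small", while a quality-bound one would pick "large".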

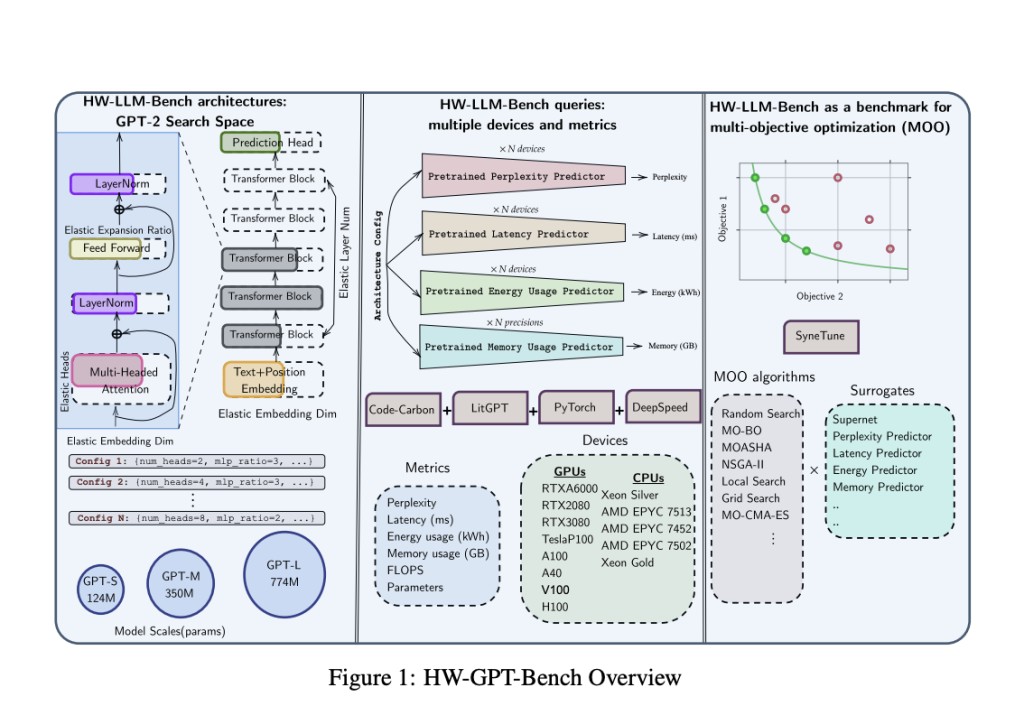

A new study by researchers from the University of Freiburg and the Bosch Center for Artificial Intelligence presents Hardware-Aware-GPT-Bench (HW-GPT-Bench), a hardware-aware benchmark over a space of language model architectures for evaluating and optimizing LLMs against various hardware metrics. The benchmark is intended to speed up research on, and development of, algorithms for hardware-aware search in the language model space.

To efficiently train a supernet that serves as a proxy for many different LLM configurations, HW-GPT-Bench uses weight-sharing methods from Neural Architecture Search (NAS). The models are profiled on thirteen devices using five critical hardware metrics: latency, energy consumption, GPU memory usage, FLOPS, and performance, which provides a complete evaluation methodology.
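Weight sharing is what lets a single supernet stand in for many architectures. The toy sketch below assumes the common "slice the largest weight matrix" scheme used in weight-sharing NAS; the class name `SuperLinear` is hypothetical, and the actual HW-GPT-Bench supernet (a GPT-style transformer) is far more involved. Every sub-network reads a top-left block of one shared matrix, so training any sub-network updates weights that the others reuse.

```python
import random


class SuperLinear:
    """Hypothetical weight-sharing linear layer, sized for the largest sub-network."""

    def __init__(self, max_in: int, max_out: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.max_in, self.max_out = max_in, max_out
        # One shared weight matrix of shape (max_out, max_in), used by all sub-networks.
        self.weight = [[rng.uniform(-1.0, 1.0) for _ in range(max_in)] for _ in range(max_out)]

    def forward(self, x: list[float], out_dim: int) -> list[float]:
        in_dim = len(x)
        if not (0 < in_dim <= self.max_in and 0 < out_dim <= self.max_out):
            raise ValueError("sub-network dimensions exceed the supernet")
        # A sub-network reads only the top-left (out_dim x in_dim) block of the shared weights.
        return [sum(self.weight[i][j] * x[j] for j in range(in_dim)) for i in range(out_dim)]


layer = SuperLinear(max_in=8, max_out=8)
small = layer.forward([1.0] * 4, out_dim=2)  # a 4 -> 2 sub-network
large = layer.forward([1.0] * 8, out_dim=8)  # the full 8 -> 8 network

# Updating a shared weight changes every sub-network that uses it.
layer.weight[0][0] += 1.0
small_after = layer.forward([1.0] * 4, out_dim=2)
print(small_after[0] - small[0])  # approximately 1.0
```

Because the weights are shared, one training run amortizes over the whole search space, which is why a supernet is a cheap proxy compared with training each configuration separately.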

The benchmark covers small, medium, and large model scales, with predictors for performance and for hardware metrics across many devices. The team benchmarked state-of-the-art NAS methods, investigating eight distinct multi-objective optimization algorithms and comparing them on both performance and hardware metrics to identify the best configurations. They also use their pretrained surrogates for the different model sizes to study the interplay between hardware metrics and performance. To support integration and reproducibility, a public, open-source API provides a queryable interface to the predictors, supernetwork weights, and baselines.
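Comparing multi-objective optimizers requires a way to score a whole Pareto front with a single number. The article does not say which indicator the authors use; as an assumption for illustration, the sketch below computes the two-objective hypervolume, a standard choice: the area that a front dominates, bounded by a reference point. The fronts `algo_a` and `algo_b` and the reference point are invented.

```python
from typing import List, Tuple


def hypervolume_2d(front: List[Tuple[float, float]], ref: Tuple[float, float]) -> float:
    """Area dominated by a set of points (both objectives minimized), bounded by ref."""
    # Keep only points strictly better than the reference point in both objectives.
    pts = sorted(p for p in front if p[0] < ref[0] and p[1] < ref[1])
    area = 0.0
    best_y = ref[1]
    for x, y in pts:  # sweep left to right along the first objective
        if y < best_y:  # dominated points add no new area and are skipped
            area += (ref[0] - x) * (best_y - y)
            best_y = y
    return area


# Hypothetical fronts from two optimizers, e.g. (normalized latency, normalized perplexity).
ref_point = (4.0, 4.0)
algo_a = [(1.0, 3.0), (2.0, 2.0), (3.0, 1.0)]
algo_b = [(1.5, 2.5), (3.0, 2.0)]
print(hypervolume_2d(algo_a, ref_point), hypervolume_2d(algo_b, ref_point))  # 6.0 4.25
```

A larger hypervolume means the front pushes further toward low latency and low perplexity at the same time, so under these made-up numbers the first optimizer would be preferred.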

Training and deploying LLMs places a heavy computational burden on the world’s power grid. To reduce the environmental impact of large-scale AI deployments, HW-GPT-Bench supports the search for LLM configurations with lower energy consumption. By helping to locate designs that use less power, the benchmark contributes to more environmentally friendly AI.

Optimizing hardware efficiency during LLM training and deployment can lead to significant cost savings. Reducing the required computational resources brings economic benefits to organizations and makes large-scale AI deployments more feasible. Industries that process and analyze massive amounts of data stand to benefit most from this efficiency.

The team’s long-term goals include:

- Investigating quantization methods.
- Developing surrogates for newer and larger models.
- Determining the best way to combine NAS with pruning strategies.

The post Researchers at the University of Freiburg and Bosch AI Propose HW-GPT-Bench: A Hardware-Aware Language Model Surrogate Benchmark appeared first on MarkTechPost.
