Language models are designed to understand and generate human language. They underpin applications such as chatbots, automated content creation, and data analysis. How well a model comprehends and generates text depends on the context length it can handle, which makes advances in long-context models particularly significant for AI capabilities.

One major challenge for AI language models is processing and understanding long text sequences efficiently. Traditional models often struggle with context lengths beyond a few thousand tokens, which makes it hard to maintain coherence and relevance in longer interactions. This limitation hinders the use of AI in areas that require extensive context, such as legal document analysis, lengthy conversations, and detailed technical writing.

Most language models use fixed context windows, which limit their ability to handle long text sequences. Positional encodings tell the model where each token sits in the sequence, but performance often degrades once the input exceeds the predefined length. Models like GPT-3 and earlier versions of Llama have made strides but still face significant challenges in extending context length without compromising accuracy and relevance.

With compute sponsored by Crusoe Energy, researchers at Gradient introduced the Llama-3 8B Gradient Instruct 1048k model. It extends the context length from roughly 8,000 to over 1,048,000 tokens, showing that a model can learn to manage long contexts with minimal additional training. Using techniques such as NTK-aware interpolation and Ring Attention, the researchers significantly improved training efficiency and speed, enabling the model to handle extensive data without the typical performance drop associated with longer contexts.
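As a rough illustration of NTK-aware interpolation, the sketch below uses one commonly cited formulation in which the rotary embedding base is multiplied by `scale ** (d / (d - 2))`, where `d` is the attention head dimension. The function names are hypothetical, the head dimension of 128 and base of 500,000 are assumed from the public Llama-3 8B configuration, and the 8,192-token starting window is an assumption. This is not necessarily the exact schedule Gradient used.

```python
from typing import List


def ntk_scaled_base(base: float, scale: float, head_dim: int) -> float:
    """Return an NTK-aware rotary base: base * scale^(d / (d - 2)).

    This is one common formulation, used here as an assumption.
    """
    return base * scale ** (head_dim / (head_dim - 2))


def rope_inv_freq(base: float, head_dim: int) -> List[float]:
    """Inverse frequencies of rotary embeddings, one per pair of dimensions."""
    return [base ** (-2.0 * i / head_dim) for i in range(head_dim // 2)]


# Assumed values for illustration only.
ORIGINAL_CONTEXT = 8_192
TARGET_CONTEXT = 1_048_576
HEAD_DIM = 128
BASE = 500_000.0

scale = TARGET_CONTEXT / ORIGINAL_CONTEXT  # how much longer the window becomes
new_base = ntk_scaled_base(BASE, scale, HEAD_DIM)

old_freq = rope_inv_freq(BASE, HEAD_DIM)
new_freq = rope_inv_freq(new_base, HEAD_DIM)

# The fastest-rotating dimension is left unchanged, while the
# slowest-rotating one is stretched by the full scale factor.
print("scale factor:", scale)
print("highest frequency (old, new):", old_freq[0], new_freq[0])
print("lowest frequency ratio old/new:", old_freq[-1] / new_freq[-1])
```

The design idea is that high-frequency dimensions, which encode fine-grained local order, stay close to what the model saw in pretraining, while low-frequency dimensions are stretched to cover the longer window.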

To scale training efficiently, the researchers increased the context length progressively during training rather than moving to the full length at once. NTK-aware interpolation adjusts the model's rotary position embeddings for the longer window, and Ring Attention distributes the attention computation for long sequences across multiple devices. Together, these strategies yielded a significant speedup in model training.
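The idea of progressively increasing the context length can be sketched as a simple staged schedule. The helper name `context_schedule`, the 8,192-token starting length, and the growth factor of 4 are hypothetical choices for illustration; the article does not state the stage lengths that were actually used.

```python
from typing import List


def context_schedule(start: int, target: int, growth: int = 4) -> List[int]:
    """Return the context length for each training stage, capped at target.

    Each stage multiplies the previous length by `growth` (an assumed value).
    """
    if start <= 0 or target < start or growth < 2:
        raise ValueError("expected 0 < start <= target and growth >= 2")
    stages = []
    length = start
    while length < target:
        length = min(length * growth, target)
        stages.append(length)
    return stages


# Hypothetical schedule from an 8,192-token base model to 1,048,576 tokens.
stages = context_schedule(8_192, 1_048_576)
for number, length in enumerate(stages, start=1):
    print(f"stage {number}: train at {length} tokens")
```

Training at intermediate lengths first means most steps run on shorter, cheaper sequences, with only the final stage paying the full cost of the longest window.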

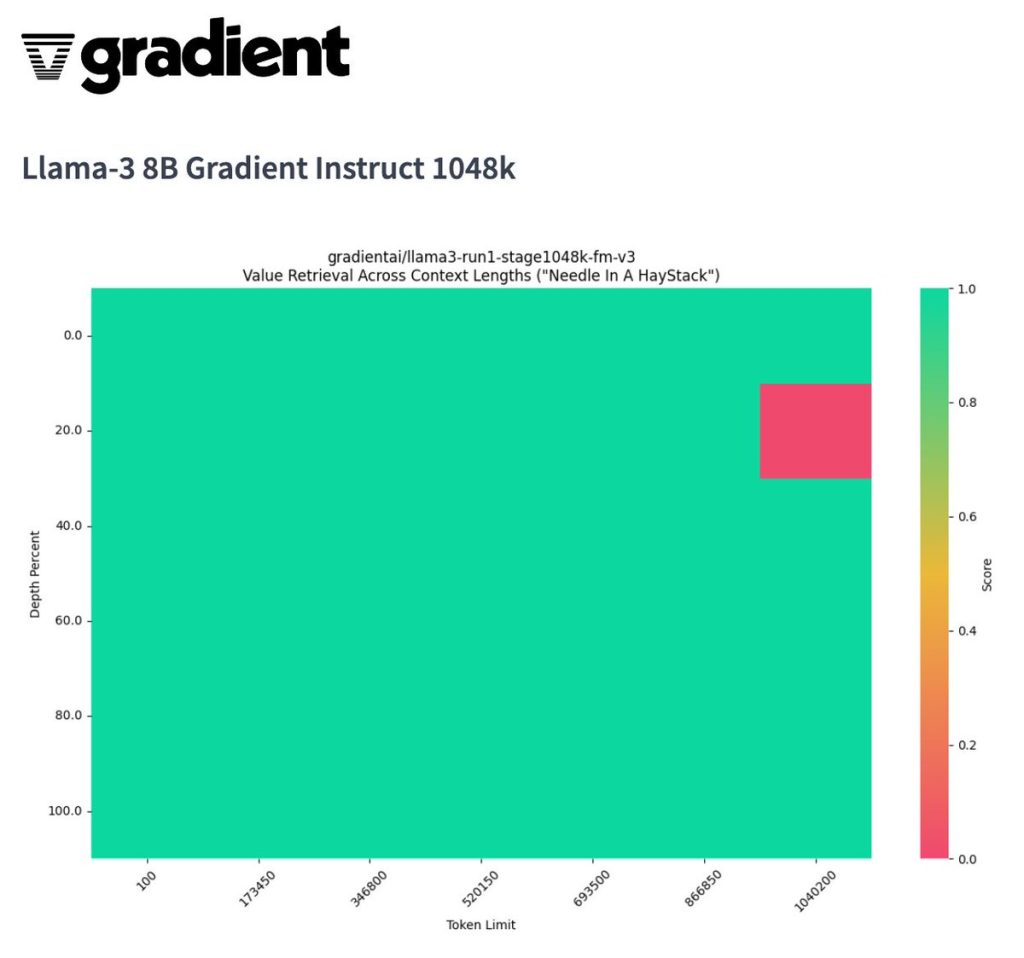

The new Llama-3 8B model with a context length of over 1 million tokens performed strongly in evaluations. It achieved perfect scores on the Needle-in-a-Haystack (NIAH) test, which checks whether a model can find and use a specific piece of information placed within a vast amount of surrounding text. This performance makes it a leading option for applications requiring long-context comprehension and generation.
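A Needle-in-a-Haystack style check can be sketched in a few lines. Everything below is a simplified, hypothetical harness: the filler text, the needle, and the `answer_fn` callable (which stands in for a call to a real model) are assumptions, and real evaluations vary context length as well as needle depth.

```python
from typing import Callable, Iterable

FILLER = "The grass is green and the sky is blue."
NEEDLE = "The secret passphrase is 'violet-otter-42'."
QUESTION = "What is the secret passphrase?"
EXPECTED = "violet-otter-42"


def build_haystack(n_sentences: int, depth: float) -> str:
    """Return filler text with the needle inserted at a relative depth in [0, 1]."""
    sentences = [FILLER] * n_sentences
    sentences.insert(round(depth * n_sentences), NEEDLE)
    return " ".join(sentences)


def niah_accuracy(
    answer_fn: Callable[[str, str], str],
    depths: Iterable[float],
    n_sentences: int = 1000,
) -> float:
    """Fraction of needle depths at which the answer contains the expected fact.

    answer_fn(context, question) is a placeholder for a model call.
    """
    depth_list = list(depths)
    hits = sum(
        EXPECTED in answer_fn(build_haystack(n_sentences, depth), QUESTION)
        for depth in depth_list
    )
    return hits / len(depth_list)
```

To try it, pass a function that sends the context and question to a model and returns its reply, for example `niah_accuracy(my_model_answer, [0.0, 0.25, 0.5, 0.75, 1.0])`, where `my_model_answer` is a name you would define yourself.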

Use Cases of Llama-3 8B Gradient Instruct 1048k:

Code Generation: Generating code suggestions based on the context of an entire repository.

Investment Analysis: Synthesizing nuanced investment analysis from company reports spanning different periods and sectors.

Data Analysis: Automating the analysis of large sets of poorly structured tabular data.

Legal Analysis: Generating legal analysis using historical precedent from previous court proceedings.

These use cases highlight the model’s ability to effectively handle detailed and context-rich tasks.

In conclusion, the introduction of the Llama-3 8B Gradient Instruct 1048k model marks a significant milestone in the development of long-context language models. By addressing the challenge of processing extensive text sequences, the researchers have opened new possibilities for AI applications in various fields. This advancement improves the coherence and relevance of AI-generated content over long inputs and enhances the overall utility of language models in real-world scenarios.

Sources

https://huggingface.co/gradientai/Llama-3-8B-Instruct-Gradient-1048k

https://x.com/Gradient_AI_/status/1785036209468907796

https://gradient.ai/blog/evaluating-models-beyond-niah

https://gradient.ai/blog/scaling-rotational-embeddings-for-long-context-language-models

The post Gradient AI Introduces Llama-3 8B Gradient Instruct 1048k: Setting New Standards in Long-Context AI appeared first on MarkTechPost.
