Large language models (LLMs) have revolutionized natural language processing, enabling groundbreaking advancements in various applications such as machine translation, question-answering, and text generation. However, the training of these models poses significant challenges, including high resource requirements and long training times due to the complexity of the computations involved.Â

Previous research has explored techniques like loss-scaling and mixed-precision strategies to reduce memory usage and enhance training efficiency for large models. However, these methods faced limitations related to numerical inaccuracies and restricted representation ranges, impacting overall model performance.Â

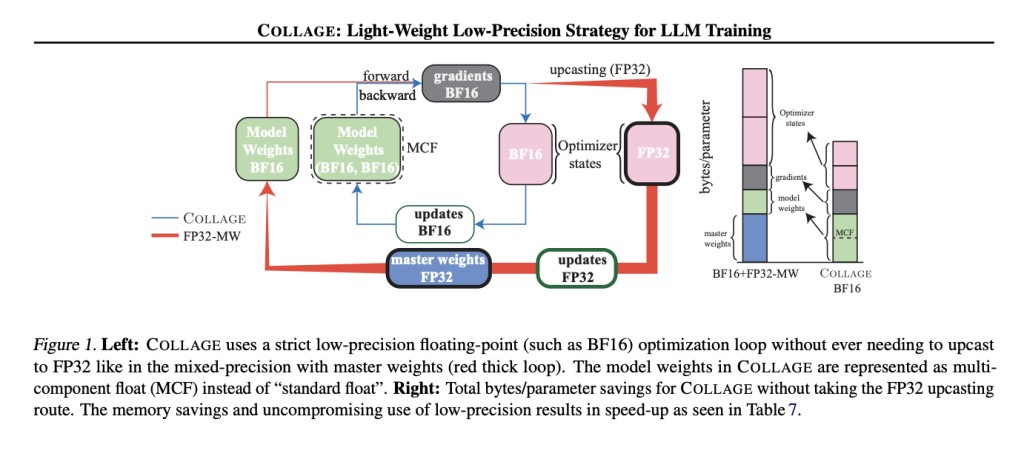

To address this problem, researchers from Cornell University and Amazon have introduced COLLAGE, a novel approach that employs a Multi-Component Float (MCF) representation to accurately handle operations with numerical errors. This innovative strategy optimizes efficiency and memory usage during training. By integrating COLLAGE as a plugin with optimizers like AdamW, significant improvements in training throughput and memory savings have been achieved compared to conventional methods. Moreover, COLLAGE introduces the “effective descent quality†metric, offering a nuanced evaluation of precision strategies and insights into information loss during the training process.

The central advancement of COLLAGE lies in its ability to handle numerical errors and imprecision without necessitating upcasting to higher precision formats, ensuring precise computations with low memory footprint and computational efficiency crucial for LLM training. Performance-wise, COLLAGE exhibits significant speed-ups in training throughput, achieving up to 3.7x better throughput on a GPT-6.7B model. Moreover, COLLAGE maintains comparable model accuracy to FP32 master weights while utilizing only low-precision storage, highlighting its effectiveness in balancing accuracy and efficiency in LLM training.

In conclusion, this innovative method presents a promising low-precision optimization strategy for enhancing language model training efficiency without compromising performance. Its utilization of MCF optimizations contributes to improved execution speed, optimized memory utilization, and overall model quality, paving the way for more efficient and scalable LLM training methodologies.COLLAGE also speeds up LLM training with reduced memory usage without compromising model performance, making it easily integrated into existing optimization frameworks. This breakthrough significantly advances the field of large language model (LLM) training by enabling the efficient training of larger and more scalable models while also reducing their carbon footprint.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 42k+ ML SubReddit

The post COLLAGE: A New Machine Learning Approach to Deal with Floating-Point Errors in Low-Precision to Make LLM Training Accurate and Efficient appeared first on MarkTechPost.

Source: Read MoreÂ