A knowledge graph combines data from many sources and links related entities. Because a knowledge graph is a gathering place for connected data, we expect many of its entities to be similar. When we find that two entities are similar to each other, we can materialize that fact as a relationship between them.

In this two-part series, we demonstrate how to find and link similar entities in Amazon Neptune, a managed graph database service. In part 1, we used lexical search to find entities with similar text. In this post, we use semantic search to find entities with similar meaning. In both cases, we demonstrate a convention to link similar entities using an edge.

Semantic search

Semantic search is the ability to find entities with similar meaning. This approach uses a machine learning (ML) model to create a numeric vector embedding of the entity. A vector is a list of numbers, each of which encodes some aspect of the entity’s meaning. The model creates similar vectors for entities with similar meaning. If we plot vectors on a graph, the closer two vectors are to each other, the more similar their entities are. Vector databases enable us to store vectors and run distance or similarity queries on them.
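To make "closer vectors are more similar" concrete, here is a minimal Python sketch of two common measures, cosine similarity and Euclidean distance. The three-dimensional vectors are made up for illustration; a real sentence embedding has hundreds of dimensions, and this is not how a vector database computes results internally.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))

def euclidean_distance(a, b):
    """Straight-line distance between two vectors; 0.0 means identical."""
    return math.dist(a, b)

# Hypothetical toy embeddings, invented for this example only.
tetanus = [0.9, 0.1, 0.2]
lockjaw = [0.8, 0.2, 0.25]
dataflow = [0.1, 0.9, 0.3]

# Entities with similar meaning should score higher on similarity
# and lower on distance than unrelated entities.
print(cosine_similarity(tetanus, lockjaw), cosine_similarity(tetanus, dataflow))
print(euclidean_distance(tetanus, lockjaw), euclidean_distance(tetanus, dataflow))
```

With these toy values, the tetanus and lockjaw vectors are both more similar and closer together than the tetanus and dataflow vectors.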

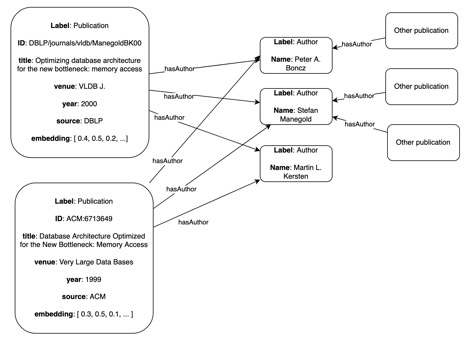

To demonstrate semantic search, we use a publication dataset, specifically the DBLP ACM dataset, which contains citation data from computer science publication sources such as DBLP and Association for Computing Machinery (ACM). Our graph data model is shown in the next diagram.


We maintain Publication nodes and Author nodes. We link a publication to its authors using the hasAuthor edge. Publication nodes have several properties, including title, venue, year, and source (whose value is either DBLP or ACM). We associate an embedding with each publication to facilitate comparison of publications. There are many duplicate publications, most commonly publications with similar titles and authors from different sources. The preceding figure shows publications with similar titles but different sources. They share the same three authors.

In the next figure, we see two publications whose titles are not lexically similar but whose subject matter is. Tetanus and lockjaw refer to the same disease, and Emerg and ER are synonymous. For this reason, we expect their embeddings to be similar. To link the publications, we perform additional verification, including a comparison of authors.

Note that the overall dataset is public, but these two publications are mock publications. We add them to the dataset for testing.

To build this, we use Neptune Analytics, a memory-optimized graph engine for graph analytics. We first generate the embeddings using a pre-trained Bidirectional Encoder Representations from Transformers (BERT) sentence transformer model. Then we populate a Neptune Analytics graph with publication data plus embeddings. We use an Amazon SageMaker notebook instance as a client to query the Neptune graph to resolve entities.

To run the example, you need a Neptune Analytics graph and a notebook instance. Follow along in FindAndLink-VSS.ipynb to explore the semantic similarity of publications. It includes setup instructions.

Creating embeddings

Let’s look at some highlights. First, we use the following code to create embeddings:

The get_embeddings function takes as input a snippet (or a list of snippets) of text and returns a vector (or, if a list of snippets was passed, a list of vectors). It uses the Sentence Transformers library. A snippet doesn’t need to be a grammatical sentence. We use it for publication titles. The get_str_embedding function converts the vector to the stringified format required to load into Neptune Analytics: a semicolon-separated list of numeric items in the vector.
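As a rough guide, the two functions described above might look like the following sketch. The function bodies are our reconstruction, not the notebook's code, and the model name `all-MiniLM-L6-v2` is an assumption; the post says only that the model is a pre-trained BERT sentence transformer. The encoder is passed in as an argument so that the stringification step can be tried without downloading a model.

```python
def load_sentence_encoder(model_name="all-MiniLM-L6-v2"):
    """Return the encode function of a pre-trained sentence transformer.

    Assumption: the model name is illustrative, not taken from the post.
    Requires the third-party sentence-transformers package.
    """
    from sentence_transformers import SentenceTransformer  # lazy import
    return SentenceTransformer(model_name).encode

def get_embeddings(text, encode):
    """One snippet in, one vector out; a list of snippets in, a list of vectors out."""
    return encode(text)

def get_str_embedding(vector):
    """Stringify a vector as a semicolon-separated list of numbers."""
    return ";".join(str(float(x)) for x in vector)

# Stringification works on any sequence of numbers.
print(get_str_embedding([0.5, -1, 2.25]))  # 0.5;-1.0;2.25
```

To embed real titles, you would call `encode = load_sentence_encoder()` once and then `get_embeddings(titles, encode)`.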

We create three CSV files to load to the Neptune graph: publications, authors, and hasAuthor edges. The following is an excerpt of the publication CSV.

Each row in the publication CSV represents a single publication. Notice the rightmost column is a stringified embedding, created using a sentence transformer, of the publication title.
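The following sketch shows one way to write such a file with Python's `csv` module. The column headers follow the Neptune CSV convention of `~id` and `~label` plus typed property columns, and we assume the embedding column is named `embedding:vector`; check the Neptune Analytics loader documentation before relying on it. The identifier, venue, year, and vector values are hypothetical.

```python
import csv
import io

# Assumed header layout; property names match the data model in this post.
HEADER = ["~id", "~label", "title:String", "venue:String",
          "year:Int", "source:String", "embedding:vector"]

def publications_to_csv(publications):
    """Render publication dicts as CSV text, one row per publication."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(HEADER)
    for pub in publications:
        # Semicolon-separated vector, as described earlier in this post.
        embedding = ";".join(str(float(x)) for x in pub["embedding"])
        writer.writerow([pub["id"], "Publication", pub["title"],
                         pub["venue"], pub["year"], pub["source"], embedding])
    return buffer.getvalue()

# Hypothetical sample row; a real embedding has hundreds of values.
sample = [{
    "id": "pub-0001",
    "title": "Tetanus cases increase Emerg units in September",
    "venue": "Mock Venue",
    "year": 2024,
    "source": "ACM",
    "embedding": [0.1, 0.2, 0.3],
}]
csv_text = publications_to_csv(sample)
print(csv_text)
```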

topKByNode

Now we can compare publication nodes to each other using the topKByNode function. Neptune Analytics supports the openCypher query language. The following is an openCypher query that calls topKByNode:

As the comments indicate, there are six steps:

1. Find the candidate publication (the publication we want to compare with others) and its related authors.

2. Call topKByNode to find publications with embeddings similar to that of the candidate.

3. Keep track of candidate and matching publications, as well as the similarity score.

4. Find related authors for the matching nodes.

5. Return candidate publications, candidate authors, matching publications, matching authors, and score.

6. Order the results by score.
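The six steps above can be sketched as an openCypher query held in a Python string, ready to send from a notebook client. This is our approximation, not the notebook's query: the variable names, the `topK` value of 10, and the `$candidate_id` parameter are assumptions, and we assume that `score` is a distance, so that smaller values mean closer matches.

```python
# A sketch only; verify against the notebook before running on your graph.
TOP_K_BY_NODE_QUERY = """
// 1. Find the candidate publication and its related authors
MATCH (candidate:Publication)
WHERE id(candidate) = $candidate_id
OPTIONAL MATCH (candidate)-[:hasAuthor]->(candidateAuthor:Author)
WITH candidate, collect(DISTINCT candidateAuthor) AS candidateAuthors
// 2. Call topKByNode to find publications with similar embeddings
CALL neptune.algo.vectors.topKByNode(candidate, {topK: 10})
YIELD node, score
// 3. Keep track of candidate, matching publication, and score
WITH candidate, candidateAuthors, node AS matchPub, score
WHERE matchPub <> candidate
// 4. Find related authors for the matching publication
OPTIONAL MATCH (matchPub)-[:hasAuthor]->(matchAuthor:Author)
// 5. Return candidate, its authors, match, its authors, and score
RETURN candidate, candidateAuthors, matchPub,
       collect(DISTINCT matchAuthor) AS matchAuthors, score
// 6. Order by score (assumed to be a distance: smallest first)
ORDER BY score
"""

def query_parameters(candidate_id):
    """Build the parameter map for the query above."""
    return {"candidate_id": candidate_id}

print(query_parameters("http://example.org/pubgraph/ACM/604282"))
```

Passing the candidate identifier as a parameter rather than splicing it into the query text keeps the query reusable across candidates.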

Vector similarity alone isn’t enough to compare publications. We also need to check for common relationships in the graph. For example, if we look for publications similar to “Design and findings and next steps for Java implementation of Telegraph Dataflow”, we find “Java support for data-intensive systems: experiences building the telegraph dataflow system”. But these two publications have different authors, so they are unlikely to be the same publication.

However, if we look for publications similar to “Tetanus cases increase Emerg units in September”, we find “ER staff reports lockjaw diagnoses rose 5 percent after Labor Day” with the same author. This doesn’t guarantee that they are the same publication, but it makes that inference more probable.
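The verification step can be expressed as a small filter over the query results. The following sketch keeps a match only if it is close enough and shares at least one author with the candidate. The publication identifiers, author names, scores, and the 0.5 threshold are all hypothetical, and we again assume the score is a distance.

```python
def shared_authors(candidate_authors, match_authors):
    """Authors that appear on both publications."""
    return set(candidate_authors) & set(match_authors)

def likely_duplicates(matches, candidate_authors, max_score):
    """Keep matches that are close in vector space AND share an author.

    matches: list of (publication_id, score, authors) tuples.
    """
    return [
        pub_id
        for pub_id, score, authors in matches
        if score <= max_score and shared_authors(candidate_authors, authors)
    ]

# Hypothetical results for one candidate publication.
candidate_authors = ["Author One"]
matches = [
    ("pub-A", 0.12, ["Author One", "Author Two"]),  # close, shared author
    ("pub-B", 0.15, ["Author Three"]),              # close, no shared author
    ("pub-C", 0.90, ["Author One"]),                # shared author, too far
]
print(likely_duplicates(matches, candidate_authors, max_score=0.5))  # ['pub-A']
```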

topKByEmbedding

We can use the topKByEmbedding function to find publications similar to a search term. For example, to find publications like “Tetanus cases September”, we first generate the embedding for that term. We use the same sentence transformer as we did for the node embeddings:

Then we search for publications with similar embeddings using topKByEmbedding. For those matching nodes, we also find related authors:
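A sketch of this search, again as a Python helper that builds the openCypher text, might look like the following. The helper name, the default `topK` of 10, and the shape of the RETURN clause are our assumptions; the embedding would come from the sentence transformer, and the short vector in the usage line is a placeholder.

```python
def top_k_by_embedding_query(embedding, top_k=10):
    """Build an openCypher query that searches by a literal embedding.

    Assumption: score is a distance, so we order ascending.
    """
    vector_literal = ", ".join(repr(float(x)) for x in embedding)
    return (
        f"CALL neptune.algo.vectors.topKByEmbedding([{vector_literal}], "
        f"{{topK: {int(top_k)}}})\n"
        "YIELD node, score\n"
        # For each matching publication, also collect its authors.
        "OPTIONAL MATCH (node)-[:hasAuthor]->(author:Author)\n"
        "RETURN node, collect(DISTINCT author) AS authors, score\n"
        "ORDER BY score"
    )

# Placeholder vector; a real embedding has hundreds of dimensions.
print(top_k_by_embedding_query([0.5, -0.25], top_k=3))
```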

The top matches are, as we discussed earlier, semantically similar but lexically dissimilar.

The notebook also demonstrates how to create a matches relationship between publications that we decide are duplicates because they are semantically similar and have common authors. To manually link nodes http://example.org/pubgraph/ACM/604282 and http://example.org/pubgraph/DBLP/journals/sigmod/ShahMFH01 using a matches edge, use the following openCypher query:

This follows the same linking convention that we used for patients in part 1.

Semantic search in OpenSearch Service

Amazon OpenSearch Service also provides semantic search. You can load embeddings into a k-nearest neighbors (k-NN) index in an OpenSearch domain and perform vector similarity queries against that index. In an upcoming post, we use this capability in conjunction with Neptune.

Additional approaches

Alternatives to search include the following:

Deduplication groups similar entities in batches, typically as part of an extract, transform, and load (ETL) process. Deduplication is often used in Master Data Management (MDM) to help find the golden record. It could be used to discover and link duplicate entities prior to loading them into the Neptune database. Amazon Entity Resolution is an AWS service providing deduplication.

Graph similarity algorithms, available in Neptune Analytics, find similar graph nodes based on intersecting relationships. The following sample notebook demonstrates algorithms such as Jaccard Similarity in an air routes graph.

Neptune ML uses data currently in the graph to train an ML model in SageMaker to make further predictions about the graph. Notably, Neptune ML offers link prediction, or the prediction of an edge between nodes. You could use this capability to find similar entities, for example, by predicting a link between them.

Because the Neptune ML model generates embeddings, you can perform a vector similarity search on those embeddings in a Neptune Analytics graph. Significantly, the embeddings are graph aware: the embedding for a node represents not just the node’s properties but also the node’s neighborhood in the graph. Neptune ML trains graph neural network (GNN) models, and nodes with similar GNN embeddings are structurally similar in the graph.
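To illustrate the graph similarity approach listed above, here is a minimal Python sketch of Jaccard similarity computed over neighbor sets. It shows the idea only and is not the Neptune Analytics implementation; the airport codes are hypothetical.

```python
def jaccard_similarity(neighbors_a, neighbors_b):
    """Size of the intersection divided by size of the union of two neighbor sets."""
    a, b = set(neighbors_a), set(neighbors_b)
    union = a | b
    if not union:
        return 0.0  # two isolated nodes: define similarity as 0
    return len(a & b) / len(union)

# Hypothetical destinations served from two airports.
routes_from_x = {"AAA", "BBB", "CCC"}
routes_from_y = {"BBB", "CCC", "DDD"}

# Two shared destinations out of four distinct ones.
print(jaccard_similarity(routes_from_x, routes_from_y))  # 0.5
```

Two nodes score high when most of their neighbors are the same, regardless of their own properties, which complements the text-based similarity used in the rest of this post.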

Clean up

If you set up a Neptune Analytics graph or notebook instance to follow along, delete those resources to avoid further costs. The notebooks have cleanup instructions.

Conclusion

A knowledge graph gathers entities from numerous sources. In this post, we demonstrated the use of vector similarity search in Neptune Analytics to find semantically similar entities. We also showed a conventional way to link similar entities.

In part 1, we showed how to use lexical search to find similar entities in a knowledge graph running on Neptune. We combined Neptune with OpenSearch Service using a feature called full text search.

Experiment with this method using the notebooks provided. Think about how to more effectively query and draw relationships in your knowledge graph using the approaches discussed, and share your thoughts in the comments section.

About the Author

Mike Havey is a Senior Solutions Architect for AWS with over 25 years of experience building enterprise applications. Mike is the author of two books and numerous articles. His Amazon author page is https://www.amazon.com/Michael-Havey/e/B001IO9JBI.
