Researchers in computer vision and robotics consistently strive to improve autonomous systems’ perception capabilities. These systems are expected to comprehend their environment accurately in real-time. Developing new methods and algorithms allows for innovations that benefit various industries, including transportation, manufacturing, and healthcare.

A significant challenge in this field is enhancing the precision and efficiency of object detection and segmentation in images and video streams. These tasks require models that can process visual information quickly and correctly to recognize, classify, and outline different objects. This need for speed and accuracy pushes researchers to explore new techniques that can provide reliable results in dynamic environments.

Existing research includes convolutional neural networks (CNNs) and transformer-based object detection and segmentation architectures. CNNs are known for their ability to effectively identify visual patterns, making them well-suited for detailed feature extraction. On the other hand, transformers excel in handling complex tasks due to their versatility and efficiency in processing global contexts. These methods have advanced the field, yet there is room for improvement in balancing accuracy, speed, and computational efficiency.

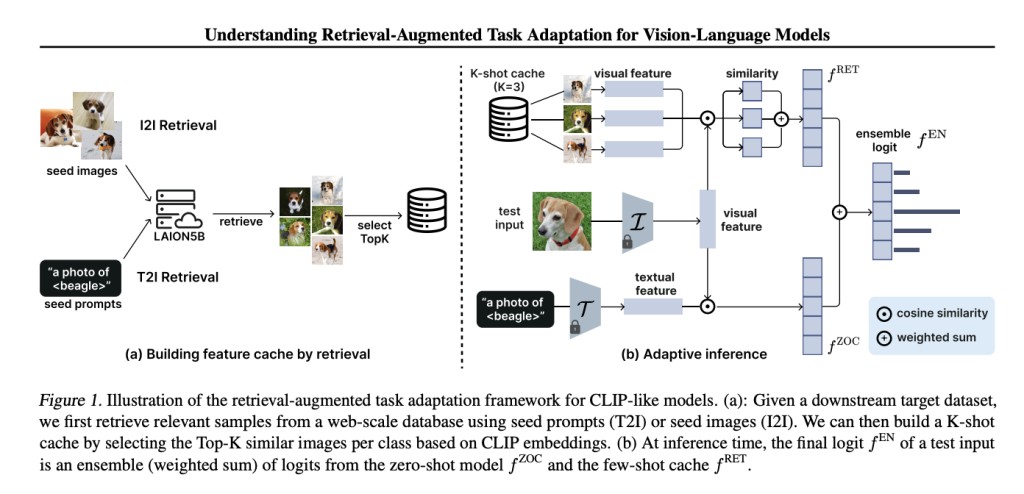

Researchers from the University of Wisconsin-Madison have introduced a new approach focusing on retrieval-augmented task adaptation for vision-language models. Their methodology emphasizes using image-to-image (I2I) retrieval as it consistently outperforms text-to-image (T2I) retrieval in downstream tasks. The method leverages a feature cache built from retrieved samples, significantly impacting the adaptation process and optimizing the performance of vision-language models by incorporating the best practices of retrieval-augmented adaptation.

The research employed retrieval-augmented adaptation for vision-language models, utilizing Caltech101, Birds200, Food101, OxfordPets, and Flowers102 datasets. The approach used a pre-trained CLIP model and external image-caption datasets like LAION to build a feature cache through I2I and T2I retrieval methods. This feature cache was then leveraged to adapt the model for downstream tasks with limited data. The retrieval method gave the model valuable context, enabling it to handle the unique challenges of fine-grained visual categories in these datasets.

The research demonstrated significant performance improvements in retrieval-augmented adaptation for vision-language models. Using I2I retrieval, the method achieved a high accuracy of up to 93.5% on Caltech101, outperforming T2I retrieval by over 10% across various datasets. On datasets like Birds200 and Food101, the proposed model improved classification accuracy by around 15% compared to previous methods. The use of feature cache retrieval led to a 25% reduction in error rates for challenging fine-grained visual categories.

To conclude, the research focused on retrieval-augmented task adaptation, combining I2I and T2I retrieval methods for vision-language models. By utilizing pre-trained models and feature cache retrieval, the study improved model adaptation on several datasets. The approach showed significant advancements in accuracy and error reduction, highlighting the potential of retrieval-augmented adaptation in handling fine-grained visual categories. This research provides valuable insights into enhancing vision-language models, emphasizing the importance of retrieval methods in low-data regimes.

Check out the Paper. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 41k+ ML SubReddit

The post This AI Paper by the University of Wisconsin-Madison Introduces an Innovative Retrieval-Augmented Adaptation for Vision-Language Models appeared first on MarkTechPost.

Source: Read MoreÂ