Large language models (LLMs) are now used in many applications. However, when deployed to GPU servers, their high memory and compute demands result in substantial energy and financial costs.

Some acceleration solutions make it possible to run LLMs on commodity laptop GPUs, but often at some cost in accuracy. Many LLM acceleration methods aim to reduce the number of non-zero weights (sparsity) or the number of bits per weight (quantization).

Researchers from FAIR, GenAI, and Reality Labs at Meta, University of Toronto, Carnegie Mellon University, University of Wisconsin-Madison, and Dana-Farber Cancer Institute investigate reducing the number of layers executed for each token by exiting early during inference.

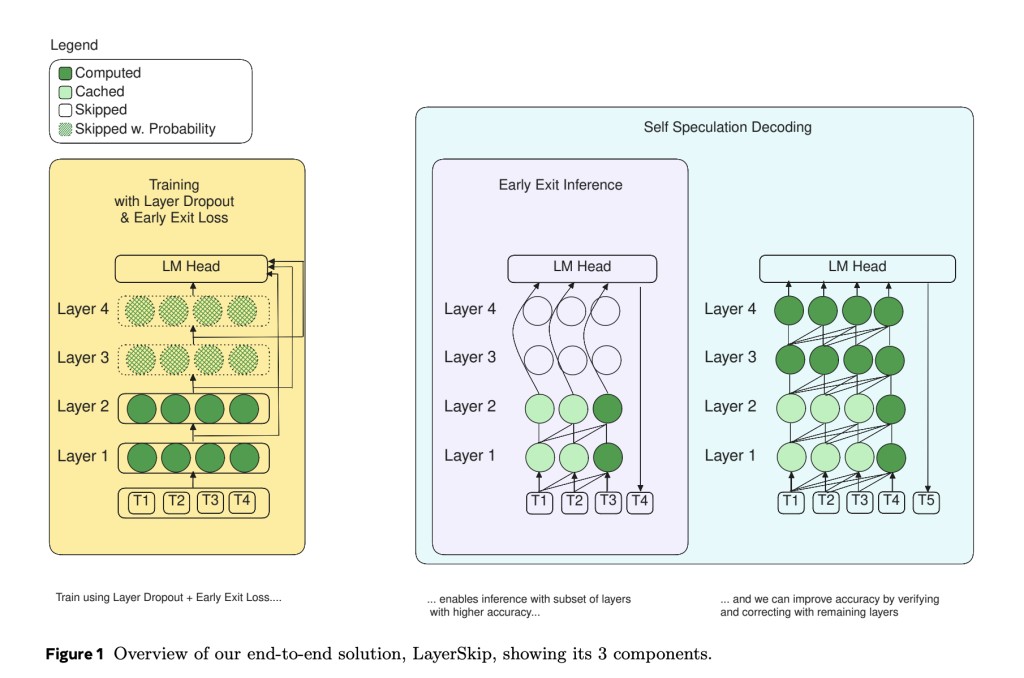

In contrast to quantization or sparsity, accelerating by reducing the number of layers does not require specialized hardware or software kernels. Speculative decoding is another common trend in LLM acceleration. This method pairs a large model, called the main model, with a faster model, called the draft model, and does not compromise accuracy. However, maintaining the key-value (KV) cache for two separate models adds complexity. This work introduces a self-speculative decoding method, a novel approach that combines early exit with speculative decoding and doesn’t need extra models or auxiliary layers.

To motivate their approach, the researchers use an example prompt to examine what happens in each layer of an LLM. They take a Llama1 7B model and feed it the first prompt from the HumanEval coding dataset. The prompt consists of a Python function header and a docstring, and the model autocompletes the function’s body. For each generated token, the output embeddings of each transformer layer are projected onto the language model (LM) head, which consists of the model’s final normalization and linear layers. Softmax is then applied, and the researchers take the index of the output element with the highest value. That index identifies the token predicted at that layer. Some sources call this process the unembedding operation since it transforms an embedding into an index.
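The per-layer unembedding described above can be sketched in a few lines of plain Python. This is a toy illustration, not the authors' code: the sizes, the random weights, the RMSNorm-style normalization without a learned scale, and the helper names (`rms_norm`, `lm_head`, `unembed`) are all assumptions made for the example.

```python
import math
import random

def rms_norm(x, eps=1e-6):
    """Final normalization (assumed RMSNorm-style, without a learned scale)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]

def lm_head(hidden, w_out):
    """Normalize a hidden state, then apply the output linear layer."""
    h = rms_norm(hidden)
    return [sum(w * v for w, v in zip(row, h)) for row in w_out]

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def unembed(hidden, w_out):
    """Return (token_index, probability) for one layer's output embedding."""
    probs = softmax(lm_head(hidden, w_out))
    idx = max(range(len(probs)), key=probs.__getitem__)
    return idx, probs[idx]

# Toy stand-ins (hypothetical sizes and random weights, not a real LLM).
rng = random.Random(0)
d_model, vocab_size, n_layers = 8, 16, 4
w_out = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(vocab_size)]
layer_outputs = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n_layers)]

# Apply the same LM head to every layer's output to see what each layer "predicts".
per_layer_predictions = [unembed(h, w_out) for h in layer_outputs]
for layer, (idx, p) in enumerate(per_layer_predictions, start=1):
    print(f"layer {layer}: token index {idx} (p={p:.3f})")
```

With a real model, `layer_outputs` would be the hidden states of each transformer layer at the current position, and the token index would be mapped back to a string by the tokenizer.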

The researchers note a few things about the token prediction in each layer. First, token predictions made in the earliest layers appear irrelevant, because the weights of the LM head are different from those of the model’s embedding layer. In subsequent layers, the projections converge to the final prediction. Second, using every layer to predict a token is unnecessary. Of the model’s 32 layers, the study shows that a token needs 23.45 layers on average. Therefore, even an ideal early-exit predictor with no compute overhead could save at most about 26% of the computation.
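The upper bound quoted above follows from simple arithmetic. The snippet below is a back-of-the-envelope check that assumes every layer costs the same amount of compute and ignores the embedding and LM head; the variable names are illustrative.

```python
total_layers = 32
avg_layers_needed = 23.45  # average number of layers per token reported in the study

# Fraction of layer computations an ideal, zero-overhead early-exit predictor could skip.
max_saving = (total_layers - avg_layers_needed) / total_layers
print(f"upper bound on compute reduction: {max_saving:.1%}")
```

Under these assumptions the bound comes out slightly under 27%, in line with the roughly 26% figure cited in the text.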

Consequently, LLMs should spend less computation hesitating or “changing their mind” and should predict accurately with fewer layers per token. By default, deep learning models are not incentivized to predict their final output early; they tend to spread computation across all layers. The team shows that even tokens that would normally look easy, such as “for”, needed all 32 layers to be predicted.

The researchers want their model to use later layers for difficult tokens and rely on them less for easier ones. Ideally, the model would depend less on later layers and more on earlier ones. To achieve this, the team uses layer dropout, the practice of skipping layers during training. So that the model is less dependent on later layers, the team applies higher dropout rates to those layers and lower rates to earlier ones.
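A minimal sketch of depth-dependent layer dropout is shown below. The article only says that later layers get higher dropout rates; the exponential schedule, the `p_max` parameter, and the function names here are assumptions chosen for illustration and may not match the schedule used in the paper.

```python
import math
import random

def layer_dropout_rates(n_layers, p_max):
    """Per-layer skip probability: 0 at the first layer, rising to p_max at the last."""
    if n_layers == 1:
        return [0.0]
    return [p_max * (math.exp(l * math.log(2) / (n_layers - 1)) - 1)
            for l in range(n_layers)]

def forward_with_layer_dropout(x, layers, rates, rng, training=True):
    """Apply layers in order; during training, skip each one with its own probability."""
    for layer, p in zip(layers, rates):
        if training and rng.random() < p:
            continue  # layer skipped: x passes through unchanged
        x = layer(x)
    return x

# Toy usage: each "layer" just adds 1, so the result counts the layers that ran.
rates = layer_dropout_rates(n_layers=32, p_max=0.2)
toy_layers = [lambda v: v + 1] * 32
layers_run = forward_with_layer_dropout(0, toy_layers, rates, random.Random(0))
print(f"first rate={rates[0]:.3f}, last rate={rates[-1]:.3f}, layers run={layers_run}")
```

At inference time (`training=False`) no layer is skipped, so the full model is unchanged; the skipping only shapes what the model learns.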

The LM head of an LLM is trained to unembed embeddings from the final transformer layer. It receives no training on how to unembed the outputs of earlier layers. For this reason, the proposed approach adds an early exit loss to the training process so that the LM head can better “understand” the embeddings of earlier layers.

Most prior work on early exit uses a separate LM head for each transformer layer, and some even add extra modules for each early exit. In contrast, the team shares a single LM head across all of the model’s transformer layers. This simplifies deployment and maintenance, shortens training times, and reduces memory consumption during inference and training.
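The idea of an early exit loss computed through one shared LM head can be sketched as follows. This is a simplified illustration for a single token position: the linearly increasing default weights, the omission of normalization, and the function names are assumptions, not the exact formulation in the paper.

```python
import math

def cross_entropy(logits, target):
    """Negative log-probability of the target index under softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_z - logits[target]

def shared_lm_head(hidden, w_out):
    """One output projection reused for every layer (normalization omitted for brevity)."""
    return [sum(w * v for w, v in zip(row, hidden)) for row in w_out]

def early_exit_loss(layer_outputs, w_out, target, weights=None):
    """Weighted average of the cross-entropy losses measured at each layer's exit."""
    n = len(layer_outputs)
    if weights is None:
        weights = [l + 1 for l in range(n)]  # assumption: later layers weigh more
    losses = [cross_entropy(shared_lm_head(h, w_out), target) for h in layer_outputs]
    return sum(w * loss for w, loss in zip(weights, losses)) / sum(weights)

# Toy usage: three "layers" whose outputs move steadily toward token 1.
w_out = [[1.0, 0.0], [0.0, 1.0]]
layer_outputs = [[0.0, 0.0], [0.5, 1.5], [0.0, 4.0]]
loss = early_exit_loss(layer_outputs, w_out, target=1)
print(f"early exit loss: {loss:.4f}")
```

Because every exit goes through the same `w_out`, the gradient of this loss pushes earlier layers to produce embeddings that the single head can already decode, with no extra per-layer heads to store.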

The team notes that exiting early during inference with heuristics or predictors, or modifying the training procedure so that models predict early, will likely reduce accuracy. They therefore find it useful to verify an early prediction and, when it is wrong, correct it by running the remaining layers. They verify and correct the early exit prediction using speculative decoding techniques. A benefit of speculative decoding is that verifying a group of tokens is faster than generating each token auto-regressively. Therefore, they introduce a self-speculative decoding method in which each token is generated auto-regressively using early exit. Then, the remaining layers are used to verify and correct a group of tokens at once.
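The draft-then-verify loop described above can be sketched with two toy next-token functions. This is a greedy, simplified illustration: `draft_next` stands in for the early-exit path and `full_next` for the full model, both are hypothetical, and the verification loop is written sequentially for clarity even though a real implementation would score all draft positions in one batched pass and reuse the draft stage's computation.

```python
def self_speculative_decode(prompt, draft_next, full_next, n_new, k=4):
    """Greedy self-speculative decoding over lists of token ids.

    draft_next(tokens): next token predicted by the early-exit path.
    full_next(tokens):  next token predicted by the full model (all layers).
    """
    tokens = list(prompt)
    target_len = len(prompt) + n_new
    while len(tokens) < target_len:
        # 1) Draft: generate up to k tokens auto-regressively with early exit.
        draft = []
        for _ in range(min(k, target_len - len(tokens))):
            draft.append(draft_next(tokens + draft))
        # 2) Verify: compare each draft token with the full model's choice.
        accepted = []
        for tok in draft:
            correct = full_next(tokens + accepted)
            accepted.append(correct)
            if correct != tok:
                break  # first mismatch: keep the corrected token, drop the rest
        tokens.extend(accepted)
    return tokens

def full_next(tokens):
    """Toy 'all layers' model: a deterministic next-token rule."""
    return (tokens[-1] * 3 + 1) % 7

def draft_next(tokens):
    """Toy 'early exit' model: agrees with full_next except at some positions."""
    return 0 if len(tokens) % 3 == 0 else full_next(tokens)

out = self_speculative_decode([2], draft_next, full_next, n_new=10)
print(out)
```

The key property is that the output matches plain greedy decoding with the full model, since every kept token is one the full model would have chosen; the speedup comes from how many draft tokens are accepted per verification step.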

Some studies propose self-speculative decoding methods that don’t require changing the model weights. This solution does, because it involves fine-tuning or pretraining the model. When pretraining from scratch with layer dropout, the learning rate needs to be increased to maintain accuracy. However, finding the right learning rate can be challenging and time-consuming.

The researchers hope that this study will encourage engineers and researchers to incorporate the proposed layer dropout and early exit loss into their pretraining and fine-tuning recipes. Layer dropout can speed up training when pretraining from scratch, and it could be combined with parameter-efficient techniques such as LoRA for fine-tuning, potentially improving model performance.

In future work, the team wants to improve the accuracy of early exit layers to achieve greater self-speculative decoding speedups. They also hope to investigate dynamic conditions that select a different exit layer for each token, increasing the token acceptance rate for self-speculative decoding.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post LayerSkip: An End-to-End AI Solution to Speed-Up Inference of Large Language Models (LLMs) appeared first on MarkTechPost.
