News & Updates

CVE ID : CVE-2025-53602

Published : July 4, 2025, 9:15 p.m.

Description : Zipkin through 3.5.1 has a /heapdump endpoint (associated with the use of Spring Boot Actuator), an issue similar to CVE-2025-48927.

Severity: 5.3 | MEDIUM

Visit the link for more details, such as CVSS details, affected products, timeline, and more…

CVE ID : CVE-2025-7069

Published : July 4, 2025, 9:15 p.m.

Description : A vulnerability classified as problematic was found in HDF5 1.14.6. It affects the function H5FS__sect_link_size in the file src/H5FSsection.c. Manipulation leads to a heap-based buffer overflow. The attack must be launched on the local host. The exploit has been publicly disclosed and may be used.

Severity: 3.3 | LOW

CVE ID : CVE-2025-53365

Published : July 4, 2025, 10:15 p.m.

Description : The MCP Python SDK, called `mcp` on PyPI, is a Python implementation of the Model Context Protocol (MCP). Prior to version 1.10.0, if a client deliberately triggers an exception after establishing a streamable HTTP session, the server can raise an uncaught ClosedResourceError, causing it to crash and requiring a restart to restore service. Impact may vary depending on the deployment conditions and the presence of infrastructure-level resilience measures. Version 1.10.0 contains a patch for the issue.

Severity: 0.0 | NA

CVE ID : CVE-2025-53366

Published : July 4, 2025, 10:15 p.m.

Description : The MCP Python SDK, called `mcp` on PyPI, is a Python implementation of the Model Context Protocol (MCP). Prior to version 1.9.4, a validation error in the MCP SDK can cause an unhandled exception when processing malformed requests, resulting in service unavailability (500 errors) until the server is manually restarted. Impact may vary depending on the deployment conditions and the presence of infrastructure-level resilience measures. Version 1.9.4 contains a patch for the issue.

Severity: 0.0 | NA
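
Both MCP entries above name a first patched release (1.9.4 and 1.10.0), so a quick local check is to compare the installed `mcp` version against those thresholds. The following is a minimal sketch, not an official tool: the helper names (`parse_version`, `affected_cves`, `installed_mcp_version`) are hypothetical, and the parser is a simplification that reads only leading numeric components, so a pre-release such as `1.10.0rc1` would be treated like `1.10.0`.

```python
from importlib import metadata

# First patched release for each advisory, taken from the descriptions above.
PATCHED = {
    "CVE-2025-53365": (1, 10, 0),
    "CVE-2025-53366": (1, 9, 4),
}


def parse_version(text):
    """Turn '1.9.4' into (1, 9, 4), padding short versions with zeros.

    Simplification: only leading digits of each dotted part are read,
    and parsing stops at the first part carrying a non-numeric suffix.
    """
    parts = []
    for piece in text.strip().split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
        if digits != piece:
            break
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def affected_cves(installed):
    """Return the advisories whose patched release is newer than `installed`."""
    version = parse_version(installed)
    return sorted(cve for cve, fixed in PATCHED.items() if version < fixed)


def installed_mcp_version():
    """Return the installed `mcp` distribution version, or None if absent."""
    try:
        return metadata.version("mcp")
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    found = installed_mcp_version()
    if found is None:
        print("mcp is not installed in this environment")
    else:
        print(found, affected_cves(found) or "not affected by these two CVEs")
```

Because 1.10.0 is the later of the two patched releases, upgrading to it or newer addresses both advisories as described.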

Artificial Intelligence

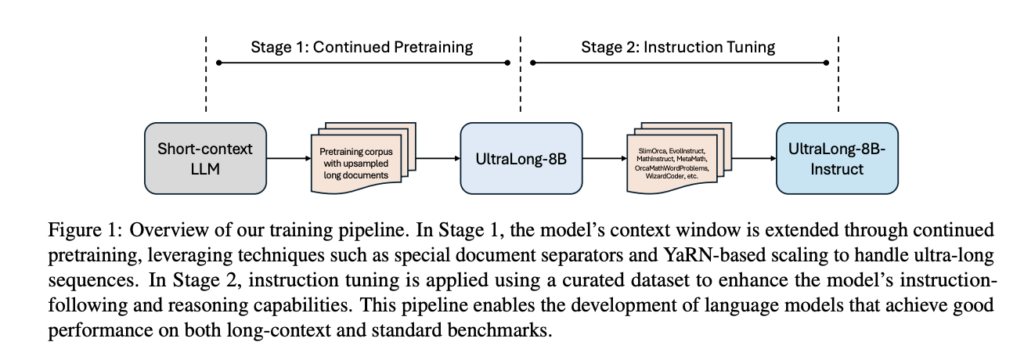

The most capable model you can run on a single GPU or TPU.

Introducing Gemini Robotics and Gemini Robotics-ER, AI models designed for robots to understand, act and…

Native image output is available in Gemini 2.0 Flash for developers to experiment with in…

Training Diffusion Models with Reinforcement Learning

We deployed 100 reinforcement learning (RL)-controlled cars into rush-hour…