Large language models (LLMs) are the backbone of numerous computational platforms, driving innovations that impact a broad spectrum of technological applications. These models are pivotal in processing and interpreting vast amounts of data, yet they are often hindered by high operational costs and inefficiencies related to system tool utilization.

Optimizing LLM performance without prohibitive computational expense is a significant challenge in this field. Traditionally, LLM-based systems make their full set of tools available for any given task, regardless of what the task actually needs. This broad tool activation inflates prompts, drains computational resources, and significantly increases the cost of data processing tasks.

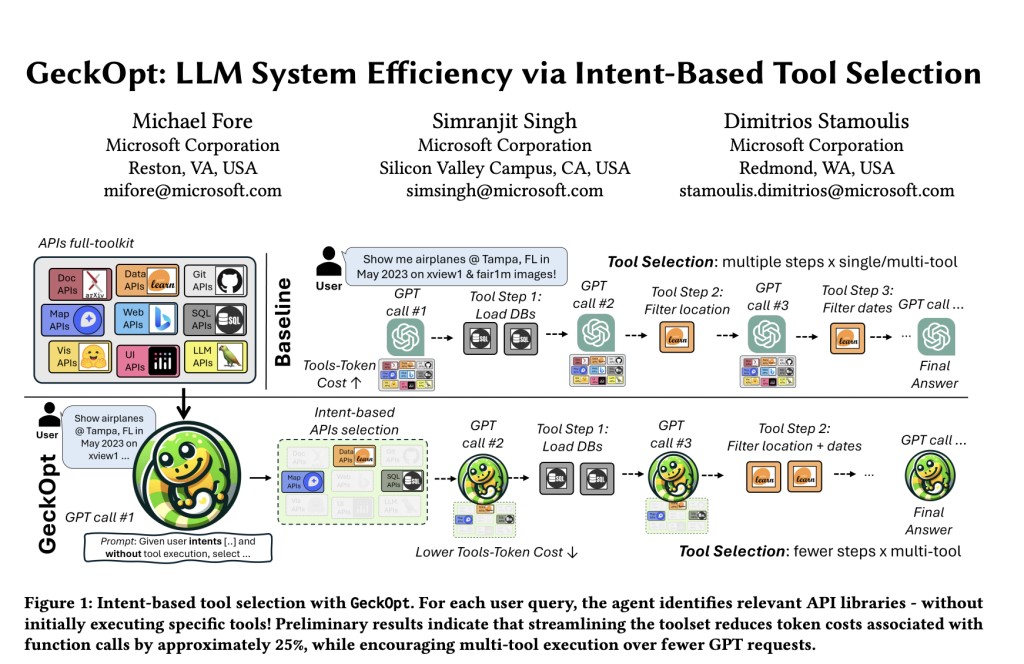

Emerging methods refine tool selection in LLMs by matching the tools deployed to the task at hand. By using the model's reasoning capabilities to identify the intent behind a user command, these systems can narrow the toolset offered for task execution. Activating fewer tools directly improves system efficiency and reduces computational overhead.

The GeckOpt system, developed by Microsoft researchers, takes an intent-based approach to tool selection. It analyzes user intent up front, so that the set of API tools can be narrowed before task execution begins. Only the tools most relevant to the task's specific requirements are kept, which minimizes unnecessary activations and focuses computational power where it is most needed.
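The gating idea described above can be sketched in a few lines. This is a minimal illustration, not GeckOpt's implementation: the tool names, the intent labels, and the keyword-based `classify_intents` function are all hypothetical stand-ins (the article describes intent being identified through the model's reasoning, not keyword matching). The sketch only shows the control flow: identify intent first, then pass a narrowed toolset to the model.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tool:
    name: str
    intents: frozenset[str]  # intents this tool is relevant to


# Hypothetical tool registry; a real system would hold API tool definitions.
TOOLS = [
    Tool("search_documents", frozenset({"lookup"})),
    Tool("send_email", frozenset({"communicate"})),
    Tool("create_event", frozenset({"schedule"})),
    Tool("plot_chart", frozenset({"visualize"})),
]

# Assumption: a keyword table stands in for the LLM-based intent analysis.
INTENT_KEYWORDS = {
    "lookup": ("search", "find"),
    "communicate": ("email", "send"),
    "schedule": ("schedule", "meeting"),
    "visualize": ("plot", "chart"),
}


def classify_intents(query: str) -> set[str]:
    """Return every intent whose keywords appear as words in the query."""
    words = set(query.lower().split())
    return {
        intent
        for intent, keywords in INTENT_KEYWORDS.items()
        if words.intersection(keywords)
    }


def select_tools(query: str, tools: list[Tool] = TOOLS) -> list[Tool]:
    """Narrow the toolset to tools matching the query's intents.

    Falls back to the full toolset when no intent is recognized, so the
    gate never leaves the model without tools.
    """
    intents = classify_intents(query)
    if not intents:
        return list(tools)
    return [tool for tool in tools if tool.intents & intents]


if __name__ == "__main__":
    for tool in select_tools("schedule a meeting and email the team"):
        print(tool.name)
```

The fallback to the full toolset is a design choice of this sketch: a misclassified or unrecognized intent then costs extra tokens rather than a failed task.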

Preliminary results from deploying GeckOpt in a real-world setting, on the Copilot platform with over 100 GPT-4-Turbo nodes, are promising. The system reduced token consumption by up to 24.6% while keeping success rates within 1% of the baseline. These savings translate into lower system costs and improved response times without a significant loss in performance quality, which suggests GeckOpt remains reliable under varied operational conditions.
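To make the reported figures concrete, the arithmetic can be written out as a small helper. Only the 24.6% reduction and the 1% tolerance come from the article; the baseline token count and the success rates below are hypothetical inputs chosen for illustration, and the sketch assumes the 1% deviation is measured in percentage points.

```python
def tokens_after_reduction(baseline_tokens: int, reduction_pct: float) -> int:
    """Tokens consumed after applying a percentage reduction to a baseline."""
    if not 0 <= reduction_pct <= 100:
        raise ValueError("reduction_pct must be between 0 and 100")
    return round(baseline_tokens * (100 - reduction_pct) / 100)


def within_tolerance(baseline_rate: float, new_rate: float, tol: float = 1.0) -> bool:
    """True if two success rates (in percent) differ by at most `tol` points."""
    return abs(baseline_rate - new_rate) <= tol


if __name__ == "__main__":
    baseline = 1_000_000  # hypothetical baseline token count
    remaining = tokens_after_reduction(baseline, 24.6)  # best case reported
    print(f"tokens saved: {baseline - remaining}")
    # Hypothetical success rates, used only to show the tolerance check.
    print(within_tolerance(80.0, 79.3))
```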

GeckOpt's results make a strong case for wider adoption of intent-based tool selection. By reducing operational load and optimizing tool use, the system cuts costs and improves the scalability of LLM applications across different platforms. Such techniques could offer a sustainable, cost-effective model for large-scale AI deployments.

In conclusion, integrating intent-based tool selection through systems like GeckOpt is a step towards optimizing the infrastructure around large language models. The approach reduces the operational demands on LLM systems and supports a more cost-efficient computational environment. As these models evolve and their applications expand, advances of this kind will be crucial to harnessing AI's potential while maintaining economic viability.

Check out the Paper. All credit for this research goes to the researchers of this project.


The post Microsoft’s GeckOpt Optimizes Large Language Models: Enhancing Computational Efficiency with Intent-Based Tool Selection in Machine Learning Systems appeared first on MarkTechPost.
