Have you ever wondered how we can determine the true impact of a particular intervention or treatment on certain outcomes? This is a crucial question in fields like medicine, economics, and social sciences, where understanding cause-and-effect relationships is essential. Researchers have long grappled with this challenge, known as the “Fundamental Problem of Causal Inference”: for any individual, we observe only the outcome under the treatment actually received, and we typically don’t know what would have happened under an alternative intervention. This issue has led to the development of various indirect methods to estimate causal effects from observational data.

Existing approaches include the S-Learner, which trains a single model with the treatment variable as one of its input features, and the T-Learner, which fits separate models for the treated and untreated groups. However, both have known weaknesses: the S-Learner can be biased toward a zero treatment effect, because the model may largely ignore the treatment feature, while the T-Learner is data-inefficient, because each of its models is trained on only part of the data.
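To make the structural difference between the two meta-learners concrete, here is a minimal sketch that uses ordinary least squares (`numpy.linalg.lstsq`) as the base model. The base learner, the function names, and the toy data generator `make_toy_data` (a randomized experiment with true effect tau(x) = 1 + 2·x0) are all hypothetical choices for illustration. Note that this toy does not reproduce the shrink-toward-zero bias, which typically appears with regularized or tree-based learners; it only shows that a single linear model with the treatment as a plain feature can express just one constant effect, whereas the T-Learner's two separate models recover the heterogeneity.

```python
import numpy as np

def _design(X):
    # Prepend an intercept column to the feature matrix.
    return np.column_stack([np.ones(len(X)), X])

def fit_ols(X, y):
    coef, *_ = np.linalg.lstsq(_design(X), y, rcond=None)
    return coef

def predict_ols(coef, X):
    return _design(X) @ coef

def s_learner_cate(X, t, y):
    """S-Learner: one model, with the treatment indicator appended as a feature."""
    coef = fit_ols(np.column_stack([X, t]), y)
    X1 = np.column_stack([X, np.ones(len(X))])   # everyone treated
    X0 = np.column_stack([X, np.zeros(len(X))])  # everyone untreated
    return predict_ols(coef, X1) - predict_ols(coef, X0)

def t_learner_cate(X, t, y):
    """T-Learner: separate models fit on treated units and on control units."""
    coef1 = fit_ols(X[t == 1], y[t == 1])
    coef0 = fit_ols(X[t == 0], y[t == 0])
    return predict_ols(coef1, X) - predict_ols(coef0, X)

def make_toy_data(n=2000, seed=0):
    """Hypothetical randomized data with heterogeneous effect tau(x) = 1 + 2*x0."""
    rng = np.random.default_rng(seed)
    X = rng.normal(size=(n, 3))
    t = rng.integers(0, 2, size=n)
    tau = 1.0 + 2.0 * X[:, 0]
    y = X @ np.array([0.5, -1.0, 0.3]) + t * tau + rng.normal(scale=0.5, size=n)
    return X, t, y, tau

X, t, y, tau = make_toy_data()
cate_s = s_learner_cate(X, t, y)
cate_t = t_learner_cate(X, t, y)
# The linear S-Learner assigns (numerically) the same effect to every unit.
print("S-Learner effect spread:", cate_s.std())
print("T-Learner RMSE vs. true effect:", np.sqrt(np.mean((cate_t - tau) ** 2)))
```

With a flexible base model the S-Learner can represent heterogeneity too; the point here is only that the single-model design leaves it up to the learner whether the treatment feature is used at all.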

More sophisticated methods such as TARNet, Dragonnet, and BCAUSS build on representation learning with neural networks. These models typically consist of a pre-representation component, which learns a representation of the input data, and a post-representation component, which maps that representation to the desired outputs.
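The pre-/post-representation split can be illustrated with a TARNet-style forward pass: a shared representation network followed by one outcome head per treatment arm (Dragonnet additionally attaches a treatment-propensity head to the same representation, which is omitted here). This is a minimal numpy sketch, not any of the papers' code: the class name `TwoHeadNet`, the layer sizes, and the random initialization are hypothetical, and training is not shown beyond the factual loss, which only scores each unit on the arm it was actually observed in.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def dense(rng, n_in, n_out):
    """Randomly initialised weights and zero bias for one fully connected layer."""
    return rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out)

def mlp(layers, h, relu_last):
    """Apply dense layers with ReLU in between (and optionally after the last)."""
    for i, (W, b) in enumerate(layers):
        h = h @ W + b
        if relu_last or i < len(layers) - 1:
            h = relu(h)
    return h

class TwoHeadNet:
    """Untrained TARNet-style network with illustrative sizes."""

    def __init__(self, n_features, n_hidden=16, seed=0):
        rng = np.random.default_rng(seed)
        # Pre-representation: shared by treated and control predictions.
        self.rep = [dense(rng, n_features, n_hidden), dense(rng, n_hidden, n_hidden)]
        # Post-representation: one outcome head per treatment arm.
        self.head0 = [dense(rng, n_hidden, n_hidden), dense(rng, n_hidden, 1)]
        self.head1 = [dense(rng, n_hidden, n_hidden), dense(rng, n_hidden, 1)]

    def forward(self, X):
        phi = mlp(self.rep, X, relu_last=True)
        y0 = mlp(self.head0, phi, relu_last=False)[:, 0]
        y1 = mlp(self.head1, phi, relu_last=False)[:, 0]
        return y0, y1

    def cate(self, X):
        y0, y1 = self.forward(X)
        return y1 - y0

def factual_mse(y0_hat, y1_hat, t, y):
    """Loss on observed outcomes only: each unit is scored on its own arm."""
    y_hat = np.where(t == 1, y1_hat, y0_hat)
    return float(np.mean((y_hat - y) ** 2))

X = np.random.default_rng(1).normal(size=(5, 4))
net = TwoHeadNet(n_features=4)
y0_hat, y1_hat = net.forward(X)
print(net.cate(X).shape)  # (5,)
```

Sharing the representation lets both heads learn from all units, which is one way these architectures address the T-Learner's data-efficiency problem.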

While these representation-based approaches have shown promising results, they often overlook a particular source of bias: spurious interactions (see Table 1) between variables within the model. But what exactly are spurious interactions, and why are they problematic? Imagine you’re trying to estimate the causal effect of a treatment on an outcome while considering various other factors (covariates) that might influence the outcome. In some cases, the neural network might detect and rely on interactions between variables that don’t actually have a causal relationship. These spurious interactions can act as correlational shortcuts, distorting the estimated causal effects, especially when data is limited.

To address this issue, researchers from the Universitat de Barcelona have proposed a novel method called Neural Networks with Causal Graph Constraints (NN-CGC). The core idea behind NN-CGC is to constrain the learned distribution of the neural network to better align with the causal model, effectively reducing the reliance on spurious interactions.

Here’s a simplified explanation of how NN-CGC works:

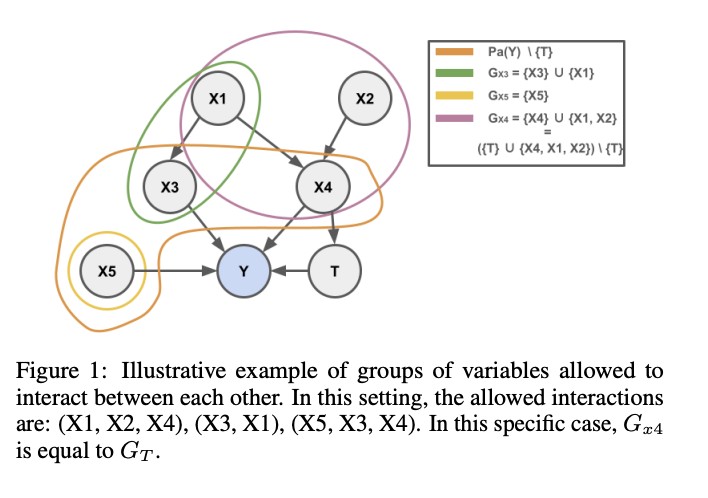

Variable Grouping: The input variables are divided into groups based on the causal graph (or expert knowledge if the causal graph is unavailable). Each group contains variables that are causally related to each other as shown in Figure 1.

Independent Causal Mechanisms: Each variable group is processed independently through a set of layers, modeling the Independent Causal Mechanisms for the outcome variable and its direct causes.

Constraining Interactions: By processing each variable group separately, NN-CGC ensures that the learned representations are free from spurious interactions between variables from different groups.

Post-representation: The outputs from the independent group representations are combined and passed through a linear layer to form the final representation. This final representation can then be fed into the output heads of existing architectures like TARNet, Dragonnet, or BCAUSS.
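The grouped pre-representation described in these steps can be sketched in a few lines of numpy. This is a hypothetical illustration, not the authors' released code: the class name `GroupedRepresentation`, the group assignment `[[0, 1], [2, 3, 4]]` (standing in for groups derived from a causal graph or expert knowledge), and the layer sizes are all assumptions. Each group gets its own small MLP that only sees its own columns, and the group outputs are concatenated and passed through one linear layer. Because that last step is linear, the resulting representation is a sum of per-group terms, so it contains no interaction between variables from different groups; the check at the end verifies this by swapping one group's values between two inputs.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

class GroupedRepresentation:
    """Hypothetical NN-CGC-style pre-representation: one small MLP per variable group."""

    def __init__(self, groups, n_hidden=8, n_out=16, seed=0):
        rng = np.random.default_rng(seed)
        self.groups = [np.asarray(g, dtype=int) for g in groups]
        self.group_params = []
        for g in self.groups:
            W1 = rng.normal(0.0, 1.0 / np.sqrt(len(g)), size=(len(g), n_hidden))
            W2 = rng.normal(0.0, 1.0 / np.sqrt(n_hidden), size=(n_hidden, n_hidden))
            self.group_params.append((W1, np.zeros(n_hidden), W2, np.zeros(n_hidden)))
        # A single linear layer over the concatenated group outputs.
        n_concat = n_hidden * len(self.groups)
        self.W_out = rng.normal(0.0, 1.0 / np.sqrt(n_concat), size=(n_concat, n_out))
        self.b_out = np.zeros(n_out)

    def group_outputs(self, X):
        """Each group's MLP only ever sees that group's columns of X."""
        outs = []
        for g, (W1, b1, W2, b2) in zip(self.groups, self.group_params):
            h = relu(X[:, g] @ W1 + b1)
            outs.append(relu(h @ W2 + b2))
        return outs

    def __call__(self, X):
        H = np.concatenate(self.group_outputs(X), axis=1)
        return H @ self.W_out + self.b_out  # linear: no cross-group nonlinearity here

# Usage with a hypothetical split of five covariates into two groups.
rep = GroupedRepresentation(groups=[[0, 1], [2, 3, 4]])
a, b = np.random.default_rng(2).normal(size=(2, 5))
mix1 = np.concatenate([a[:2], b[2:]])  # group 1 from a, group 2 from b
mix2 = np.concatenate([b[:2], a[2:]])  # group 1 from b, group 2 from a
P = rep(np.stack([a, b, mix1, mix2]))
# Additivity across groups means the two sums match.
print(np.allclose(P[0] + P[1], P[2] + P[3]))  # True
```

In an actual model, the output `rep(X)` would take the place of the shared representation that feeds the outcome heads; those heads, and any interactions they may introduce, are not modeled in this sketch.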

By incorporating causal constraints in this manner, NN-CGC aims to mitigate the bias introduced by spurious variable interactions, leading to more accurate causal effect estimations.

The researchers evaluated NN-CGC on various synthetic and semi-synthetic benchmarks, including the well-known IHDP and JOBS datasets. The results are promising: across multiple scenarios and metrics (such as PEHE, the precision in estimation of heterogeneous effects, and the error in the estimated average treatment effect, ATE), the constrained versions of TARNet, Dragonnet, and BCAUSS (combined with NN-CGC) consistently outperformed their unconstrained counterparts, achieving new state-of-the-art performance.
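For readers unfamiliar with the two metrics, here is a small sketch of how they are commonly computed when the true individual effects are known, which is the case for synthetic and semi-synthetic data. The function names are hypothetical, and conventions vary: some papers report PEHE as the mean squared error itself rather than its square root, which is the form used here. The toy numbers show why both metrics matter: individual errors can cancel out in the average.

```python
import numpy as np

def pehe(tau_true, tau_hat):
    """Root mean squared error between true and estimated individual effects."""
    tau_true = np.asarray(tau_true, dtype=float)
    tau_hat = np.asarray(tau_hat, dtype=float)
    return float(np.sqrt(np.mean((tau_hat - tau_true) ** 2)))

def ate_error(tau_true, tau_hat):
    """Absolute difference between estimated and true average treatment effect."""
    return float(abs(np.mean(tau_hat) - np.mean(tau_true)))

tau_true = [1.0, 2.0, 3.0, 4.0]
tau_hat = [1.5, 2.0, 2.5, 4.0]
print(ate_error(tau_true, tau_hat))  # 0.0: the errors cancel on average
print(pehe(tau_true, tau_hat))       # about 0.354: individual errors do not cancel
```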

One interesting observation is that in high-noise environments, the unconstrained models sometimes performed better than the constrained ones. This suggests that in such cases, the constraints might be discarding some causally valid information alongside the spurious interactions.

Overall, NN-CGC presents a novel and flexible approach to incorporating causal information into neural networks for causal effect estimation. By addressing the often-overlooked issue of spurious interactions, it demonstrates significant improvements over existing methods. The researchers have made their code openly available, allowing others to build upon and refine this promising technique.


The post A New AI Approach for Estimating Causal Effects Using Neural Networks appeared first on MarkTechPost.