Cohere is the leading enterprise AI platform, building large language models (LLMs) which help businesses unlock the potential of their data. Operating at the frontier of AI, Cohere’s models provide a more intuitive way for users to retrieve, summarize, and generate complex information.

Cohere offers both text generation and embedding models to its customers. Enterprises running mission-critical AI workloads select Cohere because its models offer the best performance-cost tradeoff and can be deployed in production at scale. Cohere’s platform is cloud-agnostic. Their models are accessible through their own API as well as popular cloud managed services, and can be deployed on a virtual private cloud (VPC) or even on-prem to meet companies where their data is, offering the highest levels of flexibility and control.

Cohere’s leading Embed 3 and Rerank 3 models can be used with MongoDB Atlas Vector Search to convert MongoDB data to vectors and build a state-of-the-art semantic search system. Search results also can be passed to Cohere’s Command R family of models for retrieval augmented generation (RAG) with citations.

Check out our AI resource page to learn more about building AI-powered apps with MongoDB.

A new approach to vector embeddings

It is in the realm of embedding where Cohere has made a host of recent advances. Described as “AI for language understanding,†Embed is Cohere’s leading text representation language model. Cohere offers both English and multilingual embedding models, and gives users the ability to specify the type of data they are computing an embedding for (e.g., search document, search query). The result is embeddings that improve the accuracy of search results for traditional enterprise search or retrieval-augmented generation.

One challenge developers faced using Embed was that documents had to be passed one by one to the model endpoint, limiting throughput when dealing with larger data sets. To address that challenge and improve developer experience, Cohere has recently announced its new Embed Jobs endpoint. Now entire data sets can be passed in one operation to the model, and embedded outputs can be more easily ingested back into your storage systems.

Additionally, with only a few lines of code, Rerank 3 can be added at the final stage of search systems to improve accuracy. It also works across 100+ languages and offers uniquely high accuracy on complex data such as JSON, code, and tabular structure. This is particularly useful for developers who rely on legacy dense retrieval systems.

Demonstrating how developers can exploit this new endpoint, we have published the How to use Cohere embeddings and rerank modules with MongoDB Atlas tutorial. Readers will learn how to store, index, and search the embeddings from Cohere. They will also learn how to use the Cohere Rerank model to provide a powerful semantic boost to the quality of keyword and vector search results.

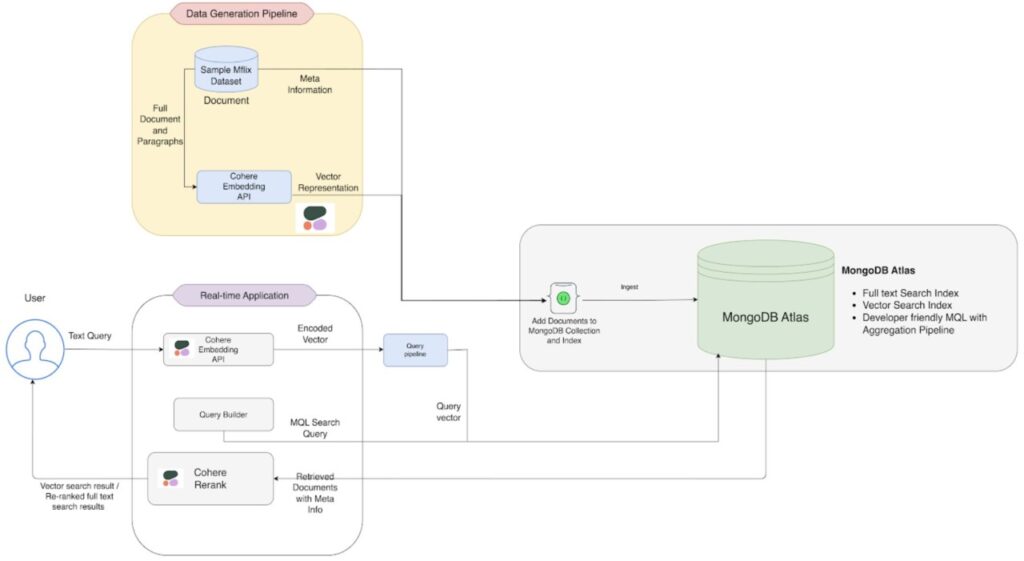

Figure 1: Illustrating the embedding generation and search workflow shown in the tutorial

Why MongoDB Atlas and Cohere?

MongoDB Atlas provides a proven OLTP database handling high read and write throughput backed by transactional guarantees. Pairing these capabilities with Cohere’s batch embeddings is massively valuable to developers building sophisticated gen AI apps. Developers can be confident that Atlas Vector Search will handle high scale vector ingestion, making embeddings immediately available for accurate and reliable semantic search and RAG. Increasing the speed of experimentation, developers and data scientists can configure separate vector search indexes side by side to compare the performance of different parameters used in the creation of vector embeddings.

In addition to batch embeddings, Atlas Triggers can also be used to embed new or updated source content in real time, as illustrated in the Cohere workflow shown in Figure 2.

Figure 2: MongoDB Atlas Vector Search supports Cohere’s batch and real time workflows. (Image courtesy of Cohere)

Supporting both batch and real-time embeddings from Cohere makes MongoDB Atlas well suited to highly dynamic gen AI-powered apps that need to be grounded in live, operational data. Developers can use MongoDB’s expressive query API to pre-filter query predicates against metadata, making it much faster to access and retrieve the more relevant vector embeddings. The unification and synchronization of source application data, metadata, and vector embeddings in a single platform, accessed by a single API, makes building gen AI apps faster, with lower cost and complexity. Those apps can be layered on top of the secure, resilient, and mature MongoDB Atlas developer data platform that is used today by over 45,000 customers spanning startups to enterprises and governments handling mission-critical workloads.

What’s next?

To start your journey into gen AI and Atlas Vector Search, review our 10-minute Learning Byte. In the video, you’ll learn about use cases, benefits, and how to get started using Atlas Vector Search.

Source: Read More