Are you curious about the intricate world of large language models (LLMs) and the technical jargon that surrounds them? Understanding the terminology, from the foundational aspects of training and fine-tuning to the cutting-edge concepts of transformers and reinforcement learning, is the first step towards demystifying the powerful algorithms that drive modern AI language systems. In this article, we delve into 25 essential terms to enhance your technical vocabulary and provide insights into the mechanisms that make LLMs so transformative.

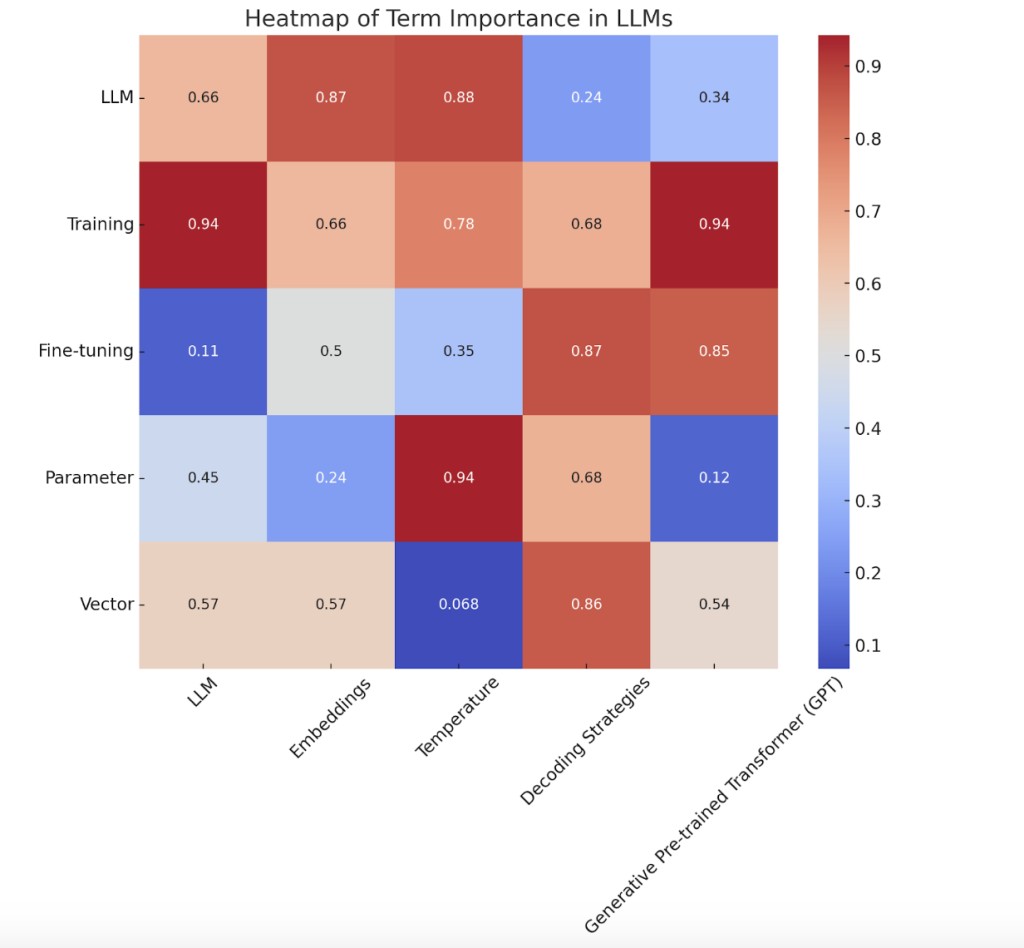

Heatmap representing the relative importance of terms in the context of LLMs

Large Language Models (LLMs) are advanced AI systems trained on extensive text datasets to understand and generate human-like text. They use deep learning techniques to process and produce language in a contextually relevant manner. The development of LLMs, such as OpenAI’s GPT series, Google’s Gemini, Anthropic AI’s Claude, and Meta’s Llama models, marks a significant advancement in natural language processing.

2. Training

Training refers to teaching a language model to understand and generate text by exposing it to a large dataset. The model learns to predict the next word in a sequence, improving its accuracy over time through adjustments to its internal parameters. This process is foundational for developing any AI that handles language tasks.

3. Fine-tuning

Fine-tuning is a process where a pre-trained language model is further trained (or tuned) on a smaller, specific dataset to specialize in a particular domain or task. This allows the model to perform better on tasks not covered extensively in the original training data.

4. Parameter

In the context of neural networks, including LLMs, a parameter is a variable part of the model’s architecture learned from the training data. Parameters (like weights in neural networks) are adjusted during training to reduce the difference between predicted and actual output.Â

5. Vector

In machine learning, vectors are arrays of numbers representing data in a format that algorithms can process. In language models, words or phrases are converted into vectors, often called embeddings, which capture semantic meanings that the model can understand and manipulate.Â

6. Embeddings

Embeddings are dense vector representations of text where familiar words have similar representations in vector space. This technique helps capture the context and semantic similarity between words, crucial for tasks like machine translation and text summarization.

7. Tokenization

Tokenization is splitting text into pieces, called tokens, which could be words, subwords, or characters. This is a preliminary step before processing text using language models, as it helps handle varied text structures and languages.

8. Transformers

Transformers are neural network architecture that relies on mechanisms called self-attention to weigh the influence of different parts of the input data differently. This architecture is very effective for many natural language processing tasks and is at the core of most modern LLMs.

9. Attention

Attention mechanisms in neural networks enable models to concentrate on different segments of the input sequence while generating a response, mirroring how human attention operates during activities such as reading or listening. This capability is essential for comprehending context and producing coherent responses.

10. Inference

Inference refers to using a trained model to make predictions. In the context of LLMs, inference is when the model generates text based on input data using the knowledge it has learned during training. This is the phase where the practical application of LLMs is realized.

11. Temperature

In language model sampling, temperature is a hyperparameter that controls the randomness of predictions by scaling the logits before applying softmax. A higher temperature produces more random outputs, while a lower temperature makes the model’s output more deterministic.

The frequency parameter in language models adjusts the likelihood of tokens based on their frequency of occurrence. This parameter helps balance the generation of common versus rare words, influencing the model’s diversity and accuracy in text generation.

13. Sampling

Sampling in the context of language models refers to generating text by randomly picking the next word based on its probability distribution. This approach allows models to generate varied and often more creative text outputs.

14. Top-k Sampling

Top-k sampling is a technique in which the model’s choice for the next word is limited to the k most likely next words according to the model’s predictions. This method reduces the randomness of text generation while still allowing for variability in the output.

15. RLHF (Reinforcement Learning from Human Feedback)

Reinforcement Learning from Human Feedback is a technique where a model is fine-tuned based on human feedback rather than just raw data. This approach aligns the model’s outputs with human values and preferences, significantly improving its practical effectiveness.

Decoding strategies determine how language models select output sequences during generation. Strategies include greedy decoding, where the most likely next word is chosen at each step, and beam search, which expands on greedy decoding by considering multiple possibilities simultaneously. These strategies significantly affect the output’s coherence and diversity.

Language model prompting involves designing inputs (or prompts) that guide the model in generating specific types of outputs. Effective prompting can improve performance on tasks like question answering or content generation without further training.

18. Transformer-XL

Transformer-XL extends the existing transformer architecture, enabling learning dependencies beyond a fixed length without disrupting temporal coherence. This architecture is crucial for tasks involving long documents or sequences.

19. Masked Language Modeling (MLM)

Masked Language Modeling entails masking certain input data segments during training, prompting the model to predict the concealed words. This method forms a cornerstone in models such as BERT, employing MLM to enhance pre-training effectiveness.

20. Sequence-to-Sequence Models (Seq2Seq)

Seq2Seq models are designed to convert sequences from one domain to another, such as translating text from one language or converting questions to answers. These models typically involve an encoder and a decoder.

21. Generative Pre-trained Transformer (GPT)

Generative Pre-trained Transformer refers to a series of language processing AI models designed by OpenAI. GPT models are trained using unsupervised learning to generate human-like text based on their input.Â

22. Perplexity

Perplexity gauges the predictive accuracy of a probability model on a given sample. Within language models, reduced perplexity suggests superior prediction of test data, typically associated with smoother and more precise text generation.

Multi-head attention, a component in transformer models, enables the model to focus on various representation subspaces at different positions simultaneously. This enhances the model’s ability to concentrate on relevant information dynamically.

Contextual embeddings are representations of words that consider the context in which they appear. Unlike traditional embeddings, these are dynamic and change based on the surrounding text, providing a richer semantic understanding.

Autoregressive models in language modeling predict subsequent words based on previous ones in a sequence. This approach is fundamental in models like GPT, where each output word becomes the next input, facilitating coherent long text generation.

The post Understanding Key Terminologies in Large Language Model (LLM) Universe appeared first on MarkTechPost.

Source: Read MoreÂ