Code generation has emerged as a significant area for evaluating and deploying Large Language Models (LLMs). However, many of the current coding benchmarks, like HumanEval and MBPP, have achieved solution rates above 90% as language models have grown in size and new inference techniques have been created. This saturation points to the need for more difficult benchmarks that can highlight the limitations of existing models and inference techniques while also offering suggestions for improving the capacity of these models for algorithmic reasoning.

Competitive programming offers itself a good path to pursue in this regard. It is intended to objectively evaluate both the development of unique algorithms and human reasoning in challenging situations. There hasn’t been enough problem diversity, in-depth problem analyses, or comprehensive unit test suites in competitive programming evaluation to properly assess algorithmic reasoning abilities.

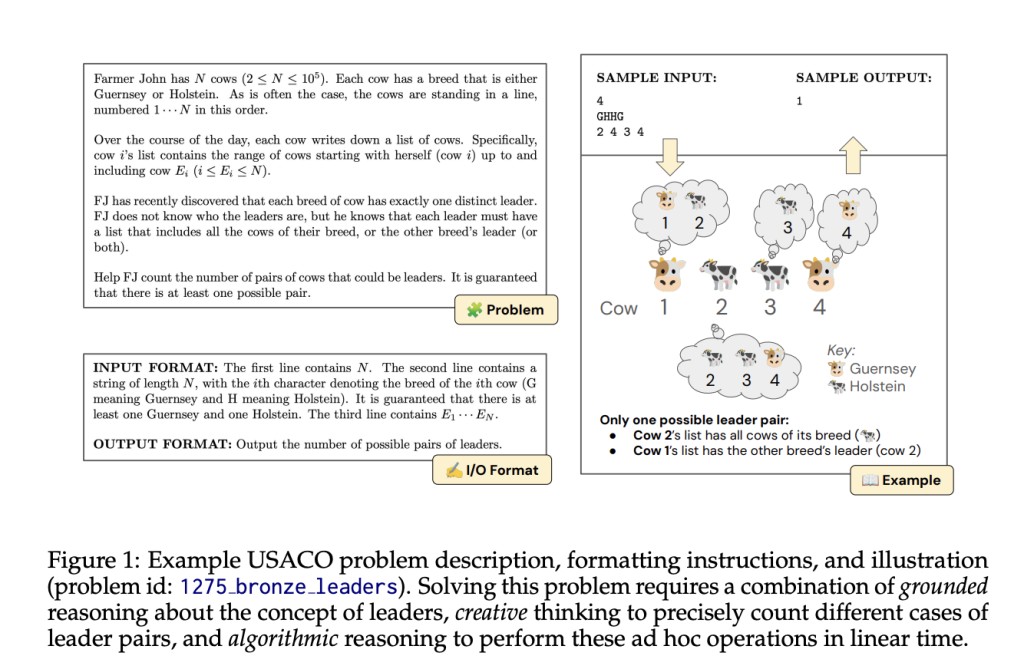

In response to these constraints, USACO, a constructed coding benchmark with 307 difficult tasks drawn from previous USA Computing Olympiad contests, has been presented by a team of researchers. Each challenge includes an example input-output tuple and an explanation, along with a task within a hypothetical setting. It takes a wide range of algorithmic, mathematical, and common sense expertise, as well as innovative and well-founded thinking, to solve these challenges.Â

In contrast to earlier benchmarks that concentrated on program synthesis, models must be able to reason across a variety of settings and create original algorithms specific to each challenge scenario in order to succeed in USACO. Using zero-shot chain-of-thought prompting on USACO, even the most sophisticated language model, GPT-4, only manages an 8.7% zero-shot pass rate@1.

For each challenge, the benchmark also provides official analyses, reference code solutions, high-quality unit tests, and instructional materials similar to competition programming textbooks, with the goal of facilitating the investigation of more inference techniques for competitive programming. A variety of baseline techniques based on self-reflection, retrieval, and their combinations have been created using these resources. Retrieval strategies combined with self-reflection are found to greatly improve performance, more than tripling the zero-shot solve rate of GPT-4. All approaches, meanwhile, are still unable to solve the benchmark above the easiest level, the bronze difficulty tier.

A human-in-the-loop study has also been used to obtain deeper insights into the remaining issues. It has been found that giving GPT-4 tailored suggestions makes it solve 13 out of 15 previously unsolvable problems, outperforming all previous models and methods examined.

The team has summarized their primary contributions as follows.

The USACO benchmark has been introduced. It is the first benchmark to be created from Olympiad programming and includes carefully selected test cases, problem analysis, and additional resources to enable thorough assessment.

LLM inference techniques have been built and analyzed specifically for Olympiad programming challenges. Experimental results have demonstrated that while a combination of these approaches shows promise in improving performance, there is still a large gap in answering the benchmark completely. Examples of these techniques include retrieval and self-reflection.

In contrast to automated tests that only consider execution success, the new study evaluates the potentials and constraints of LLMs for Olympiad programming. This research reveals that only a subset of models can integrate feedback efficiently, providing insight into hidden differences between models when it comes to addressing interactive problem-solving situations.

Check out the Paper and Github. All credit for this research goes to the researchers of this project. Also, don’t forget to follow us on Twitter. Join our Telegram Channel, Discord Channel, and LinkedIn Group.

If you like our work, you will love our newsletter..

Don’t Forget to join our 40k+ ML SubReddit

For Content Partnership, Please Fill Out This Form Here..

The post Can Language Models Solve Olympiad Programming? Researchers at Princeton University Introduce USACO Benchmark for Rigorously Evaluating Code Language Models appeared first on MarkTechPost.

Source: Read MoreÂ