Do you know those autostereograms with the hidden 3D pictures? Images like the Magic Eye pictures from the 1990s that look like noisy repeating patterns until you defocus your eyes just right?

ChatGPT can generate them!

At least according to ChatGPT.

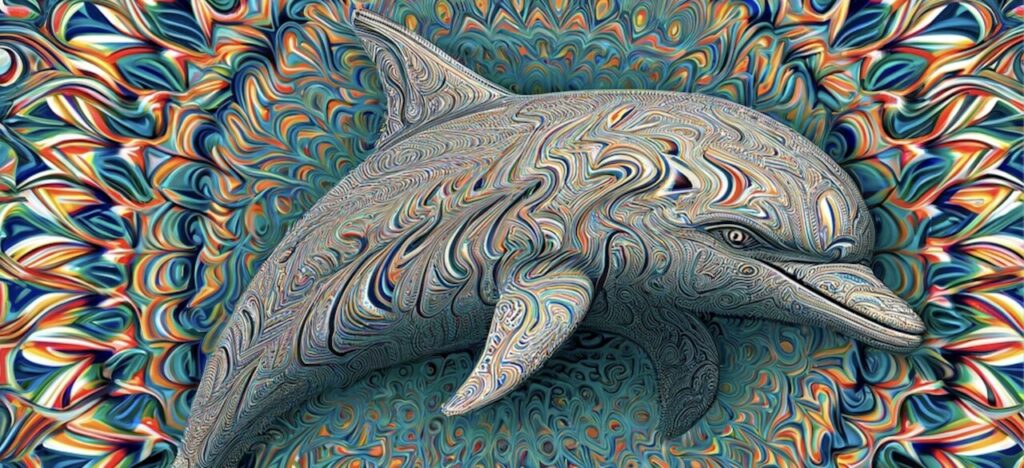

I’ve seen people try making Magic Eye-style images with other image generating models, but I hadn’t thought to try it with ChatGPT until reader Pippin sent me the suggestion and I generated the hilarious dolphin image above. What got me in particular was the caption.

How do you end up with a result that sounds so confident and yet is so completely wrong?

ChatGPT has encountered information about Magic Eye stereograms in its internet training. When I ask “Please generate a magic eye 3D stereo image of a unicorn”, the descriptions ChatGPT passes to DALL-E3 (the image generator that actually makes the images) are extremely particular:

> A magic eye 3D stereogram that reveals a hidden image of a unicorn when viewed correctly. The stereogram should be intricate and filled with a variety of colors to ensure the 3D effect is prominent, allowing the unicorn to appear as if it is standing in a magical forest once the viewer’s eyes adjust. The background should consist of complex patterns and mystical elements to enhance the illusion of depth and the magical atmosphere, encouraging viewers to find the hidden unicorn. The unicorn itself should be designed to be a delightful surprise for those adept at viewing magic eye images, with its horn and mane clearly discernable amidst the pattern.

But this is the image DALL-E3 provides to ChatGPT in response:

ChatGPT doesn’t apply any image recognition to the result it gets back – whatever DALL-E3 did is a big blank to it. So ChatGPT then continues with its description as if the image is clearly exactly what it asked for. It gestures to the green screen, where presumably there is a fabulous 3D illusion image appearing, and then continues with no information about its actual shortcomings.

Google’s Gemini (previously Bard) also has a dedicated model (Imagen 2) to do its image generation. Its hidden images are even …less subtle.

Gemini has an image describing model that it can call on, so if I ask it to describe the images it just generated, it should notice that they’re nothing like Magic Eye images. Right?

At least it didn’t give away the solution to the puzzle.

But if I start a new chat and give it one of the “hidden” doves from above, there’s no hint of it being a Magic Eye illusion, just an ordinary image of a dove.

My theory is that Gemini only calls on its image recognition model when the user uploads an image. Otherwise, it’s just a text generator.

People selling “AI” like to present it as an all-purpose computer program, but models like Gemini and ChatGPT are more like a phone full of apps. The text generating app can launch the image generating app in particular circumstances, but they’re not meaningfully the same program.
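To make the “phone full of apps” idea concrete, here is a toy Python sketch. Everything in it is hypothetical: the `Orchestrator` class, its method names, the keyword-based routing, and the canned replies are illustrative assumptions, not how OpenAI or Google actually wire their systems together. It only mirrors the two behaviors described above: the text side never looks at the pixels it asked for, and the image-describing app runs only when the user uploads an image.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Orchestrator:
    """Hypothetical text model that can launch separate 'apps'."""
    log: List[str] = field(default_factory=list)  # records which apps were launched

    def generate_image(self, prompt: str) -> bytes:
        # Stand-in for the image generator app (DALL-E3, Imagen 2, ...).
        self.log.append("image_generator")
        return b"placeholder pixels"  # not a real image

    def describe_image(self, image: bytes) -> str:
        # Stand-in for the separate image-recognition app.
        self.log.append("image_describer")
        return "an ordinary picture of a dove"

    def handle(self, message: str, upload: Optional[bytes] = None) -> str:
        if upload is not None:
            # Assumption: recognition is only called for user uploads.
            return self.describe_image(upload)
        if "generate" in message.lower():
            self.generate_image(message)  # the returned pixels are ignored
            # The caption is written from the request alone, never from the result.
            return "Here is your Magic Eye image! Relax your eyes to see the hidden picture."
        return "text-only reply"


bot = Orchestrator()
print(bot.handle("Please generate a magic eye 3D stereo image of a unicorn"))
print(bot.log)
```

In this sketch the generation request logs only `image_generator`, so the confident caption goes out without any app ever checking the picture. A second call with `upload=` set is the only path that reaches `image_describer`.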

I should note that even when ChatGPT is only doing text generation and could in theory check its own work, it still just assumes it does a great job. Here it is generating ASCII text art and then reading back its own messages:

If I start a new chat and send ChatGPT its own ASCII word, it says the word reads “ERROR”. It’s similarly terrible at recognizing and rating its own ASCII art.

Generating ASCII art and 3D images isn’t a big potential application for models like ChatGPT, but it’s a good reminder that these models don’t understand what we’re asking for or the basic steps for doing it right. When ChatGPT adds image recognition or description or some other functionality, it’s not that the original text model got smarter. It can just call on another app.

One last 3D illusion challenge! I asked for a really tricky one, so be prepared not to get the hidden picture at first! When you see it, you’ll know because it’ll look like a sea turtle.
