The Large Language Model Transparency Tool (LLM-TT) is an open-source interactive toolkit from Meta Research for analyzing Transformer-based language models. It identifies the most important parts of the input-to-output information flow and lets users inspect the contributions of individual attention heads and neurons. Because it is built on TransformerLens hooks, it offers a plug-and-play experience with a broad range of models from Hugging Face. Users can watch how information moves through the network during a forward pass and explore the nodes and attention edges to see how specific components influence the model's output.

The need for LLM-TT arises from the growing complexity and impact of LLMs in applications such as decision support and content generation. The tool addresses a gap in understanding and monitoring how these models work by making their internal computation visible. That visibility makes it easier to verify model behavior, uncover biases, and check alignment with ethical standards, which in turn improves trust and reliability in AI deployments.

Key functionalities of LLM-TT are as follows:

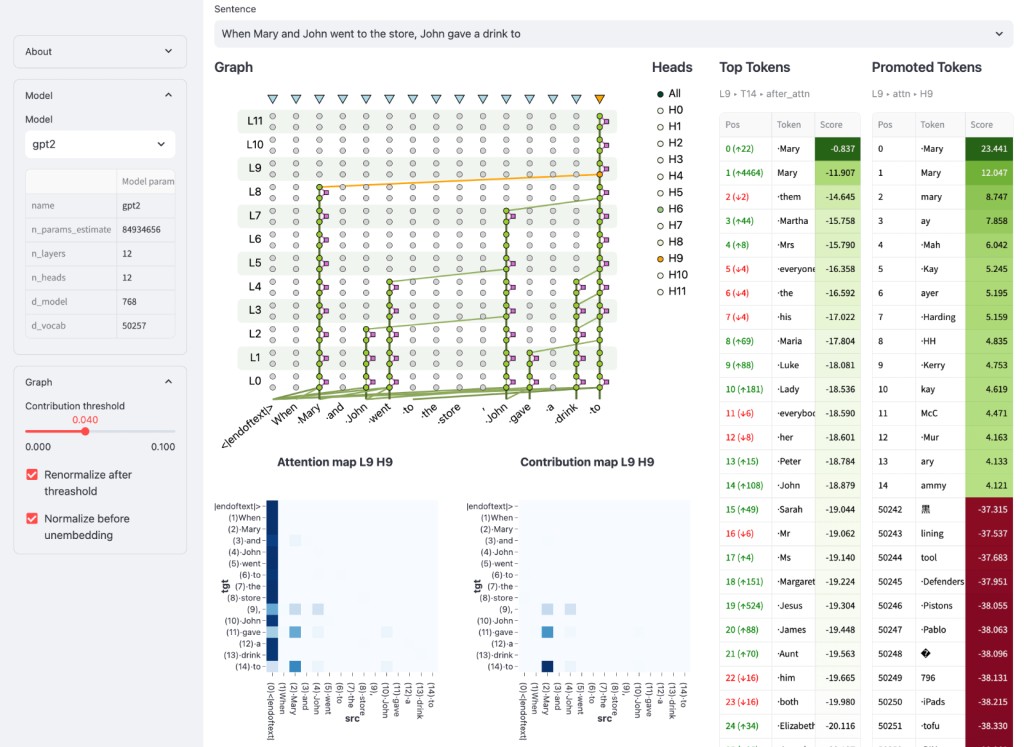

Users select a model and prompt to initiate inference.

The central feature is the contribution graph: users select a token to build the graph from, then browse it.

Users can adjust the contribution threshold and select any token’s representation after any block.
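
To illustrate what adjusting a contribution threshold does to such a graph, here is a minimal sketch in plain Python. It is not the tool's implementation: the node names, edge weights, and the `prune_graph` helper are all hypothetical, and the sketch only assumes that each edge carries a numeric contribution score and that edges below the threshold are hidden.

```python
from collections import deque

# Hypothetical toy graph: (source, target, contribution) triples.
# Node names such as "L0_tok1" (layer 0, token position 1) are made up.
EDGES = [
    ("L0_tok0", "L1_tok2", 0.05),
    ("L0_tok1", "L1_tok2", 0.60),
    ("L0_tok2", "L1_tok2", 0.35),
    ("L1_tok0", "L2_tok2", 0.02),
    ("L1_tok2", "L2_tok2", 0.98),
]


def prune_graph(edges, root, threshold):
    """Keep edges with contribution >= threshold that lie on a path into `root`."""
    # Index the surviving edges by their target node.
    incoming = {}
    for src, dst, weight in edges:
        if weight >= threshold:
            incoming.setdefault(dst, []).append((src, weight))

    # Walk backwards from the selected token's node, collecting edges.
    kept = []
    seen = {root}
    queue = deque([root])
    while queue:
        node = queue.popleft()
        for src, weight in incoming.get(node, []):
            kept.append((src, node, weight))
            if src not in seen:
                seen.add(src)
                queue.append(src)
    return kept


pruned = prune_graph(EDGES, root="L2_tok2", threshold=0.1)
for src, dst, weight in pruned:
    print(f"{src} -> {dst}: {weight:.2f}")
```

Raising the threshold in this sketch shrinks the graph to the few strongest paths, while lowering it brings weaker edges back into view.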

For each token representation, the projection to the output vocabulary is visible, showing which tokens were promoted or suppressed by the previous block.
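
The idea of projecting a representation onto the vocabulary and comparing it across a block can be sketched with a toy example. Everything below is an assumption made for illustration: the four-word vocabulary, the 3-dimensional hidden vectors, the `UNEMBED` matrix, and the helper names are invented, and the sketch skips details a real model would need (such as the final layer normalization before unembedding).

```python
# Toy vocabulary and unembedding matrix (hidden size 3); all values are made up.
VOCAB = ["Paris", "London", "the", "cat"]
UNEMBED = [
    [2.0, 0.0, 0.0],   # Paris
    [0.0, 2.0, 0.0],   # London
    [0.0, 0.0, 1.0],   # the
    [-1.0, 0.0, 1.0],  # cat
]


def project_to_vocab(hidden):
    """Dot the hidden vector with each vocabulary row: one logit per token."""
    return [sum(w * h for w, h in zip(row, hidden)) for row in UNEMBED]


def logit_shift(before, after):
    """Per-token change in logit caused by the block between the two states."""
    logits_before = project_to_vocab(before)
    logits_after = project_to_vocab(after)
    return {tok: a - b for tok, a, b in zip(VOCAB, logits_after, logits_before)}


# Hypothetical representations of one token before and after a block.
before_block = [0.5, 0.5, 1.0]
after_block = [1.5, 0.25, 0.5]

shift = logit_shift(before_block, after_block)
# Tokens whose logit rose were promoted; tokens whose logit fell were suppressed.
promoted = sorted((t for t in shift if shift[t] > 0), key=shift.get, reverse=True)
suppressed = sorted((t for t in shift if shift[t] < 0), key=shift.get)

print("promoted:", promoted)
print("suppressed:", suppressed)
```

In this toy setup a positive shift means the block pushed the representation toward that token, and a negative shift means it pushed away from it.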

Edges in the graph are clickable: selecting one reveals the attention head that contributes to it, and selecting a head shows which tokens it promotes or suppresses.

Feedforward Network (FFN) blocks and neurons within these blocks are also interactive, allowing for detailed inspection.

In conclusion, LLM-TT supports better understanding, fairness, and accountability for Transformer-based language models. It offers an in-depth look at how these models process information and allows detailed examination of individual components' contributions. By giving clearer insight into the internal mechanisms of LLMs, the tool supports more ethical and informed use of AI technologies.

The post Meta AI Introducing the Language Model Transparency Tool: An Open-Source Interactive Toolkit for Analyzing Transformer-based Language Models appeared first on MarkTechPost.