The absence of a standardized benchmark for Graph Neural Networks (GNNs) has led to overlooked pitfalls in system design and evaluation. Existing graph benchmarks like Graph500 and LDBC are not suitable for GNNs because of differences in computation, storage, and reliance on deep learning frameworks. GNN systems aim to optimize runtime and memory without altering model semantics. However, many suffer from design flaws and inconsistent evaluations, which hinders progress. Manually correcting these flaws is not enough; a systematic benchmarking platform is needed to ensure fairness and consistency across assessments. Such a platform would streamline efforts and promote innovation in GNN systems.

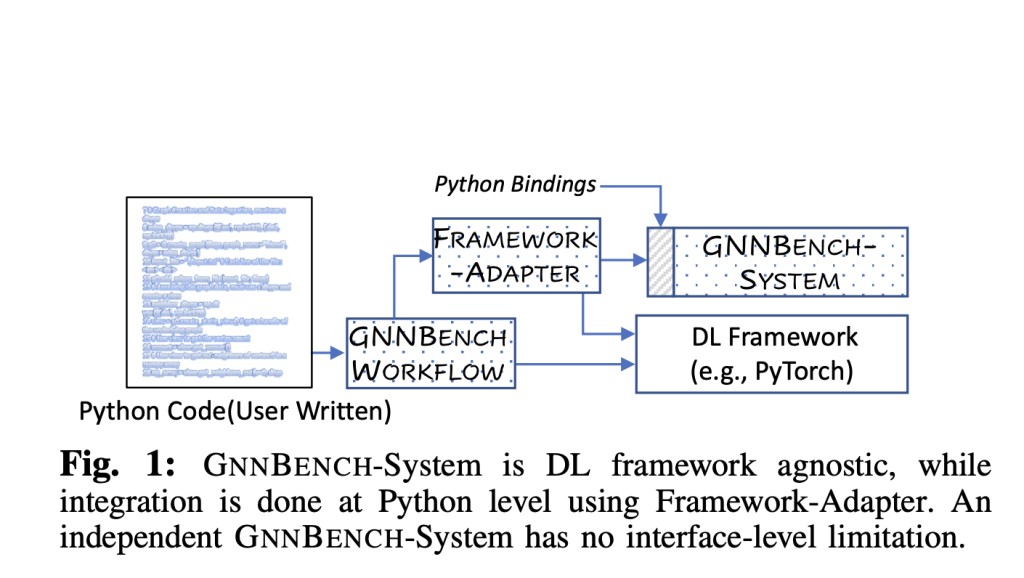

Researchers at William & Mary have developed GNNBENCH, a versatile platform tailored for system innovation in GNNs. It streamlines the exchange of tensor data, supports custom classes in system APIs, and integrates with frameworks like PyTorch and TensorFlow. By integrating multiple GNN systems, GNNBENCH exposed critical measurement issues, and it aims to relieve researchers of integration complexity and evaluation inconsistencies. The platform’s stable APIs, productivity enhancements, and framework-agnostic design enable rapid prototyping and fair comparisons across GNN systems.

To make benchmarking fair and productive, GNNBENCH addresses key challenges that existing GNN systems face, aiming to provide stable APIs for integration and accurate evaluation. One challenge is instability caused by varying graph formats and kernel variants across systems. Another is that PyTorch and TensorFlow plugins are limited in accepting custom graph objects, while GNN operations require additional metadata in system APIs, which leads to inconsistencies. PyTorch-Geometric (PyG) faces similar plugin limitations, and DGL’s framework overhead and complex integration process further complicate system integration. Although DNN benchmark platforms have appeared recently, GNN benchmarking remains largely unexplored. These challenges underscore the need for a standardized and extensible benchmarking framework like GNNBENCH.

GNNBENCH introduces a producer-only DLPack protocol that simplifies tensor exchange between DL frameworks and third-party libraries. Unlike traditional approaches, this protocol lets GNNBENCH use DL framework tensors without ownership transfer, which improves system flexibility and reusability. Generated integration code connects a system to different DL frameworks, promoting extensibility. The accompanying domain-specific language (DSL) automates this code generation, giving researchers a streamlined way to prototype and implement kernel fusion or other system innovations. Together, these mechanisms let GNNBENCH adapt to diverse research needs.
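The idea of using framework tensors without ownership transfer can be illustrated with a minimal sketch. This is not GNNBENCH's code and does not use DLPack itself: it uses Python's built-in buffer protocol as a stand-in, and the names `scale_kernel`, `features`, and `output` are hypothetical. It assumes that "producer-only" means the framework side allocates and owns every buffer, including the output, while the system kernel only borrows views.

```python
from array import array


def scale_kernel(src: memoryview, dst: memoryview, factor: float) -> None:
    """Hypothetical system kernel: reads src, writes dst in place.

    It allocates nothing and returns nothing, so it never becomes
    the owner (producer) of any buffer.
    """
    for i in range(len(src)):
        dst[i] = src[i] * factor


# "Framework" side (the producer): allocates and owns input and output.
features = array("f", [1.0, 2.0, 3.0])
output = array("f", [0.0] * len(features))

# The kernel borrows zero-copy views; the views are released when the
# with-block exits, and ownership stays with the arrays above.
with memoryview(features) as src, memoryview(output) as dst:
    scale_kernel(src, dst, 2.0)

result = output.tolist()
```

In this sketch the result lands in the buffer the producer already owns, so no tensor has to be handed back across the library boundary.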

GNNBENCH integrates with popular deep learning frameworks such as PyTorch, TensorFlow, and MXNet, which makes it easier to experiment across platforms. The primary evaluation uses PyTorch, while compatibility with TensorFlow, demonstrated for GCN in particular, indicates that the platform can be adapted to any mainstream DL framework. Researchers can therefore compare GNN systems in different environments under consistent conditions. This flexibility supports reproducibility and encourages comprehensive evaluation, both of which are essential for advancing GNN research in varied computational contexts.

In conclusion, GNNBENCH is a benchmarking platform that supports productive research and fair evaluation in GNNs. By integrating various GNN systems, it sheds light on accuracy issues in the original implementations of systems like TC-GNN and GNNAdvisor. Through its producer-only DLPack protocol and generation of critical integration code, GNNBENCH enables efficient prototyping with minimal framework overhead and memory consumption. Its systematic approach aims to rectify measurement pitfalls, promote innovation, and ensure unbiased evaluations, thereby advancing the field of GNN research.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post GNNBench: A Plug-and-Play Deep Learning Benchmarking Platform Focused on System Innovation appeared first on MarkTechPost.