Large language models (LLMs) have made significant progress and have absorbed a basic linguistic understanding of the world. However, despite their proficiency with historical knowledge and their insightful responses, LLMs remain severely deficient in real-time comprehension of what is happening around them.

Imagine a pair of trendy smart glasses or a home robot with an embedded AI agent as its brain. For such an agent to be effective, it must be able to interact with humans using simple, everyday language and utilize senses like vision to understand its surroundings. This is the ambitious goal that Meta AI is pursuing, presenting a significant research challenge.

Embodied Question Answering (EQA), a method for testing an AI agent’s comprehension of its environment, has practical implications that extend beyond the realm of research. Even the most basic form of EQA can simplify everyday life. For instance, consider a scenario where you need to leave the house but can’t find your office badge. EQA could help you locate it. However, as Moravec’s paradox suggests, even the most advanced models of today still can’t match human performance in EQA.

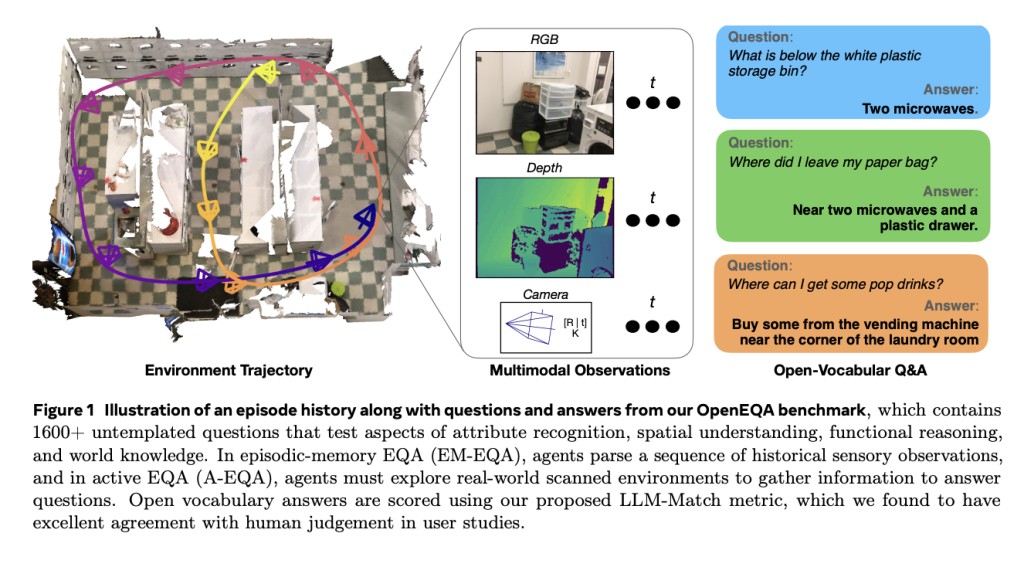

As a pioneering effort, Meta has introduced the Open-Vocabulary Embodied Question Answering (OpenEQA) framework. This benchmark is designed to assess an AI agent’s understanding of its environment through open-vocabulary questions, a novel approach in the field. The concept is akin to testing a person’s comprehension of a topic by asking them questions and analyzing their responses.

The first part of OpenEQA is episodic memory EQA, which requires an embodied AI agent to recall prior experiences to answer questions. The second part is active EQA, which requires the agent to actively seek out information from its surroundings to answer questions.

The benchmark includes over 180 videos and scans of physical environments, along with over 1,600 non-templated question-and-answer pairs that were written by human annotators and reflect real-world scenarios. OpenEQA also ships with LLM-Match, an automated evaluation metric for rating open-vocabulary answers. Blind user studies showed that LLM-Match agrees with human judgments about as closely as two humans agree with each other.
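To make the idea of an automated answer-rating metric concrete, here is a minimal sketch of how per-question judge scores could be rolled up into a single percentage. The article does not describe the scoring scale or the aggregation, so everything here is an assumption for illustration: the 1-to-5 scale, the rescaling to 0-100, and the names `aggregate_llm_match` and `toy_judge` are hypothetical and are not OpenEQA's actual API. The toy judge stands in for an LLM call.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical record layout: (question, human_answer, model_answer).
Example = Tuple[str, str, str]
Judge = Callable[[str, str, str], int]


def aggregate_llm_match(examples: Iterable[Example], judge: Judge) -> float:
    """Average judge scores, rescaled from an assumed 1-5 scale to 0-100."""
    rescaled = []
    for question, reference, prediction in examples:
        score = judge(question, reference, prediction)
        if not 1 <= score <= 5:
            raise ValueError(f"judge score out of range: {score}")
        # Map 1 -> 0.0 and 5 -> 1.0 so the mean reads as a fraction.
        rescaled.append((score - 1) / 4)
    if not rescaled:
        raise ValueError("no examples to score")
    return 100.0 * sum(rescaled) / len(rescaled)


def toy_judge(question: str, reference: str, prediction: str) -> int:
    """Stand-in for an LLM judge: 5 on a case-insensitive exact match, else 1."""
    same = reference.strip().lower() == prediction.strip().lower()
    return 5 if same else 1


if __name__ == "__main__":
    demo = [
        ("Where is my office badge?", "On the kitchen counter", "on the kitchen counter"),
        ("What color is the sofa?", "Gray", "Blue"),
    ]
    print(aggregate_llm_match(demo, toy_judge))
```

In a real setup the judge would prompt an LLM with the question, the human answer, and the model's answer, which is what lets the metric credit differently worded but equivalent responses. The exact-match stand-in above cannot do that and is only there to keep the sketch runnable.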

Using OpenEQA to benchmark various state-of-the-art vision+language foundation models (VLMs), the team found a significant gap between human performance (85.9%) and even the most effective model (GPT-4V at 48.5%). Even the most advanced VLMs struggle with spatial understanding questions, suggesting that models with access to visual information aren’t fully utilizing it. Instead, they rely on prior textual knowledge to answer visual questions. This indicates that embodied AI agents driven by these models still have a long way to go in perception and reasoning before they are ready for widespread use.

OpenEQA combines difficult open-vocabulary questions with the ability to respond in natural language. The result is an easy-to-understand benchmark that measures how well an agent understands its environment while posing a considerable challenge to current foundation models. The researchers hope that others can use OpenEQA, the first open-vocabulary benchmark for EQA, to track progress in scene understanding and multimodal learning.

The post Meta AI Releases OpenEQA: The Open-Vocabulary Embodied Question Answering Benchmark appeared first on MarkTechPost.
