In the fast-moving field of artificial intelligence, one approach has drawn growing attention: federated learning (FL). FL trains machine learning models collaboratively across many devices or locations. The raw training data never leaves the devices that hold it; only model updates are sent to a central server. This lets models learn from large, distributed datasets while reducing the privacy risks of collecting personal data in one place.
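To make the idea concrete, here is a minimal sketch of one common FL algorithm, federated averaging (FedAvg), in plain Python. It is an illustrative toy, not code from pfl-research. The one-parameter linear model, the helper names `local_update`, `fedavg_round` and `make_client`, and the synthetic client data are all assumptions made for this example. The sketch shows that each client's data stays inside `local_update` and only the model delta reaches the server.

```python
# Illustrative FedAvg sketch (hypothetical helpers, not the pfl-research API).
import random


def local_update(w, data, lr=0.1, epochs=5):
    """Train a 1-D linear model y = w * x on one client's private data
    and return only the change in the weight, never the data itself."""
    w_local = w
    for _ in range(epochs):
        # Gradient of the mean of 0.5 * (w * x - y) ** 2 over the client's data.
        grad = sum((w_local * x - y) * x for x, y in data) / len(data)
        w_local -= lr * grad
    return w_local - w


def fedavg_round(w, clients):
    """One server round: every client trains locally, and the server
    averages the returned deltas, weighted by each client's dataset size."""
    total = sum(len(data) for data in clients)
    delta = sum(local_update(w, data) * len(data) for data in clients) / total
    return w + delta


def make_client(rng, n, true_w=3.0):
    """Hypothetical synthetic client: n noiseless points on the line y = true_w * x."""
    return [(x, true_w * x) for x in (rng.uniform(0.5, 2.0) for _ in range(n))]


rng = random.Random(0)
clients = [make_client(rng, n) for n in (5, 10, 20)]  # uneven client sizes

w = 0.0
for _ in range(50):
    w = fedavg_round(w, clients)
print(f"learned weight: {w:.3f}")  # approaches the true weight 3.0
```

Weighting each delta by the client's dataset size is the usual FedAvg choice. It keeps small clients from pulling the shared model as hard as large ones.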

But as exciting as FL is, conducting research in this space has been a real challenge for data scientists and machine learning engineers. Simulating realistic, large-scale FL scenarios has been a persistent struggle, with existing tools lacking the speed and scalability to keep up with the demands of modern research.

In a new paper, researchers at Apple introduce pfl-research, a fast, modular, and easy-to-use Python framework for simulating federated learning and private federated learning (PFL). It is designed to let researchers iterate quickly and explore new ideas without being held back by computational limits.

One of pfl-research’s standout features is its versatility. It supports TensorFlow, PyTorch, and non-neural network models. It also integrates state-of-the-art privacy algorithms, so private federated learning can be simulated with the same tooling as standard FL.

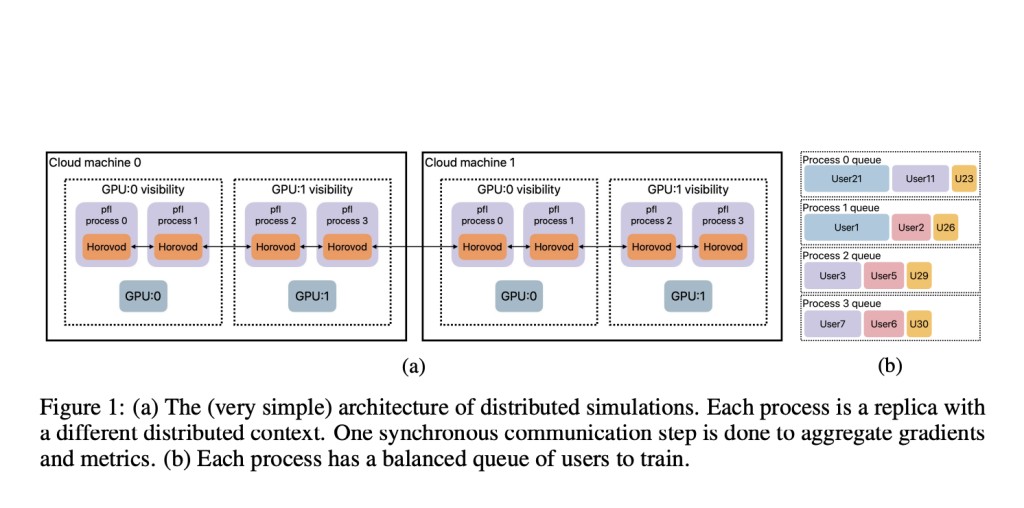

What really sets pfl-research apart is its building-block design. Like a Lego set for researchers, it provides modular components such as Dataset, Model, Algorithm, Aggregator, Backend, and Postprocessor that can be combined into simulations tailored to specific needs. You might want to test a new federated averaging variant on a large image dataset, or compare privacy-preserving techniques for distributed text models. Either experiment can be assembled from the same components.
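The building-block idea can be sketched in a few lines of Python. The classes below are hypothetical stand-ins named after roles the article lists (Algorithm, Postprocessor, Aggregator, Backend). They are not pfl-research’s actual classes or signatures.

- The toy algorithm estimates a mean vector.
- `ClipAndNoise` clips each user’s update to a maximum L2 norm and then adds Gaussian noise. This is a common ingredient of differentially private FL.

Its parameters are illustrative assumptions, not calibrated privacy settings.

```python
# Hypothetical building blocks for an FL simulation (not the pfl-research API).
import math
import random
from typing import List, Protocol, Sequence

Vector = List[float]


class Algorithm(Protocol):
    def local_update(self, model: Vector, data: Sequence[Vector]) -> Vector: ...


class Postprocessor(Protocol):
    def process(self, update: Vector) -> Vector: ...


class Aggregator(Protocol):
    def aggregate(self, updates: List[Vector]) -> Vector: ...


class MeanEstimation:
    """Toy algorithm: each user's update points from the model to its data mean."""

    def local_update(self, model: Vector, data: Sequence[Vector]) -> Vector:
        user_mean = [sum(col) / len(data) for col in zip(*data)]
        return [t - m for t, m in zip(user_mean, model)]


class ClipAndNoise:
    """Clip an update to L2 norm <= clip_norm, then add Gaussian noise per coordinate."""

    def __init__(self, clip_norm: float, noise_std: float, rng: random.Random):
        self.clip_norm = clip_norm
        self.noise_std = noise_std  # illustrative value, not a calibrated DP setting
        self.rng = rng

    def process(self, update: Vector) -> Vector:
        norm = math.sqrt(sum(u * u for u in update))
        scale = min(1.0, self.clip_norm / norm) if norm > 0 else 1.0
        return [u * scale + self.rng.gauss(0.0, self.noise_std) for u in update]


class MeanAggregator:
    """Average the (post-processed) user updates coordinate by coordinate."""

    def aggregate(self, updates: List[Vector]) -> Vector:
        return [sum(col) / len(updates) for col in zip(*updates)]


class SimulatedBackend:
    """Wires the blocks together and runs one round over simulated users."""

    def __init__(self, algorithm: Algorithm, postprocessors: List[Postprocessor],
                 aggregator: Aggregator):
        self.algorithm = algorithm
        self.postprocessors = postprocessors
        self.aggregator = aggregator

    def run_round(self, model: Vector, users: List[Sequence[Vector]]) -> Vector:
        updates = []
        for data in users:
            update = self.algorithm.local_update(model, data)
            for postprocessor in self.postprocessors:  # applied in list order
                update = postprocessor.process(update)
            updates.append(update)
        delta = self.aggregator.aggregate(updates)
        return [m + d for m, d in zip(model, delta)]


users = [[[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0]]]  # two users, 2-D data points
plain = SimulatedBackend(MeanEstimation(), [], MeanAggregator())
private = SimulatedBackend(
    MeanEstimation(),
    [ClipAndNoise(clip_norm=1.0, noise_std=0.1, rng=random.Random(0))],
    MeanAggregator(),
)
print(plain.run_round([0.0, 0.0], users))
print(private.run_round([0.0, 0.0], users))
```

Each role sits behind a small interface. Dropping privacy (an empty postprocessor list) or trying a different clipping bound therefore changes only what is passed to `SimulatedBackend`, not the training loop.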

Now for the headline result. In benchmarks against other FL simulators, pfl-research ran simulations up to 72 times faster. Faster simulations let researchers run experiments on large datasets and iterate on ideas more quickly.

The pfl-research team plans to keep improving the tool. Planned work includes continuously adding support for new algorithms and datasets, and adding cross-silo simulations (federated learning across multiple organizations or institutions). The team is also exploring new simulation architectures to improve scalability and versatility as the field evolves.

The framework opens up a wide range of research directions. Examples include privacy-preserving natural language processing and federated learning approaches for personalized healthcare applications.

Federated learning is one of the most active areas of AI research. pfl-research gives researchers a fast, flexible, and user-friendly tool for breaking new ground in it.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Researchers at Apple Introduce ‘pfl-research’: A Fast, Modular, and Easy-to-Use Python Framework for Simulating Federated Learning appeared first on MarkTechPost.
