Imagine an AI system that can recognize any object, comprehend any text, and generate realistic images without being explicitly trained on those concepts. This is the enticing promise of “zero-shot” capabilities in AI. But how close are we to realizing this vision?

Major tech companies have released impressive multimodal AI models like CLIP for vision-language tasks and DALL-E for text-to-image generation. These models seem to perform remarkably well on a variety of tasks “out-of-the-box” without being explicitly trained on them – the hallmark of zero-shot learning. However, a new study by researchers from the Tübingen AI Center, University of Cambridge, University of Oxford, and Google DeepMind casts doubt on the true generalization abilities of these systems.

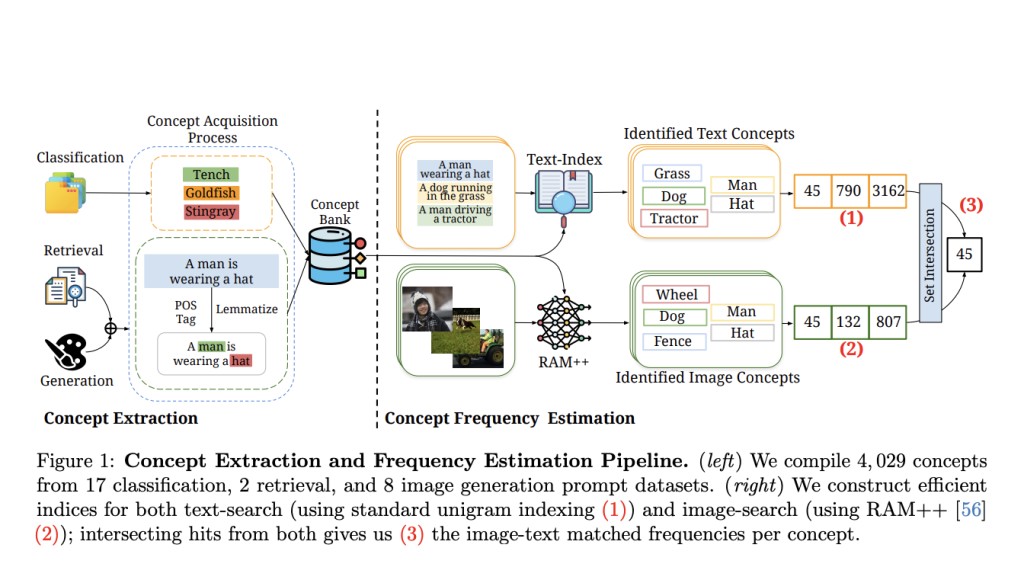

The researchers conducted a large-scale analysis of the data used to pretrain popular multimodal models like CLIP and Stable Diffusion. They looked at over 4,000 concepts spanning images, text, and various AI tasks. Surprisingly, they found that a model’s performance on a particular concept is strongly tied to how frequently that concept appeared in the pretraining data. The more training examples for a concept, the better the model’s accuracy.
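To make "how frequently a concept appears" concrete, here is a minimal sketch of counting concept mentions in captions. The captions, the concept list, and the function name `concept_frequencies` are all made up for illustration, and plain whole-word matching stands in for whatever matching pipeline the study actually uses.

```python
import re

def concept_frequencies(captions, concepts):
    """Count how many captions mention each concept.

    Matching is case-insensitive and whole-word only, so "dogs" does NOT
    count as "dog". A real pipeline would likely normalize word forms first.
    """
    patterns = {
        c: re.compile(r"\b" + re.escape(c.lower()) + r"\b") for c in concepts
    }
    counts = {c: 0 for c in concepts}
    for caption in captions:
        text = caption.lower()
        for concept, pattern in patterns.items():
            if pattern.search(text):
                counts[concept] += 1
    return counts

# Hypothetical toy captions, not taken from any real pretraining dataset.
captions = [
    "A dog chasing a ball in the park",
    "Two dogs and a cat on a sofa",
    "A cat sleeping on a windowsill",
    "An aardvark digging at night",
]
freqs = concept_frequencies(captions, ["dog", "cat", "aardvark", "okapi"])
print(freqs)
```

Even in this toy corpus, common concepts show up repeatedly while a rare one such as "okapi" never appears at all.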

But here’s the kicker: the relationship is log-linear. To get just a linear increase in performance on a concept, the model needs to see exponentially more examples of that concept during pretraining. This reveals a fundamental bottleneck: current AI systems are extremely data-hungry and sample-inefficient when it comes to learning new concepts from scratch.
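One way to read this scaling is as a straight line in the logarithm of the concept frequency, roughly accuracy ≈ a + b · log10(frequency). The sketch below fits that line with ordinary least squares on invented numbers; the data points, `fit_log_linear`, and `examples_needed` are assumptions for illustration, not values or code from the study.

```python
import math

def fit_log_linear(frequencies, accuracies):
    """Least-squares fit of accuracy = a + b * log10(frequency)."""
    xs = [math.log10(f) for f in frequencies]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(accuracies) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, accuracies))
    b = sxy / sxx            # accuracy gained per 10x more examples
    a = mean_y - b * mean_x  # extrapolated accuracy at a single example
    return a, b

def examples_needed(target_accuracy, a, b):
    """Invert the fitted line: examples required to reach a target accuracy."""
    return 10 ** ((target_accuracy - a) / b)

# Hypothetical (frequency, accuracy) pairs chosen to lie on a clean line.
frequencies = [100, 1_000, 10_000, 100_000]
accuracies = [0.20, 0.35, 0.50, 0.65]

a, b = fit_log_linear(frequencies, accuracies)
print(f"gain per 10x more data: {b:.2f}")
print(f"examples for 0.80 accuracy: {examples_needed(0.80, a, b):,.0f}")
```

Under such a fit, every fixed step up in accuracy costs another tenfold increase in examples, which is why the trend is described as sample-inefficient.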

The researchers dug deeper and unearthed some other concerning patterns. Most concepts in the pretraining datasets are relatively rare, following a long-tailed distribution. There are also many cases where the images and text captions are misaligned, containing different concepts. This “noise” likely further impairs a model’s generalization abilities.
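Both patterns can be expressed as simple statistics. The following sketch assumes each sample has already been tagged with the concepts found in its image and in its caption, and treats a pair as misaligned when the two sets share nothing; that definition, the threshold, and all of the data are illustrative assumptions rather than the study's exact procedure.

```python
def misalignment_rate(pairs):
    """Fraction of (image_concepts, caption_concepts) pairs with no shared concept."""
    if not pairs:
        return 0.0
    misaligned = sum(1 for img, cap in pairs if not (set(img) & set(cap)))
    return misaligned / len(pairs)

def rare_fraction(counts, threshold):
    """Fraction of concepts that occur fewer than `threshold` times."""
    if not counts:
        return 0.0
    rare = sum(1 for n in counts.values() if n < threshold)
    return rare / len(counts)

# Hypothetical tagged samples: (concepts seen in image, concepts in caption).
pairs = [
    ({"dog", "ball"}, {"dog"}),
    ({"cat"}, {"sofa"}),          # misaligned: nothing in common
    ({"tree"}, {"tree", "sky"}),
    ({"car"}, {"sunset"}),        # misaligned: nothing in common
]

# Hypothetical concept counts with a long tail of rare concepts.
counts = {"dog": 5000, "cat": 4000, "aardvark": 12, "okapi": 3, "quokka": 7}

print(misalignment_rate(pairs))
print(rare_fraction(counts, threshold=100))
```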

To put their findings to the test, the team created a new “Let It Wag!” dataset containing many long-tailed, infrequent concepts across different domains like animals, objects, and activities. When evaluated on this dataset, all models – big and small, open and private – showed significant performance drops compared to more commonly used benchmarks like ImageNet. Qualitatively, the models often failed to properly comprehend or render images for these rare concepts.

The study’s key revelation is that while current AI systems excel at specialized tasks, their impressive zero-shot capabilities are somewhat of an illusion. What seems like broad generalization is largely enabled by the models’ immense training on similar data from the internet. As soon as we move away from this data distribution, their performance craters.

So where do we go from here? One path is improving data curation pipelines to cover long-tailed concepts more comprehensively. Alternatively, model architectures may need fundamental changes to achieve better compositional generalization and sample efficiency when learning new concepts. Lastly, retrieval mechanisms that can enhance or “look up” a pre-trained model’s knowledge could potentially compensate for generalization gaps.

In summary, while zero-shot AI is an exciting goal, we aren’t there yet. Uncovering blind spots like data hunger is crucial for sustaining progress towards true machine intelligence. The road ahead is long, but clearly mapped by this insightful study.


The post The “Zero-Shot” Mirage: How Data Scarcity Limits Multimodal AI appeared first on MarkTechPost.
