King’s College London researchers have highlighted the importance of developing a theoretical understanding of why transformer architectures, such as those underlying ChatGPT, have succeeded in natural language processing tasks. Despite their widespread use, the theoretical foundations of transformers have yet to be fully explored. In their paper, the researchers propose a theory of how transformers work, offering a clear perspective on the difference between traditional feedforward neural networks and transformers.

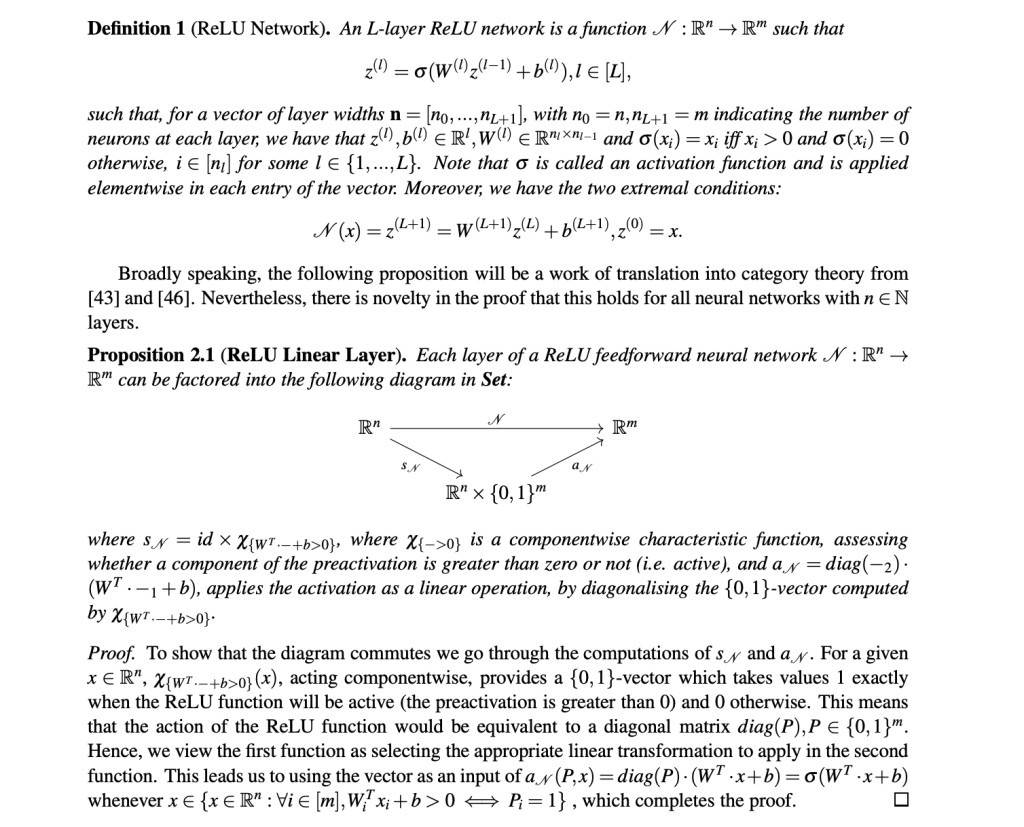

Transformer architectures have revolutionized natural language processing, but the theoretical reasons for their effectiveness are still poorly understood. The researchers propose a novel approach rooted in topos theory, a branch of mathematics that studies how logical structures emerge in various mathematical settings. By leveraging topos theory, the authors aim to explain the architectural differences between traditional neural networks and transformers, particularly in terms of expressivity and logical reasoning.

The researchers analyze neural network architectures, particularly transformers, from a categorical perspective using topos theory. According to the paper, traditional neural networks can be embedded in pretopos categories, whereas transformers necessarily reside in a topos completion. This distinction suggests that transformers exhibit higher-order reasoning capabilities, while traditional neural networks are limited to first-order logic. By characterizing the expressivity of different architectures, the authors identify what sets transformers apart, in particular their ability to implement input-dependent weights through mechanisms like self-attention. The paper also introduces notions of architecture search and backpropagation within the categorical framework, shedding light on why transformers have come to dominate large language models.
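The contrast between fixed weights and input-dependent weights can be made concrete with a small sketch. This is not code from the paper and it does not model the topos-theoretic argument; it only illustrates the self-attention property mentioned above. It assumes a single attention head with identity query, key, and value projections (a simplification with no learned parameters), and the function names `fixed_mixing`, `attention_weights`, and `self_attention` are hypothetical.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def fixed_mixing(tokens, W):
    """Mix token vectors with a matrix W that is chosen independently of the input.

    This stands in for a layer with fixed weights: the same W is applied
    to every input sequence.
    """
    n, d = len(tokens), len(tokens[0])
    return [
        [sum(W[i][j] * tokens[j][k] for j in range(n)) for k in range(d)]
        for i in range(n)
    ]


def attention_weights(tokens):
    """Compute the mixing matrix from the input itself.

    Assumption: queries and keys are the raw token vectors (identity
    projections), scored with scaled dot products.
    """
    scale = math.sqrt(len(tokens[0]))
    return [softmax([dot(q, k) / scale for k in tokens]) for q in tokens]


def self_attention(tokens):
    """Apply the input-dependent mixing matrix to the same tokens."""
    return fixed_mixing(tokens, attention_weights(tokens))


if __name__ == "__main__":
    x1 = [[1.0, 0.0], [0.0, 1.0]]
    x2 = [[2.0, 0.0], [0.0, 1.0]]
    # The mixing weights differ between the two inputs, even though
    # no parameter of the "layer" has changed.
    print(attention_weights(x1))
    print(attention_weights(x2))
```

In `fixed_mixing`, the matrix `W` is the same for every input, whereas `self_attention` recomputes its mixing matrix for each sequence. That is the sense in which the weights are input-dependent.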

In conclusion, the paper offers a theoretical analysis of transformer architectures through the lens of topos theory and uses it to account for their success in natural language processing tasks. The proposed categorical framework not only enhances our understanding of transformers but also offers a novel perspective for future architectural advances in deep learning. Overall, the paper helps bridge the gap between theory and practice in artificial intelligence, and may pave the way for more robust and explainable neural network architectures.

All credit for this research goes to the researchers of this project.

The post This AI Paper from King’s College London Introduces a Theoretical Analysis of Neural Network Architectures Through Topos Theory appeared first on MarkTechPost.
