In natural language processing (NLP), reference resolution, which means determining the antecedent or referent of a word or phrase in a text, is a critical challenge. It is essential for understanding and correctly handling different types of context. Such contexts range from previous turns in a conversation to non-conversational elements, such as entities on a user's screen or processes running in the background.

Researchers aim to tackle a core question: how can the reference resolution capabilities of large language models (LLMs) be improved, especially for non-conversational entities? Prior work includes MARRS, which focuses on multimodal reference resolution, particularly for on-screen content. Vision transformers and vision+text models have also contributed to progress, although their heavy computational requirements limit their application.

Apple researchers propose Reference Resolution As Language Modeling (ReALM). ReALM reconstructs the screen from parsed entities and their locations, producing a purely textual representation that mirrors the screen's visual layout. The parts of the screen that are entities are then tagged, so the LM has context about where entities appear and what text surrounds them (e.g., "call the business number"). To the best of the authors' knowledge, this is the first work to use an LLM to encode context from a screen.
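The screen-to-text step can be pictured with a minimal Python sketch. It assumes each parsed element comes with a normalized center point, sorts elements top-to-bottom, groups elements whose vertical centers fall within a small margin onto one line, orders each line left-to-right, and joins cells with tabs and lines with newlines. The `Entity` class, the `margin` value, and the `[[...]]` tag marking a referable entity are hypothetical choices for illustration, not ReALM's actual implementation.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Entity:
    text: str
    x_center: float  # normalized horizontal center in [0, 1]
    y_center: float  # normalized vertical center in [0, 1]
    is_candidate: bool = False  # hypothetical flag: element is a referable entity


def encode_screen(entities: List[Entity], margin: float = 0.02) -> str:
    """Render parsed screen elements as text that keeps their rough layout.

    `margin` is a hypothetical threshold: elements whose vertical centers lie
    within it of a line's first element are placed on that same line.
    """
    # Visit elements top-to-bottom (ties broken left-to-right).
    ordered = sorted(entities, key=lambda e: (e.y_center, e.x_center))
    lines: List[List[Entity]] = []
    for ent in ordered:
        if lines and abs(ent.y_center - lines[-1][0].y_center) <= margin:
            lines[-1].append(ent)  # close enough vertically: same visual line
        else:
            lines.append([ent])  # start a new visual line
    rendered = []
    for line in lines:
        line.sort(key=lambda e: e.x_center)  # left-to-right within a line
        # Hypothetical tagging syntax so the LM can spot candidate entities.
        cells = [f"[[{e.text}]]" if e.is_candidate else e.text for e in line]
        rendered.append("\t".join(cells))
    return "\n".join(rendered)


screen = [
    Entity("Joe's Pizza", 0.5, 0.10),
    Entity("Open until 10 PM", 0.5, 0.15),
    Entity("Call", 0.2, 0.30),
    Entity("(555) 010-0199", 0.6, 0.31, is_candidate=True),
]
print(encode_screen(screen))
```

Here the "Call" label and the phone number sit on the same visual line, so a query such as "call the business number" can be matched to the tagged entity together with its neighboring text.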

To fine-tune the LLM, they used the FLAN-T5 model. They provided the parsed input to the model and fine-tuned it using only the default fine-tuning parameters. Each data point, consisting of a user query and its corresponding entities, is converted into a sentence-wise format that can be fed to the LLM for training. The entities are shuffled before being passed to the model so that it does not overfit to particular entity positions.
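The data-preparation step can likewise be sketched. The hypothetical `build_example` function below turns one data point into a source/target text pair: it shuffles the entity list with a seeded random generator and remaps the gold label to the entity's shuffled position. The `Query:`/`Entities:` template and the numbered-list format are assumptions for illustration, not the paper's exact format.

```python
import random
from typing import List, Tuple


def build_example(query: str, entities: List[str], target_ids: List[int],
                  rng: random.Random) -> Tuple[str, str]:
    """Convert (query, entities, gold indices) into a text-to-text pair.

    Hypothetical format: entities are listed as "<id>. <text>" after shuffling,
    and the target is the comma-separated shuffled id(s) of the gold entities.
    """
    order = list(range(len(entities)))
    rng.shuffle(order)  # order[new_id] == original index
    old_to_new = {old: new_id for new_id, old in enumerate(order)}
    lines = [f"{new_id}. {entities[old]}" for new_id, old in enumerate(order)]
    source = f"Query: {query}\nEntities:\n" + "\n".join(lines)
    # Gold labels must follow the shuffle, otherwise they point at the wrong entity.
    target = ", ".join(str(n) for n in sorted(old_to_new[i] for i in target_ids))
    return source, target


rng = random.Random(0)
source, target = build_example(
    "call the business number",
    ["Joe's Pizza", "(555) 010-0199", "Open until 10 PM"],
    target_ids=[1],
    rng=rng,
)
print(source)
print("target:", target)
```

Because the gold answer is expressed as the entity's shuffled index, the same entity appears at different positions across examples, which removes any positional shortcut the model could otherwise learn.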

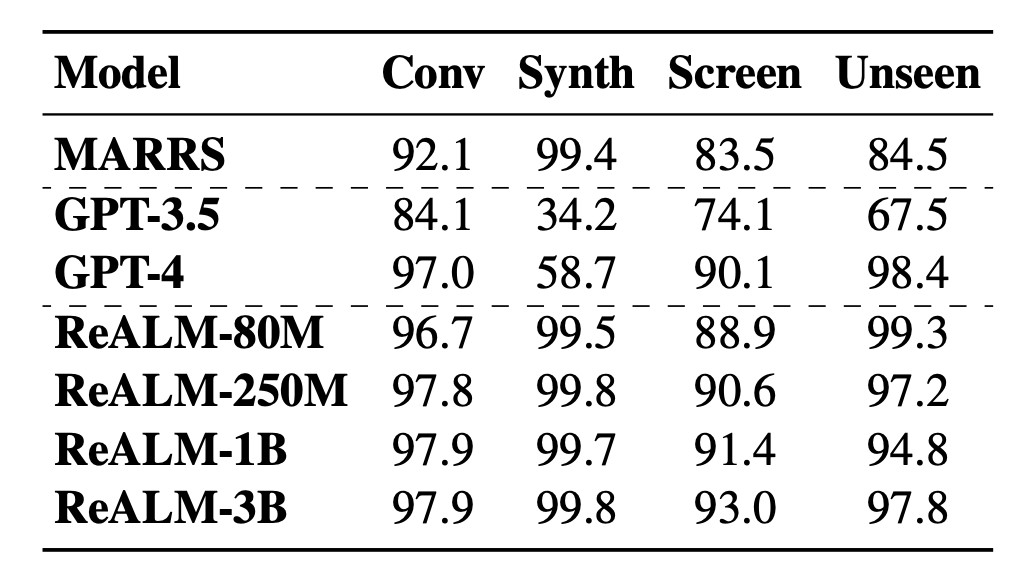

ReALM outperforms the MARRS model across all dataset types. It also outperforms GPT-3.5, even though GPT-3.5 has several orders of magnitude more parameters. ReALM performs in the same ballpark as the more recent GPT-4 despite being a much lighter and faster model. The researchers highlight the gains on on-screen datasets: with its textual encoding approach, ReALM performs almost as well as GPT-4, even though GPT-4 was given screenshots.

In conclusion, this research introduces ReALM, which uses LLMs to perform reference resolution by encoding entity candidates as natural text. The authors show how on-screen entities can be passed to an LLM through a novel textual representation that summarizes the user's screen while retaining the relative spatial positions of these entities. ReALM outperforms previous approaches and performs roughly as well as GPT-4, the state-of-the-art LLM at the time, despite having far fewer parameters and working purely in the textual domain, even for on-screen references. It also outperforms GPT-4 on domain-specific user utterances, which the authors argue makes ReALM an ideal choice for a practical reference resolution system.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Apple Researchers Present ReALM: An AI that Can ‘See’ and Understand Screen Context appeared first on MarkTechPost.