Recent advances in natural language processing (NLP), led by large-scale pre-trained models such as GPT-3 and BERT, have transformed text generation and sentiment analysis tasks. These models’ ability to adapt to various applications with less data has contributed to their popularity in sensitive industries such as healthcare and finance. However, implementing these models creates significant privacy and security concerns, especially when dealing with sensitive data.

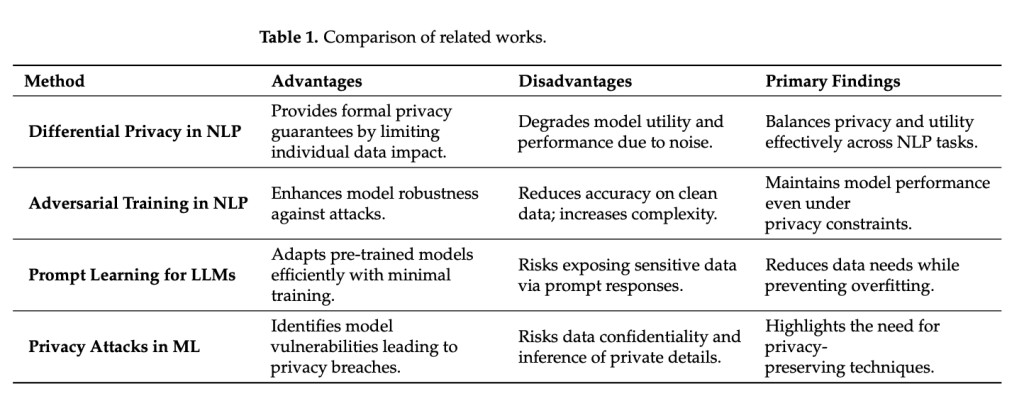

Differential privacy (DP) and adversarial training are two leading responses to these problems. DP protects privacy by adding calibrated noise that masks individual data contributions, while adversarial training improves the robustness of the model against malicious inputs. Recent efforts to integrate these techniques hold promise for addressing privacy and security simultaneously, especially in sensitive natural language processing applications.

Combining DP and adversarial training in NLP requires trading off noise, utility, and robustness. Moreover, prompt learning, a widely used adaptation method, risks exposing sensitive data through the way prompts interact with model representations. Addressing these challenges is essential to deploy secure and reliable NLP systems in sensitive domains.

To address the challenges of privacy and robustness in natural language processing, a recent paper by a Chinese research team proposes a novel framework that combines DP and adversarial training. This dual approach aims to create a secure and robust training environment, protecting sensitive data while improving the resilience of natural language processing models against adversarial attacks. By integrating these two paradigms, the proposed method simultaneously addresses concerns about data privacy and model vulnerability in high-risk deployment environments.

In more detail, the framework applies DP during the gradient update process to mask the influence of individual data points. Gaussian noise is added to the gradients so that the trained model's output distribution stays nearly the same when a single data point is changed or deleted. On the robustness side, adversarial training generates perturbed versions of the input data to simulate worst-case scenarios, thereby exposing the model to adversarial attacks during training. These adversarial gradients are also privatized with Gaussian noise, preserving privacy guarantees even when handling perturbed data. Final model updates combine the privatized natural and adversarial gradients in a weighted manner to achieve a trade-off between privacy, robustness, and utility.
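The gradient privatization and weighted combination described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `privatize_gradients` and `combine_gradients`, the parameters `clip_norm` and `noise_multiplier`, and the convex-combination form `(1 - lam) * g_nat + lam * g_adv` are all hypothetical choices made for this example. Clipping each per-example gradient before adding noise follows the standard DP-SGD recipe, which is assumed here.

```python
import numpy as np

def privatize_gradients(per_example_grads, clip_norm, noise_multiplier, rng):
    """Clip per-example gradients, sum them, add Gaussian noise, and average.

    per_example_grads: array of shape (batch_size, num_params).
    clip_norm: maximum L2 norm allowed for any single example's gradient.
    noise_multiplier: noise standard deviation as a multiple of clip_norm.
    """
    grads = np.asarray(per_example_grads, dtype=float)
    norms = np.linalg.norm(grads, axis=1, keepdims=True)
    # Scale down only the gradients whose norm exceeds clip_norm.
    scale = np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    clipped = grads * scale
    # Noise is calibrated to the clipping bound (the per-example sensitivity).
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=grads.shape[1])
    return (clipped.sum(axis=0) + noise) / grads.shape[0]

def combine_gradients(g_nat, g_adv, lam):
    """Weighted mix of privatized natural and adversarial gradients (assumed form)."""
    return (1.0 - lam) * np.asarray(g_nat) + lam * np.asarray(g_adv)

# Hypothetical usage with random stand-in gradients.
rng = np.random.default_rng(0)
nat = rng.normal(size=(8, 4))   # gradients on clean inputs
adv = rng.normal(size=(8, 4))   # gradients on perturbed inputs
g_nat = privatize_gradients(nat, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
g_adv = privatize_gradients(adv, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
update = combine_gradients(g_nat, g_adv, lam=0.5)
```

In this sketch, a larger `lam` gives more weight to the adversarial gradient, and a larger `noise_multiplier` corresponds to a stricter privacy setting at the cost of noisier updates.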

The research team validated their privacy-preserving prompt learning framework through experiments on three NLP tasks: sentiment analysis, question answering, and topic classification, using the IMDB, SQuAD, and AG News datasets. BERT was fine-tuned with task-specific prompts, and differential privacy was applied under several privacy budgets (ε = 1.0, 0.5, 0.1), where a smaller ε means a stricter privacy guarantee. Noise was added to the gradients, and gradient clipping kept each example's sensitivity bounded.

Adversarial training was incorporated to enhance robustness against attacks, using adversarial examples generated with the Fast Gradient Sign Method (FGSM). The trade-off between accuracy and robustness was controlled by adjusting the hyperparameter λ. Model performance was evaluated using accuracy, F1 score, and Exact Match (EM), alongside robustness tests on adversarial examples.
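As a rough illustration of how FGSM produces an adversarial example, the sketch below perturbs a continuous input vector in the direction of the sign of the loss gradient. The setup is an assumption made for this example: a toy linear classifier with logistic loss stands in for BERT, and the helper names (`logistic_loss`, `loss_grad_wrt_input`, `fgsm`) and the parameter `eps_adv` are hypothetical. Because text is discrete, FGSM in NLP is usually applied to continuous embeddings; the article does not say exactly how the paper does it. Note that `eps_adv` is the perturbation size and is unrelated to the privacy budget ε.

```python
import numpy as np

def logistic_loss(w, x, y):
    """Logistic loss of a linear classifier; label y is -1 or +1."""
    return np.log1p(np.exp(-y * np.dot(w, x)))

def loss_grad_wrt_input(w, x, y):
    """Analytic gradient of the logistic loss with respect to the input x."""
    margin = y * np.dot(w, x)
    return -y * w / (1.0 + np.exp(margin))

def fgsm(w, x, y, eps_adv):
    """One FGSM step: shift every coordinate by eps_adv toward higher loss."""
    return x + eps_adv * np.sign(loss_grad_wrt_input(w, x, y))

# Hypothetical usage: w plays the role of model weights, x an input embedding.
w = np.array([1.0, -2.0])
x = np.array([0.5, 0.5])
y = 1
x_adv = fgsm(w, x, y, eps_adv=0.1)
```

During adversarial training, the loss on `x_adv` would be computed alongside the loss on `x`, which is what yields the separate natural and adversarial gradients.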

Results showed that stricter privacy constraints reduced accuracy, while adversarial training improved robustness. For instance, in sentiment analysis, accuracy dropped as ε decreased, but adversarial robustness improved significantly with higher λ values. These findings suggest the framework can balance privacy, utility, and robustness by tuning ε and λ.

To conclude, the authors propose a novel framework combining differential privacy and adversarial training in prompt learning for NLP systems, improving both privacy and robustness. Their experiments show that while stricter privacy settings reduce performance, adversarial training enhances resilience to attacks. This is crucial for privacy-sensitive fields like finance and healthcare. However, the framework still faces challenges in balancing privacy and utility and in scaling to larger datasets. According to the authors, future work will focus on optimizing these trade-offs and extending the framework to broader applications.

Check out the Paper. All credit for this research goes to the researchers of this project.

The post Balancing Privacy and Robustness in NLP: A New Approach for Secure Prompt Learning in LLMs appeared first on MarkTechPost.
