Email remains a vital communication channel for business customers, especially in HR, where responding to inquiries can use up staff resources and cause delays. The extensive knowledge required can make it overwhelming to respond to email inquiries manually. In the future, high automation will play a crucial role in this domain.

Generative AI helps businesses improve accuracy and efficiency in email management and automation. Responses can be generated automatically, with only complex cases requiring manual review by a human, which streamlines operations and improves overall productivity.

Combining retrieval augmented generation (RAG) with a knowledge base improves the accuracy of automated responses. RAG pairs a retrieval step with a generation model, so the system can look up relevant content in a data store and generate contextually relevant responses grounded in it. Access to reliable information from a comprehensive knowledge base helps the system provide better responses. This hybrid approach helps make automated replies not only contextually relevant but also factually grounded, which improves the reliability and trustworthiness of the communication.

In this post, we illustrate automating the responses to email inquiries by using Amazon Bedrock Knowledge Bases and Amazon Simple Email Service (Amazon SES), both fully managed services. By linking user queries to relevant company domain information, Amazon Bedrock Knowledge Bases offers personalized responses. Amazon Bedrock Knowledge Bases can achieve greater response accuracy and relevance by integrating foundation models (FMs) with internal company data sources for RAG. Amazon SES is an email service that provides a straightforward way to send and receive email using your own email addresses and domains.

Retrieval Augmented Generation

RAG is an approach that integrates information retrieval into the natural language generation process. It involves two key workflows: data ingestion and text generation. The data ingestion workflow creates semantic embeddings for documents and questions, storing document embeddings in a vector database. By comparing vector similarity to the question embedding, the text generation workflow selects the most relevant document chunks to enhance the prompt. The obtained information empowers the model to generate more knowledgeable and precise responses.
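The retrieval step described above can be illustrated with a minimal, self-contained sketch. It is not how Amazon Bedrock Knowledge Bases is implemented internally: the three-dimensional vectors, the chunk texts, and the function names are invented for illustration, and a real system would obtain embeddings from an embeddings model and store them in a vector database.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_chunks(question_embedding, chunk_index, k=2):
    """Rank (text, embedding) pairs by similarity to the question embedding."""
    scored = [
        (cosine_similarity(question_embedding, embedding), text)
        for text, embedding in chunk_index
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


def augment_prompt(question, chunks):
    """Prepend the retrieved chunks to the question as context."""
    context = "\n".join(f"- {chunk}" for chunk in chunks)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy index: made-up chunk texts with made-up 3-dimensional embeddings.
CHUNK_INDEX = [
    ("Placeholder chunk about paid time off.", [0.9, 0.1, 0.0]),
    ("Placeholder chunk about reporting violations.", [0.1, 0.9, 0.0]),
    ("Placeholder chunk about company holidays.", [0.6, 0.0, 0.4]),
]

if __name__ == "__main__":
    question_embedding = [1.0, 0.0, 0.0]  # stands in for an embedded question
    chunks = top_k_chunks(question_embedding, CHUNK_INDEX, k=2)
    print(augment_prompt("How many days of PTO do I get?", chunks))
```

The augmented prompt, rather than the bare question, is what gets sent to the model in the text generation workflow.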

Amazon Bedrock Knowledge Bases

For RAG workflows, Amazon Bedrock offers managed knowledge bases, which are vector databases that store unstructured data semantically. This managed service simplifies deployment and scaling, allowing developers to focus on building RAG applications without worrying about infrastructure management. For more information on RAG and Amazon Bedrock Knowledge Bases, see Connect Foundation Models to Your Company Data Sources with Agents for Amazon Bedrock.

Solution overview

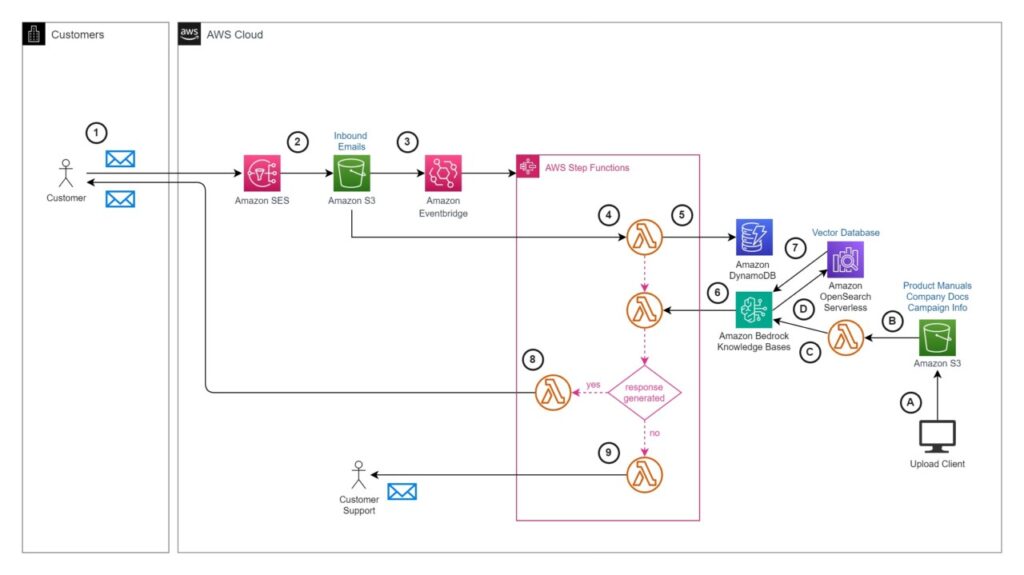

The solution presented in this post responds automatically to email inquiries using the architecture shown in the preceding diagram. Its two primary functions are to populate the RAG knowledge base with domain-specific documents and to automate email responses.

The workflow to populate the knowledge base consists of the following steps, as noted in the architecture diagram:

- The user uploads company- and domain-specific information, like policy manuals, to an Amazon Simple Storage Service (Amazon S3) bucket.

- This bucket is designated as the knowledge base data source.

- Amazon S3 invokes an AWS Lambda function to synchronize the data source with the knowledge base.

- The Lambda function starts data ingestion by calling the StartIngestionJob API function. The knowledge base splits the documents in the data source into manageable chunks for efficient retrieval. The knowledge base is set up to use Amazon OpenSearch Serverless as its vector store and an Amazon Titan embeddings text model on Amazon Bedrock to create the embeddings. During this step, the chunks are converted to embeddings and stored in a vector index in the OpenSearch Serverless vector store for Knowledge Bases of Amazon Bedrock, while also keeping track of the original document.
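The synchronization step can be sketched as a small Lambda handler. This is a minimal sketch, not the code from the sample repository: the environment variable names `KNOWLEDGE_BASE_ID` and `DATA_SOURCE_ID` and the optional `client` parameter are assumptions made for illustration. The call itself uses the `start_ingestion_job` operation of the boto3 `bedrock-agent` client.

```python
import os


def start_sync(bedrock_agent_client, knowledge_base_id, data_source_id):
    """Start an ingestion job for the data source and return the job ID."""
    response = bedrock_agent_client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )
    return response["ingestionJob"]["ingestionJobId"]


def handler(event, context, client=None):
    """Lambda entry point, invoked when objects change in the source bucket.

    The `client` parameter is a hypothetical hook so the function can be
    exercised with a stub instead of a real AWS client.
    """
    if client is None:
        import boto3  # imported lazily so the sketch loads without AWS access

        client = boto3.client("bedrock-agent")
    job_id = start_sync(
        client,
        os.environ["KNOWLEDGE_BASE_ID"],  # assumed variable name
        os.environ["DATA_SOURCE_ID"],  # assumed variable name
    )
    return {"ingestionJobId": job_id}
```

Passing the client in as a parameter keeps the handler easy to test locally; in a deployed function the default boto3 client would be used.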

The workflow for automating email responses using generative AI with the knowledge base includes the following steps:

- A customer sends a natural language email inquiry to an address configured within your domain, such as info@example.com.

- Amazon SES receives the email and sends the entire email content to an S3 bucket with the unique email identifier as the object key.

- An Amazon EventBridge rule is invoked upon receipt of the email in the S3 bucket and starts an AWS Step Functions state machine to coordinate generating and sending the email response.

- A Lambda function retrieves the email content from Amazon S3.

- The email identifier and a received timestamp are recorded in an Amazon DynamoDB table. You can use the DynamoDB table to monitor and analyze the email responses that are generated.

- By using the body of the email inquiry, the Lambda function creates a prompt query and invokes the Amazon Bedrock RetrieveAndGenerate API function to generate a response.

- Amazon Bedrock Knowledge Bases uses the Amazon Titan embeddings model to convert the prompt query to a vector embedding, and then finds chunks that are semantically similar. The prompt is then augmented with the chunks that are retrieved from the vector store. We then send the prompt alongside the additional context to a large language model (LLM) for response generation. In this solution, we use Anthropic’s Claude 3.5 Sonnet on Amazon Bedrock as our LLM to generate user responses using additional context. Anthropic’s Claude 3.5 Sonnet is fast, affordable, and versatile, capable of handling various tasks like casual dialogue, text analysis, summarization, and document question answering.

- A Lambda function constructs an email reply from the generated response and transmits the email reply using Amazon SES to the customer. Email tracking and disposition information is updated in the DynamoDB table.

- When there’s no automated email response, a Lambda function forwards the original email to an internal support team for them to review and respond to the customer. It updates the email disposition information in the DynamoDB table.
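The core of the response workflow can be sketched as follows. This is a minimal sketch under stated assumptions, not the sample repository's implementation: the function names are hypothetical, and the rule for falling back to human review (no answer text, or no citation with retrieved references) is an assumption chosen for illustration. The request shape follows the `retrieve_and_generate` operation of the boto3 `bedrock-agent-runtime` client.

```python
from email import message_from_bytes, policy


def extract_body(raw_email: bytes) -> str:
    """Return the plain-text body of a raw email, or an empty string."""
    message = message_from_bytes(raw_email, policy=policy.default)
    part = message.get_body(preferencelist=("plain",))
    return part.get_content().strip() if part is not None else ""


def build_rag_request(question, knowledge_base_id, model_arn):
    """Build the keyword arguments for a RetrieveAndGenerate call."""
    return {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {
                "knowledgeBaseId": knowledge_base_id,
                "modelArn": model_arn,
            },
        },
    }


def generate_reply(runtime_client, raw_email, knowledge_base_id, model_arn):
    """Return a generated reply, or None to signal manual review.

    Assumption: a reply is only trusted when at least one citation carries
    retrieved references, meaning the answer drew on the knowledge base.
    """
    question = extract_body(raw_email)
    if not question:
        return None
    response = runtime_client.retrieve_and_generate(
        **build_rag_request(question, knowledge_base_id, model_arn)
    )
    answer = response.get("output", {}).get("text", "").strip()
    grounded = any(
        citation.get("retrievedReferences")
        for citation in response.get("citations", [])
    )
    return answer if answer and grounded else None
```

A caller would pass `boto3.client("bedrock-agent-runtime")` as `runtime_client`, send the returned text through Amazon SES when it is not `None`, and forward the original email to the review address otherwise.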

Prerequisites

To set up this solution, you should have the following prerequisites:

- A local machine or virtual machine (VM) on which you can install and run AWS Command Line Interface (AWS CLI) tools.

- A local environment prepared to deploy the AWS Cloud Development Kit (AWS CDK) stack as documented in Getting started with the AWS CDK. You can bootstrap the environment with cdk bootstrap aws://{ACCOUNT_NUMBER}/{REGION}.

- A valid domain name with configuration rights over it. If you have a domain name registered in Amazon Route 53 and managed in this same account, the AWS CDK will configure Amazon SES for you. If your domain is managed elsewhere, then some manual steps will be necessary (as detailed later in this post).

- Amazon Bedrock models enabled for embedding and querying. For more information, see Access Amazon Bedrock foundation models. In the default configuration, the following models are required to be enabled:

  - Amazon Titan Text Embeddings V2

  - Anthropic’s Claude 3.5 Sonnet

Deploy the solution

To deploy the solution, complete the following steps:

- Configure an SES domain identity to allow Amazon SES to send and receive messages.

If you want to receive email at an address in a domain managed in Route 53, the AWS CDK will automatically configure this for you if you provide the ROUTE53_HOSTED_ZONE context variable. If you manage your domain in a different account or in a registrar besides Route 53, refer to Creating and verifying identities in Amazon SES to manually verify your domain identity and Publishing an MX record for Amazon SES email receiving to manually add the MX record required for Amazon SES to receive email for your domain.

- Clone the repository and navigate to the root directory:

- Install dependencies:

npm install

- Deploy the AWS CDK app, replacing {EMAIL_SOURCE} with the email address that will receive inquiries, {EMAIL_REVIEW_DEST} with the email address for internal review of messages that fail auto response, and {HOSTED_ZONE_NAME} with your domain name:

At this point, you have configured Amazon SES with a verified domain identity in sandbox mode. You can now send email to an address in that domain. If you need to send email to users with a different domain name, you need to request production access.

Upload domain documents to Amazon S3

Now that you have a running knowledge base, you need to populate your vector store with the raw data you want to query. To do so, upload your raw text data to the S3 bucket serving as the knowledge base data source:

- Locate the bucket name from the AWS CDK output (KnowledgeBaseSourceBucketArn/Name).

- Upload your text files, either through the Amazon S3 console or the AWS CLI.

If you’re testing this solution out, we recommend using the documents in the following open source HR manual. Upload the files in either the markdown or PDF folders. Your knowledge base will then automatically sync those files to the vector database.
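If you prefer to script the upload rather than use the console or the AWS CLI, the following minimal sketch walks a local folder and uploads the document files it finds. The function name, the set of file suffixes, and the optional key prefix are assumptions for illustration; the upload itself uses the `upload_file` method of the boto3 S3 client.

```python
from pathlib import Path

# Assumed set of document types to upload; adjust to match your files.
SUPPORTED_SUFFIXES = {".md", ".pdf", ".txt"}


def upload_documents(s3_client, folder, bucket_name, prefix=""):
    """Upload supported files under `folder` and return the object keys used."""
    uploaded = []
    for path in sorted(Path(folder).rglob("*")):
        if path.is_file() and path.suffix.lower() in SUPPORTED_SUFFIXES:
            # Use the path relative to the folder as the object key.
            key = prefix + path.relative_to(folder).as_posix()
            s3_client.upload_file(str(path), bucket_name, key)
            uploaded.append(key)
    return uploaded
```

For example, `upload_documents(boto3.client("s3"), "markdown", bucket_name)` would upload the markdown folder, where `bucket_name` is the value taken from the AWS CDK output.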

Test the solution

To test the solution, send an email to the address defined in the “sourceEmail” context parameter. If you opted to upload the sample HR documents, you could use the following example questions:

- “How many days of PTO do I get?”

- “To whom do I report an HR violation?”

Clean up

Deploying the solution will incur charges. To clean up resources, run the following command from the project’s folder:

Conclusion

In this post, we discussed the essential role of email as a communication channel for business users and the challenges of manual email responses. Our description outlined the use of a RAG architecture and Amazon Bedrock Knowledge Bases to automate email responses, resulting in improved HR prioritization and enhanced user experiences. Lastly, we created a solution architecture and sample code in a GitHub repository for automatically generating and sending contextual email responses using a knowledge base.

For more information, see the Amazon Bedrock User Guide and Amazon SES Developer Guide.

About the Authors

Darrin Weber is a Senior Solutions Architect at AWS, helping customers realize their cloud journey with secure, scalable, and innovative AWS solutions. He brings over 25 years of experience in architecture, application design and development, digital transformation, and the Internet of Things. When Darrin isn’t transforming and optimizing businesses with innovative cloud solutions, he’s hiking or playing pickleball.


Marc Luescher is a Senior Solutions Architect at AWS, helping enterprise customers be successful, focusing strongly on threat detection, incident response, and data protection. His background is in networking, security, and observability. Previously, he worked in technical architecture and security hands-on positions within the healthcare sector as an AWS customer. Outside of work, Marc enjoys his 3 dogs, 4 cats, and over 20 chickens, and practices his skills in cabinet making and woodworking.


Matt Richards is a Senior Solutions Architect at AWS, assisting customers in the retail industry. Having formerly been an AWS customer himself with a background in software engineering and solutions architecture, he now focuses on helping other customers in their application modernization and digital transformation journeys. Outside of work, Matt has a passion for music, singing, and drumming in several groups.

