Amazon DynamoDB is a NoSQL database known for its ability to scale automatically based on demand. However, there are scenarios where you need to handle large traffic spikes from the moment a table is created, or where a table has to support a sudden surge in traffic for an upcoming event. We’re introducing warm throughput, a new capability that provides insight into the throughput your DynamoDB tables and indexes can instantly support and lets you pre-warm them for optimized performance.

In this post, we’ll introduce warm throughput, explain how it works, and explore the benefits it offers for handling high-traffic scenarios. We’ll also cover best practices and practical use cases to help you make the most of this feature for your DynamoDB tables and indexes.

DynamoDB capacity modes

Before we explore warm throughput, it’s useful to understand DynamoDB’s capacity modes and throughput units.

Provisioned capacity mode lets you set specific throughput, which suits predictable workloads, while on-demand mode scales automatically to meet demand, which suits unpredictable workloads. Throughput is measured in Read Capacity Units (RCUs) and Write Capacity Units (WCUs): one RCU supports one strongly consistent read per second (or two eventually consistent reads per second) of an item up to 4 KB, and one WCU supports one write per second of an item up to 1 KB.

For more details, refer to DynamoDB throughput capacity in the developer guide.

What is warm throughput?

Warm throughput provides insight into the read and write operations your table or index can immediately support, with these values growing as usage increases. You can also pre-warm your table or index by proactively setting higher warm throughput values. This approach is especially beneficial in scenarios with anticipated instant traffic surges, such as product launches, flash sales, or major online events.

Understanding warm throughput

It’s important to clarify that a warm throughput value isn’t a maximum limit on your DynamoDB table’s capacity. Rather, it’s the minimum throughput that your table is prepared to handle instantaneously. When you proactively pre-warm your table, you’re setting the number of reads and writes your table can instantly support, helping to make sure that it can handle a specific level of traffic right from the start.

Pre-warming a table is an asynchronous, non-blocking operation, so you can carry out other table updates while the pre-warm process is underway. You can also adjust your warm throughput values at any time, even during an active pre-warm operation, by sending a new request with updated values. The time it takes for a pre-warm operation to complete depends on the requested warm throughput values and the storage size of the table or index.

The scaling capabilities of DynamoDB extend beyond the initial pre-warming activity, dynamically adjusting as your application grows. As your workload increases over time, DynamoDB automatically increases the warm throughput value to handle higher traffic demands, helping to ensure consistent performance without manual intervention.

For instance, if you pre-warm a table to support 100,000 write requests per second, your table will be ready to handle that traffic immediately. If your application’s traffic increases—reaching, for example, 150,000 write requests per second because of organic growth—DynamoDB will automatically scale to accommodate this additional load, allowing your table to seamlessly meet the evolving needs of your application. The warm throughput value will be adjusted to accurately reflect your table’s current capacity and performance capabilities.

To keep track of your warm throughput values and see how they evolve over time, you can use the DescribeTable API. This API provides detailed information about the current warm throughput values for both your tables and your global secondary indexes, helping you stay informed and make adjustments proactively based on traffic patterns and future requirements.

Determining whether you need to pre-warm

Before deciding if you need to pre-warm your DynamoDB tables, it’s important to understand the peak throughput that your application might require. Estimating peak throughput helps make sure your DynamoDB table is ready to handle the expected load without throttling or performance issues. Here’s a step-by-step guide to help you calculate your application’s peak throughput and determine whether pre-warming is necessary. These steps apply to both on-demand and provisioned capacity mode tables.

Step 1: Understand your workload patterns

The first step in calculating peak throughput is to gain a clear understanding of your workload’s traffic patterns. Consider the following:

- Type of operations: Are you primarily handling read requests, write requests, or a mix of both?

- Nature of traffic: Do you experience predictable spikes (such as daily or weekly patterns), or are there occasional surges (for example, flash sales, product launches, or major events)?

Step 2: Estimate peak requests per second

After you understand your workload, the next step is to estimate the number of read and write requests per second that your application needs to handle at peak times. This can be done in two ways:

- Historical data: If your application is already live, review traffic logs or monitoring data to identify the highest number of read and write requests per second during peak times. Use these values as a baseline for calculating peak throughput.

- Forecasting: If you’re preparing for an upcoming event or launch, you can estimate peak throughput by forecasting the expected increase in traffic. Consider the number of users, expected actions per user (for example, the number of product views or transactions), and the duration of the peak period.
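As a rough illustration of the forecasting approach, the following sketch turns a user count, expected actions per user, and the length of the peak window into an average request rate. The function name and the launch figures (600,000 users, 20 writes each, a 10-minute window) are hypothetical. The result is a mean rate over the window rather than an instantaneous peak, so real traffic will be burstier than this number suggests.

```python
import math


def forecast_request_rate(users: int, actions_per_user: float, window_seconds: float) -> int:
    """Average requests per second expected during a peak window, rounded up."""
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    # Total requests in the window divided by its length gives the mean rate.
    total_requests = users * actions_per_user
    return math.ceil(total_requests / window_seconds)


# Hypothetical launch: 600,000 users, 20 writes each, over a 10-minute window.
print(forecast_request_rate(600_000, 20, 600))  # 20000
```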

Step 3: Calculate read and write capacity units

After you have the estimated number of requests per second, you can calculate the read request units (RRUs) and write request units (WRUs) required for your DynamoDB table. In this example, we use on-demand capacity mode values, though the process is similar for tables using provisioned capacity mode.

- RRUs: One RRU is consumed for one strongly consistent read of an item up to 4 KB in size. For eventually consistent reads, one RRU covers two read requests of items up to 4 KB in size. To calculate RRUs:

  - Calculate the average size of your items in KB.

  - Divide the average item size by 4 and round up to the next whole number, because reads are metered in 4 KB increments.

  - Multiply by the number of read requests per second.

  - Adjust based on whether you use eventually consistent or strongly consistent reads (eventually consistent reads need half as many RRUs).

  - Note: Even items much smaller than 4 KB consume 1 RRU per strongly consistent read request or 0.5 RRU per eventually consistent read request.

- WRUs: One WRU is consumed to write one item up to 1 KB in size. To calculate WRUs:

  - Calculate the average size of your items in KB and round up to the next whole KB, because writes are metered in 1 KB increments.

  - Multiply by the number of write requests per second.

To learn more about capacity units, refer to the developer guide.
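The following Python sketch applies the calculation steps for on-demand request units. The function names and sample item sizes are illustrative assumptions; the code rounds item sizes up to the 4 KB (reads) or 1 KB (writes) metering increment and covers only standard single-item reads and writes.

```python
import math


def read_request_units(avg_item_kb: float, reads_per_second: int,
                       strongly_consistent: bool = True) -> float:
    """RRUs per second for a given average item size and read rate."""
    # Reads are metered in 4 KB increments; even tiny items cost one increment.
    units_per_read = max(1, math.ceil(avg_item_kb / 4))
    if not strongly_consistent:
        # An eventually consistent read costs half as much.
        return units_per_read * reads_per_second / 2
    return units_per_read * reads_per_second


def write_request_units(avg_item_kb: float, writes_per_second: int) -> int:
    """WRUs per second for a given average item size and write rate."""
    # Writes are metered in 1 KB increments, with a minimum of one unit.
    return max(1, math.ceil(avg_item_kb)) * writes_per_second


print(read_request_units(6, 10_000))                             # 20000
print(read_request_units(6, 10_000, strongly_consistent=False))  # 10000.0
print(write_request_units(2.5, 5_000))                           # 15000
```

A 6 KB item spans two 4 KB increments, so 10,000 strongly consistent reads per second need 20,000 RRUs, and a 2.5 KB item rounds up to 3 WRUs per write.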

Step 4: Account for variability

Traffic is rarely uniform, so it’s important to account for potential traffic bursts beyond your initial estimates. Consider adding a buffer to your peak throughput calculations to accommodate unexpected spikes.

For example, if you estimate 80,000 WRUs per second at peak, consider pre-warming for 100,000 WRUs per second to handle sudden increases in demand. Keep in mind that pre-warming with a buffer may incur additional costs.
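Applying a buffer consistently is easy with a small helper. This sketch (the name with_buffer is hypothetical) adds a percentage of headroom and rounds up with integer arithmetic; a 25% buffer turns the 80,000 WRU estimate into the 100,000 WRU pre-warm target.

```python
def with_buffer(estimated_units: int, buffer_percent: int) -> int:
    """Add buffer_percent of headroom to an estimate, rounding up to a whole unit."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (estimated_units * (100 + buffer_percent) + 99) // 100


print(with_buffer(80_000, 25))  # 100000
```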

Step 5: Determine if pre-warming is needed

After you’ve calculated the peak RRUs and WRUs, compare these values to the current warm throughput values for your table. If the calculated peak throughput is significantly higher than your current warm throughput values, or if you anticipate immediate traffic surges (such as during a product launch or flash sale), pre-warming is recommended. Pre-warming helps your table handle peak traffic without throttling. Throttling occurs when a partition’s capacity limits are exceeded, and the partition splitting that adds capacity can take time depending on the amount of stored data. Throttling and partition splitting can result in elevated latency for the client as the system adjusts to handle increased demand.
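One way to make this comparison repeatable is sketched below. The Throughput type and the shortfall and should_prewarm helpers are hypothetical, and the optional tolerance parameter stands in for "significantly higher", which is a judgment call rather than a DynamoDB rule. The example compares a 100,000-write peak against the 4,000-write, 12,000-read baseline of a new on-demand table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Throughput:
    """Read and write units per second."""
    reads: int
    writes: int


def shortfall(peak: Throughput, current_warm: Throughput) -> Throughput:
    """How far the estimated peak exceeds current warm throughput (never negative)."""
    return Throughput(
        reads=max(0, peak.reads - current_warm.reads),
        writes=max(0, peak.writes - current_warm.writes),
    )


def should_prewarm(peak: Throughput, current_warm: Throughput, tolerance: float = 0.0) -> bool:
    """True when either dimension exceeds current warm throughput by more than
    `tolerance`, expressed as a fraction of the current value (0.1 = 10%)."""
    gap = shortfall(peak, current_warm)
    return (gap.reads > current_warm.reads * tolerance
            or gap.writes > current_warm.writes * tolerance)


baseline = Throughput(reads=12_000, writes=4_000)
peak = Throughput(reads=12_000, writes=100_000)
print(shortfall(peak, baseline), should_prewarm(peak, baseline))
```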

Use case examples

Now that we’ve explored the concept of warm throughput, let’s dive into some real-world use cases where this feature can be particularly beneficial.

Preparing a new on-demand table for high initial traffic

A new DynamoDB on-demand table starts with initial warm throughput values of 4,000 write requests per second and 12,000 read requests per second. If traffic suddenly spikes, such as during a new application launch, DynamoDB gradually scales capacity to meet the increased demand. However, if the table instantly receives more than 4,000 write requests per second, some requests might be throttled during the scaling process. This throttling can lead to increased latency or failures, impacting the user experience and potentially resulting in lost revenue.

To prevent these issues, pre-warming the table is recommended if you anticipate high traffic from launch. Pre-warming ensures that your table is ready to handle the expected load immediately, reducing the risk of throttling and providing a seamless experience for users, without waiting for DynamoDB to scale reactively.

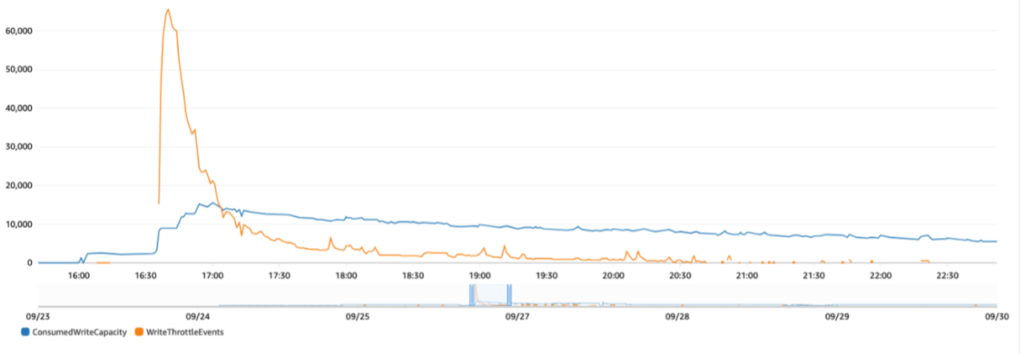

The following graph highlights a load test taken on a new on-demand table, which resulted in excessive throttling (orange line) until the table scaled to accommodate the larger than expected throughput (blue line).

By pre-warming in advance, you can set a baseline of 100,000 write requests per second. DynamoDB prepares the table to serve this level of traffic as soon as it arrives, avoiding scaling delays and the throttling that comes with them.

This preparation helps create a smooth user experience, where customers can complete transactions quickly, even during peak demand. There’s no need to worry about failed requests, slow performance, or scaling delays, giving you confidence that your system is prepared for the event.

The following graph shows the same load test we ran previously, except this time we pre-warmed the table to 100,000 WRUs. Because the table was already scaled out and ready to handle our throughput, there was no throttling.

Preparing for a large scale event

Imagine you’re an e-commerce company preparing for a flash sale during the Super Bowl, one of the highest-traffic events of the year. You already have a DynamoDB table in place to handle requests, but with the anticipated spike in traffic, it’s important to confirm that the table is prepared to handle the expected load. Based on your estimates, peak traffic during this event could reach 100,000 write requests per second, including a 10% buffer.

To prepare, first calculate your anticipated load, as outlined above, and compare it to the current warm throughput values of your table. If your estimated peak is higher than the existing warm throughput values, pre-warming the table is recommended. This will help prepare the table to handle the traffic immediately, reducing the risk of throttling or delays during this high-demand event.
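If the comparison shows a gap, pre-warming an existing table is an UpdateTable request with a WarmThroughput setting. The sketch below only builds the request parameters, so it runs without AWS credentials. The helper name is hypothetical, the 12,000 read units are an assumed value (the scenario only specifies writes), and sending the request assumes a Boto3 release recent enough to include warm throughput.

```python
from typing import Any


def build_prewarm_update(table_name: str, read_units: int, write_units: int) -> dict[str, Any]:
    """Keyword arguments for DynamoDB UpdateTable that raise a table's warm throughput."""
    return {
        "TableName": table_name,
        "WarmThroughput": {
            "ReadUnitsPerSecond": read_units,
            "WriteUnitsPerSecond": write_units,
        },
    }


params = build_prewarm_update("FlashSaleTable", 12_000, 100_000)
# With credentials configured, the request could be sent with:
#   import boto3
#   boto3.client("dynamodb").update_table(**params)
print(params)
```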

Preparing for a migration to DynamoDB

When migrating an existing application to DynamoDB, making sure that your tables are ready to handle the expected traffic from the start is important for a smooth transition. Migrating from a traditional relational database or another NoSQL solution often involves dealing with large volumes of data and traffic spikes as your extract, transform, and load (ETL) job writes to DynamoDB.

You can pre-warm your DynamoDB tables to the required capacity so they’re ready for the surge in read and write requests that can occur during a migration. Pre-warming helps eliminate the uncertainty that often accompanies a migration, particularly when there’s little room for downtime or performance degradation. Because the tables are already prepared for the expected throughput levels, your application can handle high traffic as soon as you migrate your data.
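To size a pre-warm for a bulk load, you can work backward from the migration window. This sketch assumes the ETL job spreads writes evenly across the window and that the table has no global secondary indexes (each index would add its own writes). The function name and the figures (720 million items, 1.5 KB each, four hours) are hypothetical.

```python
import math


def migration_write_units(item_count: int, avg_item_kb: float, load_window_hours: float) -> int:
    """WRUs per second needed to write item_count items within the load window."""
    window_seconds = load_window_hours * 3600
    # Writes are metered in 1 KB increments, with a minimum of one unit per item.
    wru_per_item = max(1, math.ceil(avg_item_kb))
    return math.ceil(item_count * wru_per_item / window_seconds)


# Hypothetical migration: 720 million items averaging 1.5 KB, loaded in 4 hours.
print(migration_write_units(720_000_000, 1.5, 4))  # 100000
```

Because real ETL throughput is rarely perfectly even, the result is a lower bound that you would typically pad with a buffer before pre-warming.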

Whether you’re migrating a high-traffic e-commerce platform or a critical enterprise workload, increasing the warm throughput values for your tables helps give your application the performance baseline it needs, avoiding potential throttling or delays as users begin interacting with the system. Now that we’ve discussed the benefits and use cases of warm throughput, let’s walk through how you can get started setting it up.

Getting started with warm throughput

With just a few steps in the AWS Management Console, the AWS Command Line Interface (AWS CLI), or the AWS SDKs, you can configure your DynamoDB table so that it’s prepared for high traffic loads in advance.

Set a warm throughput value by using the AWS Management Console:

- Navigate to the DynamoDB console and choose Create table.

- Specify your table’s primary key attributes.

- Under Table settings, select Customize settings.

- For Read/write capacity settings, choose On-demand.

- Under Warm Throughput, enter the read and write units per second that you expect at peak.

- Complete the table creation process.

For guidance on applying a warm throughput value to existing tables or indexes, refer to the developer guide.
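The same configuration can be expressed through the AWS SDK for Python (Boto3). The sketch below builds CreateTable parameters for an on-demand table that is pre-warmed at creation, reusing the FlashSaleTable and ProductID names from the CloudFormation example in this post. The helper function is hypothetical, and the actual create_table call is left commented out so the example runs without credentials.

```python
from typing import Any


def build_create_table_request(
    table_name: str,
    partition_key: str,
    read_units_per_second: int,
    write_units_per_second: int,
) -> dict[str, Any]:
    """Keyword arguments for DynamoDB CreateTable: an on-demand table pre-warmed at creation."""
    return {
        "TableName": table_name,
        "AttributeDefinitions": [{"AttributeName": partition_key, "AttributeType": "S"}],
        "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
        "BillingMode": "PAY_PER_REQUEST",  # on-demand capacity mode
        "WarmThroughput": {
            "ReadUnitsPerSecond": read_units_per_second,
            "WriteUnitsPerSecond": write_units_per_second,
        },
    }


params = build_create_table_request("FlashSaleTable", "ProductID", 50_000, 100_000)
# import boto3
# boto3.client("dynamodb").create_table(**params)
```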

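You can also set warm throughput values by using the AWS Command Line Interface (AWS CLI): pass the --warm-throughput option, with ReadUnitsPerSecond and WriteUnitsPerSecond values, to the create-table command for a new table or to the update-table command for an existing one.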

Best practices

The best practices for pre-warming include:

- Estimate accurately: Analyze past traffic patterns or use forecasting tools to estimate peak throughput accurately.

- Apply to critical tables: Focus on tables that support high-profile events or applications where immediate traffic spikes are expected.

- Adjust as needed: Pre-warm your tables as you refine your understanding of your workload requirements.

Monitoring warm throughput values

Understanding and managing your DynamoDB table’s current capabilities is crucial, especially ahead of large events. You can monitor warm throughput values using the DescribeTable API, which is available for all on-demand and provisioned mode tables. This call provides you with the current warm throughput values for your tables, helping you verify everything is in place before a significant traffic event.

```shell
aws dynamodb describe-table --table-name FlashSaleTable
```
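In the DescribeTable response, warm throughput appears under Table.WarmThroughput for the table and under each entry of GlobalSecondaryIndexes for indexes. The sketch below pulls those values out of a response dictionary and checks whether every status is ACTIVE, assuming that any other status means a change is still being applied. The sample response is hand-written and abridged, and CategoryIndex is a hypothetical index name.

```python
from typing import Any


def warm_throughput_summary(response: dict[str, Any]) -> dict[str, dict[str, Any]]:
    """Map the table name and each GSI name to its WarmThroughput description."""
    table = response["Table"]
    summary: dict[str, dict[str, Any]] = {}
    if "WarmThroughput" in table:
        summary[table["TableName"]] = table["WarmThroughput"]
    for index in table.get("GlobalSecondaryIndexes", []):
        if "WarmThroughput" in index:
            summary[index["IndexName"]] = index["WarmThroughput"]
    return summary


def all_active(summary: dict[str, dict[str, Any]]) -> bool:
    """True when no table or index reports a non-ACTIVE warm throughput status."""
    return all(entry.get("Status") == "ACTIVE" for entry in summary.values())


# Abridged, hand-written response with hypothetical values. With credentials you could
# instead use: boto3.client("dynamodb").describe_table(TableName="FlashSaleTable")
sample = {
    "Table": {
        "TableName": "FlashSaleTable",
        "WarmThroughput": {"ReadUnitsPerSecond": 50000, "WriteUnitsPerSecond": 100000, "Status": "ACTIVE"},
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "CategoryIndex",
                "WarmThroughput": {"ReadUnitsPerSecond": 12000, "WriteUnitsPerSecond": 100000, "Status": "UPDATING"},
            }
        ],
    }
}

summary = warm_throughput_summary(sample)
print(summary["CategoryIndex"]["WriteUnitsPerSecond"], all_active(summary))
```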

By regularly checking these settings, you can confidently prepare for any large-scale operation, helping to ensure that your DynamoDB tables are always ready to perform at their best.

Warm throughput compatibility

Warm throughput is fully integrated into essential DynamoDB features, including global secondary indexes and global tables, helping to ensure consistent performance across your entire system.

Secondary indexes

For global secondary indexes (GSI), you can individually specify the warm throughput values, allowing you to fine-tune performance to match your workload requirements. To avoid bottlenecks when replicating writes from the base table to the GSI, it’s recommended that you set the WriteUnitsPerSecond for your GSIs to be at least equal to that of the base table. However, if your index frequently updates one or both of its keys (partition or sort key), it’s advisable to increase the WriteUnitsPerSecond to 1.5 times the base table’s value, supporting optimal performance and helping to prevent write contention.

The following example code snippet demonstrates adding a pre-warmed GSI:
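As a minimal sketch of the GSI guidance, the following code derives a GSI write value from the base table’s and then builds UpdateTable parameters that create a pre-warmed index on an on-demand table. The index name CategoryIndex, the Category key, the ALL projection, and the 12,000 read units are hypothetical choices; a provisioned-mode table would also need ProvisionedThroughput for the new index.

```python
import math
from typing import Any


def recommended_gsi_write_units(base_table_write_units: int, keys_updated_often: bool) -> int:
    """Match the base table, or use 1.5x when the index's key attributes change often."""
    factor = 1.5 if keys_updated_often else 1.0
    return math.ceil(base_table_write_units * factor)


def build_create_gsi_request(
    table_name: str,
    index_name: str,
    partition_key: str,
    read_units: int,
    write_units: int,
) -> dict[str, Any]:
    """Keyword arguments for UpdateTable that add a pre-warmed GSI to an on-demand table."""
    return {
        "TableName": table_name,
        # The new index key must be declared in AttributeDefinitions.
        "AttributeDefinitions": [{"AttributeName": partition_key, "AttributeType": "S"}],
        "GlobalSecondaryIndexUpdates": [
            {
                "Create": {
                    "IndexName": index_name,
                    "KeySchema": [{"AttributeName": partition_key, "KeyType": "HASH"}],
                    "Projection": {"ProjectionType": "ALL"},
                    "WarmThroughput": {
                        "ReadUnitsPerSecond": read_units,
                        "WriteUnitsPerSecond": write_units,
                    },
                }
            }
        ],
    }


gsi_writes = recommended_gsi_write_units(100_000, keys_updated_often=True)  # 150000
params = build_create_gsi_request("FlashSaleTable", "CategoryIndex", "Category", 12_000, gsi_writes)
# import boto3
# boto3.client("dynamodb").update_table(**params)
```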

For instructions on updating global secondary indexes, see the developer guide.

DynamoDB global tables

Warm throughput is fully compatible with DynamoDB Global Tables v2019.11.21 (current), supporting efficient management of global workloads with consistent performance. You can pre-warm tables in all replicated AWS Regions, helping to make sure that they’re ready to handle high traffic simultaneously, regardless of the user’s geographic location.

By default, updates to warm throughput values are automatically synchronized across all replicas for both read and write operations, helping keep performance consistent across all Regions.

Infrastructure as Code (IaC)

One of the key advantages of warm throughput is its integration with infrastructure as code (IaC) tools such as AWS CloudFormation. Previously, pre-warming a DynamoDB on-demand table required several steps: you needed to switch the table to provisioned mode, manually increase the capacity, and then switch it back to on-demand mode after a certain period. This approach achieved the outcome but required multiple cycles of deployments and adjustments.

With warm throughput, managing DynamoDB tables using IaC becomes significantly more straightforward. Now, you can pre-warm your table by passing in values for warm throughput during the table creation process. This removes the need for manual intervention and complex workflows, enabling you to define your table’s performance baseline directly within your IaC templates.

The following CloudFormation template defines a DynamoDB table named FlashSaleTable in on-demand (PAY_PER_REQUEST) mode. The table has a primary key, ProductID, of type String. The WarmThroughput property sets ReadUnitsPerSecond to 50,000 and WriteUnitsPerSecond to 100,000.
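A sketch of such a template follows, generated as JSON from Python so its structure can be checked programmatically. It assumes the WarmThroughput property of AWS::DynamoDB::Table takes ReadUnitsPerSecond and WriteUnitsPerSecond; the helper name is hypothetical. You would write the output to a file before deploying it, for example with aws cloudformation deploy.

```python
import json


def flash_sale_table_template() -> dict:
    """CloudFormation template (as a dict) for a pre-warmed, on-demand table."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "FlashSaleTable": {
                "Type": "AWS::DynamoDB::Table",
                "Properties": {
                    "TableName": "FlashSaleTable",
                    "BillingMode": "PAY_PER_REQUEST",
                    "AttributeDefinitions": [{"AttributeName": "ProductID", "AttributeType": "S"}],
                    "KeySchema": [{"AttributeName": "ProductID", "KeyType": "HASH"}],
                    "WarmThroughput": {"ReadUnitsPerSecond": 50000, "WriteUnitsPerSecond": 100000},
                },
            }
        },
    }


print(json.dumps(flash_sale_table_template(), indent=2))
```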

The following template creates a DynamoDB global table named FlashSaleTable, replicated across the eu-west-1 and eu-west-2 Regions. As in the single-Region example, it sets the warm throughput values to 50,000 RCUs and 100,000 WCUs.
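A comparable sketch for the global table uses the AWS::DynamoDB::GlobalTable resource and assumes that resource accepts the same WarmThroughput property. The StreamSpecification is included because CloudFormation requires one for global tables with more than one replica, and the stack would need to be deployed in one of the listed Regions. The helper name is hypothetical.

```python
import json


def flash_sale_global_table_template(regions: list[str]) -> dict:
    """CloudFormation template (as a dict) for a pre-warmed, on-demand global table."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Resources": {
            "FlashSaleTable": {
                "Type": "AWS::DynamoDB::GlobalTable",
                "Properties": {
                    "TableName": "FlashSaleTable",
                    "BillingMode": "PAY_PER_REQUEST",
                    "AttributeDefinitions": [{"AttributeName": "ProductID", "AttributeType": "S"}],
                    "KeySchema": [{"AttributeName": "ProductID", "KeyType": "HASH"}],
                    # Required when the global table has more than one replica.
                    "StreamSpecification": {"StreamViewType": "NEW_AND_OLD_IMAGES"},
                    "Replicas": [{"Region": region} for region in regions],
                    "WarmThroughput": {"ReadUnitsPerSecond": 50000, "WriteUnitsPerSecond": 100000},
                },
            }
        },
    }


print(json.dumps(flash_sale_global_table_template(["eu-west-1", "eu-west-2"]), indent=2))
```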

Pricing for warm throughput

The pricing for warm throughput is based on the cost of provisioned WCUs and RCUs in the specific Region where your table is located. When you pre-warm a table, the cost is calculated as a one-time charge based on the difference between the new values and the current warm throughput that the table or index can support.

By default, on-demand tables have a baseline warm throughput of 4,000 WCUs and 12,000 RCUs. When pre-warming a newly created on-demand table, you are only charged for the difference between your specified values and these baseline values.

For example, if you pre-warm an existing table to 40,000 WCUs and 40,000 RCUs, and the table already supports 10,000 WCUs and 12,000 RCUs, the one-time charge applies only to the additional 30,000 WCUs and 28,000 RCUs. Pre-warming is billed at provisioned capacity prices for the Standard table class, regardless of the table class or capacity mode that the table currently uses.

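In the US East (N. Virginia) Region (us-east-1), provisioned capacity costs $0.00065 per WCU and $0.00013 per RCU, so the one-time charge is calculated as follows:

- Writes: 30,000 WCUs × $0.00065 = $19.50

- Reads: 28,000 RCUs × $0.00013 = $3.64

Total cost: $23.14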
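The arithmetic can be reproduced with a short sketch. The prices are assumptions: $0.00065 per WCU and $0.00013 per RCU, the us-east-1 provisioned rates for the Standard table class that reproduce the $23.14 total; check current pricing for your Region. The function name is hypothetical, and Decimal keeps the currency math exact.

```python
from decimal import Decimal

# Assumed us-east-1 provisioned prices (Standard table class); verify before use.
WCU_PRICE = Decimal("0.00065")
RCU_PRICE = Decimal("0.00013")


def prewarm_charge(target_wcu: int, target_rcu: int, current_wcu: int, current_rcu: int,
                   wcu_price: Decimal = WCU_PRICE, rcu_price: Decimal = RCU_PRICE) -> Decimal:
    """One-time charge for raising warm throughput from current to target values."""
    # Only the increase over what the table already supports is billed.
    extra_wcu = max(0, target_wcu - current_wcu)
    extra_rcu = max(0, target_rcu - current_rcu)
    return extra_wcu * wcu_price + extra_rcu * rcu_price


print(prewarm_charge(40_000, 40_000, 10_000, 12_000))
```

For a newly created on-demand table, pass the 4,000 WCU and 12,000 RCU baseline as the current values.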

This prepares your table to immediately handle high traffic volumes without any scaling delays; it also means you’re only charged for the capacity that you configure. To better manage your costs, it’s important to accurately estimate your anticipated throughput needs and pre-warm accordingly. To learn more, see the DynamoDB pricing guide.

Conclusion

Warm throughput is a new feature that you can use to prepare both new and existing DynamoDB tables for high traffic, starting from the moment a new table is created. Whether you’re launching a new product or preparing for a massive online event such as the Super Bowl, warm throughput helps ensure that your tables are ready to deliver consistent, reliable performance.

To learn more about warm throughput, refer to the Amazon DynamoDB Developer Guide.

About the Author

Lee Hannigan is a Sr. DynamoDB Specialist Solutions Architect based in Donegal, Ireland. He brings a wealth of expertise in distributed systems, backed by a strong foundation in big data and analytics technologies. In his role as a DynamoDB Specialist Solutions Architect, Lee excels in assisting customers with the design, evaluation, and optimization of their workloads leveraging DynamoDB’s capabilities.
