In recent years, the field of text-to-speech (TTS) synthesis has seen rapid advancements, yet it remains fraught with challenges. Traditional TTS models often rely on complex architectures, including deep neural networks with specialized modules such as vocoders, text analyzers, and other adapters to synthesize realistic human speech. These complexities make TTS systems resource-intensive, limiting their adaptability and accessibility, especially for on-device applications. Moreover, current methods often require large datasets for training and typically lack flexibility in voice cloning or adaptation, hindering personalized use cases. The cumbersome nature of these approaches and the increasing demand for versatile and efficient voice synthesis have prompted researchers to explore innovative alternatives.

OuteTTS-0.1-350M: Simplifying TTS with Pure Language Modeling

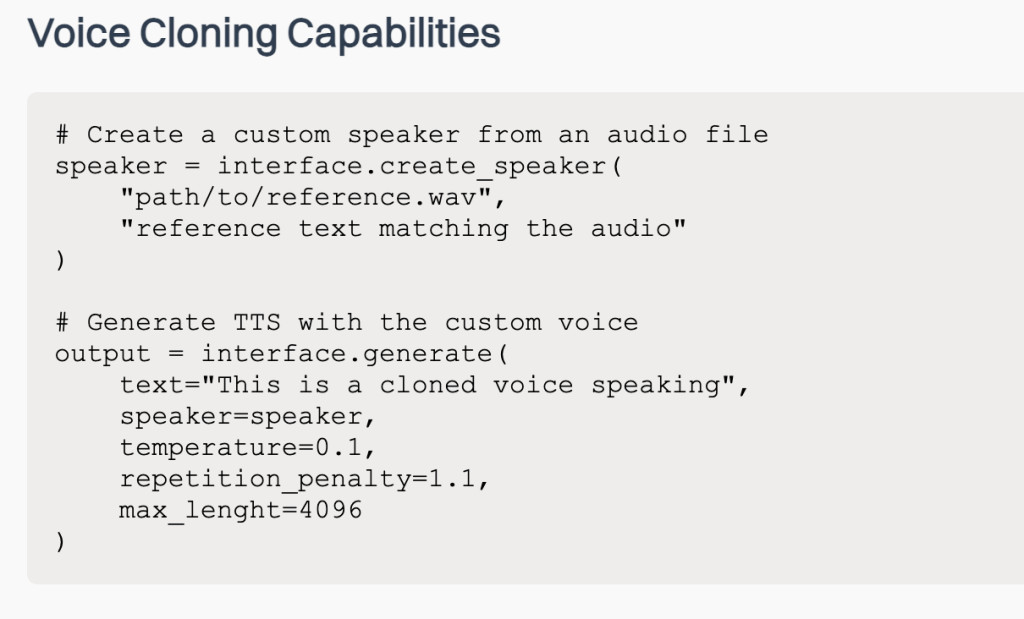

Oute AI has released OuteTTS-0.1-350M, a text-to-speech model that relies on pure language modeling, with no external adapters or complex architectures. The model generates natural-sounding speech by handling text and audio synthesis in a single framework. Built on the LLaMA architecture, OuteTTS-0.1-350M works with audio tokens directly rather than relying on specialized TTS vocoders or complex intermediary steps. Its zero-shot voice cloning capability lets it mimic a new voice from only a few seconds of reference audio, which makes it a notable step for personalized TTS applications. Released under the CC-BY license, the model is open for developers to experiment with and integrate into their own projects, including on-device solutions.

Technical Details and Benefits

OuteTTS-0.1-350M treats TTS as a pure language modeling problem, bridging text input and speech output through a structured but simple pipeline. The pipeline has three steps: audio tokenization using WavTokenizer, connectionist temporal classification (CTC) forced alignment to map words to audio tokens, and the creation of structured prompts containing the transcription, duration, and audio tokens. WavTokenizer, which produces 75 audio tokens per second, converts audio into token sequences that the model can understand and generate. The LLaMA-based architecture lets the model treat speech generation as a task similar to text generation, which reduces model complexity and computation costs. Compatibility with llama.cpp also means that OuteTTS can run on-device, offering real-time speech generation without the need for cloud services.
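
To make the three-step pipeline more concrete, here is a minimal Python sketch of the bookkeeping it implies. The rate of 75 tokens per second comes from the description above. Everything else is an assumption made for illustration: the `AlignedWord` class, the `build_prompt` function, the `<|...|>` marker strings, and the token IDs are all hypothetical, and this is not the actual OuteTTS prompt format.

```python
from dataclasses import dataclass
from typing import List

TOKENS_PER_SECOND = 75  # WavTokenizer rate stated in the article


@dataclass
class AlignedWord:
    """One word with its aligned audio span (hypothetical structure)."""
    text: str
    start_s: float           # start time in seconds, e.g. from CTC forced alignment
    end_s: float             # end time in seconds
    audio_tokens: List[int]  # codec token IDs covering this span


def expected_token_count(duration_s: float) -> int:
    """Approximate number of audio tokens for a clip of the given length."""
    return round(duration_s * TOKENS_PER_SECOND)


def build_prompt(words: List[AlignedWord]) -> str:
    """Serialize transcription, per-word duration, and audio tokens as text.

    The marker strings are invented placeholders, not the model's real
    special tokens.
    """
    transcript = " ".join(w.text for w in words)
    lines = [f"<|text|>{transcript}<|audio|>"]
    for w in words:
        duration = w.end_s - w.start_s
        tokens = "".join(f"<|{t}|>" for t in w.audio_tokens)
        lines.append(f"{w.text}<|t_{duration:.2f}|>{tokens}")
    return "\n".join(lines)


if __name__ == "__main__":
    words = [
        AlignedWord("hello", 0.00, 0.40, [101, 57, 880]),
        AlignedWord("world", 0.40, 0.88, [12, 430]),
    ]
    print(expected_token_count(10.0))  # 10 s of audio at 75 tokens/s
    print(build_prompt(words))
```

The point of the sketch is that, once audio is expressed as discrete tokens and aligned to words, the training example is just a text sequence, so a standard language model can be trained on it without a separate acoustic module.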

Why OuteTTS-0.1-350M Matters

The importance of OuteTTS-0.1-350M lies in its potential to democratize TTS technology by making it accessible, efficient, and easy to use. Unlike conventional models that require extensive pre-processing and specific hardware capabilities, this model’s pure language modeling approach reduces the dependency on external components, thereby simplifying deployment. Its zero-shot voice cloning capability is a significant advancement, allowing users to create custom voices with minimal data, opening doors for applications in personalized assistants, audiobooks, and content localization. The model’s performance is particularly impressive considering its size of only 350 million parameters, achieving competitive results without the overhead seen in much larger models. Initial evaluations have shown that OuteTTS-0.1-350M can effectively generate natural-sounding speech with accurate intonation and minimal artifacts, making it suitable for diverse real-world applications. The success of this approach demonstrates that smaller, more efficient models can perform competitively in domains that traditionally relied on extremely large-scale architectures.
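
As a rough back-of-the-envelope illustration of why a 350-million-parameter model is plausible for on-device use, the Python sketch below estimates weight storage at a few common precisions. Only the parameter count comes from the article. The bytes-per-parameter values are the nominal sizes of those formats, and the estimate ignores activations, the KV cache, and quantization overhead, so real memory use will be higher.

```python
PARAMS = 350_000_000  # parameter count stated in the article

# Nominal bytes per parameter for common numeric formats (assumed, not measured).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_size_gb(params: int, bytes_per_param: float) -> float:
    """Weight storage in gigabytes, using 1 GB = 10**9 bytes."""
    return params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_size_gb(PARAMS, nbytes):.2f} GB")
```

Under these assumptions the half-precision weights come to well under a gigabyte, which is consistent with the article's emphasis on on-device deployment.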

Conclusion

OuteTTS-0.1-350M marks a meaningful step forward in text-to-speech technology, using a simplified architecture to deliver high-quality speech synthesis with minimal computational requirements. Its use of the LLaMA architecture and WavTokenizer, together with zero-shot voice cloning that needs no complex adapters, sets it apart from traditional TTS models. Because it can run on-device, the model could benefit applications in accessibility, personalization, and human-computer interaction, bringing advanced TTS to a broader audience. Oute AI’s release highlights the potential of pure language modeling for audio generation and opens up new directions for TTS technology. As the research community continues to explore and build on this work, models like OuteTTS-0.1-350M may well pave the way for smarter, more efficient voice synthesis systems.

Key Takeaways

- OuteTTS-0.1-350M offers a simplified approach to TTS by leveraging pure language modeling without complex adapters or external components.

- Built on the LLaMA architecture, the model works with WavTokenizer audio tokens and generates them directly, making the process more efficient.

- The model is capable of zero-shot voice cloning, allowing it to replicate new voices with only a few seconds of reference audio.

- OuteTTS-0.1-350M is designed for on-device performance and is compatible with llama.cpp, making it ideal for real-time applications.

- Despite its relatively small size of 350 million parameters, the model performs competitively with larger, more complex TTS systems.

- The model’s accessibility and efficiency make it suitable for a wide range of applications, including personalized assistants, audiobooks, and content localization.

- Oute AI’s release under a CC-BY license encourages further experimentation and integration into diverse projects, democratizing advanced TTS technology.

Check out the model on Hugging Face. All credit for this research goes to the researchers of this project.

The post OuteTTS-0.1-350M Released: A Novel Text-to-Speech (TTS) Synthesis Model that Leverages Pure Language Modeling without External Adapters appeared first on MarkTechPost.
