Blockchain and generative AI are two technical fields that have received a lot of attention in recent years, and an emerging set of use cases can benefit from combining them. In January 2024, Vitalik Buterin, co-founder of Ethereum and a prominent figure in the crypto community (more on Vitalik in this article or this recently released movie), shared his views on this subject in The promise and challenges of crypto + AI applications. One of the use cases he mentioned is using a decentralized autonomous organization (DAO) to govern the different steps of an AI model's lifecycle:

“‘DAOs to democratically govern AI’ might actually make sense: we can create an on-chain DAO that governs the process of who is allowed to submit training data […], who is allowed to make queries, and how many, and use cryptographic techniques like MPC to encrypt the entire pipeline of creating and running the AI from each individual user’s training input all the way to the final output of each query.”

The proposed solution makes the governance of AI training data decentralized and transparent, which promotes data integrity and accountability. Diverse stakeholders can take part democratically in deciding which data the model is built on, which can help make the model fairer and more inclusive. Because approval decisions are recorded on a blockchain, they are verifiable and tamper-resistant, which mitigates the risk of data tampering. Additionally, integrating AI with blockchain can help streamline compliance with data privacy regulations by offering robust, user-centric data management.

In this four-part series, we explore how to implement such a use case on AWS. We build a solution that governs the training data ingestion process of an AI model, using a smart contract and serverless components. We guide you through the different steps to build the solution:

- In Part 1, you review the overall architecture of the solution and set up a large language model (LLM) knowledge base

- In Part 2, you create and deploy a contract on Ethereum that represents the DAO

- In Part 3, you set up Amazon API Gateway and deploy AWS Lambda functions to copy data from InterPlanetary File System (IPFS) to Amazon Simple Storage Service (Amazon S3) and start a knowledge base ingestion job

- In Part 4, you configure MetaMask authentication, create a frontend interface, and test the solution.

The proposed solution serves as a compelling example of how you can build innovative approaches using both AI and blockchain technologies on AWS to address AI governance. This solution offers a solid foundation that you can extend to cover additional important areas such as responsible AI and securing, governing, and enriching the LLM lifecycle using blockchain.

Solution overview

In the proposed solution, a series of serverless components govern the training data ingestion process of an AI model by checking on a smart contract whether a given user is allowed to provide a specific training dataset.

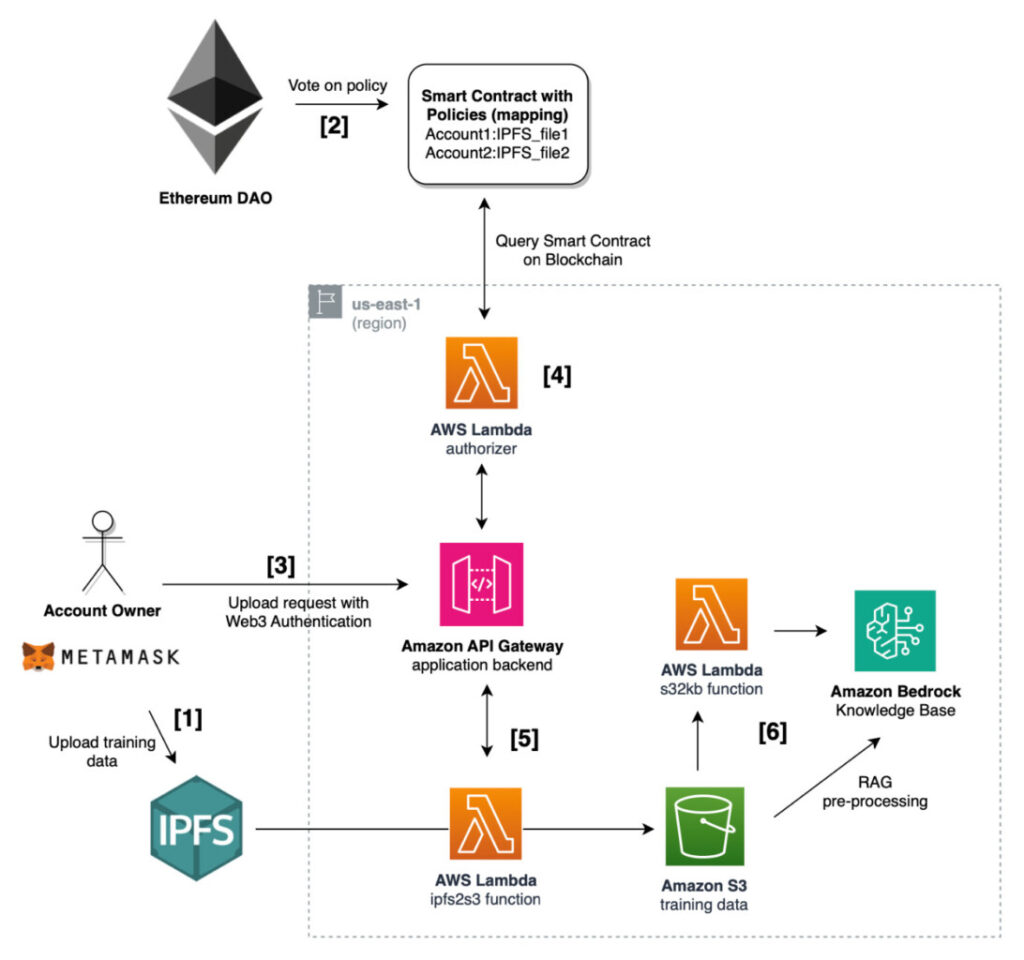

The following diagram shows the different components of the solution.

The architecture showcases a decentralized governance model for AI training data using an Ethereum DAO:

- Account owners upload training data to IPFS and submit proposals to the DAO.

- The DAO votes on policies and updates the smart contract with approved data mappings.

- The account owner requests the training data to be uploaded.

- A successful authentication invokes a Lambda function.

- The function transfers data from IPFS to Amazon S3.

- Another Lambda function starts the ingestion of the data into Amazon Bedrock Knowledge Bases, which chunks and embeds the documents so they can be retrieved for Retrieval Augmented Generation (RAG).
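As a rough illustration of the last step, the following Python sketch shows what an S3-triggered Lambda function that starts the knowledge base ingestion might look like. It is not the implementation from this series (that comes in Part 3). The environment variable names `KNOWLEDGE_BASE_ID` and `DATA_SOURCE_ID` are hypothetical. The extra `client` parameter is also an assumption, added so that the event parsing can be exercised without AWS. The call itself is the `bedrock-agent` client's `start_ingestion_job`.

```python
import os
import urllib.parse
from typing import Any, List, Optional, Tuple


def new_objects(event: dict) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs from an S3 event notification.

    S3 URL-encodes object keys in event records (spaces become '+'), so decode them.
    """
    pairs = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        key = urllib.parse.unquote_plus(s3["object"]["key"])
        pairs.append((s3["bucket"]["name"], key))
    return pairs


def handler(event: dict, context: Any = None, client: Optional[Any] = None) -> dict:
    """Start a knowledge base sync when new training data lands in the bucket."""
    objects = new_objects(event)
    if not objects:
        return {"started": False, "objects": []}
    if client is None:
        import boto3  # lazy import so the parsing logic is testable without boto3

        client = boto3.client("bedrock-agent")
    # Hypothetical environment variables holding the knowledge base and data source IDs.
    response = client.start_ingestion_job(
        knowledgeBaseId=os.environ["KNOWLEDGE_BASE_ID"],
        dataSourceId=os.environ["DATA_SOURCE_ID"],
    )
    return {
        "started": True,
        "objects": objects,
        "ingestionJobId": response["ingestionJob"]["ingestionJobId"],
    }
```

An ingestion job re-syncs the whole data source rather than only the new object. In practice, you may also want to handle the case where a sync is already running for the same data source when a second file arrives.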

The following are some key elements to consider:

- The AI model lifecycle event that we consider is the ingestion of training data to an Amazon Bedrock knowledge base. However, you could extend the solution to additional events, or apply it to another process, such as the creation of an Amazon Bedrock custom model.

- The smart contract contains a mapping between addresses and the IPFS files that the address owners are allowed to upload to train the model. To create a governance mechanism on top of it, you could use a Governor contract (for example, using the Governor contracts from OpenZeppelin), creating the opportunity for more advanced use cases. For example, you could use the governor not only to select training data, but also to collect feedback from users of the AI model.

- For this post, we assume that the training data is public and can be stored in a decentralized manner on IPFS. The user providing the training data can upload the data onto IPFS. After the DAO reviews and accepts the training data (thereby updating the smart contract), the user can authenticate against an API gateway and invoke the import of the training data.

- The user authentication is done using a Lambda authorizer. Upon receiving a signed message from the user, the Lambda authorizer recovers the Ethereum account that signed the message and checks that the smart contract maps this account to the requested IPFS content identifier (CID).

- Upon successful authentication, a Lambda function is invoked to upload the content of the IPFS file to an S3 bucket.

- After the data is uploaded to Amazon S3, an S3 event notification invokes a Lambda function that automatically starts an ingestion job for the Amazon Bedrock knowledge base.
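To make the authorization check more concrete, here is a minimal Python sketch of the Lambda authorizer logic. It makes several assumptions. The smart contract's mapping is replaced by an in-memory dictionary. Signature recovery is injected as a `recover_signer` function; in a real deployment this could wrap `eth_account`'s `Account.recover_message` with `encode_defunct`, and the mapping lookup would be a read call to the contract, for example through web3.py. The header names `x-message`, `x-signature`, and `x-cid` are hypothetical. Only the returned policy shape (`principalId` plus an `execute-api:Invoke` statement) follows the API Gateway Lambda authorizer format.

```python
from typing import Callable, Dict, Set

# Hypothetical in-memory stand-in for the smart contract's mapping:
# lowercase Ethereum address -> IPFS CIDs that the address is allowed to upload.
ContractMapping = Dict[str, Set[str]]


def is_allowed(mapping: ContractMapping, account: str, cid: str) -> bool:
    """Mirror the on-chain check: is this CID approved for this account?"""
    return cid in mapping.get(account.lower(), set())


def build_policy(principal_id: str, effect: str, method_arn: str) -> dict:
    """Build the IAM policy that an API Gateway Lambda authorizer returns."""
    return {
        "principalId": principal_id,
        "policyDocument": {
            "Version": "2012-10-17",
            "Statement": [
                {"Action": "execute-api:Invoke", "Effect": effect, "Resource": method_arn}
            ],
        },
    }


def authorize(
    event: dict,
    recover_signer: Callable[[str, str], str],
    mapping: ContractMapping,
) -> dict:
    """REQUEST authorizer sketch.

    recover_signer(message, signature) must return the signing address or raise.
    """
    headers = {k.lower(): v for k, v in (event.get("headers") or {}).items()}
    message = headers.get("x-message", "")
    signature = headers.get("x-signature", "")
    cid = headers.get("x-cid", "")
    try:
        account = recover_signer(message, signature)
    except Exception:
        # A signature that cannot be verified is denied outright.
        return build_policy("anonymous", "Deny", event["methodArn"])
    effect = "Allow" if is_allowed(mapping, account, cid) else "Deny"
    return build_policy(account, effect, event["methodArn"])
```

In this sketch, the CID travels in a header separate from the signed message. A sturdier design would probably put the CID, plus a nonce or expiry, inside the signed message itself, so that a captured signature cannot be replayed for a different file.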

Prerequisites

To recreate the solution outlined in this series, make sure you have the following in place:

- The ability to upload files into Amazon S3 in your AWS account.

- The ability to use Amazon Bedrock Knowledge Bases (one of the tools in Amazon Bedrock) in your AWS account.

- Access to the Amazon Titan Text Embeddings V2 and Anthropic’s Claude 3.5 Sonnet models on Amazon Bedrock. To request access, see Access Amazon Bedrock foundation models.

- Familiarity with MetaMask and Remix. To learn more, review Getting started with MetaMask and Remix.

- Familiarity with IPFS and a pinning service such as Filebase. Review IPFS on AWS and Filebase for more details.

- A basic understanding of Amazon S3, API Gateway, Lambda, and AWS CloudShell.

- Access to the us-east-1 AWS Region (if you decide to use another Region, refer to AWS Services by Region to verify that all the required services are available).

Set up the LLM knowledge base

Amazon Bedrock Knowledge Bases is a fully managed RAG service that lets you bring together data from multiple sources into a repository of information. With knowledge bases, you can quickly build an application that takes advantage of RAG, a technique in which the retrieval of information from data sources augments the generation of model responses.

You can use a knowledge base not only to answer user queries, but also to augment prompts provided to foundation models (FMs) by providing context to the prompt. Knowledge base responses also come with citations, so you can find more information by looking up the exact text that a response is based on and check that the response makes sense and is factually correct. For a more detailed introduction to RAG, you can refer to What is RAG?

Create the S3 bucket

To create the knowledge base, you start by creating an S3 bucket. Complete the following steps:

- On the Amazon S3 console, choose Buckets in the navigation pane.

- Choose Create bucket.

- For Bucket name, enter a name (for example, crypto-ai-kb-<your_account_id>).

- Choose Create bucket.
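If you prefer to script this step, the following Python sketch does roughly the same with boto3. It assumes that credentials are already configured (for example, in CloudShell) and that you work in us-east-1. The helper names are hypothetical. The clients can be passed in so that the naming logic runs without AWS.

```python
from typing import Any, Optional


def kb_bucket_name(account_id: str, prefix: str = "crypto-ai-kb") -> str:
    """Return the example bucket name from this post: crypto-ai-kb-<account_id>."""
    if len(account_id) != 12 or not account_id.isdigit():
        raise ValueError("expected a 12-digit AWS account ID")
    return f"{prefix}-{account_id}"


def create_kb_bucket(s3: Optional[Any] = None, sts: Optional[Any] = None) -> str:
    """Create the knowledge base bucket in us-east-1 and return its name."""
    if s3 is None or sts is None:
        import boto3  # lazy import so the naming logic is testable without boto3

        if s3 is None:
            s3 = boto3.client("s3", region_name="us-east-1")
        if sts is None:
            sts = boto3.client("sts")
    account_id = sts.get_caller_identity()["Account"]
    name = kb_bucket_name(account_id)
    # In us-east-1, CreateBucket is called without a LocationConstraint.
    s3.create_bucket(Bucket=name)
    return name
```

In any other Region, `create_bucket` also needs `CreateBucketConfiguration={"LocationConstraint": "<region>"}`.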

Create the Amazon Bedrock knowledge base

You can now create a knowledge base using the instructions from Create an Amazon Bedrock knowledge base:

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane (under Builder tools).

- Choose Create knowledge base.

- For Knowledge base name, enter a name (for example, crypto-ai-kb).

- Under IAM permissions, choose Create and use a new service role.

- Under Choose data source, choose Amazon S3.

- Choose Next.

- For Data source name, enter a name (for example, EIPs) and for Data source location, choose This AWS account.

- Under S3 URI, choose Browse S3 and choose the S3 bucket you created.

- Choose Next.

- For Embeddings model, choose Titan Text Embeddings v2.

Amazon Titan Text Embeddings V2 is a lightweight, efficient model suited to high-accuracy retrieval tasks. It supports flexible embedding sizes (256, 512, and 1,024 dimensions) and is designed to maintain accuracy at smaller dimension sizes, which helps reduce storage costs without compromising on accuracy.

- For Vector database, choose Quick create a new vector store.

- Choose Next.

- Choose Create knowledge base.

Wait for the knowledge base to be created. This might take a couple of minutes.
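To make the storage trade-off of the embedding size concrete, the following sketch builds an `InvokeModel` request body for Titan Text Embeddings V2 (model ID `amazon.titan-embed-text-v2:0`). It also estimates raw vector storage. The knowledge base calls the embedding model for you, so you only need such a body if you call the model directly, for example with the `bedrock-runtime` client's `invoke_model`. The storage estimate assumes 32-bit float vectors and ignores index and metadata overhead, so treat it as a rough figure rather than what the vector store will actually use.

```python
import json

# Embedding sizes supported by Titan Text Embeddings V2.
SUPPORTED_DIMENSIONS = (256, 512, 1024)
BYTES_PER_FLOAT32 = 4  # assumption: vectors stored as 32-bit floats


def titan_v2_request_body(text: str, dimensions: int = 1024, normalize: bool = True) -> str:
    """Build a JSON request body for amazon.titan-embed-text-v2:0."""
    if dimensions not in SUPPORTED_DIMENSIONS:
        raise ValueError(f"dimensions must be one of {SUPPORTED_DIMENSIONS}")
    return json.dumps({"inputText": text, "dimensions": dimensions, "normalize": normalize})


def raw_vector_bytes(num_chunks: int, dimensions: int) -> int:
    """Raw storage for num_chunks vectors, ignoring index and metadata overhead."""
    return num_chunks * dimensions * BYTES_PER_FLOAT32
```

For example, for 1,000,000 chunks, `raw_vector_bytes` gives 4,096,000,000 bytes (about 4.1 GB) at 1,024 dimensions and 1,024,000,000 bytes (about 1.0 GB) at 256 dimensions, a fourfold reduction.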

Knowledge base sample use case

A knowledge base can serve multiple purposes. In the context of this post, we want to build a knowledge base on Ethereum Improvement Proposals (EIPs).

We feed the knowledge base with the EIP definitions from the EIP GitHub repository:

- Open a CloudShell terminal and enter the following commands:

- Validate that the EIP .md files have been uploaded to the S3 bucket:

- On the Amazon Bedrock console, choose Knowledge bases in the navigation pane, then choose the crypto-ai-kb knowledge base.

- Under Data source, choose the EIPs data source and choose Sync.

Wait for the Status to be Available. Given the size of the data, this should take around 5 minutes. Before you can test the knowledge base, you need to select an LLM to query it.

- Under Test knowledge base in the right pane, choose Select model.

- Choose Anthropic’s Claude 3.5 Sonnet model.

- Choose Apply.
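The upload and sync steps above can also be scripted. The following Python sketch assumes that you already have a local copy of the EIP markdown files and a bucket name like the one created earlier. The function names are hypothetical. It uploads every `.md` file with the S3 client's `upload_file`. It then does the equivalent of choosing Sync by calling `start_ingestion_job` on the `bedrock-agent` client and polling `get_ingestion_job` until the job reaches a terminal status. The clients and the sleep function are passed in, so the logic can be tried without AWS.

```python
import time
from pathlib import Path
from typing import Any, Callable, List

TERMINAL_STATUSES = ("COMPLETE", "FAILED", "STOPPED")


def upload_markdown(s3: Any, root: str, bucket: str) -> List[str]:
    """Upload every .md file under root, keeping relative paths as object keys."""
    keys = []
    for path in sorted(Path(root).rglob("*.md")):
        key = path.relative_to(root).as_posix()
        s3.upload_file(str(path), bucket, key)
        keys.append(key)
    return keys


def sync_and_wait(
    agent: Any,
    kb_id: str,
    ds_id: str,
    poll_seconds: float = 15.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Start an ingestion job (the console's Sync) and poll until it finishes."""
    job = agent.start_ingestion_job(knowledgeBaseId=kb_id, dataSourceId=ds_id)["ingestionJob"]
    while job["status"] not in TERMINAL_STATUSES:
        sleep(poll_seconds)
        job = agent.get_ingestion_job(
            knowledgeBaseId=kb_id,
            dataSourceId=ds_id,
            ingestionJobId=job["ingestionJobId"],
        )["ingestionJob"]
    return job["status"]
```

With real clients, you would pass `boto3.client("s3")` and `boto3.client("bedrock-agent")`, along with the knowledge base and data source IDs shown on the console.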

You can now use the prompt to query the knowledge base using natural language. For example, you can enter a query similar to the following:

Which EIPs are related to danksharding?

You will see a response similar to the one in the following screenshot (due to the non-deterministic nature of an LLM, the response you get may not be identical).

The answer contains pointers to the different documents that you uploaded to the knowledge base. Use the citations to look up the exact text that each part of the response is based on.
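The same queries can be sent programmatically. The following sketch uses the `bedrock-agent-runtime` client's `retrieve_and_generate` and collects the S3 URIs cited in the response. The model ARN is an assumption for Claude 3.5 Sonnet in us-east-1, so confirm the exact model ID enabled in your account. The function name and the injectable `client` parameter are hypothetical. Passing back the returned session ID is how follow-up questions can build on earlier turns.

```python
from typing import Any, List, Optional, Tuple

# Assumed model ARN for Claude 3.5 Sonnet in us-east-1; verify it on the console.
MODEL_ARN = (
    "arn:aws:bedrock:us-east-1::foundation-model/"
    "anthropic.claude-3-5-sonnet-20240620-v1:0"
)


def ask_knowledge_base(
    question: str,
    kb_id: str,
    session_id: Optional[str] = None,
    client: Optional[Any] = None,
) -> Tuple[str, List[str], str]:
    """Return (answer, cited S3 URIs, session ID) for a knowledge base query."""
    if client is None:
        import boto3  # lazy import so the citation parsing is testable without boto3

        client = boto3.client("bedrock-agent-runtime", region_name="us-east-1")
    kwargs: dict = {
        "input": {"text": question},
        "retrieveAndGenerateConfiguration": {
            "type": "KNOWLEDGE_BASE",
            "knowledgeBaseConfiguration": {"knowledgeBaseId": kb_id, "modelArn": MODEL_ARN},
        },
    }
    if session_id:
        # Reusing the session ID keeps the conversation context for follow-ups.
        kwargs["sessionId"] = session_id
    response = client.retrieve_and_generate(**kwargs)
    sources: List[str] = []
    for citation in response.get("citations", []):
        for ref in citation.get("retrievedReferences", []):
            uri = ref.get("location", {}).get("s3Location", {}).get("uri")
            if uri and uri not in sources:
                sources.append(uri)
    return response["output"]["text"], sources, response["sessionId"]
```

Because LLM output is non-deterministic, the answer text will vary between runs, but the cited URIs make it easy to check which EIP files each answer relied on.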

You can continue the conversation by submitting new queries, such as the following:

Can you summarize the content of – EIP-4844: Shard Blob Transactions - in 200 words?

You will see a response similar to the one in the following screenshot.

Clean up

You can keep the components that you built in this post, because you’ll reuse them throughout the series. Alternatively, you can follow the cleanup instructions in Part 4 to delete them.

Conclusion

In this post, we demonstrated how to create and test an Amazon Bedrock knowledge base, summarizing information contained in various EIPs. In Part 2, we implement a mechanism to govern the submission of new documents to this knowledge base.

About the Authors

Guillaume Goutaudier is a Sr Enterprise Architect at AWS. He helps companies build strategic technical partnerships with AWS. He is also passionate about blockchain technologies, and is a member of the Technical Field Community for blockchain.

Shankar Subramaniam is a Sr Enterprise Architect in the AWS Partner Organization aligned with Strategic Partnership Collaboration and Governance (SPCG) engagements. He is a member of the Technical Field Community for Artificial Intelligence and Machine Learning.
